1. Introduction

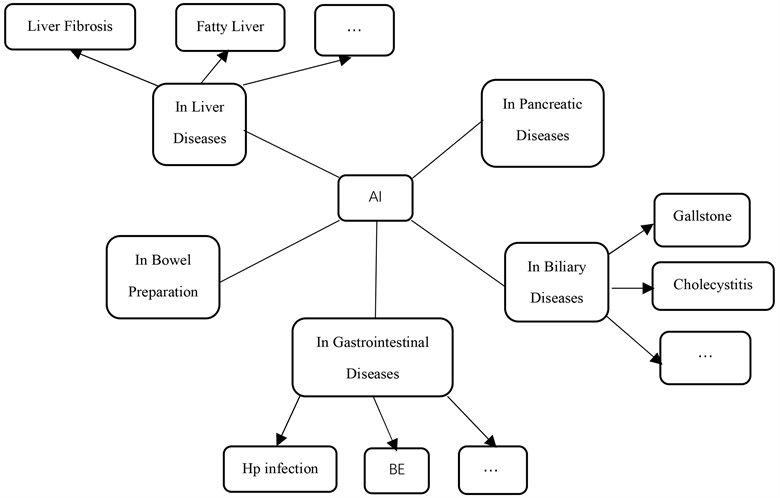

The three pillars of artificial intelligence (AI) are data, computing power, and algorithms. Data, the “feed” for AI algorithms, is ubiquitous in the era of big data. The rise of “health data science” has stimulated interdisciplinary thinking and methods and created a knowledge-sharing system spanning computer science, biostatistics, epidemiology, and clinical medicine, which has propelled the development of AI in medicine [1]. Algorithms are the driving force behind AI and play a pivotal role in clinical diagnosis, prediction, and treatment. AI algorithms fall mainly into two groups: traditional machine learning algorithms and deep learning (DL) algorithms. Traditional machine learning algorithms include linear regression, logistic regression, support vector machines (SVM), decision trees, and random forests, whereas deep learning algorithms are implemented mainly as deep neural networks (DNN), including recurrent neural networks (RNN) and convolutional neural networks (CNN). In addition, the combination of AI with 5G technology has advanced the use of robotics in surgery [2]. This article reviews models built with traditional machine learning and deep learning methods, as well as research progress on robotics, in digestive system diseases, to provide a reference for further studies (Picture 1).

2. Research Progress of AI in Bowel Preparation

With increasing age, the detection rate of polyps rises. In addition to high-risk populations, research has found that individuals aged 40 and above in the general population also require colonoscopy [3] [4]. However, as many as one quarter of screened patients have inadequate bowel preparation for colonoscopy [5]. To reduce missed diagnoses and misdiagnoses, healthcare professionals often use written and verbal education to improve the quality of patients’ bowel preparation. With the emergence of AI technology, smartphone applications have become a novel educational tool that makes bowel cleansing preparation more convenient and reliable for patients [6]. Furthermore, as Internet of Things data platforms are established and sample data accumulate, the accuracy of AI continues to improve [7].

Picture 1. Research applications in digestive system diseases.

In recent years, researchers in China and abroad have developed applications that apply big data analysis to uploaded stool images to generate a bowel preparation score; medical staff can then offer suggestions based on the score to help patients improve their bowel preparation. A review of seven studies on smartphone applications for bowel preparation before colonoscopy found that six showed improved bowel preparation quality, and five found that the applications significantly increased patient satisfaction during the periprocedural period [8]. Subsequent studies have extended this work. van der Zander et al. [9] developed a smartphone application called Prepit (Ferring B.V.), which consists of six parts: the colonoscopy date, educational tools, a bowel preparation schedule, low-fiber diet examples, visually assisted preparation instructions, and examples of clear liquid intake. Of 173 patients, 86 received oral and written education, while the remaining 87 followed the steps in the smartphone application. The application significantly improved the quality of bowel preparation, particularly in the right colon, but patient satisfaction did not differ significantly between groups. Cho et al. [10] reported that the application group had significantly higher Boston Bowel Preparation Scale (BBPS) scores and satisfaction than the control group, consistent with the conclusions of Madhav [6]. Some applications developed in China and abroad also include an intelligent automatic bowel preparation scoring function.
Wen Jing [11] and Xun Linjuan [12] reported that AI-assisted educational applications significantly improved the quality of bowel preparation across intestinal segments. Sheng Ruli et al. [13] further found that smartphone apps equipped with an AI-assisted bowel preparation scoring system effectively improved bowel preparation in overweight patients. However, Jung et al. [14] reached the opposite conclusion using the Ottawa Bowel Preparation Scale (OBPS): the mean OBPS score did not differ significantly between the non-application and application groups (2.79 ± 2.064 vs. 2.53 ± 1.264, p = 0.950), although patients using the application took a lower dose of polyethylene glycol (PEG) (3713.2 ± 405.8 vs. 3979.2 ± 102.06, p = 0.001). Several studies enrolled few patients, so their sample sizes may be too small for statistically reliable conclusions. In addition, patient age, proficiency with the app, smartphone operating system, and differences in examination equipment can all affect the accuracy of bowel preparation scores and polyp detection rates. Further research is therefore needed to develop a universally applicable, freely downloadable application.

3. Research Progress of AI in Gastrointestinal Diseases

3.1. Hp Infection

Deep learning has attracted the attention of researchers in China and abroad for the diagnosis and classification of Helicobacter pylori (Hp) infection, because imaging, biological, and clinical data can be used to train models that automatically detect and localize Hp infection.

Nakashima et al. [15] developed an AI system using two novel laser image-enhanced endoscopy (IEE) modes, blue laser imaging-bright (BLI-bright) and linked color imaging (LCI). Compared with white light imaging (WLI), BLI-bright and LCI yielded significantly higher AUC values, while diagnostic time did not differ among the three AI systems. The group also developed a computer-aided diagnosis (CAD) system, building deep learning models from endoscopic still images captured with WLI and LCI. LCI-CAD outperformed WLI-CAD by 9.2% in uninfected cases, 5.0% in currently infected cases, and 5.0% in post-eradication cases, and the diagnostic accuracy of endoscopists using LCI images was consistent with these findings [16]. Gonçalves et al. [17] in Brazil reported that CNN models can detect Hp infection and the spectrum of inflammation in histopathological biopsies of the gastric mucosa, and they released the DeepHP database. A digital pathology (DP) study found that Hp can be reliably identified on virtual slides obtained by scanning Warthin-Starry (W-S) silver-stained tissue at 20× magnification, with an F1 score of 0.829 for the AI classifier [18]. In addition, Song Xiaobin et al. [19] proposed building an Hp tongue image classification model with the AlexNet convolutional neural network, based on the relationship between Hp infection and tongue features extracted from images, such as tongue color and shape and coating color and moisture. However, this remains a proposal, and no other researchers have yet reported results.

3.2. Barrett’s Esophagus and Esophageal Cancer

Barrett’s esophagus (BE) is a precancerous condition for esophageal adenocarcinoma (EAC), with a cancer risk of up to 0.5%. The progression to EAC typically runs from normal esophageal epithelium through metaplasia (with or without intestinal metaplasia, IM), low-grade dysplasia, and high-grade dysplasia to EAC [20]. To accurately distinguish BE from early EAC, Alanna et al. [21] developed a real-time deep learning AI system. High et al. [22] showed that, for distinguishing normal tissue from BE, a dual pre-trained model trained sequentially on the ImageNet and HyperKvasir datasets outperformed a model pre-trained on ImageNet alone. Shahriar et al. [23] developed a ResNet101 model that predicted the grade of dysplasia in BE with good sensitivity and specificity: 81.3% and 100%, respectively, for low-grade dysplasia, and above 90% for both non-dysplastic BE and high-grade dysplasia. Manon et al. [24] in the Netherlands developed a classifier based on hematoxylin and eosin (H&E)-stained images and a model based on mass spectrometry imaging (MSI), both of which could predict the grade of dysplasia in BE; the H&E-based classifier performed well in distinguishing tissue types, whereas the MSI-based model more accurately distinguished dysplasia grades and progression risk. The gastroesophageal junction (GEJ) and squamocolumnar junction (SCJ) are key landmarks in diagnosing BE: a distance of ≥1 cm between them strongly suggests BE.
One study used a fully convolutional network (FCN) to automatically segment the GEJ and SCJ in endoscopic images, enabling targeted biopsy of suspicious areas; its segmentation of BE extent agreed with expert manual assessment [25]. For follow-up after BE treatment, Sharib et al. [26] used a deep learning depth estimation network to generate depth maps and reconstruct the esophagus in 3D from 2D endoscopic images. The system automatically measured the Prague C & M criteria, quantified the Barrett’s epithelium area (BEA), and assessed the extent of Barrett’s islands. In 131 BE patients, it built 3D esophageal models before and after treatment, allowing treatment outcomes to be evaluated effectively and follow-up to be improved.
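
The Prague C & M measurement and the ≥1 cm rule described above reduce to simple distances once the landmarks are located. The sketch below is only an illustration of that arithmetic, not the 3D pipeline of Sharib et al.; it assumes each landmark is given as a distance from the incisors in centimetres, as in conventional endoscopic reporting, and the class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class EsophagealLandmarks:
    """Hypothetical input: each landmark as distance from the incisors, in cm."""

    gej_cm: float                  # gastroesophageal junction (top of gastric folds)
    circumferential_top_cm: float  # proximal edge of circumferential columnar mucosa
    maximal_top_cm: float          # proximal tip of the longest tongue (most proximal SCJ point)


def prague_cm(lm: EsophagealLandmarks) -> tuple[float, float]:
    """Return (C, M) extents in cm, measured upward from the GEJ."""
    c = lm.gej_cm - lm.circumferential_top_cm
    m = lm.gej_cm - lm.maximal_top_cm
    if not 0 <= c <= m:
        raise ValueError("expected 0 <= C <= M; check the landmark distances")
    # round to 0.1 cm to suppress floating-point noise
    return round(c, 1), round(m, 1)


def suggests_barrett(lm: EsophagealLandmarks, threshold_cm: float = 1.0) -> bool:
    """Flag possible BE when the SCJ lies at least threshold_cm above the GEJ."""
    _, m = prague_cm(lm)
    return m >= threshold_cm


# GEJ at 40 cm, circumferential segment up to 38 cm, longest tongue up to 35 cm
lm = EsophagealLandmarks(gej_cm=40.0, circumferential_top_cm=38.0, maximal_top_cm=35.0)
print(prague_cm(lm))         # (2.0, 5.0), i.e. C2M5
print(suggests_barrett(lm))  # True
```

In an automated system the hard part is locating the landmarks (segmentation plus depth estimation); once they are known, the scoring itself is this simple.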

Apart from adenocarcinoma, esophageal cancer is predominantly squamous cell carcinoma, which accounts for over 90% of cases. In a study of esophageal squamous cell carcinoma (ESCC), Meng et al. [27] compared the diagnostic performance of endoscopists with different levels of experience using a CAD system in combined WLI and narrow-band imaging (NBI) mode. Without CAD, expert endoscopists had significantly higher accuracy (91.0% vs. 78.3%, p < 0.001), sensitivity (90.0% vs. 76.1%, p < 0.001), and specificity (93.0% vs. 82.5%, p = 0.002) than non-experts. With reference to the CAD system, the gaps between non-experts and experts in accuracy (88.2% vs. 93.2%, p < 0.001), sensitivity (87.6% vs. 92.3%, p = 0.013), and specificity (89.5% vs. 94.7%, p = 0.124) narrowed considerably. Zhao et al. [28] built an Inception V3 image classification system to distinguish early esophageal cancer from benign esophageal lesions; the AI-NBI system diagnosed faster than physicians, with comparable sensitivity, specificity, and accuracy.

3.3. Chronic Atrophic Gastritis and Gastric Cancer

Chronic atrophic gastritis (CAG) and gastric intestinal metaplasia (GIM) are precancerous conditions of gastric cancer [29]. Zhang et al. [30] compared a deep learning model with three experts and found that the CNN system achieved accuracies of 0.93, 0.95, and 0.99 for mild, moderate, and severe atrophic gastritis, respectively, concluding that the CNN detected moderate and severe atrophic gastritis better than mild atrophic gastritis. This is consistent with the findings of the U-Net model of Zhao et al. [31]. Wu et al. [32] used 4167 electronic gastroscopy images, covering early gastric cancer, chronic superficial gastritis, gastric ulcers, gastric polyps, and normal mucosa, to train a CNN model that performed well in distinguishing early gastric cancer from benign images; the model also accurately localized early gastric cancer and recognized it in real time in videos. Goto et al. [33] designed an AI classifier based on EfficientNet-B1 to differentiate intramucosal from submucosal cancers and tested it on images from 200 cases, comparing the AI classifier, endoscopists, and a combined AI-plus-expert approach. Accuracy was 77% vs. 72.6% vs. 78.0%, sensitivity 76% vs. 53.6% vs. 76.0%, specificity 78% vs. 91.6% vs. 80.0%, and F1 score 0.768 vs. 0.662 vs. 0.776, respectively. These results indicate that collaboration between AI and endoscopists can improve the assessment of invasion depth in early gastric cancer. In addition, Tang et al. [34] found that an NBI-based AI system for diagnosing early gastric cancer (EGC) outperformed both senior and junior endoscopists.
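
Accuracy, sensitivity, specificity, and F1 score, the metrics quoted throughout this section, all derive from a 2 × 2 confusion matrix. The sketch below uses invented counts for 200 images (100 positive, 100 negative). These counts happen to reproduce the AI classifier figures reported above, but the actual case split in the study is not given here, so treat them purely as an illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionMatrix:
    """Counts for a binary diagnostic task (positive = e.g. submucosal invasion)."""

    tp: int  # positive cases correctly called positive
    fp: int  # negative cases wrongly called positive
    tn: int  # negative cases correctly called negative
    fn: int  # positive cases missed

    @property
    def accuracy(self) -> float:
        return (self.tp + self.tn) / (self.tp + self.fp + self.tn + self.fn)

    @property
    def sensitivity(self) -> float:
        # recall / true-positive rate
        return self.tp / (self.tp + self.fn)

    @property
    def specificity(self) -> float:
        # true-negative rate
        return self.tn / (self.tn + self.fp)

    @property
    def precision(self) -> float:
        # positive predictive value
        return self.tp / (self.tp + self.fp)

    @property
    def f1(self) -> float:
        # harmonic mean of precision and sensitivity, written as 2TP / (2TP + FP + FN)
        return 2 * self.tp / (2 * self.tp + self.fp + self.fn)


# Invented counts: 100 positive and 100 negative images
cm = ConfusionMatrix(tp=76, fp=22, tn=78, fn=24)
print(f"accuracy={cm.accuracy:.2f} sensitivity={cm.sensitivity:.2f} "
      f"specificity={cm.specificity:.2f} F1={cm.f1:.3f}")
# accuracy=0.77 sensitivity=0.76 specificity=0.78 F1=0.768
```

Note that F1 ignores true negatives. A classifier with high specificity but low sensitivity, like the endoscopists above, can therefore have a lower F1 score despite similar accuracy.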

AI has been widely applied in preoperative prediction, intraoperative treatment, and postoperative rehabilitation for curative resection of early gastric cancer. Bang et al. [35] used machine learning (ML) models to predict the likelihood of curative resection of undifferentiated early gastric cancer (U-EGC) before endoscopic submucosal dissection (ESD), based on variables such as patient age, sex, endoscopic lesion size, morphology, and presence of ulceration. Of the 18 models they developed, extreme gradient boosting classifiers performed best, with an F1 score of 95.7%. In a retrospective study, Kuroda et al. [36] compared ultrasonic shears-assisted robotic gastrectomy with conventional forceps-assisted robotic gastrectomy. In the ultrasonic shears group, total console time (310 minutes [interquartile range (IQR), 253 - 369 minutes]) and gastrectomy console time (222 minutes [IQR, 177 - 266 minutes]) were significantly shorter than in the conventional forceps group (332 minutes [IQR, 294 - 429 minutes], p = 0.022; and 247 minutes [IQR, 208 - 321 minutes], p = 0.004, respectively). The ultrasonic shears group also had less blood loss (20 mL [IQR, 10 - 40 mL] vs. 30 mL [IQR, 16 - 80 mL]; p = 0.014). Shen et al. [37] studied 32 patients undergoing elective robotic gastrectomy and used an AI-based heart rate variability monitoring device to explore the role of the “diurnal light and nocturnal darkness” theory in postoperative recovery from gastric cancer surgery. They found that maintaining a “diurnal light and nocturnal darkness” state helped restore autonomic neurocircadian rhythms, alleviated postoperative inflammation, and promoted recovery of organ function.

3.4. IBD

AI has shown considerable potential in gastrointestinal endoscopy, performing well on both endoscopic images and biopsy samples, and it has achieved good results in the diagnosis, treatment, and prognostic prediction of gastrointestinal diseases [38] [39]. In diagnosis, for example, endoscopy is crucial for evaluating inflammatory bowel disease (IBD). One study used ensemble learning over fine-tuned ResNet architectures to build a “meta-model”. Compared with a single ResNet model, the combined model improved IBD detection on endoscopic images in three tasks: distinguishing pathological from healthy samples (P vs. N), ulcerative colitis from Crohn’s disease (UC vs. CD), and ulcerative colitis from healthy samples (UC vs. N) [40].
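
A “meta-model” of this kind can be illustrated with soft voting, where the class probabilities from several fine-tuned networks are averaged and the highest-scoring class wins. The cited study's exact combination rule is not described here, so averaging is an assumption. The probabilities below are hypothetical stand-ins for the softmax outputs of three ResNet variants on one UC vs. CD image.

```python
from statistics import fmean


def soft_vote(prob_sets: list[dict[str, float]]) -> tuple[str, dict[str, float]]:
    """Average per-class probabilities from several models and return the top class.

    Each dict maps class label -> predicted probability for one image from one model.
    """
    labels = prob_sets[0].keys()
    if any(p.keys() != labels for p in prob_sets):
        raise ValueError("all models must score the same classes")
    averaged = {label: fmean(p[label] for p in prob_sets) for label in labels}
    return max(averaged, key=averaged.get), averaged


# Hypothetical softmax outputs of three fine-tuned ResNet variants
outputs = [
    {"UC": 0.55, "CD": 0.45},
    {"UC": 0.40, "CD": 0.60},  # this model alone would have answered CD
    {"UC": 0.70, "CD": 0.30},
]
label, probs = soft_vote(outputs)
print(label)  # UC
print({k: round(v, 3) for k, v in probs.items()})
```

Averaging lets confident models outweigh uncertain ones, which is one reason an ensemble can beat any single member. Learned weighting or a stacked classifier are common alternatives.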

Capsule endoscopy (CE) is also an accurate clinical tool for diagnosing and monitoring Crohn’s disease (CD). One study used an EfficientNet-B5 network to identify intestinal strictures in patients with CD, reaching an accuracy of approximately 80% and effectively distinguishing strictures from ulcers of different grades [41]. Other studies have used CNN algorithms to automatically grade the severity of ulcers in CE images [42]. Moreover, Mascarenhas et al. [43] developed and tested a CNN-based model on a large number of CE images and found that deep learning can detect and differentiate small bowel lesions with moderate to high bleeding potential according to the Saurin classification.

For treatment and prognostic prediction, Charilaou et al. [39] compared conventional logistic regression (cLR), used as the reference model, with more complex ML models. They built several models predicting inpatient mortality (IM) in the overall and surgical cohorts and converted the best-performing QLattice model (a symbolic regression equation) into a web-based calculator (the IM-IBD calculator), which validated well in stratifying the risk of inpatient mortality, including in surgical sub-cohorts. Other studies have used ML methods to handle large numbers of predictors to predict complications in pediatric Crohn’s disease [44].

3.5. Colorectal Cancer (CRC)

Two AI applications in the diagnosis and staging of colorectal cancer are computer-aided detection (CADe) and computer-aided diagnosis or differentiation (CADx). CADe detects lesions, whereas CADx characterizes detected lesions by real-time optical biopsy, that is, tissue diagnosis based on how light interacts with the tissue [45]. For early identification and differential diagnosis of colorectal cancer, Kudo et al. [46] trained the EndoBRAIN system on 69,142 endoscopic images. The system analyzes cell nuclei, crypt structures, and microvessels in endoscopic images to identify colonic neoplasms. In comparative testing, EndoBRAIN achieved higher accuracy and sensitivity than both resident and expert endoscopists in stained and NBI modes. Another deep learning study showed that an AI CAD system can help inexperienced endoscopists predict the histopathology of colorectal polyps, maintaining a diagnostic accuracy above 80% regardless of polyp size, location, and morphology [47].

Combining AI with medical imaging can also help radiologists assess T stage and molecular subtype, guide adjuvant therapy, and predict prognosis in patients with colorectal cancer (CRC) more efficiently and accurately [48]. For instance, in a retrospective study, Wang et al. [49] trained and validated an AI-assisted imaging diagnosis system to identify positive circumferential resection margin (CRM) status using 12,258 high-resolution T2-weighted pelvic MRI images from 240 rectal cancer patients. The system used Faster R-CNN, a region-based convolutional neural network method, and determined CRM status with an accuracy of 0.932 and an automatic image recognition time of only 0.2 seconds. Tong et al. [50] also used Faster R-CNN, training on 28,080 MRI images to identify metastatic lymph nodes, and achieved an AUC of 0.912.

In colorectal cancer surgery, Kitaguchi et al. [51] conducted a retrospective study in which they annotated over 82 million frames for phase and action classification and 4000 frames for tool segmentation from 300 laparoscopic colorectal surgery (LCRS) videos. The resulting LapSig300 model covers nine surgical phases (P1 - P9), three actions (dissection, exposure, and other actions), and five target tools (grasper, T1; dissecting point, T2; linear cutter, T3; Maryland dissector, T4; scissors, T5). The multi-phase classification model had an overall accuracy of 81.0%, and the mean intersection over union (mIoU) for tools T1 - T5 was 51.2%. Although LapSig300 achieved high accuracy in phase, action, and tool recognition, its recognition accuracy can still be improved and the available dataset is not yet large enough, so further research is needed. Ichimasa et al. [52] developed and validated a random forest (RF) model as a simple, non-invasive tool for predicting lymph node metastasis (LNM) in T2 CRC, to guide management of T2 CRC after endoscopic full-thickness resection (EFTR); it followed the development of a new LNM prediction tool for T1 CRC. However, because EFTR is technically immature, the RF model needs further validation for predicting LNM in patients with T2 CRC (Table 1).
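
Mean intersection over union (mIoU), the tool-segmentation metric reported for LapSig300, averages the overlap between predicted and annotated masks over the target classes. The sketch below uses tiny hypothetical label maps (0 = background, 1 and 2 = two tool classes). Real pipelines compute this over full video frames and often pool intersections and unions across the whole test set rather than per image. The convention used by Kitaguchi et al. is not stated here.

```python
def mean_iou(pred: list[list[int]], truth: list[list[int]], classes: list[int]) -> float:
    """Mean IoU over the given classes for two 2D label maps with the same pixel count.

    Classes absent from both maps are skipped so they do not distort the mean.
    """
    flat_pred = [v for row in pred for v in row]
    flat_true = [v for row in truth for v in row]
    if len(flat_pred) != len(flat_true):
        raise ValueError("label maps must contain the same number of pixels")
    ious = []
    for c in classes:
        inter = sum(p == c and t == c for p, t in zip(flat_pred, flat_true))
        union = sum(p == c or t == c for p, t in zip(flat_pred, flat_true))
        if union:  # skip classes present in neither map
            ious.append(inter / union)
    return sum(ious) / len(ious) if ious else 0.0


# Hypothetical 3 x 4 annotation and prediction
truth = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [2, 2, 0, 0],
]
pred = [
    [0, 1, 1, 1],
    [0, 1, 0, 0],
    [2, 0, 0, 0],
]
# class 1: IoU = 3/5 = 0.6; class 2: IoU = 1/2 = 0.5
print(round(mean_iou(pred, truth, classes=[1, 2]), 3))  # 0.55
```

Background is deliberately left out of `classes` here, mirroring a metric reported only over the target tools.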

4. Advancements in AI Research in Biliary Diseases

4.1. Gallstone, Cholecystitis, and Cholecystocolic Fistula

In 2022, a hospital affiliated with Capital Medical University and other institutions conducted the first human clinical trial of single-arm robot-assisted cholecystectomy, and the procedure was successful. The incision was only 2.5 cm, which reduced surgical trauma and alleviated patient pain [53]. Rasa et al. [54] retrospectively studied 40 cases of single-port robotic cholecystectomy (SPRC): the median operative time was 93.5 minutes (mean 101.2 ± 27.0 minutes), and the mean hospital stay was 1.4 ± 0.6 days. Fourteen patients (35.0%) had Clavien-Dindo grade I complications, including five (12.5%) with wound problems. Tschuor et al. [55] reviewed 26 patients who underwent robot-assisted cholecystectomy, with an intraoperative blood loss of 50 mL (range: 0 - 500 mL). Only mild postoperative complications (Clavien-Dindo ≤ II within 90 days) occurred, with no major complications or deaths.

Cholecystocolic fistula (CCF) is a rare complication of biliary disease, with a preoperative imaging detection rate of less than 8%. Krzeczowski et al. [56] reported successful management of a patient with CCF using the da Vinci® Xi surgical system; the operation went smoothly, the patient was discharged on the first postoperative day, and no complications were observed during six months of follow-up. Another report described a CCF first identified during dissection in a robotic cholecystectomy and then treated robotically, but the patient’s postoperative course and prognosis were not reported. Because robotic CCF surgery cases are few and the potential advantages over laparoscopic surgery need further validation, more research is required.

4.2. Cholangiocarcinoma and Gallbladder Cancer

Wang et al. [57] divided 65 eligible patients with extrahepatic cholangiocarcinoma (ECC) into two groups: Group A (postoperative pathological stage Tis, T1, or T2) and Group B (stage T3 or T4). They delineated regions of interest (ROIs) on MRI images with MaZda software, analyzed the texture features of these regions, and entered the selected features into a binary logistic regression model using the Enter method to build a prediction model. The model showed some value in predicting extrahepatic bile duct invasion in ECC, with a sensitivity of 86.0% and specificity of 86.4% for predicting stage T3 or above, although prospective studies are still needed to validate this finding. Similarly, Yao et al. [58] used MaZda to build a radiomics model based on a support vector machine optimized by particle swarm optimization (PSO-SVM) to predict the degree of differentiation (DD) and lymph node metastasis (LNM) in patients with ECC. The mean AUC for DD was 0.8505 in the training set and 0.8461 in the test set, and the mean AUC for LNM was 0.9036 and 0.8800, respectively. Another study showed that machine learning with MRI and CT radiomic features can effectively differentiate combined hepatocellular-cholangiocarcinoma (cHCC-CC) from cholangiocarcinoma (CC) and hepatocellular carcinoma (HCC), with good predictive performance [59].
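
An AUC value like those reported for these radiomics models can be read as the probability that a randomly chosen positive case (for example, a patient with LNM) receives a higher model score than a randomly chosen negative case. The sketch below computes it with that rank-based (Mann-Whitney) definition. The scores are invented and are not outputs of the cited models.

```python
from itertools import product


def auc(pos_scores: list[float], neg_scores: list[float]) -> float:
    """AUC as P(score_pos > score_neg), counting ties as one half.

    This equals the Mann-Whitney U statistic divided by n_pos * n_neg.
    """
    if not pos_scores or not neg_scores:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p, n in product(pos_scores, neg_scores):
        if p > n:
            wins += 1.0
        elif p == n:
            wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))


# Hypothetical predicted probabilities of lymph node metastasis
with_lnm = [0.91, 0.78, 0.66, 0.52]
without_lnm = [0.70, 0.45, 0.30, 0.12, 0.52]
print(f"AUC = {auc(with_lnm, without_lnm):.3f}")  # AUC = 0.875
```

The pairwise loop is quadratic and fine for small cohorts. Library implementations sort the scores instead, which gives the same value.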

In recent years, robotic surgery has been increasingly used to treat cholangiocarcinoma in hepatobiliary surgery. Chang et al. [60] reported a series of 34 patients with hilar cholangiocarcinoma treated robotically, including 13 with Bismuth type I, 3 with type IIIa, 9 with type IIIb, and 9 with type IV disease. Yin et al. [61] performed robot-assisted radical resection in a patient with Bismuth type IIIb hilar cholangiocarcinoma. Shi et al. [62] also performed a successful robot-assisted radical resection for hilar cholangiocarcinoma with approximately 50 mL of intraoperative blood loss; the patient had no postoperative complications and was discharged six days after surgery. These reports suggest that robotic surgery can be effective in treating cholangiocarcinoma, although the evidence so far comes from case reports and case series.

Gallbladder cancer is the most common malignancy of the biliary tract with a poor prognosis. One study compared the clinical outcomes of laparoscopic extended cholecystectomy (LEC) with open extended cholecystectomy (OEC). The results showed that LEC was comparable to OEC in terms of short-term clinical outcomes. There were no statistically significant differences between the LEC and OEC groups in terms of operation time (p = 0.134), intraoperative blood loss (p = 0.467), postoperative morbidity (p = 0.227), or mortality (p = 0.289). In terms of long-term outcomes, the 3-year disease-free survival rate (43.1% vs 57.2%, p = 0.684) and overall survival rate (62.8% vs 75.0%, p = 0.619) were similar between the OEC and LEC groups [63] .

5. Research Progress on AI in Liver Diseases

5.1. Liver Fibrosis

Liver fibrosis can be diagnosed by invasive liver biopsy or by non-invasive methods such as imaging, serological diagnostic models, and transient elastography. With the development of AI in pathology and radiology, AI can extract information that is difficult to perceive or identify with the naked eye, which is valuable both for the pathological analysis of liver biopsy sections and for non-invasive tests. AI also plays a supporting role, and has research value, in prognostic assessment and surgery for patients with liver fibrosis [64] [65].

In research on ultrasound elastography for diagnosing liver fibrosis, Xie et al. [66] used convolutional neural networks to analyze and extract 11 ultrasound image features from 100 patients with liver fibrosis. They compared four classic models (AlexNet, VGGNet-16, VGGNet-19, and GoogLeNet) and found that GoogLeNet had the best recognition accuracy. By tuning parameters such as batch size, learning rate, and number of iterations, they verified the performance of the network and obtained the best recognition results. Fu et al. [67] used two traditional machine learning classifiers (a support vector machine and a sparse representation classifier) and a deep learning classifier based on the LeNet-5 neural network, trained, validated, and tested on ultrasound images from 354 patients who underwent liver resection. They performed automated classification using one binary classification (S0/S1/S2 vs. S3/S4) and two ternary classifications (S0/S1 vs. S2/S3/S4 and S0 vs. S1/S2/S3/S4). Accuracy was around 90% for binary classification and around 80% for ternary classification, and the deep learning classifier was slightly more accurate than the two traditional models.
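
Framing fibrosis staging as a binary or ternary task amounts to relabelling the fine-grained S0-S4 stages into coarser groups before training. The sketch below uses the binary split described above (S0/S1/S2 vs. S3/S4). The helper, the group names, and the sample stages are hypothetical.

```python
from collections import Counter

# Binary grouping from the text: S0/S1/S2 vs. S3/S4 (group names are illustrative)
BINARY_GROUPS = {
    "S0-S2": {"S0", "S1", "S2"},
    "S3-S4": {"S3", "S4"},
}


def regroup(stages: list[str], groups: dict[str, set[str]]) -> list[str]:
    """Map each fine-grained fibrosis stage to its coarse class label."""
    lookup: dict[str, str] = {}
    for group, members in groups.items():
        for stage in members:
            if stage in lookup:
                raise ValueError(f"{stage} is assigned to more than one group")
            lookup[stage] = group
    try:
        return [lookup[s] for s in stages]
    except KeyError as err:
        raise ValueError(f"stage {err.args[0]} is not covered by the grouping") from err


# Hypothetical per-patient pathological stages
stages = ["S0", "S2", "S4", "S3", "S1", "S4"]
labels = regroup(stages, BINARY_GROUPS)
print(labels)  # ['S0-S2', 'S0-S2', 'S3-S4', 'S3-S4', 'S0-S2', 'S3-S4']
print(Counter(labels))  # class balance after regrouping
```

The function rejects overlapping or incomplete groupings. Each patient must land in exactly one class, or the classifier would be trained on ambiguous labels.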

For CT-based diagnosis of liver fibrosis, Wu et al. [68] showed that multi-slice CT (MSCT) combined with AI algorithms offers a new approach to the clinical diagnosis of liver cirrhosis and fibrosis, although further multicenter research in large hospitals is still needed. Another study built a liver fibrosis staging network (LFS network) based on contrast-enhanced portal venous phase CT images, which achieved an accuracy of approximately 85.2% in diagnosing significant fibrosis (F2 - F4), advanced fibrosis (F3 - F4), and cirrhosis (F4) [69].

5.2. Fatty Liver

AI can effectively identify patients with non-alcoholic steatohepatitis (NASH) and advanced fibrosis and accurately assess the severity of non-alcoholic fatty liver disease (NAFLD) [70]. Okanoue et al. [71] developed NASH-Scope, a novel non-invasive system based on 11 features. After training and validation in 446 patients with and without NAFLD, NASH-Scope achieved an area under the curve (AUC) and sensitivity both exceeding 90% and accurately distinguished NAFLD from non-NAFLD cases. Zamanian et al. [72] applied a deep learning algorithm to classify B-mode ultrasound images of patients with fatty liver disease, achieving an accuracy of 98.64%. Another study suggested that applying the CNN model Inception v3 to B-mode ultrasound images significantly improves the evaluation of hepatic steatosis [73].

5.3. Hepatocellular Carcinoma

Hepatocellular carcinoma (HCC) accounts for 75% - 85% of primary liver cancers, and its risk factors vary by region; in China, chronic hepatitis B virus infection and/or aflatoxin exposure are the main contributing factors [74]. With the rapid development of AI in medical imaging, AI models based on CT and MR images have been widely applied to automatic segmentation, lesion detection, characterization, risk stratification, treatment response prediction, and automated classification of liver nodules in HCC [75]. Riccardo et al. [76] proposed an AI-based pipeline that uses convolutional neural networks to generate virtual hematoxylin and eosin-stained images and applies AI algorithms based on color and texture to automatically identify regions showing features of HCC progression, such as steatosis, fibrosis, and cirrhosis; compared with manual segmentation by histopathologists, the AI approach achieved an accuracy above 90%. Xu et al. [77] built a CT-based diagnostic model using support vector machines (SVM) that performed well in distinguishing HCC from intrahepatic cholangiocarcinoma (ICCA). Rela et al. [78] used several classifiers, including SVM, K-nearest neighbors (KNN), naïve Bayes (NB), decision tree (DT), composite, and discriminant classifiers, to classify liver CT images from 68 patients in order to differentiate HCC from liver abscess (LA). The SVM classifier outperformed the other classifiers in accuracy and specificity and lagged slightly behind the discriminant analysis classifier in sensitivity, giving the best overall performance.
Studies have shown that gadolinium ethoxybenzyl diethylenetriamine pentaacetic acid (Gd-EOB-DTPA)-enhanced MRI can be used to evaluate the degree of differentiation of HCC [79] . Because CT and MRI perform poorly in detecting HCC lesions smaller than 1.0 cm, and biopsy remains the gold standard for diagnosing HCC, Ming et al. [80] developed a diagnostic tool for classifying HCC pathological images. The authors evaluated several architectures, including ResNet-34, ResNet-50, and DenseNet, and selected ResNet-34 as the base architecture of the AI model because it performed best. The model achieved sensitivity, specificity, and accuracy all above 98% on the validation and test sets, and its performance was more stable than that of experts. Ming et al. also applied transfer learning to image classification of colorectal cancer and invasive ductal carcinoma of the breast, where the algorithm performed slightly worse than on HCC.

With the rapid development of artificial intelligence, an increasing number of clinical trials are exploring the feasibility of robot-assisted liver resection. Shogo et al. [81] found no significant differences between robot-assisted liver resection (RALR) and laparoscopic liver resection (LLR) in blood loss, transfusion rate, postoperative complication rate, mortality, or length of hospital stay. However, Linsen et al. [82] reported that robot-assisted major liver resection was associated with less blood loss than laparoscopic major liver resection (118.9 ± 99.1 vs 197.0 ± 186.3, p = 0.002). Operative time was longer in the robotic group (255.5 ± 56.3 min vs 206.8 ± 69.2 min, p < 0.001), although this difference gradually narrowed as surgeon experience increased. Individual cases have also been reported internationally. For example, Machado [83] described a successful extensive resection of hepatocellular carcinoma using the Glissonian approach in a 77-year-old man; the operation went smoothly, and the patient was discharged on postoperative day 8. Peeyush [84] reported a robot-assisted total right hepatectomy in a 70-year-old man with multifocal hepatocellular carcinoma; blood loss was limited (400 ml), although the operation was lengthy (520 min). These findings suggest that robot-assisted liver resection has the potential to enable more precise operations while reducing certain surgical risks.

6. Research Progress of AI in Pancreatic Diseases

AI-assisted diagnosis of pancreatic diseases relies mainly on radiological and pathological images, with commonly used examinations including CT, MRI, MRCP, and EUS. Several studies have used deep learning algorithms to improve diagnostic performance. For example, Jiawen et al. [85] developed the DeepCT-PDAC model, which combines contrast-enhanced CT with deep learning to predict the overall survival (OS) of patients with pancreatic ductal adenocarcinoma (PDAC) before and after surgery. Another study compared four approaches, nonlinear least squares (NLLS) fitting, GRU, CNN, and U-Net, using quantitative analysis (SSIM and nRMSE scores), qualitative analysis (comparison of the estimated parameters), and Bland-Altman analysis (agreement analysis). GRU performed best, and the authors proposed combining a GRU with an attention layer to analyze the concentration curves of dynamic contrast-enhanced magnetic resonance imaging (DCE-MRI) and to ensure stable extended parameter estimation. This MRI technique can be used for the non-invasive detection of various diseases, including pancreatic cancer [86] . Furthermore, Filipe et al. [87] developed a deep learning algorithm on endoscopic ultrasound (EUS) images of pancreatic cysts that automatically identified mucinous pancreatic cysts with an accuracy of over 98%. A DCNN system has also been developed to differentiate pancreatic cell clusters in stained histopathological images obtained by EUS-guided fine-needle aspiration (EUS-FNA), with discrimination performance comparable to that of pathologists [88] .
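The nRMSE and Bland-Altman analyses mentioned in [86] can be sketched as follows. This is an illustration under stated assumptions, not the study's code: nRMSE is normalised here by the range of the reference values (other conventions divide by the mean or the norm), the 1.96 multiplier gives the conventional 95% limits of agreement, and the parameter values and function names are hypothetical.

```python
import math
from statistics import mean, stdev


def bland_altman(method_a, method_b):
    """Return the bias (mean difference) and 95% limits of agreement."""
    diffs = [a - b for a, b in zip(method_a, method_b)]
    bias = mean(diffs)
    sd = stdev(diffs)  # sample standard deviation of the differences
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


def nrmse(estimate, reference):
    """Root-mean-square error divided by the range of the reference values
    (assumed normalisation; other definitions exist)."""
    rmse = math.sqrt(mean((e - r) ** 2 for e, r in zip(estimate, reference)))
    return rmse / (max(reference) - min(reference))


# Hypothetical parameter estimates from two methods (not study data).
reference = [0.10, 0.20, 0.30, 0.40, 0.50]  # e.g. a reference fit
estimate = [0.12, 0.18, 0.33, 0.39, 0.52]   # e.g. a network's output

bias, lower, upper = bland_altman(estimate, reference)
print(f"bias={bias:.4f}, limits of agreement=({lower:.4f}, {upper:.4f})")
print(f"nRMSE={nrmse(estimate, reference):.4f}")
```

The two measures answer different questions: nRMSE summarises overall error in a single number, while the Bland-Altman bias and limits show whether one method systematically over- or under-estimates the other and how widely individual differences spread.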

7. Conclusions

Artificial intelligence has made significant progress in its applications to digestive system diseases. Researchers have compared and analyzed various algorithms and network architectures for different diseases to identify the best-performing models. Building on these results, techniques such as the MCA attention mechanism, feature selection, gradient descent, and ensemble models may further improve diagnostic performance. As data platforms are established, model accuracy is also expected to improve gradually. Nevertheless, further work is needed before AI in medicine achieves both high accuracy and broad applicability.

Regarding the studies reviewed here, the sources and quality of the data used to build AI models are uneven, which may affect model accuracy; standardization and normalization are lacking, and universally applicable, highly accurate models are not yet available. In the future, AI may not only help patients self-manage one or more diseases and monitor them in a standardized, rational way, but also support the prediction and treatment of digestive system diseases at the genetic level.

Support

This article received no funding.

NOTES

*Corresponding author.