A Comparative Study of Machine Learning Algorithms and Their Ensembles for Botnet Detection

1. Introduction

A botnet is a network of compromised devices, called bots, that executes malicious tasks under the control of an attacker, the botmaster. Botnets have long been a threat to cybersecurity [1] [2]. According to Ref. [3], the primary goals of botnets are as follows:

・ Information dispersion: sending spam, executing Denial of Service (DoS) attacks, and distributing false information from illegal sources.

・ Information harvesting: obtaining identities, passwords, and financial data.

・ Information processing: processing data to crack passwords and gain access to additional hosts.

Botnets have grown into a menace since the first botnet, EggDrop, was reported in 1993 [1]. For example, according to [4], the Necurs botnet was one of the most active distributors of malware in 2016. On November 24, 2016 alone, Necurs sent out more than 2.3 million spam emails carrying JavaScript downloader, VBS, and WSF attachments.

According to the same report [4], the Mirai botnet, which mainly infects IoT devices such as home routers and IP cameras, drove the largest DDoS attack recorded as of 2016, against the French hosting company OVH, peaking at 1 Tbps. Since Gartner predicted that there would be more than 20 billion IoT devices by 2020 [5], it is important to address botnets like Mirai.

The botnet threat is also growing rapidly. According to a report from Spamhaus [6], the number of IP addresses identified as Command and Control (C&C) servers hosted by Amazon increased sixfold from 2016 to 2017.

Botnets usually have distinguishable architectural features [7]. A botmaster usually sets up a C&C server to communicate easily with the bots. In this type of centralized architecture, the IRC protocol has been the most popular, but HTTP and POP3 have been used as well. Centralized botnets such as Rxbot, Festi, and Bobax, which use IRC, HTTP, or TCP, can be depicted as in Figure 1(a). Alternatively, a botnet can adopt a peer-to-peer (P2P) architecture. In a P2P botnet, a bot can relay its communication through other bots when firewalls block some of the connections. Decentralized botnets such as TDL-4, which use a P2P protocol, can be depicted as in Figure 1(b).
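To make the architectural difference concrete, here is a minimal sketch, not taken from the study, that models a botnet as an undirected graph and uses breadth-first search to find which bots can still be reached from the botmaster after a firewall blocks a link. The topologies, node names, and the `reachable` helper are hypothetical illustrations.

```python
from collections import deque

def reachable(edges, blocked, start):
    """Return the set of nodes reachable from `start` over links that are not blocked."""
    graph = {}
    for a, b in edges:
        if (a, b) in blocked or (b, a) in blocked:
            continue  # a firewall drops this link
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    seen, queue = {start}, deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen

# Centralized (hypothetical): every bot talks only to the botmaster's C&C node (a star).
star = [("master", "bot1"), ("master", "bot2"), ("master", "bot3")]
# P2P (hypothetical): bots also keep links to peers, so messages can be relayed.
p2p = star + [("bot1", "bot2"), ("bot2", "bot3")]

blocked = {("master", "bot3")}  # a firewall cuts bot3's direct link
print("bot3" in reachable(star, blocked, "master"))  # False: bot3 is isolated
print("bot3" in reachable(p2p, blocked, "master"))   # True: relayed through bot2
```

The star topology loses a bot as soon as its single link is cut, while the P2P mesh routes around the blocked link, which is the resilience property described above.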

To detect botnets, researchers have proposed approaches that focus on anomalies in the network behavior of bots and botnets, with or without considering their temporal behavior. Most previous studies adopted machine learning techniques along with heuristic rules [1] [2]. Although the previously proposed detection systems used both supervised and unsupervised machine learning algorithms, ensemble methods have not yet been discussed.

Focusing on ensemble methods, this paper evaluates the supervised machine learning algorithms popularly used in previous studies together with their ensembles. Because ensemble methods were designed to strengthen weak classifiers, it is worth determining whether they are indeed beneficial for botnet detection.

The following section explains the popular classification algorithms that have been used in several botnet detection proposals, as well as ensemble methods.

2. Related Works

2.1. Machine Learning for Botnet Detection

In previous studies that used supervised machine learning algorithms, three algorithms were popularly adopted: Naive Bayes, decision tree, and (artificial) neural networks [8] [9] [10] [11].

Figure 1. Examples of botnet architecture. (a) Centralized C&C architecture; (b) Decentralized P2P C&C architecture.

Figure 2. Amount of data on each botnet scenario [17].

2.1.1. Naive Bayes

The Naive Bayes algorithm is a simple and intuitive classification technique based on Bayes' theorem that assumes each feature contributes independently to the probability of a class [12]. Given a record, a Naive Bayes classifier calculates the probability of every class of the target feature and selects the class with the highest probability. Gaussian Naive Bayes (GNB) further assumes that, within each class, the values of each feature follow a Gaussian distribution. Although these assumptions rarely hold in real life, Naive Bayes can perform relatively well compared with other models such as logistic regression. It can also build models very quickly with very little computational overhead. It is therefore a popular choice for spam filters and other real-time anomaly detection algorithms [13].
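The GNB decision rule can be sketched in plain Python. This is a minimal illustration, not the implementation used in the study (Scikit-learn provides this classifier as `GaussianNB`): it estimates a class prior plus a per-feature mean and variance for each class, then picks the class with the highest log-posterior. The function names, the variance floor `eps`, and the toy flows are assumptions for illustration.

```python
import math
from collections import defaultdict

def fit_gnb(X, y, eps=1e-9):
    """Estimate class priors and per-feature Gaussian mean/variance for each class."""
    rows_by_class = defaultdict(list)
    for row, label in zip(X, y):
        rows_by_class[label].append(row)
    model = {}
    for label, rows in rows_by_class.items():
        n = len(rows)
        means = [sum(col) / n for col in zip(*rows)]
        # eps keeps the variance strictly positive (an assumed safeguard)
        variances = [sum((v - m) ** 2 for v in col) / n + eps
                     for col, m in zip(zip(*rows), means)]
        model[label] = (n / len(X), means, variances)
    return model

def predict_gnb(model, row):
    """Return the class maximizing log prior + sum of per-feature Gaussian log-likelihoods."""
    def log_posterior(label):
        prior, means, variances = model[label]
        score = math.log(prior)
        for x, m, var in zip(row, means, variances):
            # Features are treated as independent given the class (the "naive" assumption)
            score += -0.5 * math.log(2 * math.pi * var) - (x - m) ** 2 / (2 * var)
        return score
    return max(model, key=log_posterior)

# Toy flows: [duration, packets]; 1 = botnet, 0 = normal (hypothetical values)
X = [[0.1, 2], [0.2, 3], [0.15, 2], [5.0, 40], [6.0, 55], [5.5, 48]]
y = [1, 1, 1, 0, 0, 0]
model = fit_gnb(X, y)
print(predict_gnb(model, [0.12, 2]))  # 1
print(predict_gnb(model, [5.8, 50]))  # 0
```

Training is a single pass that computes means and variances, which is why GNB builds models so cheaply.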

2.1.2. Artificial Neural Networks

Neural networks, loosely analogous to the human brain, are large networks of simple interconnected units called neurons. A neural network consists of three kinds of layers: an input layer, one or more hidden layers, and an output layer. The features of each record are passed to the input layer and propagated through the weighted connections of the hidden neurons, and the class with the highest value at the output layer is taken as the decision. In a feed-forward neural network, where the output of one layer is used as the input to the next, training iterates over the same data, compares the output with the true value, and uses the resulting error term to adjust the weights of the hidden neurons. Recurrent neural networks, in contrast, add feedback loops between neurons that resemble those of the human brain [14].
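As a minimal sketch of the forward pass and the error-driven weight update described above, the following trains a toy feed-forward network on a single record. The one-hidden-layer shape, sigmoid units, squared-error loss, initial weights, and learning rate are all arbitrary assumptions, not the architecture used in the study.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(params, x):
    """Propagate features from the input layer through one hidden layer to the output."""
    W1, b1, W2, b2 = params
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    out = sigmoid(sum(w * hi for w, hi in zip(W2, h)) + b2)
    return h, out

def train_step(params, x, target, lr=0.5):
    """One backpropagation step on the squared error 0.5 * (out - target) ** 2."""
    W1, b1, W2, b2 = params
    h, out = forward(params, x)
    # Error term at the output unit (loss derivative through the sigmoid)
    delta_out = (out - target) * out * (1 - out)
    # Error terms propagated back to the hidden units, using the pre-update W2
    delta_h = [delta_out * w * hi * (1 - hi) for w, hi in zip(W2, h)]
    W2 = [w - lr * delta_out * hi for w, hi in zip(W2, h)]
    b2 = b2 - lr * delta_out
    W1 = [[w - lr * d * xi for w, xi in zip(row, x)] for row, d in zip(W1, delta_h)]
    b1 = [b - lr * d for b, d in zip(b1, delta_h)]
    return (W1, b1, W2, b2)

params = ([[0.1, -0.2], [0.3, 0.4]], [0.0, 0.0], [0.5, -0.5], 0.0)
x, target = [1.0, 0.5], 1.0
before = 0.5 * (forward(params, x)[1] - target) ** 2
for _ in range(50):  # iterate over the same data, as described above
    params = train_step(params, x, target)
after = 0.5 * (forward(params, x)[1] - target) ** 2
print(after < before)  # True: the updated weights reduce the error
```

Each pass repeats the forward computation, so training cost grows with the number of iterations and weights, which helps explain why NN training is slower than the other classifiers.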

2.1.3. Decision Tree

Decision tree, another popular classification method, generates a tree-like model of decisions based on decision rules inferred from the data. The goal is to create a model that predicts the value of a target variable from several input variables. In a classification tree, the dependent (target) variable is categorical. Unlike many other machine learning algorithms, a decision tree is easy to interpret because the tree can be visualized.
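A decision tree infers its rules by repeatedly choosing the split that best separates the classes. The sketch below is an illustration that assumes the Gini impurity criterion (the default of Scikit-learn's `DecisionTreeClassifier`) and numeric features; it finds the best single "feature <= threshold" rule, and a full tree would apply the same search recursively to each side. The toy flows are hypothetical.

```python
def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))

def best_split(X, y):
    """Return (feature index, threshold, weighted impurity) of the best 'x[f] <= t' rule."""
    best = None
    n = len(y)
    for f in range(len(X[0])):
        for t in sorted({row[f] for row in X}):
            left = [lab for row, lab in zip(X, y) if row[f] <= t]
            right = [lab for row, lab in zip(X, y) if row[f] > t]
            if not left or not right:
                continue  # a useful split must send records to both sides
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if best is None or score < best[2]:
                best = (f, t, score)
    return best

# Toy flows: [duration, destination port]; 1 = botnet, 0 = normal (hypothetical)
X = [[0.1, 6667], [0.2, 6667], [3.0, 443], [4.0, 80]]
y = [1, 1, 0, 0]
print(best_split(X, y))  # (0, 0.2, 0.0): "duration <= 0.2" separates the classes
```

The learned rule reads directly as "if duration <= 0.2 then botnet", which is the interpretability advantage mentioned above.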

2.2. Ensemble Methods

Ensemble methods combine a set of classifiers into an ensemble by aggregating the prediction of each classifier, with or without weights. They are regarded as one way to improve accuracy. There are typically three types of ensemble methods, introduced below [15].

・ Voting: the simplest way to form an ensemble. A voting classifier consists of multiple models of diverse types. In the training step, each model is trained separately on the whole training data; in the recognition step, the ensemble averages the posterior probabilities calculated by each model.

・ Bagging: also called bootstrap aggregation, bagging manipulates the training data to generate multiple models. Instead of training a model on the whole training data, bagging randomly samples a training set, with replacement, from the total training data for each sub-model.

・ Boosting: like bagging, boosting samples the training data, but it also maintains a set of weights on the data. In AdaBoost, for example, the weighted error of each model is used to update the weights on the training data, giving more weight to examples that were predicted poorly and less weight to those that were predicted well.
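The core step of each of the three methods fits in a few lines. The following is a hedged sketch, not Scikit-learn's implementation (Scikit-learn exposes these methods as `VotingClassifier`, `BaggingClassifier`, and `AdaBoostClassifier`): soft voting averages posterior probabilities, bagging draws a bootstrap sample with replacement, and one round of discrete two-class AdaBoost (labels in {-1, +1}) reweights the training examples. The function names are hypothetical.

```python
import math
import random

def soft_vote(prob_lists):
    """Voting: average the posterior probabilities of several models, then pick the top class."""
    n_classes = len(prob_lists[0])
    avg = [sum(p[c] for p in prob_lists) / len(prob_lists) for c in range(n_classes)]
    return max(range(n_classes), key=lambda c: avg[c]), avg

def bootstrap_sample(rows, rng):
    """Bagging: draw len(rows) records with replacement for one sub-model."""
    return [rng.choice(rows) for _ in rows]

def adaboost_reweight(weights, y_true, y_pred):
    """Boosting: one round of discrete AdaBoost with labels in {-1, +1}.

    Misclassified examples gain weight; correctly classified ones lose weight.
    Assumes the weighted error is strictly between 0 and 1.
    """
    err = sum(w for w, t, p in zip(weights, y_true, y_pred) if t != p) / sum(weights)
    alpha = 0.5 * math.log((1 - err) / err)  # vote weight of this round's model
    new = [w * math.exp(-alpha * t * p) for w, t, p in zip(weights, y_true, y_pred)]
    total = sum(new)
    return [w / total for w in new], alpha

label, avg = soft_vote([[0.9, 0.1], [0.4, 0.6], [0.7, 0.3]])
print(label)  # 0 (average posterior for class 0 is about 0.667)
sample = bootstrap_sample(list(range(10)), random.Random(0))
print(len(sample))  # 10 (drawn with replacement, so duplicates are possible)
weights, alpha = adaboost_reweight([0.25] * 4, [1, 1, -1, -1], [1, 1, -1, 1])
print([round(w, 3) for w in weights])  # [0.167, 0.167, 0.167, 0.5]
```

After one round, the single misclassified example carries half of the total weight, so the next model concentrates on it.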

Building on the concept of bagging, random forest is an ensemble of multiple decision trees. It generates each decision tree from randomly selected features (and data rows), and for unseen data it selects the class predicted by the majority of the trees. Random forest is known as a way of avoiding the overfitting that can occur in a single decision tree [16].
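Combining the two ideas, a random forest can be sketched as bagging over trees that each see only a random subset of the features, followed by a majority vote. To stay short, this illustration is a toy, not Scikit-learn's `RandomForestClassifier`: it assumes one-level trees (decision stumps) and one random feature per tree, whereas real forests grow deeper trees and sample features at every split. The toy flows are hypothetical.

```python
import random
from collections import Counter

def majority(labels):
    """Most common label (ties go to the label seen first)."""
    return Counter(labels).most_common(1)[0][0]

def predict_stump(rule, row):
    feature, threshold, left_label, right_label = rule
    return left_label if row[feature] <= threshold else right_label

def fit_stump(X, y, feature):
    """One-split tree on `feature`: try each threshold, keep the one with the fewest errors."""
    best = None
    for t in sorted({row[feature] for row in X}):
        left = [lab for row, lab in zip(X, y) if row[feature] <= t]
        right = [lab for row, lab in zip(X, y) if row[feature] > t] or left
        rule = (feature, t, majority(left), majority(right))
        errors = sum(predict_stump(rule, row) != lab for row, lab in zip(X, y))
        if best is None or errors < best[0]:
            best = (errors, rule)
    return best[1]

def fit_forest(X, y, n_trees=15, seed=0):
    """Each tree gets a bootstrap sample of rows and one randomly chosen feature."""
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(X)) for _ in X]  # rows drawn with replacement
        feature = rng.randrange(len(X[0]))        # random feature subset (size 1 here)
        forest.append(fit_stump([X[i] for i in idx], [y[i] for i in idx], feature))
    return forest

def predict_forest(forest, row):
    """The class predicted by the majority of trees wins."""
    return majority([predict_stump(rule, row) for rule in forest])

# Toy flows: [duration, packets]; 1 = botnet, 0 = normal (hypothetical values)
X = [[0.1, 1], [0.2, 2], [0.3, 1], [5.0, 40], [6.0, 50], [5.5, 45]]
y = [1, 1, 1, 0, 0, 0]
forest = fit_forest(X, y)
print(predict_forest(forest, [0.05, 1]), predict_forest(forest, [7.0, 60]))  # 1 0
```

Because each tree sees different rows and features, individual trees are less likely to overfit the same quirks of the data, and the vote smooths out their individual errors.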

Although ensemble methods are an effective way of reducing the variance of a prediction model, they clearly require more computation: a poor model can improve its accuracy at the cost of extra computation. For this reason, ensemble methods are often paired with a fast classifier such as the decision tree, as in random forest.
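The variance argument can be made precise with a standard identity, stated here as background rather than derived in this study. Assume $M$ ensemble members $f_1, \dots, f_M$ whose predictions each have variance $\sigma^2$ and pairwise correlation $\rho$. The variance of their average is

$$\operatorname{Var}\left(\frac{1}{M}\sum_{m=1}^{M} f_m(x)\right) = \frac{1}{M^2}\left(M\sigma^2 + M(M-1)\rho\sigma^2\right) = \rho\,\sigma^2 + \frac{1-\rho}{M}\,\sigma^2 .$$

Averaging can therefore remove at most the $\frac{1-\rho}{M}\sigma^2$ part of the variance, and only by training $M$ models instead of one. This is why decorrelating the members, as random forest does with random feature subsets, matters, and why a cheap base learner keeps the extra cost acceptable.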

3. Methodology

3.1. Dataset

Finding an appropriate network traffic dataset for machine learning is often challenging. For supervised machine learning, the data must be properly labeled, which makes the task onerous unless the target feature is already in the dataset. To address this problem, Sebastian Garcia et al. created the CTU-13 dataset, whose traffic is labeled as botnet, normal, or background [17]. Although several botnet datasets were already available for download, such as [18], [19], [20], and [21], they were either not representative of real-world traffic, not labeled, or not suitable for all of the detection algorithms that the authors wanted to compare [17]. For these reasons, the CTU-13 dataset was generated with several fundamental design goals, including capturing real attacks from several types of botnets, aggregating the packet data into NetFlow flows to address privacy concerns, and labeling the data. Further details and characteristics are shown in Figure 2 and Figure 3. Of the 13 different captures, called scenarios, this comparative study used scenarios 4, 10, and 11, which feature 1, 10, and 3 Rbot instances, respectively.

3.2. Data Features

When creating the CTU-13 dataset, its researchers preprocessed the captures by converting pcap files into NetFlow files. At that stage, they configured the data with the following features: start time, end time, duration, protocol, source IP address, source port, direction, destination IP address, destination port, flags, type of service, number of packets, number of bytes, number of flows, and label.
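Because the classifiers are trained on labeled NetFlow records, each label has to be mapped to a target class. The sketch below makes several hypothetical assumptions: records are dictionaries keyed by some of the feature names listed above, the label strings are made-up examples in a CTU-13-like style, and a flow counts as botnet traffic if its label mentions `Botnet`. How the study itself treated background flows is not specified here, so the binary mapping (botnet vs. everything else) is only an illustration. The same mapping yields the botnet traffic ratio that distinguishes the scenarios.

```python
def to_binary_label(label):
    """Map a label string to 1 (botnet) or 0 (normal or background).

    Assumption: botnet flows are exactly those whose label mentions "Botnet".
    """
    return 1 if "Botnet" in label else 0

def botnet_ratio(records):
    """Fraction of flows labeled as botnet traffic."""
    labels = [to_binary_label(r["label"]) for r in records]
    return sum(labels) / len(labels)

# Hypothetical records using a subset of the NetFlow features listed above
records = [
    {"duration": 0.02, "protocol": "tcp", "destination port": "6667", "label": "flow=From-Botnet-V42"},
    {"duration": 1.30, "protocol": "udp", "destination port": "53", "label": "flow=Background-UDP"},
    {"duration": 0.50, "protocol": "tcp", "destination port": "443", "label": "flow=From-Normal-V42"},
    {"duration": 2.10, "protocol": "tcp", "destination port": "80", "label": "flow=Background"},
]
print(botnet_ratio(records))  # 0.25
```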

3.3. Metrics

The most common way to measure the accuracy of a classifier is to examine its confusion matrix. Two important measures derived from it are precision, the percentage of correctly predicted events among all predicted events, and recall, the percentage of correctly predicted events among all actual events.
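The confusion-matrix counts and the two ratios can be computed directly from true and predicted labels. A minimal sketch, assuming binary labels where 1 marks botnet traffic (the positive class); returning 0.0 for an empty denominator is an assumed convention. Scikit-learn's `confusion_matrix`, `precision_score`, and `recall_score` in `sklearn.metrics` compute the same quantities.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (TP, FP, FN, TN) for a binary problem."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    return tp, fp, fn, tn

def precision(tp, fp):
    """Share of predicted botnet flows that really are botnet flows (0.0 if none predicted)."""
    return tp / (tp + fp) if tp + fp else 0.0

def recall(tp, fn):
    """Share of actual botnet flows that were detected (0.0 if there are none)."""
    return tp / (tp + fn) if tp + fn else 0.0

y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0]
tp, fp, fn, tn = confusion_counts(y_true, y_pred)
print(tp, fp, fn, tn)                     # 2 1 1 4
print(precision(tp, fp), recall(tp, fn))  # both 2/3
```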

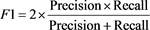

3.3.1. F1 Score

Because it takes both precision and recall into account, the F1 score gives a more balanced view than precision or recall alone. The F1 score ranges from 0 to 1, where 1 is the best possible value.

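$$F_1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} = \frac{2\,TP}{2\,TP + FP + FN} \tag{1}$$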
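A short sketch of the F1 score computed from confusion-matrix counts (true positives TP, false positives FP, false negatives FN), which is algebraically the harmonic mean of precision and recall; returning 0.0 when there is nothing to score is an assumed convention.

```python
def f1_from_counts(tp, fp, fn):
    """F1 = 2TP / (2TP + FP + FN); true negatives never enter the formula."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0

# 2 true positives, 1 false positive, 1 false negative:
# precision = recall = 2/3, so their harmonic mean is also 2/3
print(f1_from_counts(2, 1, 1))
```

Since TN does not appear anywhere, adding any number of correctly rejected normal flows leaves F1 unchanged.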


Figure 3. Distribution of labels in the NetFlows for each scenario in the dataset [17] .

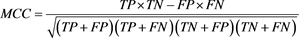

3.3.2. Matthews Correlation Coefficient (MCC)

Unlike the metrics above, MCC, also known as the phi coefficient, is considered less biased because it incorporates true negatives as well. According to [22], MCC is more robust than the F1 score or accuracy on imbalanced data, where classification methods tend to be biased toward the majority class. MCC ranges from −1 to +1, where +1 indicates a perfect prediction, 0 a prediction no better than random, and −1 a completely inverse prediction.

$$MCC = \frac{TP \cdot TN - FP \cdot FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}} \tag{2}$$
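A minimal sketch of MCC from confusion-matrix counts, with an imbalanced toy case that shows how F1 and MCC can disagree. In the toy case, 95 of 100 flows are positive, and the model misses 5 positives and gets every one of the 5 negatives wrong. Returning 0.0 when the denominator is zero follows a common convention and is an assumption here; Scikit-learn provides this metric as `sklearn.metrics.matthews_corrcoef`.

```python
import math

def mcc(tp, fp, fn, tn):
    """Matthews correlation coefficient from confusion-matrix counts."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return (tp * tn - fp * fn) / denom if denom else 0.0

def f1_from_counts(tp, fp, fn):
    """F1 from counts; it ignores true negatives."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0

tp, fp, fn, tn = 90, 5, 5, 0
print(round(f1_from_counts(tp, fp, fn), 3))  # 0.947: looks excellent
print(round(mcc(tp, fp, fn, tn), 3))         # -0.053: no better than random
```

F1 stays high because the positive majority dominates it, while MCC exposes that the model never identifies a negative correctly.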

To evaluate the classification algorithms and ensemble methods on the CTU-13 dataset, Scikit-learn was used on a single core of an Intel Xeon E5 with 64 GB of memory. Because some of the features were categorical, which Scikit-learn estimators cannot handle properly, the data were prepared by encoding and standardization.
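The preparation step can be sketched as one-hot encoding categorical fields (such as protocol) and standardizing numeric fields to zero mean and unit variance. This plain-Python illustration uses hypothetical columns, and the paper does not state which encoder was used; in Scikit-learn, the corresponding building blocks would be `OneHotEncoder` and `StandardScaler` from `sklearn.preprocessing`.

```python
import math

def one_hot(values):
    """Encode a categorical column as 0/1 indicator columns, one per category."""
    categories = sorted(set(values))
    return [[1 if v == c else 0 for c in categories] for v in values], categories

def standardize(values):
    """Rescale a numeric column to zero mean and unit (population) variance."""
    mean = sum(values) / len(values)
    # Fall back to 1.0 for a constant column to avoid dividing by zero
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values)) or 1.0
    return [(v - mean) / std for v in values]

protocol = ["tcp", "udp", "tcp", "icmp"]  # hypothetical categorical feature
packets = [2.0, 4.0, 6.0, 8.0]            # hypothetical numeric feature
encoded, cats = one_hot(protocol)
scaled = standardize(packets)
rows = [e + [s] for e, s in zip(encoded, scaled)]
print(cats)     # ['icmp', 'tcp', 'udp']
print(rows[0])  # [0, 1, 0, -1.34...]
```

One-hot encoding avoids imposing a false numeric order on protocols, and standardization keeps large-valued features such as byte counts from dominating distance- or gradient-based models like neural networks.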

4. Results and Discussion

Table 1 and Table 2 present the evaluation results. Figure 4 compares the classifiers on each scenario in terms of training time and MCC score.

For every dataset and algorithm, the F1 scores are higher than the MCC scores. This is because the F1 score does not consider true negatives. For this reason, MCC is preferred for binary classification. In the following discussion, accuracy refers to the MCC score, and S denotes a scenario of the dataset.

Among the individual algorithms, NN and DT show decent accuracies for S10 and S11, above 0.91 and 0.98, respectively. However, NN takes much longer to train, about 3 to 4 times longer in S4 and S10. For S4, the accuracy drops dramatically. The only structural difference among the three datasets is the ratio of botnet traffic. Even though S4 is the largest dataset, it has only one Rbot and a botnet traffic ratio of 0.15%, which means the data are highly imbalanced (skewed). By contrast, botnet traffic accounts for 8.11% of S10 and 7.6% of S11.

The voting results show the same pattern, because voting averages the outcomes of the individual models. Boosting does not significantly help either GNB or DT. Boosting is designed to turn weak models, whose predictions are only slightly better than random, into a strong one. Accordingly, it cannot make DT much stronger, because DT already had good accuracy. The more interesting result comes from boosted GNB. For S4, its MCC scores are near zero, which means its predictions are no better than random. It also scores only around 0.16 for S10 and S11, the opposite of the results of GNB alone. Ting and Zheng [23] observed a similar drop on a specific dataset, Tic-Tac-Toe. They explained that because Naive Bayes is very stable and carries a strong bias, the sub-classifiers produced during boosting may not be diverse enough [23]. Finding the exact reason for the drop is left to future work.

Figure 4. Time and MCC evaluation against each dataset.

Table 1. Time consumed for model training (sec).

Bagging each algorithm gives results very similar to those of the corresponding single classifier on each dataset. When a bagging model is trained, several sub-datasets are sampled from the original dataset, each trains its own classifier, and the predictions of those classifiers are then combined by voting. This dataset, however, may not benefit from sampling because it is too imbalanced.
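The imbalance argument can be checked with a quick simulation. A bootstrap sample drawn with replacement keeps roughly the same minority ratio as the data it comes from, so every bagged sub-model still trains on a highly skewed set. The sketch uses a synthetic label list sized to mimic the 0.15% botnet ratio reported for S4; it illustrates the reasoning and is not a reproduction of the experiment.

```python
import random

def bootstrap_ratio(labels, seed=0):
    """Fraction of positives (1s) in one bootstrap sample of `labels`."""
    rng = random.Random(seed)
    sample = [labels[rng.randrange(len(labels))] for _ in labels]
    return sum(sample) / len(sample)

labels = [1] * 150 + [0] * 99_850  # synthetic: 0.15% botnet flows
for seed in range(3):
    # Each sub-model's training set is still only about 0.15% botnet traffic
    print(round(bootstrap_ratio(labels, seed), 4))
```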

Although the voting, bagging, and boosting ensembles offered by Scikit-learn do not significantly benefit the individual algorithms, random forest appears highly effective in terms of both accuracy and training time. As a combination of decision trees, it performs implicit feature selection that takes feature importance into account. Because it builds multiple sub-decision trees, each from a subset of the features and data rows, it can run much faster than the other methods and can easily be parallelized. Given that parallelization is difficult to implement for boosting and for large neural networks, random forest appears to be an excellent choice.
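The parallelization point rests on independence. Each tree in a random forest, like each bagged model, depends only on its own random sample, whereas each boosting round needs the example weights produced by the previous round. The sketch below illustrates the former with the standard-library `concurrent.futures` and a hypothetical `train_member` function that stands in for fitting one tree. Giving each member its own seed makes the parallel result identical to the sequential one. Scikit-learn's `RandomForestClassifier` exposes parallel training through its `n_jobs` parameter; the study itself ran on a single core.

```python
import random
from concurrent.futures import ThreadPoolExecutor

DATA = list(range(20))  # stand-in for the training rows

def train_member(seed):
    """Hypothetical stand-in for fitting one tree: it uses only its own bootstrap sample."""
    rng = random.Random(seed)  # private RNG, so members share no mutable state
    sample = [rng.choice(DATA) for _ in DATA]
    return sum(sample) / len(sample)  # a toy "model" summarizing its sample

seeds = range(8)
sequential = [train_member(s) for s in seeds]
with ThreadPoolExecutor(max_workers=4) as pool:
    parallel = list(pool.map(train_member, seeds))
print(sequential == parallel)  # True: execution order does not affect the members
```

Threads keep the example simple. CPU-bound training in CPython would typically use processes instead to get a real speedup.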

Compared with the previous research [17], which measured the F1 scores of several botnet detection systems based on rule-based approaches and clustering methods, the F1 scores obtained in this study are far higher for all scenarios except S11. According to [16], that research used the whole dataset and separated it into training and test data in a way that allowed the methods to generalize, detect new behaviors, and avoid bias. Overall, in this evaluation the three machine learning algorithms and their ensembles offered better detection accuracy than the previous research.

5. Conclusion

In this study, three popular machine learning algorithms, Gaussian Naive Bayes, neural networks, and decision tree, were tested. Furthermore, the ensemble methods voting, AdaBoost, and bagging were compared to determine whether ensemble methods are significantly beneficial for botnet detection. Random forest, a refined ensemble of decision trees, was also tested. To detect botnet traffic among all network traffic, decision tree without any ensemble method, or random forest, would be the most reliable approach. Both run much faster than NN alone and achieve better accuracy. Even though GNB runs the fastest, its accuracy varies with the dataset. Contrary to common expectation, applying ensemble methods to machine learning algorithms for botnet detection in the hope of enhancing accuracy is not preferable, because they do not give remarkably more accurate results while consuming much more time. The question raised by this evaluation is why the accuracy drops when boosting is applied to GNB. Although [23] offers an explanation, why boosting produces poorer results rather than leaving them unchanged could be studied further.

Acknowledgements

This work was supported in part by Intel Grant #301620.