1. Introduction

There are so many real life applications for the convex quadratic programming (QP) problem. The applications include portfolio analysis, structural analysis, discrete-time stabilisation, optimal control, economic dispatch and finite impulse design; see [1] -[3] . Some of the methods for solving the convex quadratic problem are active set, interior point, branch and bound, gradient projection, and Lagrangian methods, see [4] -[9] for more information on these methods.

In this paper we present a new heuristic to linearise the convex quadratic programming problem. The usual Karush-Kuhn-Tucker conditions are still used but in this case a linear objective function is also formulated from the set of linear objective function equations and the complementary slackness conditions. There is an unboundedness challenge that is associated with the proposed linear formulation. To alleviate this challenge, an additional constraint is constructed and added to the linear formulation. The new linear formulation can be solved efficiently by the available simplex and interior point algorithms. There is no restricted base entry in the proposed approach. The time consuming complementarity pivoting is no longer necessary. Some computational experiments have been carried out and the objective of the computational experiments was to determine CPU times of the:

1) Proposed heuristic;

2) Regularised Active Set Method Mae and Saunders [10] ;

3) Primal-Dual Interior Point Algorithm.

It may be noted that the proposed method is suitable only if the quadratic programming problem satisfies conditions (1) to (5) mentioned in Section 2.1.

2 Mathematical Background

2.1 The Quadratic Programming Problem

Let a quadratic programming (QP) problem be represented by (1).

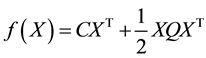

Minimize

Subject to:

(1)

(1)

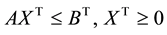

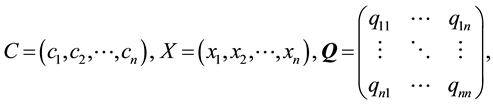

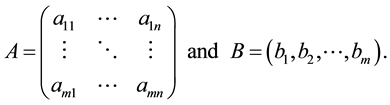

where

It is assumed that:

1) Matrix  is a

is a  symmetric and positive definite,

symmetric and positive definite,

2) Function  is strictly convex,

is strictly convex,

3) The conditions  and

and  hold. Here

hold. Here  and

and  are dual and primal slack variables, respectively.

are dual and primal slack variables, respectively.

4) Since constraints are linear then the solution space is convex, and

5) Any maximization quadratic problem can be changed into a minimization and vice versa.

When the function  is strictly convex for all points in the convex region then the quadratic problem has a unique local minimum which is also the global minimum [11] .

is strictly convex for all points in the convex region then the quadratic problem has a unique local minimum which is also the global minimum [11] .

2.2. Karush-Kuhn-Tucker Conditions

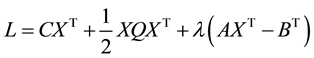

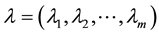

The convex quadratic programming problem has special features that we can capitalize on when solving. All constraints are linear and the only nonlinear expression is the objective function. Let the Lagrangian function for the QP problem be  and in this case

and in this case

(2)

(2)

where  and

and . In this case we exclude the non-negativity conditions

. In this case we exclude the non-negativity conditions![]() . If

. If ![]() and

and ![]() then the Karush-Kuhn-Tucker conditions as given in [11] for a local minimum are:

then the Karush-Kuhn-Tucker conditions as given in [11] for a local minimum are:

![]() (3)

(3)

![]() (4)

(4)

![]()

Complementary slackness conditions are given in (5) and are only satisfied at the optimal point. These conditions are:

![]() (5)

(5)

Note ![]() and

and ![]() are

are ![]() and

and ![]() dimensional vectors representing the slack variables. At this stage, we are unable to apply the simplex algorithm due to restricted base entry and this makes the simplex method approximately 8 times slower than its full speed compared to its unrestricted basis version.

dimensional vectors representing the slack variables. At this stage, we are unable to apply the simplex algorithm due to restricted base entry and this makes the simplex method approximately 8 times slower than its full speed compared to its unrestricted basis version.

2.3. Some Matrix Operations

Suppose ![]() and

and ![]() are single row matrices of the same dimension

are single row matrices of the same dimension ![]() and

and ![]() is an

is an ![]() dimensional matrix, the following must hold.

dimensional matrix, the following must hold.

![]() (6)

(6)

![]() (7)

(7)

Equations (6) and (7) can be easily verified. These simple results are used to eliminate the complementary slackness conditions.

3. Elimination of Complementary Slackness Conditions

3.1. Elimination of ![]()

Pre-multiply (3) by X, we have:

![]() (8)

(8)

![]() (9)

(9)

From (6) ![]() and from (5)

and from (5)![]() , then

, then

![]() (10)

(10)

By rearranging, we have

![]() (11)

(11)

3.2. Elimination of ![]()

Pre-multiply (4) by![]() , we have

, we have

![]() (12)

(12)

![]() (13)

(13)

Since from (4)![]() , then

, then

![]() (14)

(14)

3.3. Elimination of ![]() or

or ![]()

From (7), we have: ![]() , hence we can replace

, hence we can replace ![]() by

by ![]() in relations (11) to get t(15):

in relations (11) to get t(15):

![]() (15)

(15)

Subtracting (14) from (15), we obtain (16):

![]() (16)

(16)

3.4. Linear Objective Function for the Quadratic Programming Problem

Note that the expression in relation (13) is nonlinear but it can be rearranged so that the original quadratic programming objective function becomes a linear quantity. This can be achieved as follows:

Divide relation (16) by two, one obtains:

![]() (17)

(17)

Rearranging (17), we obtain (18):

![]() (18)

(18)

From (1)![]() , then, (18) becomes (19) or equivalently (20):

, then, (18) becomes (19) or equivalently (20):

![]() (19)

(19)

![]() (20)

(20)

Thus the nonlinear objective function of the QP problem is now linearised but it creates a new challenge. We will discuss this in the next section.

3.5. LP Equivalent to the Given QP

From (1), (3) and (20), we have the following LP problem:

Minimize ![]()

Subject to:

![]() (21)

(21)

The minimisation problem (21) will have an unbounded solution due to negative coefficient of ![]() in the objective function and negative coefficients of the slack variable

in the objective function and negative coefficients of the slack variable ![]() in the constraints. These are the only source

in the constraints. These are the only source

of unboundedness in the LP (21). Here, we let: ![]() and

and ![]() where

where ![]() a row vec-

a row vec-

tor of dimension![]() . The objective function is now modified as :

. The objective function is now modified as :

Minimize![]() , where

, where ![]() and

and ![]() are very large constants relative to all other objective

are very large constants relative to all other objective

coefficients. Both of these constants do not have to assume the same large values. A large number of experiments were done on a large number of quadratic programming problems and and it was observed that ![]() seems to work well. These experiments have been recorded later in this paper. In these experiments, it was noted that values of

seems to work well. These experiments have been recorded later in this paper. In these experiments, it was noted that values of ![]() and

and ![]() on higher side can be as much as

on higher side can be as much as ![]() and

and![]() .

.

3.6. Existence of a Linear Objective Function and Verification of Optimality

The optimal solution of a convex quadratic programming model is unique and it satisfies the complementary conditions ![]() and

and![]() . The unique optimal solution to the convex quadratic programming is a corner point

. The unique optimal solution to the convex quadratic programming is a corner point![]() . Since the KKT conditions can be expressed as a linear objective function that can make

. Since the KKT conditions can be expressed as a linear objective function that can make ![]() exist.

exist.

4. Numerical Illustrations

4.1. Example 1

Minimize ![]()

Subject to:

![]() (22)

(22)

This example was taken from Jensen and Bard (2012) without any modifications.

Linear formulation of the above QP

In this case we took ![]() and

and ![]() which are very large compared to coefficients 4; 8; 2.5; and 1.5. The LP problem is given by:

which are very large compared to coefficients 4; 8; 2.5; and 1.5. The LP problem is given by:

Maximize ![]()

Subject to

![]()

![]()

![]()

![]()

![]()

![]() (23)

(23)

The solution of (23) by the simplex method is given by:

![]() (24)

(24)

From the original QP objective function, we have the objective value given in (25).

![]() (25)

(25)

Verification of optimality

The solution is optimal because complementary slackness conditions are satisfied as given in (26).

![]() (26)

(26)

4.2. Two More Examples

Two more examples are solved to illustrate how the large constants are selected. Example 2 is taken from [12] and example 3 is from [13] .

Example 2 from [12]

Minimize ![]()

Subject to:

![]() (27)

(27)

The linear formulation of (27) becomes as given in (28).

Maximize ![]()

Such that:

![]() ,

,

![]() ,

,

![]() ,

,

![]() ,

,

![]()

![]() ,

,

![]()

![]() (28)

(28)

The solution of (28) is as given in (29) and once again it is optimal as all complementary slackness conditions are satisfied.

![]() (29)

(29)

Example 3 from [13]

Minimize ![]()

Subject to: ![]()

The linear formulation of the above example is given by (30).

Maximize ![]()

Subject to:

![]() ;

;

![]() (30)

(30)

The solution is given by: ![]() This solution is once again optimal as all complementary slackness conditions are satisfied.

This solution is once again optimal as all complementary slackness conditions are satisfied.

5. Computational Experiments

A set of convex quadratic programming test problems are given in [14] . All these test problems were used in testing the proposed approach. The objective of the computational experiments was:

1) To determine that the LP optimal solution is also optimal to the given QP.

2) Compare CPU times of the proposed heuristics with Regularized Active Set Method and Primal-Dual Interior Point Method

The results are tabulated in Table 1. MATLAB R2013 (version 8.2) running on an Intel Pentium Dual desktop

(Dual core G2020 2.9 GHz CPU, 2GB DDR3 1333 RAM) was used in these experiments. There were no advanced processing techniques embedded within the three methods. The set up time was excluded from the CPU times in all three methods. The zero (~0.00) means CPU time is less than 0.01 second. In all the test problems, it was found that the LP optimal solution was optimal to the QP problem. However, in the CPU time challenges were observed with the BODYD2 for the proposed heuristic and as a result we could not accurately obtain the necessary CPU time for these two cases. There was no challenge with the other two methods on the same BODYD2 problem. This experiment was conducted twice, but the same observation. We have no reason to support this behaviour but we believe it may be due to some local computational environment.

6. Conclusion

The convex QP problem can be solved like a linear programming problem efficiently either by the simplex method or the interior point algorithm. The restricted base entry is not necessary by the proposed approach. Complementary slackness can retard the simplex method, which is roughly eight times slower than the full speed simplex method. Taking complementary slackness conditions away itself is a big reduction in the number of constraints in the proposed linear formulation of the quadratic programming problem. More experiments are likely to give more insight and advantages of the proposed approach. The proposed method is in fact the usual simplex method applied to solving an ordinary LP that was obtained from the given convex QP. Also note that a large number of Maros-Maszaros test problems are giving rise to small to medium size LPs and therefore the proposed method dominates solving a large number of QPs, as is reflected in Table 1. From these results, it may be noted that, for example in the case of medium sized problems at serial 118 to 124 and large sized problems at serial number 125 to 132, the proposed heuristic outperformed the other two with respect to the cpu time.

Acknowledgements

The authors are thankful to the referees for their helpful and constructive comments.

NOTES

*Corresponding author.