1. Introduction

A simple form of the Perron-Frobenius theorem states the following (see [1,2]):

If  is a real

is a real  matrix with strictly positive entries

matrix with strictly positive entries , then:

, then:

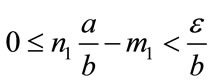

1) A has a positive eigenvalue r which is equal to the spectral radius of A2) r is a simple3) r has a unique positive eigenvector v4) An estimate of r is given by the inequalities:
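Claims 1) to 3) can be spot-checked numerically before turning to the proof. The sketch below assumes NumPy and uses a randomly generated positive $6 \times 6$ matrix, which is a hypothetical test case and not taken from the paper. It uses a dense eigensolver rather than the homotopy method developed here. The helper name `perron_root` is illustrative.

```python
import numpy as np

def perron_root(A):
    """Return (r, v, others) for a square matrix A.

    r is the eigenvalue of largest modulus, v is an eigenvector for r scaled
    so its entries sum to 1, and others holds the remaining eigenvalues.
    Uses a dense eigensolver, not the paper's homotopy method."""
    eigvals, eigvecs = np.linalg.eig(A)
    k = int(np.argmax(np.abs(eigvals)))
    v = eigvecs[:, k] / eigvecs[:, k].sum()   # removes the arbitrary sign/phase
    others = np.delete(eigvals, k)
    return eigvals[k], v, others

rng = np.random.default_rng(0)
A = rng.uniform(0.1, 1.0, size=(6, 6))        # hypothetical positive matrix

r, v, others = perron_root(A)
print("r =", r.real, " |imag part| =", abs(r.imag))
print("v =", v.real)
print("max |other eigenvalue| =", np.abs(others).max())
```

For a positive matrix the printed r should be real and positive, v should have strictly positive entries, and every other eigenvalue should be strictly smaller in modulus.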

The general form of the Perron-Frobenius theorem involves non-negative irreducible matrices. For simplicity, we confine ourselves in this paper to the case of positive matrices. A proof of the more general form of the theorem can be obtained by modifying the proof for positive matrices given here.

The Perron-Frobenius theorem has applications in numerous fields, including probability, economics, and demography. Its wide use stems from the fact that eigenvalue problems for such matrices arise frequently in many different fields of science and engineering [3]. Reference [3] discusses applications of the theorem in diverse areas such as the steady-state behaviour of Markov chains, power control in wireless networks, commodity pricing models in economics, population growth models, and Web search engines.

We became interested in the theorem because of its important role in the study of interval matrices. The elements of an interval matrix are intervals of $\mathbb{R}$. In [4], the theorem is used to establish conditions for the regularity of an interval matrix (an interval matrix is regular if every point matrix it contains is invertible). In Section 4 we develop a method for approximating Perron’s interval eigenvalue of a given positive interval matrix. See [5] for a broad exposition of interval matrices.

Since the Perron-Frobenius theorem emerged from the work of Perron [1] and Frobenius [2], different proofs have been developed. A popular line of proof starts with the Brouwer fixed point theorem, which is also how our proof begins. Another popular proof is that of Wielandt, who used the Collatz-Wielandt formula to extend and clarify Frobenius’s work. See [6] for an interesting discussion of the different proofs of the theorem.

It is interesting that this theorem can be proved and applied with very different flavours. Most proofs are based on algebraic and analytic techniques; for example, [7] uses Markov chains and transition probability matrices. In addition, several authors have given interesting geometric proofs: see [8,9]. Some techniques and results, such as the Perron projection and bounds for the spectral radius, are developed within these proofs. A more detailed history of the geometry-based proofs of the theorem can be found in [8].

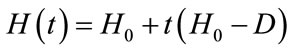

In our proof, a homotopy method is used to construct the eigenpairs of the positive matrix A. Starting with some matrix D with known eigenpairs, we find the eigenpairs of the matrix $A(t) = (1-t)D + tA$ for t going from 0 to 1. If, for each t, all eigenvalues of $A(t)$ are simple, then the eigencurves $(\lambda_i(t), v_i(t))$ do not intersect as t varies from 0 to 1.

Our proof requires that the curve formed by the greatest eigenvalues $\lambda_1(t)$ and its reflection about the real axis (i.e., $-\lambda_1(t)$) do not intersect any other eigencurve. Together they form a “restricting area” for all other eigenvalue curves. As a result, the absolute value of any other eigenvalue will be strictly less than $\lambda_1(t)$ for $0 \le t \le 1$. By choosing an initial matrix $A(0) = D$ that has the desired properties stated in the Perron-Frobenius theorem, we will show that the “restricting area” preserves these properties along the eigencurves for all $t \in [0, 1]$, and for $t = 1$ (that is, for $A(1) = A$) in particular.
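The “restricting area” can be observed numerically. The sketch below assumes NumPy. It computes the spectrum of $A(t) = (1-t)D + tA$ on a grid of t values, with D the $n \times n$ all-ones matrix used later in the paper, and compares $\lambda_1(t)$ with the largest modulus among the remaining eigenvalues. The test matrix, the grid and the helper name `spectrum_along_homotopy` are hypothetical choices. A finite grid is evidence only, not a proof.

```python
import numpy as np

def spectrum_along_homotopy(A, D, ts):
    """For each t in ts, return (t, lambda_1(t), max modulus of the others).

    lambda_1(t) is the eigenvalue of largest modulus of
    A(t) = (1 - t) D + t A."""
    out = []
    for t in ts:
        At = (1.0 - t) * D + t * A
        lam = np.linalg.eigvals(At)
        order = np.argsort(-np.abs(lam))      # sort by decreasing modulus
        lam1 = lam[order[0]]
        rest = np.abs(lam[order[1:]]).max()
        out.append((t, lam1, rest))
    return out

n = 5
rng = np.random.default_rng(1)
A = rng.uniform(0.1, 1.0, size=(n, n))        # hypothetical positive matrix
D = np.ones((n, n))                           # starting matrix: all ones
ts = np.linspace(0.0, 1.0, 11)
curve = spectrum_along_homotopy(A, D, ts)
for t, lam1, rest in curve:
    print(f"t={t:.1f}  lambda_1={lam1.real:.4f}  max|others|={rest:.4f}")
```

At $t = 0$ the output reproduces the spectrum of D, namely $\lambda_1 = n$ with all other eigenvalues (numerically) zero. The gap should persist as t moves towards 1.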

Our proof is elementary and therefore, we believe, easier to follow than other proofs. While most other proofs focus on the matrix A itself, we approach the problem by analysing a family of matrices. We study some intuitive structures of the eigenvalues of positive matrices and show how those structures are preserved along a homotopy of matrices. Thus, our proof provides an alternative perspective on the behaviour of eigenvalues in a homotopy.

Furthermore, our proof is constructive. The idea is to start with the known eigenpair corresponding to the maximal eigenvalue of D. We then use the homotopy method to follow the eigencurve of the maximal eigenvalues of the positive matrices $A(t)$, applying techniques such as Newton’s method. Recently, many articles have been devoted to using homotopy methods to find eigenvalues; see, for example, [10-12] and the references therein. In most cases, the diagonal of A is used as the starting matrix D. Still, there is interest in finding a more efficient D, one which differs less from A. The matrix D constructed in our proof offers an alternative. It is promising because, with proper scaling, it can behave as a kind of “average” matrix.

2. The Proof

In the following sections, $A = (a_{ij})$ will denote a real $n \times n$ matrix with strictly positive entries, i.e. $a_{ij} > 0$ for all $1 \le i, j \le n$. If $\lambda$ is an eigenvalue of A and v is a corresponding eigenvector, then $(\lambda, v)$ forms an eigenpair for A. A vector is positive if all of its components are positive. An eigenpair is positive if both its eigenvalue and its eigenvector are positive.

Lemma 2.1. A has a positive eigenpair $(r, v)$.

Proof. Define the function $f: V \to V$ by
$$f(v) = \frac{Av}{\|Av\|_\infty},$$
where
$$V = \Big\{ v \in \mathbb{R}^n : 0 \le v_i \le 1 \text{ for all } i, \ \sum_{i=1}^{n} v_i \ge 1 \Big\},$$
and $\|w\|_\infty = \max_i |w_i|$ denotes the maximum norm of $w \in \mathbb{R}^n$.

Then f is continuous, since V does not contain the zero vector and $\|Av\|_\infty$ is positive for any v in V. V is convex and compact: it is closed and bounded, hence compact, and convexity follows trivially. Moreover $f(V) \subseteq V$, since $\|f(v)\|_\infty = 1$ and the maximum norm of a non-negative vector is dominated by the sum of its components. According to the Brouwer fixed point theorem, a continuous function f which maps a convex compact subset K of a Euclidean space into itself must have a fixed point in K. Thus, there exists v in V such that $f(v) = v$, that is, $Av = rv$ with $r = \|Av\|_\infty$. No component of v can be 0, since any positive matrix operating on a non-negative vector with at least one positive element results in a strictly positive vector. So v is a positive eigenvector of A, and the associated eigenvalue r is also positive.
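Brouwer’s theorem only asserts that a fixed point of $f(v) = Av/\|Av\|_\infty$ exists. For a positive matrix, one can also reach it by simply iterating f from a positive starting vector, which is the power method with maximum-norm scaling. That convergence is a standard property of the power method for a matrix with a strictly dominant eigenvalue; it is not part of the proof above. The minimal NumPy sketch below uses a hypothetical $2 \times 2$ matrix, and the function names `f` and `fixed_point` are illustrative.

```python
import numpy as np

def f(A, v):
    """The map f(v) = A v / ||A v||_inf from the proof of Lemma 2.1."""
    w = A @ v
    return w / np.max(np.abs(w))

def fixed_point(A, tol=1e-13, max_iter=10_000):
    """Iterate f from the all-ones vector until successive iterates agree.

    For positive A this is the power method; it converges to the fixed
    point v of f, and r = ||A v||_inf is the associated eigenvalue."""
    v = np.ones(A.shape[0])
    for _ in range(max_iter):
        v_next = f(A, v)
        if np.max(np.abs(v_next - v)) < tol:
            v = v_next
            break
        v = v_next
    r = np.max(np.abs(A @ v))
    return r, v

A = np.array([[2.0, 1.0], [1.0, 3.0]])        # hypothetical positive matrix
r, v = fixed_point(A)
print(r, v)
```

For this matrix the eigenvalues are $(5 \pm \sqrt{5})/2$, so the iteration should return $r = (5 + \sqrt{5})/2 \approx 3.618$ and $v \approx (0.618, 1)$. The largest entry of v is 1, as required by the scaling in f.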

Lemma 2.2. If r is the positive eigenvalue associated with the eigenvector v in the previous lemma, then r has no other (independent) eigenvector.

Proof. Suppose on the contrary, there is another positive eigenvector x for r. Assume that x and v are independent.

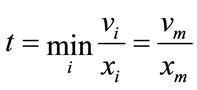

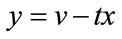

Let

Let m be an index such that . Let

. Let , then y is an eigenvector for A associated with eigenvalue r. It’s clear that

, then y is an eigenvector for A associated with eigenvalue r. It’s clear that  and

and  for all i. Since x and v are linearly independent,

for all i. Since x and v are linearly independent, . Therefore,

. Therefore, . On the other hand,

. On the other hand,  , a contradiction. Therefore v is the only eigenvector for r.

, a contradiction. Therefore v is the only eigenvector for r.
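The key step of this proof subtracts the largest multiple $tv$ of v that keeps $x - tv$ non-negative. It can be traced numerically. The NumPy sketch below uses a hypothetical symmetric $2 \times 2$ matrix and a positive vector x that is not an eigenvector. It shows that $y = x - tv$, with $t = \min_i x_i / v_i$, has a zero entry $y_m$ while $(Ay)_m > 0 = r y_m$, so such a y can never satisfy $Ay = ry$.

```python
import numpy as np

A = np.array([[2.0, 1.0], [1.0, 3.0]])        # hypothetical positive matrix
eigvals, eigvecs = np.linalg.eigh(A)          # A is symmetric here, so eigh applies
r, v = eigvals[-1], np.abs(eigvecs[:, -1])    # Perron pair; abs() fixes the sign

x = np.array([1.0, 1.0])                      # a positive vector independent of v
ratios = x / v
t = np.min(ratios)                            # t = min_i x_i / v_i
m = int(np.argmin(ratios))                    # index attaining the minimum
y = x - t * v                                 # y >= 0 and y[m] == 0 (up to rounding)

print("y =", y)
print("(A y)_m =", (A @ y)[m], "  r * y_m =", r * y[m])
```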

Lemma 2.3. v is the only positive eigenvector for A.

Proof. Suppose, on the contrary, that there is another positive eigenvector x (independent of v) associated with an eigenvalue $\mu$. It is clear that $\mu > 0$, since $Ax$ is positive. According to Lemma 2.2, $\mu \ne r$. Without loss of generality, assume $\mu > r$ (otherwise interchange the roles of $(r, v)$ and $(\mu, x)$). Let
$$t = \min_{1 \le i \le n} \frac{v_i}{x_i},$$
and suppose the minimum is attained at $i = m$. Let $y = v - tx$; then, just as in the previous lemma, $y_m = 0$, $y_i \ge 0$ for all i, and $y \ne 0$. It follows that $Ay$ is a positive vector.

But, since $v_m = t x_m$,
$$(Ay)_m = r v_m - t \mu x_m = t x_m (r - \mu) < 0,$$
which contradicts $(Ay)_m > 0$.
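Lemma 2.3 can be spot-checked by scanning every eigenvector returned by a dense eigensolver. The sketch keeps those that, after rescaling by their largest-modulus entry, are real with strictly positive entries. According to the lemma exactly one should survive, namely the one belonging to the eigenvalue of largest modulus. This is a NumPy sketch on a hypothetical random matrix. The helper name `positive_eigvecs` and the tolerance `tol` are assumptions.

```python
import numpy as np

def positive_eigvecs(A, tol=1e-12):
    """Return (eigvals, hits): hits lists the indices k whose eigenvector,
    rescaled so its largest-modulus entry equals 1, is real and positive."""
    eigvals, eigvecs = np.linalg.eig(A)
    hits = []
    for k in range(len(eigvals)):
        w = eigvecs[:, k]
        w = w / w[np.argmax(np.abs(w))]       # remove the arbitrary scalar factor
        if np.all(np.abs(w.imag) < tol) and np.all(w.real > tol):
            hits.append(k)
    return eigvals, hits

rng = np.random.default_rng(2)
A = rng.uniform(0.1, 1.0, size=(5, 5))        # hypothetical positive matrix
eigvals, hits = positive_eigvecs(A)
print("eigenvalues:", eigvals)
print("indices of positive eigenvectors:", hits)
```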

Remark. The previous lemmas imply that there exists a unique positive eigenpair $(r, v)$ for A.

Lemma 2.4. There is no negative eigenvalue $\lambda$ for A such that $|\lambda| = r$, where $(r, v)$ is the positive eigenpair of A.

Proof. Suppose the statement of the lemma is false. It follows that there exists an eigenpair $(\lambda, u)$ such that $\lambda = -r$. Then $(r^2, u)$ is an eigenpair for $A^2$. On the other hand, $(r^2, v)$ is also an eigenpair for $A^2$. So there are two different eigenvectors, u and v, associated with $r^2$; they are linearly independent because they belong to the distinct eigenvalues $-r$ and $r$ of A. Since $A^2$ is a positive matrix with positive eigenpair $(r^2, v)$, this contradicts Lemma 2.2 and completes the proof of this lemma.

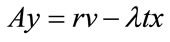

Lemma 2.5. Suppose . Then ,  such that

(1)

(2)

Proof. Inequalities (1) and (2) are equivalent to

(3)

(3)

(4)

(4)

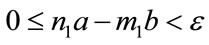

According to Dirichlet’s approximation theorem, for any , there is  such that

Let .

Then  satisfy (3).

Now let . If , then  satisfy (4). If , then

so  satisfy (4).
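Dirichlet’s approximation theorem, invoked in this proof, states the following. For any real $\alpha$ and any integer $N \ge 1$ there are integers p, q with $1 \le q \le N$ and $|q\alpha - p| < 1/N$. The brute-force search below illustrates this. With $\alpha = \theta / (2\pi)$, it produces integer multiples $q\theta$ lying within $2\pi/N$ of a multiple of $2\pi$, so $e^{iq\theta}$ is close to 1. Rotation arguments of this kind are how such approximations are typically used for eigenvalues on the circle $|\lambda| = r$. The angle `theta` and the helper `dirichlet_q` are hypothetical choices.

```python
import math

def dirichlet_q(alpha, N):
    """Search q in 1..N minimising the distance from q*alpha to the nearest
    integer; Dirichlet's theorem guarantees this minimum is below 1/N."""
    best_q, best_d = 1, 1.0
    for q in range(1, N + 1):
        d = abs(q * alpha - round(q * alpha))
        if d < best_d:
            best_q, best_d = q, d
    return best_q, best_d

theta = 2.0                                   # hypothetical angle in radians
alpha = theta / (2 * math.pi)
for N in (10, 100, 1000):
    q, d = dirichlet_q(alpha, N)
    # q*theta is within 2*pi*d of a multiple of 2*pi, so e^{i q theta} is near 1
    dist_to_one = abs(complex(math.cos(q * theta), math.sin(q * theta)) - 1)
    print(N, q, d, dist_to_one)
```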

Lemma 2.6. There does not exist a complex (non-real) eigenvalue $\lambda$ of A such that $|\lambda| = r$.

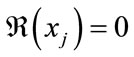

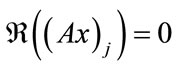

Proof. Suppose, on the contrary, that there exists an eigenpair  such that , where  and . Let . It’s impossible that

for all j, for this would make

for all j. However, it’s clear that  when .

Therefore, there exists some $x_j$ such that . (If not, then consider .) Suppose

and t is obtained at . Let , then  for all i. Either  or there exists some n such that . Indeed, if  for all i, then let m be the index of the element with non-zero imaginary part. For any ,

If , then according to Lemma 2.5, there exists  such that

It follows that , a contradiction.

The case for  is similar.

If , then there exists some p such that . Let , . Require  to be sufficiently small so that  is still a positive vector. It follows that for any ,

. But according to Lemma 2.5, for any , there exists  such that . Then . This again results in a contradiction, and hence the eigenpair  does not exist.

Remark. The previous lemmas imply that if $(r, v)$ is the unique positive eigenpair of A, then r is equal to the spectral radius of A. Indeed, let $(\lambda, x)$ be any eigenpair corresponding to an eigenvalue of maximum absolute value. Then it can be shown that $(|\lambda|, |x|)$, where $|x| = (|x_1|, \dots, |x_n|)^T$, is an eigenpair with a positive eigenvector. The above lemmas then imply that $|\lambda| = r$, and, by Lemmas 2.4 and 2.6, that $\lambda = r$.

Lemma 2.7. The $n \times n$ matrix
$$D = (d_{ij}), \qquad d_{ij} = 1 \text{ for all } 1 \le i, j \le n,$$
has a simple eigenvalue n and the eigenvalue 0 with algebraic multiplicity $n - 1$. In addition, the eigenvector associated with n is positive.

Proof. Since $D(1, \dots, 1)^T = n(1, \dots, 1)^T$, n is an eigenvalue of D. Likewise, the vectors $e_1 - e_k$, $k = 2, \dots, n$, where $e_k$ denotes the k-th standard basis vector, are $n - 1$ independent eigenvectors of D associated with the eigenvalue 0. So 0 is an eigenvalue of D with multiplicity at least $n - 1$. Since an $n \times n$ matrix has exactly n eigenvalues counted with algebraic multiplicity, these are all the eigenvalues of D. In particular, n is simple and 0 has algebraic multiplicity exactly $n - 1$. Therefore, the eigenvalue of greatest absolute value of D is positive and simple, and its corresponding eigenvector $(1, \dots, 1)^T$ has positive entries.
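The eigenstructure of the all-ones matrix D in Lemma 2.7 can be confirmed directly for a concrete size. The NumPy sketch below takes $n = 5$, the size used in Section 3. It checks that $(1, \dots, 1)^T$ is an eigenvector for n, that the vectors $e_1 - e_k$ span an $(n-1)$-dimensional subspace of the kernel, and that the computed spectrum is $\{0, \dots, 0, n\}$.

```python
import numpy as np

n = 5
D = np.ones((n, n))                           # the all-ones matrix of Lemma 2.7
ones = np.ones(n)
print(D @ ones)                               # equals n * ones, so n is an eigenvalue

# Columns e_1 - e_k, k = 2..n: n - 1 independent vectors in the kernel of D
E = np.array([np.eye(n)[0] - np.eye(n)[k] for k in range(1, n)]).T
print(np.abs(D @ E).max(), np.linalg.matrix_rank(E))

eigs = np.sort(np.linalg.eigvalsh(D))         # D is symmetric, so eigvalsh applies
print(eigs)                                   # approximately [0, 0, 0, 0, 5]
```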

Theorem 2.1. Let A be any positive matrix. Then A has a positive simple maximal eigenvalue r such that any other eigenvalue $\lambda$ satisfies $|\lambda| < r$, and a unique positive eigenvector v corresponding to r. In addition, this unique positive eigenpair $(r, v)$ can be found by following the maximal eigenpair curve $(\lambda_1(t), v_1(t))$ of the family of matrices
$$A(t) = (1-t)D + tA, \qquad 0 \le t \le 1,$$
where D is the $n \times n$ matrix defined in Lemma 2.7.

Proof. The first part of the statement of the theorem follows from the previous lemmas. We will denote the eigenpairs of the matrix D by $(\lambda_1(0), v_1(0)) = (n, (1, \dots, 1)^T)$ and $(\lambda_i(0), v_i(0))$, $i = 2, \dots, n$, where $\lambda_i(0) = 0$.

The matrices $A(t) = (1-t)D + tA$, $0 \le t \le 1$, are all positive matrices. We will now examine the eigencurves $(\lambda_i(t), v_i(t))$, where $\lambda_i(t)$ is a particular eigenvalue of $A(t)$ and $v_i(t)$ is an eigenvector associated with it. Since each $A(t)$ is positive, the first part of the theorem applies to it. Its maximal eigenvalue is therefore simple and strictly larger in absolute value than every other eigenvalue of $A(t)$. Hence the eigencurve $\lambda_1(t)$ starting at $\lambda_1(0) = n$ does not intersect any other eigencurve at any time, and $\lambda_1(t)$ remains the largest eigenvalue. Therefore, the unique positive eigenpair $(r, v) = (\lambda_1(1), v_1(1))$ of the matrix A can be found by following the maximal eigenpair curve $(\lambda_1(t), v_1(t))$.

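Theorem 2.2. An estimate of r is given by:
$$\min_{1 \le i \le n} \sum_{j=1}^{n} a_{ij} \;\le\; r \;\le\; \max_{1 \le i \le n} \sum_{j=1}^{n} a_{ij}.$$

Proof. Let v be the positive eigenvector for r, and suppose
$$v_m = \max_{1 \le i \le n} v_i, \qquad v_k = \min_{1 \le i \le n} v_i;$$
then
$$r v_m = \sum_{j=1}^{n} a_{mj} v_j \le v_m \sum_{j=1}^{n} a_{mj}, \qquad r v_k = \sum_{j=1}^{n} a_{kj} v_j \ge v_k \sum_{j=1}^{n} a_{kj}.$$
Therefore, since $v_m > 0$ and $v_k > 0$,
$$\min_{1 \le i \le n} \sum_{j=1}^{n} a_{ij} \le \sum_{j=1}^{n} a_{kj} \le r \le \sum_{j=1}^{n} a_{mj} \le \max_{1 \le i \le n} \sum_{j=1}^{n} a_{ij}.$$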
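The row-sum bounds $\min_i \sum_j a_{ij} \le r \le \max_i \sum_j a_{ij}$ are easy to spot-check. The NumPy sketch below computes r as the spectral radius, which by the remark after Lemma 2.6 equals the Perron eigenvalue of a positive matrix. The random test matrices and the helper `row_sum_bounds` are hypothetical. When all row sums are equal, the two bounds coincide and determine r exactly, as for the all-ones matrix.

```python
import numpy as np

def row_sum_bounds(A):
    """Return (min row sum, Perron root, max row sum) for a positive matrix A."""
    r = np.abs(np.linalg.eigvals(A)).max()    # spectral radius = Perron root r
    row_sums = A.sum(axis=1)
    return row_sums.min(), r, row_sums.max()

rng = np.random.default_rng(3)
results = [row_sum_bounds(rng.uniform(0.1, 2.0, size=(4, 4))) for _ in range(5)]
for lo, r, hi in results:
    print(f"{lo:.4f} <= {r:.4f} <= {hi:.4f}")

print(row_sum_bounds(np.ones((3, 3))))        # equal row sums force r = 3
```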

Remark. This completes the proof of the Perron-Frobenius theorem for positive matrices. The proof can be modified to prove the more general case of irreducible non-negative matrices. For example, this can be done by letting , where D is the matrix defined in Lemma 2.7. As we noted in the introduction, we will next demonstrate how to use the homotopy method to find the largest eigenvalue of a positive matrix A numerically.

3. Numerical Example

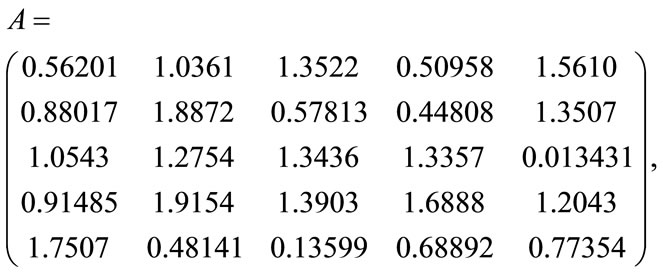

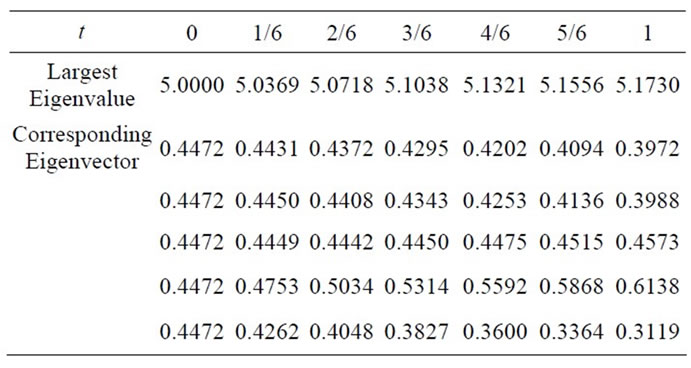

In this section we use the homotopy method to approximate the positive eigenpair of the matrix:

starting with the 5 × 5 matrix D whose entries are all ones. In [12] it is shown that the homotopy curves connecting the eigenpairs of the starting matrix D to those of A can be followed using Newton’s method. We use these techniques to follow the eigencurve associated with the largest eigenvalue of D. While [12] finds all the eigenvalues of symmetric tridiagonal matrices, the method works well for approximating the largest eigenvalue of any positive matrix, owing to the separation of its eigencurves (see [12] for details).
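The matrix of this example is not reproduced here, so the sketch below uses a hypothetical random positive 5 × 5 matrix in its place. It follows the maximal eigenpair of $A(t) = (1-t)D + tA$ from $(n, \tfrac{1}{n}(1, \dots, 1)^T)$ at $t = 0$. At each step in t, it corrects with Newton’s method on the bordered system $(A(t) - \lambda I)v = 0$, $\sum_i v_i = 1$. The normalization, the uniform step size, and the use of the previous point as the initial guess are our assumptions, not necessarily the scheme of [12]. The Jacobian of the bordered system stays nonsingular along the path because the maximal eigenvalue of each positive $A(t)$ is simple.

```python
import numpy as np

def newton_correct(At, lam, v, tol=1e-12, max_iter=20):
    """Solve (At - lam I) v = 0 with sum(v) = 1 by Newton's method on the
    bordered system F(v, lam) = [(At - lam I) v; sum(v) - 1]."""
    n = len(v)
    for _ in range(max_iter):
        F = np.concatenate([At @ v - lam * v, [v.sum() - 1.0]])
        if np.max(np.abs(F)) < tol:
            break
        J = np.zeros((n + 1, n + 1))
        J[:n, :n] = At - lam * np.eye(n)      # dF/dv
        J[:n, n] = -v                         # dF/dlam
        J[n, :n] = 1.0                        # derivative of the normalisation
        step = np.linalg.solve(J, -F)
        v, lam = v + step[:n], lam + step[n]
    return lam, v

def follow_maximal_eigenpair(A, steps=50):
    """Track (lambda_1(t), v_1(t)) of A(t) = (1 - t) D + t A from t = 0 to 1,
    where D is the all-ones matrix with maximal eigenpair (n, ones / n)."""
    n = A.shape[0]
    D = np.ones((n, n))
    lam, v = float(n), np.ones(n) / n
    path = [(0.0, lam)]
    for t in np.linspace(0.0, 1.0, steps + 1)[1:]:
        At = (1.0 - t) * D + t * A
        lam, v = newton_correct(At, lam, v)   # previous point is the initial guess
        path.append((t, lam))
    return lam, v, path

rng = np.random.default_rng(4)
A = rng.uniform(0.1, 1.0, size=(5, 5))        # hypothetical stand-in matrix
lam, v, path = follow_maximal_eigenpair(A)
print("lambda_1(1) =", lam)
print("reference   =", np.abs(np.linalg.eigvals(A)).max())
```

The list `path` holds the pairs $(t, \lambda_1(t))$, which is the kind of data from which an eigenpath plot such as Figure 1 can be drawn.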

The eigenpath of $\lambda_1(t)$, shown in Figure 1, is constructed using the numerical results presented in the following table:

4. An Application to Positive Interval Matrices

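To differentiate the ordinary matrices of the previous sections from interval matrices, we will call them point matrices in this section. As noted in Section 1, the elements of an interval matrix are intervals; such a matrix is of the form $A = [\underline{A}, \overline{A}] = \{B : \underline{A} \le B \le \overline{A}\}$, where $\underline{A}$ and $\overline{A}$, with $\underline{A} \le \overline{A}$ entrywise, are point matrices.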

Definition 4.1. We call $A = [\underline{A}, \overline{A}]$ a positive interval matrix if $\underline{A}$ and $\overline{A}$ are positive. The set E is Perron’s interval eigenvalue of A if E consists of all positive real maximal eigenvalues of all the positive point matrices B with $\underline{A} \le B \le \overline{A}$.

We are interested in determining Perron’s interval eigenvalue E of A. We will show that if s is the Perron’s eigenvalue of $\underline{A}$ and t is the Perron’s eigenvalue of $\overline{A}$, then $E = [s, t]$. Therefore, we can approximate E using the homotopy method introduced in this paper.

Lemma 4.1. Let B be an $n \times n$ positive point matrix with Perron’s eigenpair $(\lambda_B, x)$, and let C be an $n \times n$ positive point matrix with Perron’s eigenpair $(\lambda_C, y)$. Suppose $b_{ij} \le c_{ij}$ for all $1 \le i, j \le n$; then $\lambda_B \le \lambda_C$.

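Proof. Let $\alpha = \max_{1 \le i \le n} x_i / y_i$, and suppose the maximum is obtained when $i = m$, so that $x_j \le \alpha y_j$ for all j and $x_m = \alpha y_m$. Then
$$\lambda_B x_m = \sum_{j=1}^{n} b_{mj} x_j \le \sum_{j=1}^{n} c_{mj} x_j \le \alpha \sum_{j=1}^{n} c_{mj} y_j = \alpha \lambda_C y_m = \lambda_C x_m,$$
and dividing by $x_m > 0$ gives $\lambda_B \le \lambda_C$.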
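Lemma 4.1 says the Perron’s eigenvalue is monotone in the entries: if $b_{ij} \le c_{ij}$ for all i, j, then $\lambda_B \le \lambda_C$. The quick NumPy check below computes the Perron’s eigenvalue as the spectral radius, which the remark after Lemma 2.6 justifies for positive matrices. The matrices B and C and the helper `perron_value` are hypothetical.

```python
import numpy as np

def perron_value(M):
    """Perron's eigenvalue of a positive matrix M, computed as its spectral radius."""
    return np.abs(np.linalg.eigvals(M)).max()

rng = np.random.default_rng(5)
B = rng.uniform(0.1, 1.0, size=(4, 4))        # hypothetical positive matrix B
C = B + rng.uniform(0.0, 0.5, size=(4, 4))    # C >= B entrywise
print(perron_value(B), "<=", perron_value(C))
```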

Figure 1. The maximal eigenvalue path for A.

Theorem 4.1. Let $A = [\underline{A}, \overline{A}]$ be a positive interval matrix, and let E be its Perron’s interval eigenvalue. Suppose s is the Perron’s eigenvalue of $\underline{A}$ and t is the Perron’s eigenvalue of $\overline{A}$; then $E = [s, t]$.

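Proof. For any $B \in A$, we have $\underline{A} \le B \le \overline{A}$. Suppose $\lambda_B$ is the Perron’s eigenvalue of B; then $s \le \lambda_B \le t$ by the previous lemma. Therefore $E \subseteq [s, t]$.

Let $\Delta = \overline{A} - \underline{A}$. Define the function $f: [0, 1] \to \mathbb{R}$ by
$$f(\tau) = \text{the Perron's eigenvalue of } \underline{A} + \tau \Delta.$$
Then $f(0) = s$ and $f(1) = t$. The function f is continuous, since the Perron’s eigenvalue of a positive matrix equals its spectral radius, which depends continuously on the entries of the matrix. Then, by the Intermediate Value Theorem, for every $\mu \in [s, t]$ there is some $\tau \in [0, 1]$ such that $f(\tau) = \mu$. Since $\underline{A} + \tau \Delta \in A$, it follows that $[s, t] \subseteq E$.

It follows that
$$E = [s, t].$$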
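Both halves of the proof of Theorem 4.1 can be mirrored numerically. Random point matrices B between the bounds should have Perron’s eigenvalues in $[s, t]$. The function $f(\tau)$, the Perron’s eigenvalue of $\underline{A} + \tau \Delta$, should run continuously and, by Lemma 4.1, monotonically from s to t. In this NumPy sketch, `A_low` and `A_up` stand for $\underline{A}$ and $\overline{A}$ and are hypothetical random data. Sampling only illustrates the theorem; it does not prove it.

```python
import numpy as np

def perron_value(M):
    """Perron's eigenvalue of a positive matrix M, computed as its spectral radius."""
    return np.abs(np.linalg.eigvals(M)).max()

rng = np.random.default_rng(6)
A_low = rng.uniform(0.1, 1.0, size=(4, 4))            # hypothetical lower bound
A_up = A_low + rng.uniform(0.0, 0.5, size=(4, 4))     # hypothetical upper bound
Delta = A_up - A_low
s, t = perron_value(A_low), perron_value(A_up)

# Perron's eigenvalues of random point matrices B with A_low <= B <= A_up
samples = [perron_value(A_low + rng.uniform(0.0, 1.0, size=(4, 4)) * Delta)
           for _ in range(1000)]

# f(tau) = Perron's eigenvalue of A_low + tau * Delta, as in the proof
taus = np.linspace(0.0, 1.0, 101)
f_tau = [perron_value(A_low + tau * Delta) for tau in taus]

print(f"E = [{s:.4f}, {t:.4f}]; sampled range [{min(samples):.4f}, {max(samples):.4f}]")
```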

Remark. Theorem 4.1 shows that in order to find Perron’s interval eigenvalue E of A, we only need to find the Perron’s eigenvalues of $\underline{A}$ and $\overline{A}$, which can be approximated using the technique introduced in the previous section.

5. Acknowledgements

This research was partially carried out by two students: Yun Cheng and Timothy Carson, under the supervision of Professor M. B. M. Elgindi, and was partially sponsored by the NSF Research Experience for Undergraduates in Mathematics Grant Number: 0552350 and the Office of Research and Sponsored Programs at the University of Wisconsin-Eau Claire, Eau Claire, Wisconsin 54702-4004, USA.

NOTES