Modulus-Based Matrix Splitting Iteration Methods for a Class of Stochastic Linear Complementarity Problem ()

1. Introduction

The complementarity problems have been widely used in the engineering design, information technology, economic equilibrium, etc. Since some elements may involve uncertain data in practical applications, many problems can be attributed to stochastic variational inequality problems or stochastic linear complementarity problems, and arouse the attention of many researchers. Gurkan et al. [1] proposed the expected value (EV) formulation of stochastic variational inequality by using the sample-path method. Chen and Fukushima [2] proposed the expected residual minimization (ERM) formulation for stochastic linear complementarity problems by quasi-Monte Carlo methods. Lin and Fukushima [3] proposed the stochastic mathematical programs with equilibrium constraints (SMPEC) for the stochastic nonlinear complementarity problems. Zhou and Cacceta [4] transformed the monotone stochastic linear complementarity problem (SLCP) in finite sample space into a constrained minimization problem, and solved it with the Feasible Semi-smooth Newton Method. Mangasarian and Ren [5] given a new error bound for the monotone LCP based on the error bounds. Chen et al. [6] studied the SLCP involving a random matrix whose expectation matrix is positive semi-definite. Zhang and Chen [7] proposed a smooth projection gradient algorithm to solve the SLCP. However, these methods are only suitable for solving the small-scale SLCP.

In recent years, some scholars have proposed a series of methods for the study of large-scale complementarity problems. Dong and Jiang [8] proposed a class of modified modulus-based method. Bai [9] presented a class of modulus-based matrix splitting iteration methods. Bai and Evans [10] [11] also proposed a class of modulus-based synchronous multi-splitting (MSM) iteration methods. Bai and Zhang [12] further proposed a synchronous two-stage multi-splitting iteration method, which can be applied to solving the large-scale linear complementarity problems. Zhang [13] summarized the latest development and achievements of the modulus-based matrix splitting iteration methods, including the corresponding multi-splitting iteration methods, etc. Zhang [14] improved the convergence theorem of matrix multi-splitting methods for linear complementarity problems. Such methods are easy to be implemented and very efficient in practical applications, and there is no need to project iteration results into space

. Li et al. [15] [16] [17] [18] applied a class of modulus-based matrix splitting iteration methods to solving the nonlinear complementarity problem. Numerical results show that the methods are efficient. In the past decade many scholars have made many new achievements in this field, see the literatures [19] - [30] .

In this paper, we extend the modulus-based matrix splitting iteration methods to solve the large-scale stochastic linear complementarity problems. We also prove the convergence of these methods when the coefficient matrix is a positive definite matrix or a positive semi-definite matrix. The numerical results show that these methods are efficient.

The outline of the paper is as follows. In Section 2 we present some necessary results and lemmas. In Section 3 we establish the modulus-based matrix splitting iteration methods for solving the SLCP. The convergence of the methods is proved in Section 4. The numerical results are shown in Section 5. Finally, in Section 6, we give some concluding remarks.

2. Preliminaries

In this section, we briefly introduce some necessary results and lemmas.

Let

, A is said to be positive semi-definite if

for all

, and positive definite if

for all

.

is called a

-matrix if all of its principle minors are nonnegative.

Let

be a probability space, where

is a sample subset of

. Suppose that probability distribution is known, we consider the stochastic linear complementarity problem (SLCP): finding a vector

such that

(1)

where

and

are the rand matrices and vectors for

, respectively.

Usually there not exists z for all

for Problem (1). In order to get a reasonable solution of (1), in this paper we use the EV formulation proposed by Gurkan et al. [1] .

The Expected Value (EV) Formulation [1] :

Let

,

,

, and E be the expectation. We consider the following EV formulation: finding a vector

such that

(2)

We briefly denote it as

.

Define

where the min operator denotes the componentwise minimum of two vectors. It is generally known that

solves the

if and only if

solves the equations

The function RES is called the natural residual of the

and is often used in error analysis.

Lemma 1 (see [8] ) Let

be a scalar, then the

(2) is equivalent to the following fixed-point problem: finding

, satisfying that

(3)

Moreover, if x is the solution of (3), then

,

(4)

define a solution pair of Problem (2). On the other hand, if the vector pair z and r solves Problem (2), then

solves the fixed-point problem (3).

3. Modulus-Based Matrix Splitting Iteration Methods

In this section, we aim at the EV formulation of the stochastic linear complementarity problem (2). We give some corresponding modulus-based matrix splitting iteration methods.

For the strong monotone stochastic linear complementarity problem, the coefficient matrix is positive definite. For this case, we can apply the method proposed by Dong and Jiang [8] .

Method 3.1

Step 1: Select an arbitrary initial vector

and set

;

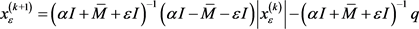

Step 2: Calculate

through the iteration scheme

Step 3: Let

, if

satisfies the termination rule, then stop; otherwise, set

and return to Step 2.

Unfortunately, the coefficient matrices of some stochastic linear complementarity problems are positive semi-definite, Method 3.1 is not suitable for solving the problem (2). Cottle et al. [31] presented a regularization method. Based on this method, we establish a regularized modulus-based matrix splitting iteration method. To simplify the notation, we will denote

and

for

and

, and denote the regularization problem for

.

Method 3.2

Step 1: Select a positive number

and an arbitrary initial vector

, and set

;

Step 2: Generate the iteration sequence

through solving the following equations

Let

.

Step 3: Set

, where

is a positive number,

, and return to Step 2.

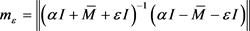

4. Convergence

In this section, we analyze the convergence of Method 3.1 and Method 3.2 when the coefficient matrix of the

is a symmetric positive definite matrix and a symmetric positive semi-definite matrix.

4.1. The Case of Symmetric Positive Definite Matrix

We first discuss the convergence of Method 3.1 when the coefficient matrix is symmetric positive definite.

Theorem 1 Suppose that the system matrix

is symmetric positive definite, then the sequence

generated by Method 3.1 converges to

.

Proof. By Lemma 1 we get

If

is a solution of (3), then

We can get that

Since matrix

is a symmetric positive definite, we have

where

denotes the set of all the eigenvalues of

. As

, it follows that

and thus

Hence, by the Banach contraction mapping theorem, we have the convergence of the infinite sequence

to the unique solution

of the fixed-point equation.

4.2. The Case of Symmetric Positive Semi-Definite Matrix

We now discuss the convergence of Method 3.2 when the coefficient matrix is symmetric positive semi-definite.

Lemma 2 (See [31] ) Let

be a

matrix,

be a decreasing sequence, where

is a positive scalar with

. For each k, let

be the unique solution of the

.

1) If

, then the sequence  is bounded; moreover, every accumulation point of

is bounded; moreover, every accumulation point of  solves the

solves the ;

;

2) If  is positive semi-definite and the

is positive semi-definite and the  is solvable, then the sequence

is solvable, then the sequence  converges to the least

converges to the least  -norm solution of

-norm solution of .

.

Theorem 2 Suppose that the system matrix  is symmetric positive semi-definite,

is symmetric positive semi-definite,  is a decreasing sequence, then the infinite sequence

is a decreasing sequence, then the infinite sequence  produced by Method 3.2 is bounded. Moreover, every accumulation point of

produced by Method 3.2 is bounded. Moreover, every accumulation point of  solves the

solves the ;

;

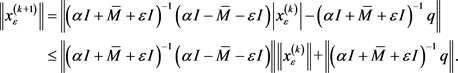

Proof Note that  is symmetric positive definite. By the Step (3) of Method 3.2, we can get

is symmetric positive definite. By the Step (3) of Method 3.2, we can get

Then

Let  and

and , there exist any positive numbers

, there exist any positive numbers ![]() and

and![]() , we have

, we have

![]() ,

, ![]() ,

,

Moreover,

![]()

Therefore

![]()

![]()

When![]() , the infinite sequence

, the infinite sequence ![]() is bounded. Since

is bounded. Since ![]() , we get that the sequence

, we get that the sequence ![]() is bounded.

is bounded.

By Lemma 2, we have that every accumulation point of ![]() solves the LCP

solves the LCP![]() . The proof is completed.

. The proof is completed.

5. Numerical Results

In this section, we test some numerical results to show the efficiency of our methods. Let![]() , n be the order of the matrix

, n be the order of the matrix![]() , IT denote the average iteration steps, and CPU denote the average iteration time.

, IT denote the average iteration steps, and CPU denote the average iteration time.

Let![]() . The steps to generate test problems can be found in the literature [4] . Numerical experimental results are shown in Tables 1-3.

. The steps to generate test problems can be found in the literature [4] . Numerical experimental results are shown in Tables 1-3.

Table 1 shows that Method 3.1 is effective when the coefficient matrix is symmetric positive definite.

Table 2 and Table 3 list the numerical experimental results of Method 3.2 when the coefficient matrix is symmetric positive semi-definite. We know that Method 3.2 is effective. (In the following, we briefly denote that Feasible Semi-smooth Newton Method is FSNM.)

Table 4 shows that, Method 3.2 is more effective than FSNM [19] . By Tables 1-4, we know that Method 3.2 improves the computational efficiency and is suitable for solving the large scale problems.

![]()

Table 1. The numerical results of Methods 3.1 (![]() ).

).

![]()

Table 2. The numerical results of Methods 3.2 (![]() ).

).

![]()

Table 3. The numerical results of Methods 3.2 (![]() ).

).

![]()

Table 4. Comparison of numerical results of Method 3.2 and FSNM [19] .

6. Conclusion

In this paper, we study the fast numerical methods for solving the stochastic linear complementarity problems. Firstly, we convert the expected value formulation of stochastic linear complementarity problems into the equivalent fixed point equations, then we establish a class of modulus-based matrix splitting iteration methods, and analyze the convergence of the method. These new methods can be applied to solve the large-scale stochastic linear complementarity problems. The numerical results also show the effectiveness of the new methods.

Acknowledgements

This work was supported by Natural Science Foundation of China (11661027), National Project for Research and Development of Major Scientific Instruments (61627807), and Guangxi Natural Science Foundation (2015 GXNSFAA 139014).