Automated Extraction and Analysis of CBC Test from Scanned Images ()

1. Introduction

With the widespread use of health applications for follow-up many applications have been developed to aid patients and those who care about their health life. Users perceive technology as a means to improve their engagement with routine medical procedures, notably in the context of managing and interpreting blood test records. Specifically, the Complete Blood Count (CBC) provides valuable insights into one’s health. Although the information may initially be unclear to patients, a more in-depth understanding typically necessitates additional exploration and explanation from a medical specialist. This research focuses on analyzing and providing a tool for developers to extract and analyze Blood test records. This provides tools for developers to use an integrated tool to develop a health application by integrating a scanning system to capture or using uploaded reports for analysis. Some limitations have been found in those applications during investigating them.

Blood test report is part of the routine medical care and for some people, it can be a routine health examination to keep up following their health condition such as monitoring Hemoglobin or Cholesterol levels. The lack of investigating blood test either by ignoring some important factors or search or look for an extra information and explanation can lead to problems due to lack of one’s knowledge. Blood tests are important as it can discover deceases as anemia, infection, and even blood cancer, but many patients can’t understand it, and this could put them at risk if they do not take a proper needed action. Although CBC tests are common, many people have a CBC performed when they have a routine health checkup, but they can’t understand it without a physician. On the other hand, technology plays a major role in transforming knowledge and understanding. It made it easy for us to understand and can be more intelligible when we discuss our test results with a physician or another qualified health care professional.

Automated extraction and evaluation of CBC parameters are critical for health care applications for several reasons. Automation is much faster to than manual methods as easy and faster diagnosis and treatment are often critical to make rapid decisions, especially in emergency situations. Automated analysis improves the reliability of diagnostic information and minimizes the risk of human error associated with wrong interpretation which results can have profound consequences. Moreover, automated CBC parameter extraction can be seamlessly integrated with electronic health record systems especially in developing countries, facilitating easy access to patient data by healthcare professionals. This integration enhances overall patient care by providing a comprehensive view of the patient’s health history. On the other hand, automated extraction of CBC parameters contributes to the generation of large datasets that can be used for research and data analytics, helping in identifying trends, patterns, and correlations, leading to advancements in medical knowledge and treatment strategies.

2. Background

2.1. Health Applications

The use of smart phones has been growing rapidly as over 2.5 billion people use smartphones globally, which accounts for 33% of the global population. Since 2014, the number of smartphone users has increased at a rate of 10% annually, which has led to a rapid rise in mobile applications [1] .

Interest in health apps has been expanding. There are different apps in the smartphones stores that offer a wide variety of health care features. The growth of these apps is continuing because of the demand from users and the widespread use of smartphones in general. Health care apps includes a wide range of categories that includes disease diagnosis, drug references, medical calculators, clinical communication, Hospital Information System (HIS), medical training, medical education, and general healthcare. Healthcare professionals and medical or nursing students found disease diagnosis, drug reference, and medical calculator applications to be the most beneficial among the various categories. Medical apps transform smartphones into valuable assets for evidence-based medicine at the point of care, complementing their role in mobile clinical communication. Furthermore, smartphones have significant potential in educating patients, enabling disease self-management, and remotely monitoring patients, highlighting their crucial role in healthcare [2] .

The increasing favorability of health apps has captured the interest of researchers. Health and fitness apps surged by 330% in a mere span of 3 years. The global mHealth app market was worth approximately 43.5 billion in 2022 and it is expected to expand at a compound annual growth rate (CAGR) of 11.6% from 2023 to 2030 (Figure 1). China Market profits of the digital health market reached $2.68 billion in 2018 and COVID-19 pandemic led to a high usage of health apps, as in March 2020 there were 40% rise in health and fitness app downloads [3] [4] .

2.2. Complete Blood Count Test (CBC)

The Complete Blood Count (CBC) test is a fundamental tool for healthcare professionals, enabling them to detect and monitor various health conditions. It

![]()

Figure 1. Growth in health application market [3] .

offers a comprehensive analysis of various blood components offering critical insights into a person’s overall health status. This routine test measures red blood cells (RBCs), white blood cells (WBCs), platelets, Hemoglobin (Hb), and Hematocrit levels (Hct), providing invaluable insights into a person’s physiological well-being. It is used to detect a variety of disorders, such as anemia, infection, and blood cancers [5] .

CBC test serves as a tool for monitoring health and disease progression such as chronic conditions or chemotherapy treatments. It enables healthcare providers to make informed decisions and adjustments in treatment plans based on dynamic blood parameters.

A standard CBC test typically counts the number of red blood cells, white blood cells, hemoglobin levels, hematocrit level, and platelets per volume of blood. Abnormalities in these counts can indicate underlying health issues. High white blood cell counts in CBC signal the presence of infections, inflammations, or leukemia. Platelet counts help assess clotting disorders and tendencies toward bleeding disorders, while high counts might indicate certain conditions. Hemoglobin carries oxygen throughout the body and low levels of hemoglobin might indicate anemia. Mean Corpuscular Volume (MCV), Mean Corpuscular Hemoglobin (MCH), and Mean Corpuscular Hemoglobin Concentration (MCHC) parameters help to evaluate the size, weight, and concentration of hemoglobin in red blood cells, offering additional insight into different types of anemia. In general, CBC test assists in diagnosing disorders and diseases, guiding treatment plans, and monitoring the response to therapies. Regular CBC tests are often recommended as a part of routine health check-ups, helping individuals maintain optimal health and catch potential issues early on [5] [6] .

Approximately 80% of doctors’ decisions regarding medical care rely on the data presented in laboratory reports [7] . Creating a standard range that is considered normal is crucial for accurately identifying diseases, considering age, sex, race, genetic diversity, and environmental elements. Over the past twenty years, there has been significant advancement in Full Blood Count (FBC) techniques, where automated methods have superseded manual ones. Modern hematology analyzers use technological progressions to precisely analyze complete blood counts. Moreover, new blood cell parameters have emerged to assist in diagnosing and treating numerous blood-related conditions [8] .

When assessing cell counts, they are compared to those of healthy individuals of similar age and gender. Any abnormal reading which is beyond the normal reading is considered blood cell disorder like Leukemia, Aplastic Anemia, and others. If a count falls either above or below the typical range, the physician will seek to understand the reason behind these atypical results. Table 1 shows the rough standard ranges for blood cell counts in healthy adults [9] .

This research paper focuses on the use of the CBC test normal range as a tool to diagnose abnormality in the given blood test with the use of technology and to aid doctors in interpreting the results and give appropriate advice to the patients.

![]()

Table 1. Normal blood count for men and women [9] .

2.3. Optical Character Recognition (OCR)

Optical Character Recognition (OCR) has been developed as a transformative tool in converting printed or handwritten text into digital formats, facilitating data entry, storage, and manipulation across various sectors. It was initially developed to convert typewritten text into machine-encoded text. Early systems struggled with handwriting recognition and limited language support. However, advancements in machine learning, artificial intelligence, and computer vision have propelled OCR to recognize different languages, various fonts, and even handwritten text with high accuracy [10] [11] .

OCR is widely used in applications across diverse sectors. In healthcare, it aids in extracting and digitizing patient records from handwritten notes for efficient management and analysis [12] . Furthermore, it is used for document digitization, enabling searchable databases, and streamlined workflows [13] . Banking institutions rely on OCR for automated data extraction from financial documents, enhancing efficiency and accuracy in processing [14] .

Modern technologies have integrated OCR into everyday applications. Translation services like Google Translate utilize OCR to instantly translate printed text captured through smartphone cameras [15] . Mobile applications employ OCR for tasks like digitizing business cards, scanning receipts, and extracting information from images for various purposes [16] .

Despite significant advancements, OCR still faces challenges, particularly in accurately recognizing complex layouts, poor-quality images, or handwritten text with varying styles. Future directions involve improving OCR algorithms, enhancing accuracy in diverse settings, and integrating it seamlessly into emerging technologies like augmented reality and smart assistants [17] .

Tesseract OCR is an open-source software widely used for text recognition from images or scanned documents. Developed by Hewlett-Packard Laboratories in 1980 and later maintained and enhanced by Google as an open-source project in 2005. Since then, it has seen substantial community-driven development, resulting in improved accuracy, language support, and performance. It is used as an accurate tool to extract extraction from various sources, including printed materials, handwriting, and other image-based documents. Its functionality involves the recognition of text characters from scanned documents or images, using machine learning algorithms and neural networks. Tesseract analyzes the input image, identifies its characters, and converts it into readable text. It can recognize multiple languages and handle various font types contribute to its versatility and widespread use [11] [18] .

In academic research, Tesseract has been a subject of interest for its potential in data extraction, digitization of historical documents, and aiding in text analysis across disciplines. For instance, a study by Smith et al. (2020) explored the efficacy of Tesseract OCR in digitizing and extracting text from archival materials, showcasing its applicability in preserving and analyzing historical documents [19] . Moreover, Tesseract OCR has found practical implementation in various industries. In healthcare, it assists in digitizing patient records, facilitating data management and analysis [20] . In banking and finance, it aids in processing financial documents and automating data extraction, enhancing operational efficiency [21] .

Tesseract’s flexibility and compatibility with different platforms, including Android applications, have made it a popular choice among developers for integrating text recognition capabilities into mobile apps. Its availability as a specialized library for Android applications has broadened its usage in extracting text from images captured through mobile devices [22] .

In conclusion, Tesseract OCR stands as a versatile and powerful tool for text recognition, offering applications across diverse fields such as academia, healthcare, finance, and mobile technology. As this research concerns analyzing paper-based blood test information, OCR is used to extract test results. It is used to scan printed text and then convert it to a digital/electronic form in a computer for further analysis according to normal blood count. This will aid doctors and patients to be aware of their health conditions simultaneity.

3. Related Work

A comparative study conducted to study various Optical Character Recognition (OCR) systems within Health Information Systems. It examines and compares different OCR technologies to determine their efficiency, accuracy, and suitability for use in health-related information systems. The study drew conclusions on the performance of three libraries. First, PyTesseract library exhibited high precision but longer runtime, making it suitable when time isn’t critical. TesseOCR showed faster execution, especially in lower-quality images, making it ideal for speed-centric processes. PyOCR had better execution times than PyTesseract but lagged behind TesseOCR, excelling in larger analysis areas where image preprocessing isn’t possible. Each library’s strengths make them suitable for specific scenarios based on factors like precision, speed, and the image analysis area [23] .

Another research addresses the challenge of digitizing paper-based laboratory reports, proposing an end-to-end Natural Language Processing (NLP) pipeline. The system comprises OCR module and an Information Extraction (IE) module. The OCR module identifies text from scanned reports, while the IE module extracts meaningful data to create digitalized tables. The study evaluates the pipeline’s performance using 153 laboratory reports, achieving an average text detection and recognition accuracy of 93% and an overall F1 score of 86% for extracting test item name, result, unit, and reference range. The system exhibits efficient processing, with an average inference time of 0.78 seconds per report using a single CPU. The study concludes that this lightweight pipeline offers practical and accurate digitization of diverse paper-based laboratory reports, feasible for real clinical settings with minimal computing resources. The robust performance on actual hospital data validates the pipeline’s viability for widespread adoption [24] .

A study in Bangladesh’s healthcare system identifies the lack of a proper referral system and the absence of comprehensive medical histories hindering accurate diagnoses. To address this, the paper proposes a system that creates medical histories using images of prescriptions. The system involves text extraction, classification, and conversion using OCR and deep learning techniques. It categorizes information into symptoms, medicines, diagnostic tests, and others, utilizing VGG-16, a convolutional neural network architecture primarily used for image recognition and Bidirectional Encoder Representations from Transformers BERT, a language representation model developed by Google that revolutionized natural language processing (NLP) for text classification. The authors stated that the system’s promising performance indicates its potential to improve healthcare, not only in Bangladesh but in any country facing similar challenges [25] .

Kumar, A., Goyal et al., introduces an OCR-based system designed for analyzing medical prescriptions and reports solving prevalent issue of improperly written prescriptions and reports, posing risks to patient safety due to difficulties in understanding and monitoring medical documents. To address this, the MPRA model (OCR-based Medical Prescription and Report Analyzer) aims to aid patients by extracting handwritten and printed text from images using Handwriting Character Recognition (HCR) and Printer Character Recognition (PCR) techniques. The model employs image processing methods to refine images, aiming to provide a more accurate and easily understandable output, thus assisting patients in comprehending their reports and prescribed medication [26] .

Mannino, R. G., Myers, D. R., et al., introduced anemia screening smartphone application that is designed for non-invasive detection of anemia using photos provided by patients. The app aims to analyze certain visual characteristics of fingernails captured in photos, potentially indicating anemia without the need for invasive blood tests. By utilizing smartphone cameras, the app intends to assess specific markers or features visible in fingernails’ photos, enabling a preliminary assessment of anemia. This non-invasive approach could potentially advance how anemia is screened and diagnosed, offering a convenient and accessible method for individuals to monitor their health [27] . Another study aimed to create a comprehensive mobile application to aid manual blood cell differential count and assess its usability among undergraduate medical students. The application, developed for Android devices, was validated by experienced pathologists, and offered features for various differential counts, including WBC, platelets, reticulocytes, malaria parasite quantification, and bone marrow. Usability testing among 60 participants revealed high ratings for ease of use (95%), time efficiency (91.7%), and helpfulness in healthcare practice learning (96.7%). The app received an overall high usability score of 6.11, indicating its effectiveness in aiding manual blood cell count and its positive reception among users [28] .

4. The Proposed System

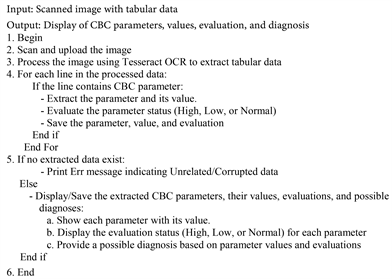

The proposed system model as seen in Figure 2 has four stages to perform the analysis for the blood test. As the model aims to make instant support for CBC test analysis to patients using image processing to their CBC test results, this requires scanning and uploading the blood test results. The second stage is extracting tabular data directly from an image. For this purpose, Optical Character Recognition (OCR) techniques will be used to deal with tables in image format (like PNG, JPEG, etc.). Tesseract OCR is a popular choice for performing OCR in Java. It has faster execution, especially in lower-quality images, making it ideal for speed-centric processes. Extracting structured table data directly from an image requires additional processing to interpret and format the data correctly is evolved in the third stage. To parse extracted text into a structured table format, the text output needs to be analyzed to identify table rows, columns, and data structures.

Finally the extracted data will be evaluated to normal range to specific gender and given to the user for awareness and future reference, but it’s crucial for patients to consult with a healthcare specialist, commonly a doctor or hematologist, to precisely evaluate the CBC test results and make a proper diagnosis. They usually examine the overall clinical picture, patient symptoms, medical history, and potentially order more blood tests or investigations to identify the cause of any abnormalities found in the CBC test. Algorithm 1 is adopted to extract and process the data from scanned image results.

Algorithm 1. Scanned blood test analyzer.

To test Algorithm 1, an application has been developed to load images and process them. Proper high-quality images show accurate and useful information. The application considers the fault images as no related results. The only limitation for this app is low quality CBC test images.

5. Discussion

Implementation of the application has been developed based on Optical Character Recognition (OCR) techniques which are used to extract text data from images with different formats (like PNG, JPEG, etc.). Tesseract OCR is used within Java application to simplify the process for data extraction. The main application screenshot is shown in Figure 3.

It has left panel that will hold the loaded CBC test image, the right panel will hold the extracted CBC results along with the analysis of the main five parameters in CBC test data that includes red blood cells, white blood cells, hemoglobin levels, hematocrit level, and platelets. On the top-left corner (menu bar) has two main file options.

First, option allows the user to open the scanned image to be analyzed, and the second option allows the user to save the CBC test results and the analysis report. On the bottom panel the user selects the gender as the results will vary due to standard ranges for blood cell counts in healthy adults.

To start the process the user clicks on the file menu to open the image. If the image is opened successfully, Tesseract OCR will extract the image tabular data into normal text format. Once the text is extracted, the process of analysis will be started and the report is displayed on the right panel, see Figure 4. On the other hand, if the image extracted information is not valid an error message is displayed. As seen in Figure 5, Tesseract OCR data extraction was not correct and corrupted because of the image quality and this resulted in the application as an error message. Some tested images provide some of CBC test parameters, but not all for the same reason and the application will only show the correct extracted parameters results. This leads to high accuracy and trusted results as only correct data extractions is analyzed and presented to the user in the application interface and can be saved for future reference. It only exports correct data that has been extracted from scanned documents giving an instant report that includes correct extracted data along with full analysis for the results with the standards measurements listed in Table 1. The application is an entry point for other blood test diagnosis as it can be generalized to cover all type of tests using the same algorithm to extract data and then analyses these data according to its medical evaluation boundaries taking the account of the gender differences.

![]()

Figure 3. Blood test analyzer application interface.

![]()

Figure 4. Scanned CBC test with its analysis report.

![]()

Figure 5. Error caused by low qulity images.

The application has been evaluated on different types of images to diagnose the efficiency of the used algorithm and the application. The overall results showed a high percentage of accuracy if the image quality is high. The overall time complexity would be approximately O(n) + O(m) + O(k), considering “n” as the image size, “m” as OCR complexity, and “k” as the number of lines in the processed data containing CBC parameters.

6. Conclusion

Image processing and OCR techniques in CBC test analysis represent a pivotal advancement in enhancing medical diagnostics, providing rapid, insightful information for both patients and healthcare specialists. The system presents a substantial impact, empowering patients with accessible health awareness, while giving healthcare professionals, such as doctors and hematologists, an expedited tool for informed analysis. While acknowledging limitations, it offers a valuable avenue for improving diagnostic processes and patient understanding. It aids patients to comprehend their health status and offers a rapid analysis platform for healthcare professionals. The limitation of this work is the challenges with image variability. The proposed system shows high performance as the extracted data was extracted with high accuracy from high quality images. Despite high accuracy, inconsistencies in image quality and formatting pose challenges, affecting the precision of table structure retention. For future, this work can be extended to be generalized for all types of blood tests and work on image enhancement for low quality images prior to data extraction.