Received 22 September 2015; accepted 14 December 2015; published 17 December 2015

1. Introduction

Holevo’s theorem [1] is one of the pillars of quantum information theory. It can be informally summarized as follows: “It is not possible to communicate more than n classical bits of information by the transmission of n qubits alone”. It therefore sets a useful upper bound on the rate of classical information transmission through a quantum channel.

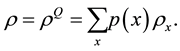

Suppose Alice prepares a state $\rho_X$ in some system Q, where $X = 0, \ldots, n$, with probabilities $p_0, \ldots, p_n$. Bob performs a measurement described by the POVM elements $\{E_y\} = \{E_0, \ldots, E_m\}$ on that state, with measurement outcome Y. Let

$$\rho = \sum_x p_x \rho_x. \tag{1}$$

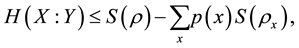

The Holevo bound states that [2]

$$H(X:Y) \le S(\rho) - \sum_x p_x S(\rho_x), \tag{2}$$

where S is the von Neumann entropy and $H(X:Y)$ is the Shannon mutual information of X and Y. Recent proofs of the Holevo bound can be found in [3] [4].
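As a numerical sanity check of (2), the following Python sketch compares the Holevo quantity $\chi = S(\rho) - \sum_x p_x S(\rho_x)$ with the Shannon mutual information obtained from a measurement. It is restricted to qubits so that the eigenvalues have a closed form. The ensemble ($|0\rangle$ and $|+\rangle$ with probability 1/2 each), the computational-basis measurement and all function names are illustrative assumptions, not taken from the text.

```python
import math


def shannon(probs):
    """Shannon entropy in bits; zero-probability terms contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 1e-15)


def qubit_eigenvalues(rho):
    """Closed-form eigenvalues of a 2x2 Hermitian matrix with unit trace."""
    a, b, d = rho[0][0].real, rho[0][1], rho[1][1].real
    gap = math.sqrt((a - d) ** 2 + 4 * abs(b) ** 2)
    return [(1 + gap) / 2, (1 - gap) / 2]


def von_neumann(rho):
    """S(rho) in bits, computed from the eigenvalues of rho."""
    return shannon(qubit_eigenvalues(rho))


def trace_of_product(a, b):
    """Tr(AB) for square matrices given as nested lists."""
    n = len(a)
    return sum(a[i][k] * b[k][i] for i in range(n) for k in range(n))


def holevo_chi(probs, states):
    """Right-hand side of (2): S(rho) - sum_x p_x S(rho_x), rho = sum_x p_x rho_x."""
    rho = [[sum(p * s[i][j] for p, s in zip(probs, states)) for j in range(2)]
           for i in range(2)]
    return von_neumann(rho) - sum(p * von_neumann(s) for p, s in zip(probs, states))


def mutual_information(probs, states, povm):
    """Left-hand side of (2): H(X:Y) = H(Y) - H(Y|X), with p(y|x) = Tr(E_y rho_x)."""
    cond = [[trace_of_product(e, s).real for e in povm] for s in states]
    p_y = [sum(p * c[y] for p, c in zip(probs, cond)) for y in range(len(povm))]
    return shannon(p_y) - sum(p * shannon(c) for p, c in zip(probs, cond))


# Illustrative ensemble: |0><0| and |+><+| with probability 1/2 each,
# measured with the computational-basis projectors.
ket0 = [[1.0, 0.0], [0.0, 0.0]]
plus = [[0.5, 0.5], [0.5, 0.5]]
basis = [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 1.0]]]

chi = holevo_chi([0.5, 0.5], [ket0, plus])
info = mutual_information([0.5, 0.5], [ket0, plus], basis)
print(f"H(X:Y) = {info:.4f} <= chi = {chi:.4f}")
```

For this ensemble the measurement extracts about 0.311 bits, while $\chi \approx 0.601$ bits, so the bound is not saturated by this particular measurement.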

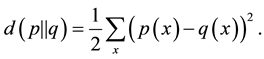

Consider the following trace distance between two probability distributions $p(x)$ and $p'(x)$ on X (note that the trace distance here differs from the one used in [2], chapter 9, by a square):

$$D(p, p') = \frac{1}{2}\sum_x \big(p(x) - p'(x)\big)^2. \tag{3}$$

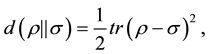

We can extend the definition to density matrices $\rho$ and $\sigma$:

$$D(\rho, \sigma) = \frac{1}{2}\operatorname{Tr}(\rho - \sigma)^2, \tag{4}$$

where we use $\operatorname{Tr}(\rho - \sigma)^2$ for $\operatorname{Tr}\big[(\rho - \sigma)^2\big]$. This is known as the Hilbert-Schmidt (HS) norm [5]-[7] (more precisely, it is one half of the squared HS norm of $\rho - \sigma$). Recently, the above distance measure was termed the “logical divergence” of two densities [8].
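A minimal Python sketch of the divergences (3) and (4), assuming the factor 1/2 implied by the remark that D is one half of the squared HS norm. Because $\rho - \sigma$ is Hermitian, $\operatorname{Tr}(\rho - \sigma)^2$ equals the sum of the squared moduli of its entries, which avoids a matrix product. The function names are hypothetical.

```python
def classical_divergence(p1, p2):
    """Equation (3), assuming the 1/2 factor: D = (1/2) sum_x (p1(x) - p2(x))^2."""
    return 0.5 * sum((a - b) ** 2 for a, b in zip(p1, p2))


def hs_divergence(rho, sigma):
    """Equation (4), assuming the 1/2 factor: D = (1/2) Tr[(rho - sigma)^2].

    rho - sigma is Hermitian, so Tr[(rho - sigma)^2] is the sum of the squared
    moduli of its entries, i.e. the squared Hilbert-Schmidt norm.
    """
    n = len(rho)
    return 0.5 * sum(abs(rho[i][j] - sigma[i][j]) ** 2
                     for i in range(n) for j in range(n))


def diag(p):
    """Embed a probability vector as a diagonal density matrix."""
    return [[p[i] if i == j else 0.0 for j in range(len(p))] for i in range(len(p))]


p1, p2 = [0.5, 0.5], [0.9, 0.1]
# For commuting (diagonal) densities the quantum divergence reduces to Equation (3).
print(classical_divergence(p1, p2), hs_divergence(diag(p1), diag(p2)))

# For pure states |psi>, |phi> the divergence equals 1 - |<psi|phi>|^2; here |0> and |+>.
print(hs_divergence([[1.0, 0.0], [0.0, 0.0]], [[0.5, 0.5], [0.5, 0.5]]))
```

On diagonal densities the two definitions coincide, which is the sense in which (4) extends (3).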

We prove a Holevo-type upper bound on the mutual information of X and Y, where the mutual information is now written in terms of the HS norm instead of the Kullback-Leibler divergence. Ellerman [8] has recently suggested that employing the HS norm in the formulation of classical mutual information is natural. This is consistent with the identification of information as a measure of distinction [8]. Note that employing the Kullback-Leibler divergence in the standard form of the Holevo bound gives an expression that can be identified with the quantum mutual information; however, the “coherent information” is considered a more appropriate expression (see also [2], chapter 12). In view of the above, we take Ellerman’s idea a step further and write a Holevo-type bound based on the HS norm.

The question of whether $I(A:B) = S(\rho_A) + S(\rho_B) - S(\rho_{AB})$ is the right measure of quantum mutual information was discussed in [9], within the context of area laws. It was used there to provide an upper bound on the correlations between two distant operators $M_A$ and $M_B$, where A is a region inside a spin grid and B is its complement:

$$I(A:B) \ge \frac{C(M_A, M_B)^2}{2\,\|M_A\|^2\,\|M_B\|^2}, \tag{5}$$

where $C(M_A, M_B) = \langle M_A \otimes M_B \rangle - \langle M_A \rangle \langle M_B \rangle$ is the correlation function of $M_A$ and $M_B$.

In addition, the HS norm was suggested as an entanglement measure [6] [10]; however, this was criticized in [11] on the grounds that it does not fulfill the so-called CP non-expansive property (i.e., being non-increasing under every completely positive trace-preserving map).

In the following, we prove a Holevo-type bound on the above HS distance between the probability $p(x,y)$ on the product space $X \times Y$ and the product of its marginal probabilities $p(x)p(y)$:

$$D\big(p(x,y),\, p(x)p(y)\big) \le q\, D\big(\rho^{PQ},\, \rho^{P} \otimes \rho^{Q}\big), \tag{6}$$

where

$$\rho^{PQ} = \sum_x p_x\,|x\rangle\langle x| \otimes \rho_x \tag{7}$$

and where

$$\rho^{P} = \operatorname{Tr}_Q\,\rho^{PQ} = \sum_x p_x\,|x\rangle\langle x|, \tag{8}$$

$$\rho^{Q} = \operatorname{Tr}_P\,\rho^{PQ} = \sum_x p_x\,\rho_x \tag{9}$$

are the partial traces of $\rho^{PQ}$, and q is the dimension of the space Q. Note that both sides of Inequality (6) are measures of mutual information. Therefore, our claim is that the classical HS mutual information is bounded by the corresponding quantum one multiplied by the dimension of the quantum density matrices used in the channel. We will also show that

![]() (10)


where ![]() and ![]() are the Tsallis entropies [12]

$$T(\rho) = 1 - \operatorname{Tr}\rho^2, \tag{11}$$

also known as the linear entropy (one minus the purity [13]) or the logical entropy of $\rho$ [8].
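Equations (7)-(9) and (11) can be made concrete with a short Python sketch, assuming the standard second-order form $T(\rho) = 1 - \operatorname{Tr}\rho^2$ for the Tsallis entropy. It builds $\rho^{PQ}$ for a small ensemble chosen only for illustration, takes both partial traces, and evaluates T on each; all helper names are hypothetical.

```python
def kron(a, b):
    """Kronecker (tensor) product of nested-list matrices; first factor is the slow index."""
    rb, cb = len(b), len(b[0])
    return [[a[i // rb][j // cb] * b[i % rb][j % cb] for j in range(len(a[0]) * cb)]
            for i in range(len(a) * rb)]


def ket_bra(n, k):
    """The projector |k><k| in dimension n."""
    return [[1.0 if i == j == k else 0.0 for j in range(n)] for i in range(n)]


def joint_state(probs, states):
    """rho^{PQ} = sum_x p_x |x><x| (on P) tensor rho_x (on Q), Equation (7)."""
    n, q = len(probs), len(states[0])
    total = [[0.0] * (n * q) for _ in range(n * q)]
    for x, (p, rho_x) in enumerate(zip(probs, states)):
        block = kron(ket_bra(n, x), rho_x)
        for i in range(n * q):
            for j in range(n * q):
                total[i][j] += p * block[i][j]
    return total


def trace_out_second(rho, d1, d2):
    """Partial trace over the second factor of a (d1*d2) x (d1*d2) matrix."""
    return [[sum(rho[i * d2 + k][j * d2 + k] for k in range(d2)) for j in range(d1)]
            for i in range(d1)]


def trace_out_first(rho, d1, d2):
    """Partial trace over the first factor of a (d1*d2) x (d1*d2) matrix."""
    return [[sum(rho[i * d2 + k][i * d2 + l] for i in range(d1)) for l in range(d2)]
            for k in range(d2)]


def tsallis(rho):
    """T(rho) = 1 - Tr(rho^2); for Hermitian rho, Tr(rho^2) is the sum of |rho_ij|^2."""
    return 1.0 - sum(abs(x) ** 2 for row in rho for x in row)


# Illustrative two-state ensemble on a qubit Q (not taken from the text).
probs = [0.5, 0.5]
states = [[[1.0, 0.0], [0.0, 0.0]], [[0.5, 0.5], [0.5, 0.5]]]

rho_pq = joint_state(probs, states)
rho_p = trace_out_second(rho_pq, 2, 2)  # Equation (8): diag(p_x), a balanced coin here
rho_q = trace_out_first(rho_pq, 2, 2)   # Equation (9): sum_x p_x rho_x
print(rho_p, rho_q)
print(tsallis(rho_p), tsallis(rho_q), tsallis(kron(rho_p, rho_q)))
```

A balanced coin gives $T = 1/2$, and the product value $0.625 = 0.5 + 0.25 - 0.5 \cdot 0.25$ illustrates $T(a \otimes b) = T(a) + T(b) - T(a)T(b)$, which follows from $\operatorname{Tr}(a \otimes b)^2 = \operatorname{Tr}a^2 \operatorname{Tr}b^2$.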

All of the above is proved for projective measurements. However, we expect similar results for general POVMs, in light of Naimark’s dilation theorem (see [14] or [15], for instance).

In the next section we review some basic properties of the quantum logical divergence and then use these properties to prove the new Holevo-type bound.

2. The HS Norm and the Holevo-Type Bound

Let

![]() (12)


In what follows we recall some basic properties of the HS distance measure, then we state and prove the main result of this paper.

Theorem 2.1. Contractivity of the HS norm with respect to projective measurements

Let $\rho$ and $\sigma$ be two density matrices of a system S. Let $\Phi$ be the trace-preserving operator

$$\Phi(\rho) = \sum_i P_i\,\rho\,P_i, \tag{13}$$

where the projections $P_i$ satisfy $\sum_i P_i = I$, and $P_i^2 = P_i$ and $P_i^\dagger = P_i$ for every i. Then

$$D\big(\Phi(\rho), \Phi(\sigma)\big) \le D(\rho, \sigma). \tag{14}$$

Proof: Write $X = \rho - \sigma$. Then X is Hermitian with bounded spectrum, and by linearity $\Phi(\rho) - \Phi(\sigma) = \Phi(X)$. Using Lemma 2 in [16] we conclude that

$$D\big(\Phi(\rho), \Phi(\sigma)\big) = \frac{1}{2}\operatorname{Tr}\,\Phi(X)^2 \le \frac{1}{2}\operatorname{Tr} X^2 = D(\rho, \sigma). \tag{15}$$
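The contraction in (14) can be observed numerically. The following Python sketch uses hypothetical helper names, real random density matrices generated as $AA^{T}/\operatorname{Tr}(AA^{T})$, an illustrative two-outcome projective measurement on a qutrit, and the 1/2-normalised divergence; it records the largest ratio $D(\Phi(\rho),\Phi(\sigma))/D(\rho,\sigma)$ over random pairs.

```python
import random


def matmul(a, b):
    """Matrix product of nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def hs_divergence(rho, sigma):
    """D(rho, sigma) = (1/2) Tr[(rho - sigma)^2] for Hermitian arguments."""
    n = len(rho)
    return 0.5 * sum(abs(rho[i][j] - sigma[i][j]) ** 2 for i in range(n) for j in range(n))


def random_density(n, rng):
    """Random real density matrix A A^T / Tr(A A^T): symmetric, positive, unit trace."""
    a = [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(n)]
    m = [[sum(a[i][k] * a[j][k] for k in range(n)) for j in range(n)] for i in range(n)]
    tr = sum(m[i][i] for i in range(n))
    return [[x / tr for x in row] for row in m]


def pinch(rho, projectors):
    """Phi(rho) = sum_i P_i rho P_i, as in Equation (13)."""
    n = len(rho)
    out = [[0.0] * n for _ in range(n)]
    for p in projectors:
        term = matmul(matmul(p, rho), p)
        for i in range(n):
            for j in range(n):
                out[i][j] += term[i][j]
    return out


# Illustrative projective measurement on a qutrit: ranks 2 and 1, summing to I.
P0 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
P1 = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0], [0.0, 0.0, 1.0]]

rng = random.Random(7)
worst_ratio = 0.0
for _ in range(200):
    rho, sigma = random_density(3, rng), random_density(3, rng)
    after = hs_divergence(pinch(rho, [P0, P1]), pinch(sigma, [P0, P1]))
    worst_ratio = max(worst_ratio, after / hs_divergence(rho, sigma))
print(f"largest D(Phi(rho), Phi(sigma)) / D(rho, sigma): {worst_ratio:.4f}")
```

The pinching keeps only the diagonal blocks $P_i X P_i$ of $X = \rho - \sigma$, while $\operatorname{Tr}X^2$ also counts the off-diagonal blocks $P_i X P_j$ with $i \ne j$; this is why the ratio cannot exceed 1.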

Theorem 2.2. The joint convexity of the HS norm

The logical divergence $D(\rho, \sigma)$ is jointly convex.

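Proof: First observe that $X \mapsto \operatorname{Tr} X^2$ is convex on Hermitian matrices, by the convexity of $t \mapsto t^2$ and the linearity of the trace. Next we can write

$$D\Big(\sum_i p_i \rho_i,\ \sum_i p_i \sigma_i\Big) = \frac{1}{2}\operatorname{Tr}\Big(\sum_i p_i(\rho_i - \sigma_i)\Big)^2 \le \sum_i p_i\,\frac{1}{2}\operatorname{Tr}(\rho_i - \sigma_i)^2 = \sum_i p_i\, D(\rho_i, \sigma_i), \tag{16}$$

where the inequality is due to the convexity of $X \mapsto \operatorname{Tr} X^2$. This establishes the joint convexity.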
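Inequality (16) can be spot-checked in the same spirit. This Python sketch, with hypothetical helpers and randomly generated real qubit densities and weights, compares the divergence of the mixtures with the mixture of the divergences and records the largest difference.

```python
import random


def hs_divergence(rho, sigma):
    """D(rho, sigma) = (1/2) Tr[(rho - sigma)^2] for Hermitian arguments."""
    n = len(rho)
    return 0.5 * sum(abs(rho[i][j] - sigma[i][j]) ** 2 for i in range(n) for j in range(n))


def random_density(n, rng):
    """Random real density matrix A A^T / Tr(A A^T)."""
    a = [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(n)]
    m = [[sum(a[i][k] * a[j][k] for k in range(n)) for j in range(n)] for i in range(n)]
    tr = sum(m[i][i] for i in range(n))
    return [[x / tr for x in row] for row in m]


def mix(weights, mats):
    """The convex combination sum_i w_i M_i of equally sized square matrices."""
    n = len(mats[0])
    return [[sum(w * m[i][j] for w, m in zip(weights, mats)) for j in range(n)]
            for i in range(n)]


rng = random.Random(11)
largest_gap = float("-inf")
for _ in range(200):
    raw = [rng.random() + 1e-9 for _ in range(3)]
    weights = [r / sum(raw) for r in raw]
    rhos = [random_density(2, rng) for _ in range(3)]
    sigmas = [random_density(2, rng) for _ in range(3)]
    lhs = hs_divergence(mix(weights, rhos), mix(weights, sigmas))
    rhs = sum(w * hs_divergence(r, s) for w, r, s in zip(weights, rhos, sigmas))
    largest_gap = max(largest_gap, lhs - rhs)
print(f"largest value of lhs - rhs: {largest_gap:.2e}")
```

A non-positive maximum (up to rounding) is what joint convexity predicts.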

Theorem 2.3. The monotonicity of the HS norm with respect to partial trace

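Let $\rho^{AB}$ and $\sigma^{AB}$ be two density matrices on a composite system AB, with reduced densities $\rho^{A} = \operatorname{Tr}_B\,\rho^{AB}$ and $\sigma^{A} = \operatorname{Tr}_B\,\sigma^{AB}$; then

$$D(\rho^{A}, \sigma^{A}) \le b\, D(\rho^{AB}, \sigma^{AB}), \tag{17}$$

where b is the dimension of B.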

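Proof: One can find a set of unitary matrices $U_j$ over B and a probability distribution $p_j$ such that

$$\rho^{A} \otimes \frac{I}{b} = \sum_j p_j\,(I \otimes U_j)\,\rho^{AB}\,(I \otimes U_j)^\dagger, \tag{18}$$

$$\sigma^{A} \otimes \frac{I}{b} = \sum_j p_j\,(I \otimes U_j)\,\sigma^{AB}\,(I \otimes U_j)^\dagger \tag{19}$$

(see [2] chapter 11). Now, since D is jointly convex in both densities, we can write

$$D\Big(\rho^{A} \otimes \frac{I}{b},\ \sigma^{A} \otimes \frac{I}{b}\Big) \le \sum_j p_j\, D\big((I \otimes U_j)\,\rho^{AB}\,(I \otimes U_j)^\dagger,\ (I \otimes U_j)\,\sigma^{AB}\,(I \otimes U_j)^\dagger\big). \tag{20}$$

Observe now that the divergence is invariant under unitary conjugation, and therefore the sum on the right-hand side of the above inequality is $D(\rho^{AB}, \sigma^{AB})$. Finally, since $\operatorname{Tr}(I/b)^2 = 1/b$, the left-hand side equals $\frac{1}{b} D(\rho^{A}, \sigma^{A})$, which gives Inequality (17).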
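For a qubit B (b = 2), one valid choice in (18) and (19) is the four Pauli matrices with $p_j = 1/4$, since averaging over conjugation by them sends any 2x2 operator M to $\operatorname{Tr}(M)\,I/2$. The Python sketch below is an illustration under that assumption, with hypothetical helper names: it checks the twirling identity on a random real density matrix and then spot-checks Inequality (17) with b = 2.

```python
import random

I2 = [[1, 0], [0, 1]]
# Pauli matrices; averaging conjugation by all four maps any 2x2 M to Tr(M) I/2.
PAULIS = [I2, [[0, 1], [1, 0]], [[0, -1j], [1j, 0]], [[1, 0], [0, -1]]]


def matmul(a, b):
    """Matrix product of nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def dagger(a):
    """Conjugate transpose of a nested-list matrix."""
    return [[a[j][i].conjugate() for j in range(len(a))] for i in range(len(a[0]))]


def kron(a, b):
    """Kronecker (tensor) product; the first factor is the slow index."""
    rb, cb = len(b), len(b[0])
    return [[a[i // rb][j // cb] * b[i % rb][j % cb] for j in range(len(a[0]) * cb)]
            for i in range(len(a) * rb)]


def hs_divergence(rho, sigma):
    """D(rho, sigma) = (1/2) Tr[(rho - sigma)^2] for Hermitian arguments."""
    n = len(rho)
    return 0.5 * sum(abs(rho[i][j] - sigma[i][j]) ** 2 for i in range(n) for j in range(n))


def random_density(n, rng):
    """Random real density matrix A A^T / Tr(A A^T)."""
    a = [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(n)]
    m = [[sum(a[i][k] * a[j][k] for k in range(n)) for j in range(n)] for i in range(n)]
    tr = sum(m[i][i] for i in range(n))
    return [[x / tr for x in row] for row in m]


def trace_out_b(rho, da, db):
    """rho^A = Tr_B rho^{AB} for a (da*db)-dimensional matrix."""
    return [[sum(rho[i * db + k][j * db + k] for k in range(db)) for j in range(da)]
            for i in range(da)]


def twirl_b(rho):
    """(1/4) sum_j (I x U_j) rho (I x U_j)^dagger with U_j the Paulis acting on B."""
    out = [[0j] * 4 for _ in range(4)]
    for u in PAULIS:
        big = kron(I2, u)
        term = matmul(matmul(big, rho), dagger(big))
        for i in range(4):
            for j in range(4):
                out[i][j] += term[i][j] / 4
    return out


rng = random.Random(3)

# Equations (18)-(19) for this choice: the twirl of rho^{AB} equals rho^A tensor I/2.
rho_ab = random_density(4, rng)
expected = kron(trace_out_b(rho_ab, 2, 2), [[0.5, 0.0], [0.0, 0.5]])
twirled = twirl_b(rho_ab)
twirl_error = max(abs(twirled[i][j] - expected[i][j]) for i in range(4) for j in range(4))

# Inequality (17) with b = 2: D(rho^A, sigma^A) <= 2 D(rho^AB, sigma^AB).
worst = 0.0
for _ in range(200):
    r, s = random_density(4, rng), random_density(4, rng)
    d_a = hs_divergence(trace_out_b(r, 2, 2), trace_out_b(s, 2, 2))
    worst = max(worst, d_a / (2 * hs_divergence(r, s)))
print(f"twirl error {twirl_error:.1e}; largest ratio {worst:.4f}")
```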

We can now state the main result:

Theorem 2.4. A Holevo-type bound for the HS trace distance between $p(x,y)$ and $p(x)p(y)$

Suppose Alice uses a distribution $p_x$, where $x \in \{1, \ldots, n\}$, to pick one of n densities $\rho_x$ in Q. She then sends the signal through a quantum physical channel to Bob. We can add an artificial quantum system P and write $\rho^{PQ}$ for Alice as

$$\rho^{PQ} = \sum_x p_x\,|x\rangle\langle x| \otimes \rho_x, \tag{21}$$

where the vectors $|x\rangle$ are orthonormal. Let $\rho^{P}$ and $\rho^{Q}$ be the partial traces of $\rho^{PQ}$. Suppose Bob measures the system using a projective measurement as in Theorem 2.1; then

$$D\big(p(x,y),\, p(x)p(y)\big) \le q\, D\big(\rho^{PQ},\, \rho^{P} \otimes \rho^{Q}\big), \tag{22}$$

where q is the dimension of the space Q.

Proof: First we consider one more auxiliary quantum system, namely M, which holds Bob’s measurement outcome. Initially the system M is in the state $|0\rangle\langle 0|$. Let $\Phi$ be the operator defined by Bob’s measurement as in Theorem 2.1 above: let the projections $P_y$ on Q be defined such that $\sum_y P_y = I$ and

$$\Phi(\sigma) = \sum_y P_y\,\sigma\,P_y. \tag{23}$$

One can easily extend $\Phi$ to the space $Q \otimes M$ by

$$\Phi\big(\sigma \otimes |0\rangle\langle 0|\big) = \sum_y P_y\,\sigma\,P_y \otimes |y\rangle\langle y|. \tag{24}$$

This can be done by choosing a set of operators on M conjugating $|0\rangle\langle 0|$ to $|y\rangle\langle y|$. It amounts to writing the measurement result in the space M (see also Ch. 12.1.1 in [2]). If we now trace out Q we find

$$\operatorname{Tr}_Q\,\Phi\big(\sigma \otimes |0\rangle\langle 0|\big) = \sum_y \operatorname{Tr}(P_y\,\sigma)\,|y\rangle\langle y|. \tag{25}$$

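Moreover, $\Phi$ can be extended to $P \otimes Q \otimes M$ by

$$(I \otimes \Phi)\big(\rho^{PQ} \otimes |0\rangle\langle 0|\big) = \sum_{x,y} p_x\,|x\rangle\langle x| \otimes P_y\,\rho_x\,P_y \otimes |y\rangle\langle y|. \tag{26}$$

If we trace out Q we arrive at

$$\operatorname{Tr}_Q\big[(I \otimes \Phi)(\rho^{PQ} \otimes |0\rangle\langle 0|)\big] = \sum_{x,y} p(x,y)\,|x\rangle\langle x| \otimes |y\rangle\langle y|, \qquad p(x,y) = p_x \operatorname{Tr}(P_y\,\rho_x). \tag{27}$$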

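Finally, we can apply the same extension of $\Phi$ to $\rho^{P} \otimes \rho^{Q} \otimes |0\rangle\langle 0|$:

$$(I \otimes \Phi)\big(\rho^{P} \otimes \rho^{Q} \otimes |0\rangle\langle 0|\big) = \sum_{x,y} p_x\,|x\rangle\langle x| \otimes P_y\,\rho^{Q}\,P_y \otimes |y\rangle\langle y|. \tag{28}$$

If we trace out Q we get

$$\operatorname{Tr}_Q\big[(I \otimes \Phi)(\rho^{P} \otimes \rho^{Q} \otimes |0\rangle\langle 0|)\big] = \sum_{x,y} p(x)\,p(y)\,|x\rangle\langle x| \otimes |y\rangle\langle y|, \qquad p(x) = p_x,\ \ p(y) = \operatorname{Tr}(P_y\,\rho^{Q}). \tag{29}$$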

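We can now use the properties stated in the above theorems, together with Equation (27) and Equation (29), to deduce

$$\begin{aligned}
q\,D\big(\rho^{PQ}, \rho^{P} \otimes \rho^{Q}\big)
&= q\,D\big(\rho^{PQ} \otimes |0\rangle\langle 0|,\ \rho^{P} \otimes \rho^{Q} \otimes |0\rangle\langle 0|\big)\\
&\ge q\,D\big((I \otimes \Phi)(\rho^{PQ} \otimes |0\rangle\langle 0|),\ (I \otimes \Phi)(\rho^{P} \otimes \rho^{Q} \otimes |0\rangle\langle 0|)\big)\\
&\ge D\Big(\sum_{x,y} p(x,y)\,|x\rangle\langle x| \otimes |y\rangle\langle y|,\ \sum_{x,y} p(x)p(y)\,|x\rangle\langle x| \otimes |y\rangle\langle y|\Big)\\
&= D\big(p(x,y),\, p(x)p(y)\big),
\end{aligned} \tag{30}$$

where in the first inequality we have used Theorem 2.1 and in the second inequality Theorem 2.3 (tracing out Q, whose dimension is q). The first equality holds because appending the same pure state $|0\rangle\langle 0|$ to both arguments does not change D, and the final equality is an easy consequence of the definition of the HS norm.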
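Inequality (22) can be spot-checked numerically. The Python sketch below is a hypothetical helper, not the authors' code: it computes both sides directly from the ensemble, the classical side from $p(x,y) = p_x \operatorname{Tr}(P_y\rho_x)$ as in (27) and the quantum side from the block-diagonal $\rho^{PQ}$ of (21). The two-state example and the random qutrit ensembles with rank-1 basis projectors are illustrative assumptions.

```python
import random


def kron(a, b):
    """Kronecker (tensor) product; the first factor is the slow index."""
    rb, cb = len(b), len(b[0])
    return [[a[i // rb][j // cb] * b[i % rb][j % cb] for j in range(len(a[0]) * cb)]
            for i in range(len(a) * rb)]


def hs_divergence(rho, sigma):
    """D(rho, sigma) = (1/2) Tr[(rho - sigma)^2] for Hermitian arguments."""
    n = len(rho)
    return 0.5 * sum(abs(rho[i][j] - sigma[i][j]) ** 2 for i in range(n) for j in range(n))


def random_density(n, rng):
    """Random real density matrix A A^T / Tr(A A^T)."""
    a = [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(n)]
    m = [[sum(a[i][k] * a[j][k] for k in range(n)) for j in range(n)] for i in range(n)]
    tr = sum(m[i][i] for i in range(n))
    return [[x / tr for x in row] for row in m]


def trace_of_product(a, b):
    """Tr(AB) for square matrices given as nested lists."""
    n = len(a)
    return sum(a[i][k] * b[k][i] for i in range(n) for k in range(n))


def both_sides(probs, states, projectors):
    """Return (D(p(x,y), p(x)p(y)), q * D(rho^{PQ}, rho^P x rho^Q)) for Inequality (22)."""
    n, q, m = len(probs), len(states[0]), len(projectors)
    # Classical side: p(x, y) = p_x Tr(P_y rho_x), as in Equation (27).
    pxy = [[p * trace_of_product(e, s) for e in projectors] for p, s in zip(probs, states)]
    py = [sum(pxy[x][y] for x in range(n)) for y in range(m)]
    d_classical = 0.5 * sum((pxy[x][y] - probs[x] * py[y]) ** 2
                            for x in range(n) for y in range(m))
    # Quantum side: rho^{PQ} is block diagonal with blocks p_x rho_x, Equation (21).
    rho_pq = [[0.0] * (n * q) for _ in range(n * q)]
    for x, (p, s) in enumerate(zip(probs, states)):
        for i in range(q):
            for j in range(q):
                rho_pq[x * q + i][x * q + j] = p * s[i][j]
    rho_p = [[probs[i] if i == j else 0.0 for j in range(n)] for i in range(n)]
    rho_q = [[sum(p * s[i][j] for p, s in zip(probs, states)) for j in range(q)]
             for i in range(q)]
    return d_classical, q * hs_divergence(rho_pq, kron(rho_p, rho_q))


# Illustrative example: |0> and |+> with probability 1/2, computational-basis measurement.
basis2 = [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 1.0]]]
example = both_sides([0.5, 0.5], [[[1.0, 0.0], [0.0, 0.0]], [[0.5, 0.5], [0.5, 0.5]]], basis2)
print("classical D, q * quantum D:", example)

# Random ensembles of three qutrit states, measured with rank-1 basis projectors.
basis3 = [[[1.0 if i == j == k else 0.0 for j in range(3)] for i in range(3)] for k in range(3)]
rng = random.Random(5)
violations = 0
for _ in range(200):
    raw = [rng.random() + 1e-9 for _ in range(3)]
    probs = [r / sum(raw) for r in raw]
    states = [random_density(3, rng) for _ in range(3)]
    lhs, rhs = both_sides(probs, states, basis3)
    if lhs > rhs + 1e-12:
        violations += 1
print("violations of (22):", violations)
```

In the two-state example the classical side is 1/32 and the quantum side is 1/8, and no violation is expected in the random trials. Both sides carry the same factor 1/2, so the assumed normalisation of D does not affect the comparison.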

Corollary: Suppose Alice is sending classical information to Bob using a quantum channel Q, and Bob measures the quantum state using a projective measurement as defined above (with results in the space Y). Under all the above assumptions

![]() (31)


where ![]() and ![]() are Tsallis entropies of the second type (the quantum logical entropies) of ![]() and ![]().

Proof: Clearly (see also [17] )

![]() (32)


It is easy to see (by a matrix representation) that for $\rho^{PQ}$ as in Equation (21)

![]() (33)


therefore

![]() (34)


However, ![](), and ![]() (see [17], Theorem II.2.4 and Theorem II.3); therefore

![]() (35)


Combining this with Theorem 2.4 we find

![]() (36)


Example: Suppose Alice sends the state ![]() with probability 1/2 and the state ![]() with probability 1/2; then

![]() (37)


where ![]() and ![](). By partial tracing we get

![]() (38)


and ![]() is a balanced coin. The eigenvalues of ![]() are ![](), and therefore

![]() (39)


Also ![]() and ![](), hence

![]() (40)


The left-hand side of the above inequality is a measure of the classical mutual information between X and Y according to the HS norm. The very fact that it is smaller than the Tsallis information measure of X (which is 1/2) means that the quantum channel restricts the rate of classical information transfer, where the mutual information is measured by the HS norm and the source of information X is measured by the Tsallis entropy. This is analogous to Holevo’s upper bound in the framework of the Tsallis/linear entropy. We find this result similar in spirit to the well-known limitation on the rate of classical information transmission via a quantum channel (without utilizing entanglement): one cannot send more than one bit per use of a one-qubit channel.

In the above example, if ![]() and ![]() are mixed states, then by the same argument we can show that:

![]() (41)


This gives a bound on the classical mutual information using the quantum “logical entropy” (the Tsallis entropy).

3. Discussion

We proved a Holevo-type bound employing the Hilbert-Schmidt distance between the density matrix on the product space $P \otimes Q$ and the tensor product of its two marginal density matrices on P and Q. Using a different measure of mutual information, we showed that this Holevo-type upper bound on classical information transmission can be written as an inequality between the classical mutual information expression and its quantum counterpart.

It seems that, by utilizing Naimark’s dilation [14] [15], the above result can be generalized to any POVM if one is willing to employ a suitable channel in a higher-dimensional Hilbert space.

As was claimed in [17], the divergence distance used above is the natural one in the context of quantum logical entropy [8]. As it is the “right” measure of mutual information for quantum channels carrying classical information, we expect this formalism to be helpful in further studies of problems such as channel capacity theory, entanglement detection and area laws.

Acknowledgements

E.C. was supported by Israel Science Foundation Grant No. 1311/14 and by ERC AdG NLST.