Intelligent Control and Automation

Vol. 3 No. 2 (2012), Article ID: 19231, 6 pages. DOI: 10.4236/ica.2012.32013

Enhanced Map-Based Indoor Navigation System of a Humanoid Robot Using Ultrasound Measurements

Department of Biomedical Engineering, Tampere University of Technology, Seinäjoki, Finland

Email: iivari.back@tut.fi

Received April 12, 2012; revised May 12, 2012; accepted May 20, 2012

Keywords: Indoor Navigation; Humanoid Robot; Service Robot

ABSTRACT

During recent years, walking humanoid robots have gained popularity over wheeled vehicle robots in various assistive roles in human environments. Self-localization is a necessary requirement for the humanoid robots used in most assistive tasks, because the robots have to be able to locate themselves in their environment in order to accomplish their tasks. In addition, autonomous navigation of walking robots to a pre-defined destination is an equally important task, and it requires that the robot knows its initial location precisely. Indoor navigation is based on the map of the environment used by the robot. Assuming that the walking robot is capable of locating itself based on its initial location and the distance walked from it, there are still factors that impair map-based navigation. One of them is the robot’s limited ability to keep its direction while walking, which means that the robot is not able to walk directly from one point to another due to a stochastic error in walking direction. In this paper we present an algorithm for straightening the walking path using distance measurements from the built-in sonar sensors of a NAO humanoid robot. The proposed algorithm enables the robot to walk directly from one point to another, which in turn enables precise map-based indoor navigation.

1. Introduction

A humanoid robot is a robot with an overall appearance based on that of the human body [1]. The robot is based on the general structure of a human, and hence it usually has a torso with a head and two arms, and it walks on two legs. A humanoid robot typically has autonomous capabilities, which means that it can adapt to changes in its environment or in itself and continue to pursue its goal. In practice this may mean self-maintenance such as automatic recharging, autonomous learning and movement, as well as avoiding harmful situations. Safe interaction with human beings and the environment is a key characteristic required of these kinds of robots. The robots are increasingly used in human environments, and hence the problem of “working coexistence” of humans and humanoid robots has become acute [2].

Humanoid robots have been created to imitate some of the physical and mental tasks that humans perform daily, and therefore they are well suited to be used as assistive robots in many areas. Assistive robotics is a broad class of robots whose function is to provide assistance to users, and such robots are being used in e.g. elderly care and to help people with different kinds of physical disabilities. Socially assistive robots (SAR) are the class of robots at the intersection of assistive robots and socially interactive robots (robots that communicate with the users) [3]. During recent years, humanoid robots have gained popularity in many research-related activities. Compared to the previously employed wheeled vehicles, walking humanoid robots have certain benefits. For example, they are able to access different types of terrain and to climb stairs. On the other hand, there are also drawbacks including foot slippage, stability problems during walking, and limited payload capabilities [4]. Another essential drawback is that the robot has a limited ability to keep a constant walking direction.

Self-localization of a robot is a fundamental problem in mobile robotics, and a necessary requirement for the humanoid robots used in most assistive tasks, because the robots have to be able to locate themselves in their environment in order to accomplish their tasks. In addition, autonomous navigation of walking robots to a pre-defined destination is an equally important task, and it requires that the robot precisely knows its initial location and can perform self-localization when needed. The navigation is usually based on the map of the environment used by the robot. Map-based navigation can be augmented with additional information on the environment provided by the robot’s sensors. For example, visual information provided by the built-in cameras of the robot [5] or distance information from the ultrasonic sensors can be used to assist navigation and obstacle avoidance. During recent years, a number of approaches have been proposed for robot navigation in indoor and outdoor environments [6]. The approach presented by Seara and Schmidt [7] is based on the maximization of the predicted visual information in guided robot navigation. Tu and Baltes [8] have applied fuzzy learning to build a map that facilitates planning robot tasks for real paths. Obstacle avoidance and object handling are also key tasks in autonomous navigation [9].

Assuming that the walking robot is capable of locating itself based on its initial location and the distance walked from it, there are still factors that may impair map-based navigation and self-localization. One essential factor is the robot’s limited ability to keep its direction while walking. According to our experiments, the walking path of a humanoid robot may deviate by up to two meters from its intended path over a distance of ten meters. Consequently the robot is not able to walk directly from one point to another, which causes difficulties for map-based navigation. In this paper we present an algorithm for straightening the walking path using distance measurements from the built-in sonar sensors of the robot. In this way the robot is able to walk directly from one point to another, which enables map-based indoor navigation.

The application field for the robotic approach in our research is elderly care. We study how a humanoid robot can be used as an assistant in elderly care institutions such as nursing homes and hospitals. One central task assigned to the robot is computer-vision-based remote monitoring of nursing home residents. The robot is able to receive alerts from the telecare system installed in the nursing home and autonomously navigate to the room of the resident in case of e.g. an emergency call. The robot can transmit video or still images to a remote destination over the Internet. It is also possible to establish a speech connection between the remote caregiver and the resident via the robot. In this way the remote caregiver is able to check the situation in the room and take appropriate action. To make this kind of procedure possible, the robot needs an accurate system for self-localization and autonomous indoor navigation.

2. Methodology

2.1. Humanoid Robot

In our research, we are using a NAO V. 4.0 robot manufactured by Aldebaran Robotics [10]. NAO (Figure 1) is a programmable humanoid robot 57 cm “tall”. It is capable of autonomous movement using its electric motors and actuators that allow 25 degrees of freedom. It contains a variety of sensors and devices including two CMOS cameras, four microphones, two hi-fi speakers with voice synthesizer and sonar sensors for distance estimation. Tactile sensors and the ultrasound system make it possible for the robot to avoid obstacles when walking. The robot has a WLAN (Wireless Local Area Network) connection for communication and information transmission.

Figure 1. NAO humanoid robot used in this research. The robot is equipped with camera sensors, microphones, speakers and distance sensors [10].

2.2. Indoor Navigation System

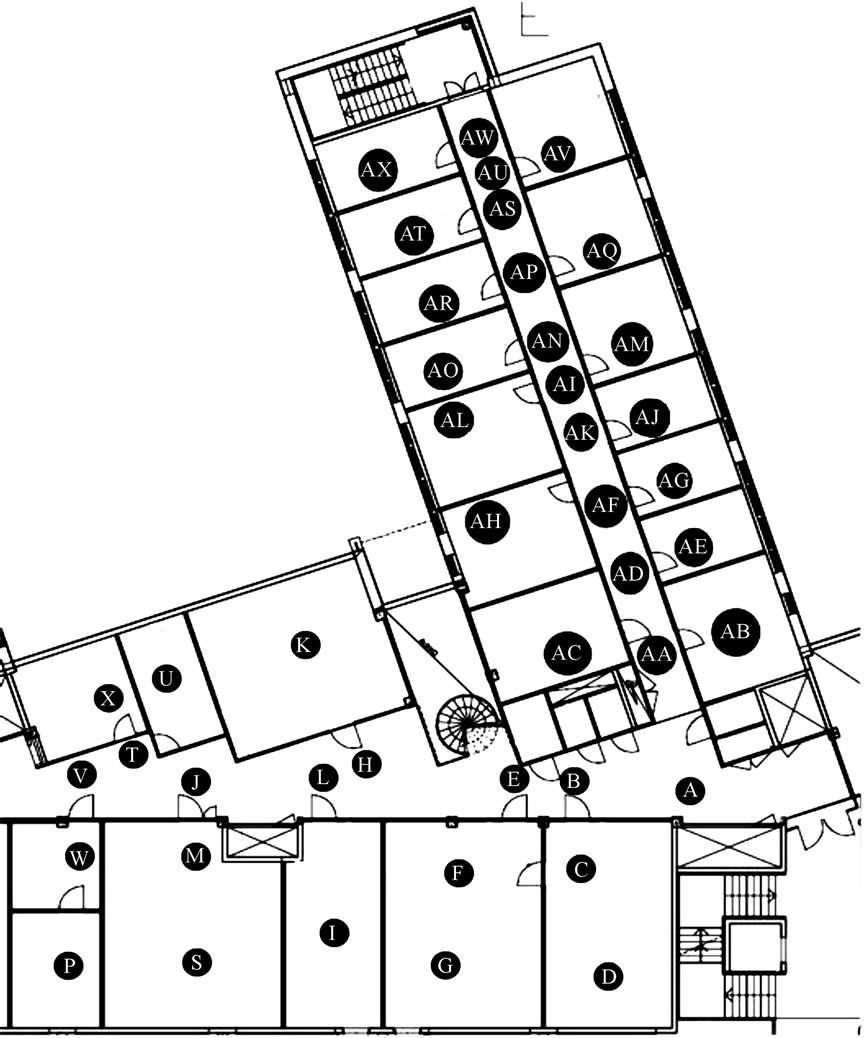

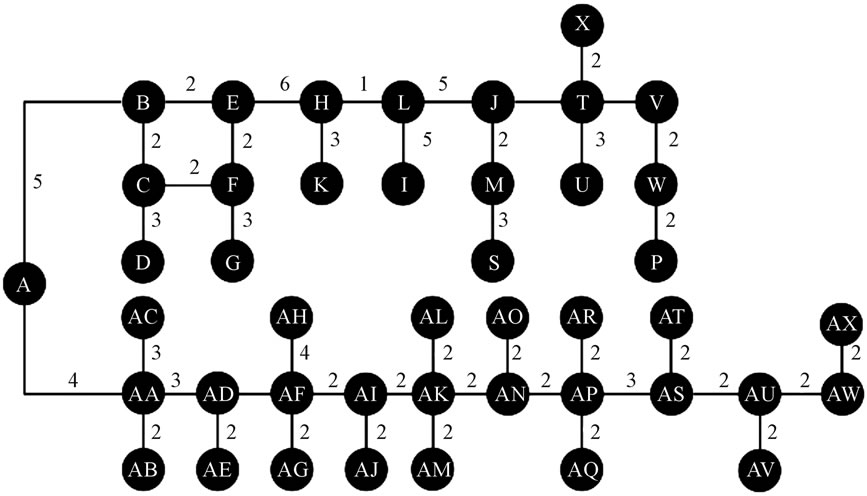

To be able to move autonomously in the indoor environment, the robot uses a map-based navigation system. Once the robot has an accurate map, it can move precisely in the defined environment. Provided that the robot precisely knows its initial location, it can find its way to e.g. a particular room. Figure 2(a) shows an example map of a building in which the robot can be used. The stand-by position of the robot is marked with A (source), and other points represent vertices B-AX. The map is converted to a graph that contains the source (A), vertices (B-) and edges between them (Figure 2(b)). The weight of each edge is proportional to the physical distance of this edge in the map. The path finding system is based on Dijkstra’s graph search algorithm [11], which solves the single-source shortest path problem. In practice, this means that the path whose combined weight is as small as possible is selected. All the available paths have been saved to the robot’s memory as a graph-type data structure. As soon as the robot receives an alarm from one of the rooms, it can automatically select the shortest path and navigate to this destination.

Figure 2. (a) An example map from an indoor environment in which the robot can be used. The points represent vertices in the robot’s path; (b) A graph produced based on the map. The weight of each edge corresponds to the physical distance between the vertices.
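The path selection described above can be illustrated with a minimal Python sketch. It is not the implementation running on the robot: the vertex coordinates, the connections and the function names (`build_graph`, `shortest_path`) are hypothetical and chosen only for illustration. Edge weights are taken as Euclidean distances between vertices, which is one way to make them proportional to physical distance.

```python
import heapq
import math

# Hypothetical floor-plan coordinates in meters (not taken from the paper's map).
COORDS = {
    "A": (0.0, 0.0),
    "B": (4.0, 0.0),
    "C": (4.0, 3.0),
    "D": (0.0, 5.0),
    "E": (8.0, 3.0),
}
# Hypothetical walkable connections between the vertices.
CONNECTIONS = [("A", "B"), ("B", "C"), ("A", "D"), ("D", "C"), ("C", "E")]


def build_graph(coords, connections):
    """Build an undirected graph whose edge weights are Euclidean distances."""
    graph = {name: {} for name in coords}
    for u, v in connections:
        weight = math.dist(coords[u], coords[v])
        graph[u][v] = weight
        graph[v][u] = weight
    return graph


def shortest_path(graph, source, target):
    """Return (total_distance, vertex_list) found with Dijkstra's algorithm."""
    dist = {source: 0.0}
    prev = {}
    queue = [(0.0, source)]
    visited = set()
    while queue:
        d, u = heapq.heappop(queue)
        if u in visited:
            continue  # stale queue entry for an already settled vertex
        visited.add(u)
        if u == target:
            break
        for v, w in graph[u].items():
            nd = d + w
            if nd < dist.get(v, math.inf):
                dist[v] = nd
                prev[v] = u
                heapq.heappush(queue, (nd, v))
    if target not in dist:
        return math.inf, []  # target is not reachable from source
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    path.reverse()
    return dist[target], path


GRAPH = build_graph(COORDS, CONNECTIONS)
distance, path = shortest_path(GRAPH, "A", "E")
print(path, distance)
```

With these made-up coordinates the route through B and C (11 meters) is selected over the longer route through D.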

2.3. Algorithm for Walking Path Straightening

The goal of the walking path straightening algorithm presented in this paper is to steer the robot so that it keeps its direction while walking. There are several reasons why the robot cannot keep its direction without steering. The most important of them is probably the design of the walking mechanism, which is operated by the electric motors and actuators in the robot’s legs. The properties of the floor also seem to affect the walking. For these reasons, the deviation from the original path is not systematic, and therefore we cannot apply any constant correction to the path.

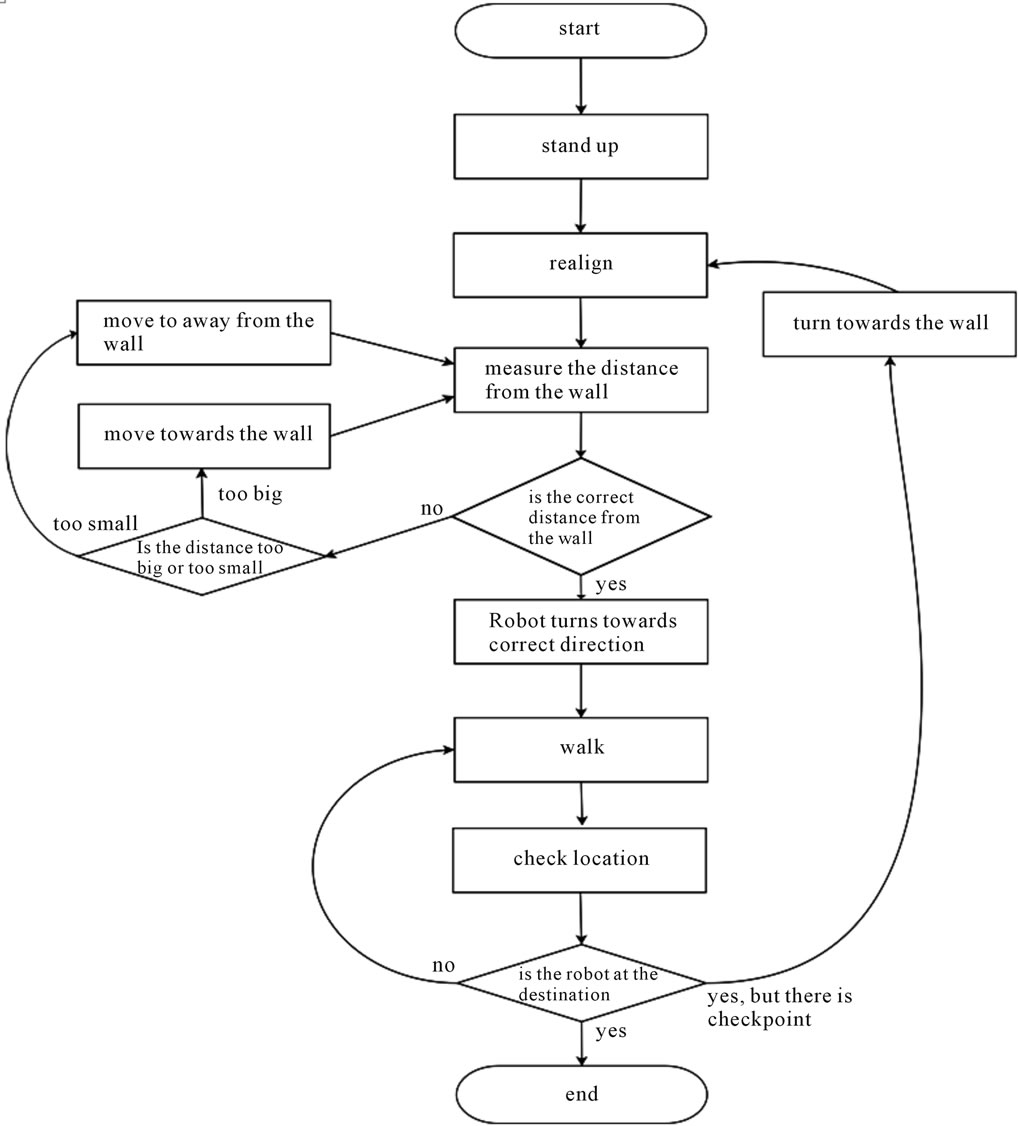

The straightening algorithm employs the sonar sensors of the robot. These sensors use ultrasound measurement for distance measurement. The walking paths defined in the map-based navigation are usually located close to the walls of the rooms and corridors. Assuming that the distances of the vertices A and B to the nearest wall is known, it is possible to define the distance from the direct path AB to the wall. Then the path of the walking robot is known, and the navigation algorithm can steer the robot to stay on this path by sensing the distance between the robot and wall by keeping this distance constant whenever the robot is walking between these two vertices. A flow chart describing the walking from A to B is presented in Figure 3. When the robot starts walking from vertex A to B, it makes distance measurement from

Figure 3. A flow chart describing the walking algorithm.

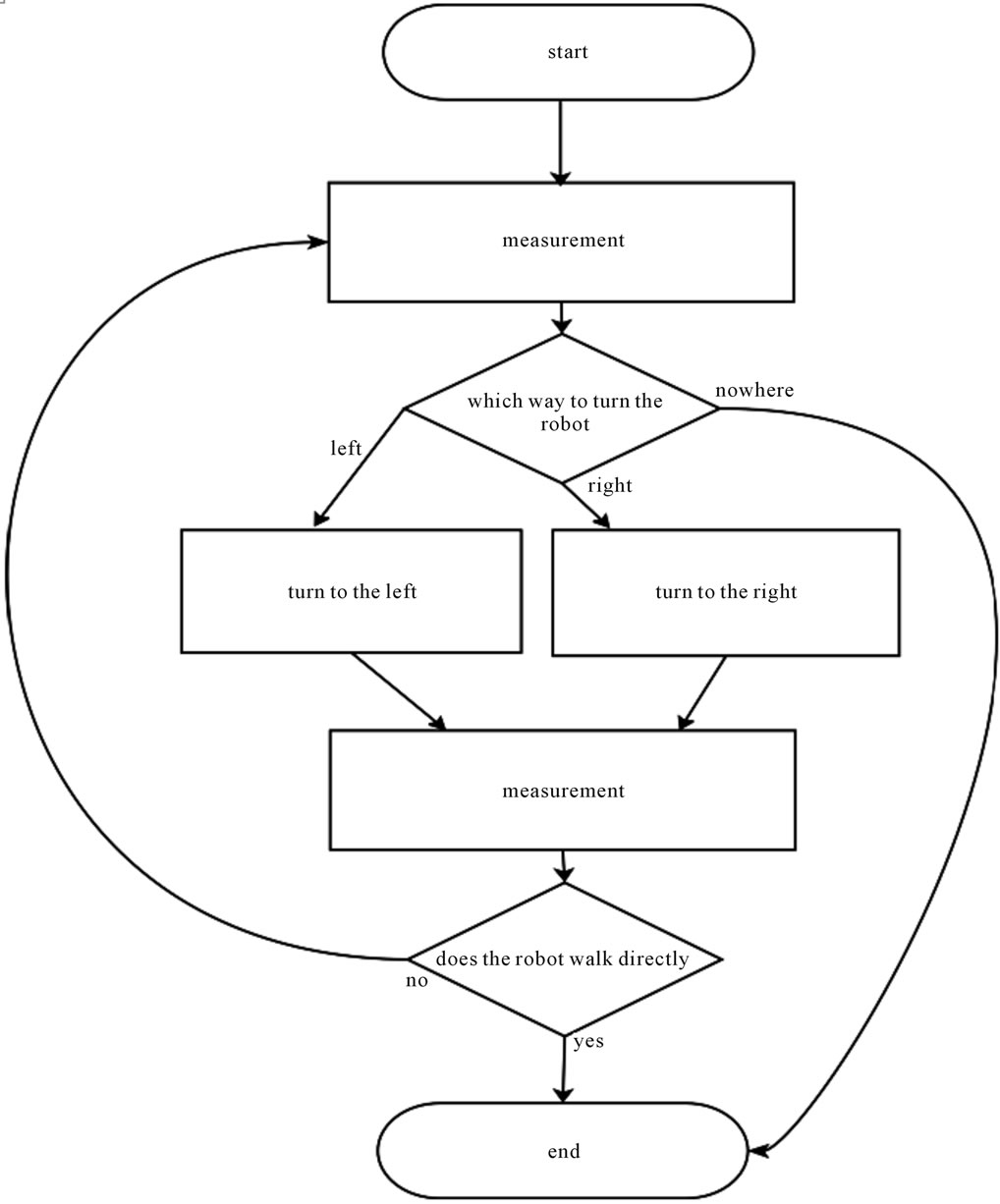

the wall in locations with pre-defined intervals. Preliminary test have shown that a suitable interval is between 1.5 and 2 meters. If the distance differs from the known distance between the wall and direct bath from A to B, the algorithm corrects the walking direction and checks the distance from the wall again in the next distance measurement location, as presented in Figure 4.
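A single measure-and-correct step of this kind can be sketched in Python as follows. The paper does not state the exact correction law, so the rule used here (turn by the angle that would bring the robot back to the path by the next measurement point) is an assumption, as are the function names, the `tolerance` parameter and the straight-wall model behind `expected_wall_distance`.

```python
import math


def expected_wall_distance(d_a, d_b, fraction):
    """Distance from the straight path AB to the wall at a given fraction
    of the way along the path (0 at A, 1 at B), assuming a straight wall.
    With d_a == d_b this reduces to a constant distance."""
    return d_a + fraction * (d_b - d_a)


def heading_correction(measured, expected, interval, tolerance=0.05):
    """Return a heading change in radians; positive means 'turn away from
    the wall'. The angle is chosen so that the lateral error would vanish
    after walking one measurement interval (an assumed rule, not the
    paper's). Errors within the hypothetical tolerance give 0.0."""
    error = expected - measured  # positive: robot is too close to the wall
    if abs(error) <= tolerance:
        return 0.0
    return math.atan2(error, interval)


# Example: path runs 1.0 m from the wall, sonar reads 0.8 m, interval 1.5 m.
target = expected_wall_distance(1.0, 1.0, 0.5)
turn = heading_correction(measured=0.8, expected=target, interval=1.5)
print(f"turn away from wall by {math.degrees(turn):.1f} degrees")
```

In this example the robot is 0.2 meters too close to the wall, so the sketch suggests turning away from it by about 7.6 degrees; the next measurement then shows whether a further correction is needed.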

3. Experiments

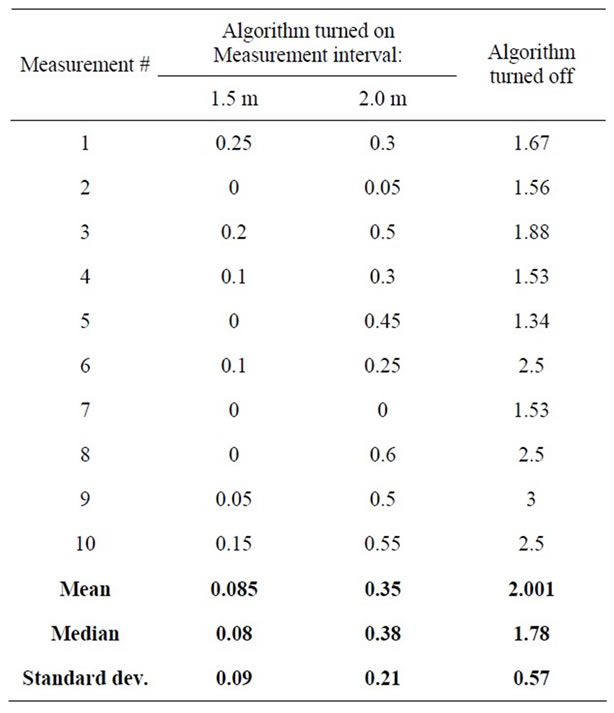

We tested the algorithm in a corridor, where it is easy to measure the distance between the correct path and the path taken by the robot. The length of the path was ten meters, and the robot was programmed to walk along this path using the proposed path correction algorithm. We also repeated this test with the correction algorithm turned off. The test was carried out ten times, and the results are listed in Table 1. We also tested how much the measurement interval affects the results by carrying out the test with intervals of 1.5 and 2 meters.

Results

As shown in Table 1, without the path correction algorithm the walking path of the robot diverges from the correct path by nearly two meters (median value 1.78 meters) over a walking distance of ten meters. With the algorithm, the deviation from the correct path is around 90% smaller (median values 0.08 and 0.38 meters for measurement intervals of 1.5 and 2 meters, respectively).

Figure 4. A flow chart describing the walking path straightening.

Table 1. The deviation of the robot’s path from the straight line between two vertices with distance of ten meters.
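The relative reduction for each measurement interval can be checked from the median values quoted above. The short Python sketch below uses only those three medians; the function and variable names are illustrative.

```python
def relative_reduction(baseline, corrected):
    """Fraction by which the deviation shrinks relative to the baseline."""
    return 1.0 - corrected / baseline


MEDIAN_WITHOUT_CORRECTION = 1.78  # meters, from the text
MEDIAN_WITH_CORRECTION = {1.5: 0.08, 2.0: 0.38}  # interval (m) -> median (m)

for interval, median in MEDIAN_WITH_CORRECTION.items():
    reduction = relative_reduction(MEDIAN_WITHOUT_CORRECTION, median)
    print(f"interval {interval} m: {reduction:.1%} smaller")
```

This gives a reduction of about 95.5% for the 1.5-meter interval and about 78.7% for the 2-meter interval.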

4. Conclusions

Walking humanoid robots are nowadays widely used in various assistive roles. However, most of these roles require the ability to move and navigate autonomously, and therefore precise walking is an essential requirement for the robot. The path correction algorithm presented here has proved to be an effective method for correcting the walking path, which enables precise map-based indoor navigation using the pre-defined vertices.

The algorithm itself is simple and does not impose a significant computational cost on the robot’s processor. However, frequent ultrasound measurements with the necessary direction corrections somewhat decrease the walking speed of the robot. Using longer measurement intervals can reduce this effect. Our experimental results show that the measurement interval can be extended to two meters if a mean deviation of 0.35 meters is acceptable. A more precise result, with a mean deviation of less than 0.1 meters, is achievable with an interval of 1.5 meters. In conclusion, the final performance of the algorithm is a tradeoff between walking time and precision.

5. Acknowledgements

The authors wish to thank the Technology Development Center of Finland (TEKES) for financial support.

REFERENCES

- H. Hirukawa, F. Kanehiro and K. Kaneko, “Humanoid Robotics Platforms Developed in HRP,” Journal of Robotics and Autonomous Systems, Vol. 48, No. 4, 2004, pp. 165-175. doi:10.1016/j.robot.2004.07.007

- H. Yussof, M. Yamano, Y. Nasu and M. Ohka, “Humanoid Robot Navigation Based on Groping Locomotion Algorithm to Avoid an Obstacle,” A. Lazinica, Ed., Mobile Robots: towards New Applications, I-Tech Education and Publishing, Vienna, 2006.

- D. Feil-Seifer and M. J. Matarić, “Socially Assistive Robotics,” IEEE Robotics and Automation Magazine, Vol. 18, No. 1, 2011, pp. 24-31. doi:10.1109/MRA.2010.940150

- A. Hornung, K. M. Wurm and M. Bennewitz, “Humanoid Robot Localization in Complex Indoor Environments,” Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Taipei, 18-22 October 2010, pp. 1690-1695.

- G. N. De Souza and A. C. Kak, “Vision for Mobile Robot Navigation: A Survey,” IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 24, No. 2, 2002.

- A. Barrera, “Advances in Robot Navigation,” InTech, Vienna, 2011.

- J. F. Seara and G. Schmidt, “Intelligent Gaze Control for Vision-Guided Humanoid Walking: Methodological Aspects,” Journal of Robotics and Autonomous Systems, Vol. 48, No. 4, 2004, pp. 231-248. doi:10.1016/j.robot.2004.07.003

- K. Y. Tu and J. Baltes, “Fuzzy Potential Energy for a Map Approach to Robot Navigation,” Journal of Robotics and Autonomous Systems, Vol. 54, No. 7, 2006, pp. 574-589. doi:10.1016/j.robot.2006.04.001

- A. Clerentin, L. Delahoche, E. Brassart and C. Drocourt, “Self Localization: A New Uncertainty Propagation Architecture,” Journal of Robotics and Autonomous Systems, Vol. 51, No. 2-3, 2005, pp. 151-166. doi:10.1016/j.robot.2004.11.002

- Aldebaran Robotics. http://www.aldebaran-robotics.com/

- E. W. Dijkstra, “A Note on Two Problems in Connexion with Graphs,” Numerische Mathematik, Vol. 1, No. 1, 1959, pp. 269-271. doi:10.1007/BF01386390