A New Filled Function with One Parameter to Solve Global Optimization

1. Introduction

Global optimization methods have wide applications in many fields, such as engineering, finance, management, and decision science. The task of global optimization is to find a solution with the smallest or largest objective function value. In this paper, we mainly discuss how to find the global minimizer of the objective function. For problems with only one minimizer, many local optimization methods are available, for instance, the steepest descent method, the Newton method, and the trust region method. However, many problems have multiple local minimizers, and most of the existing local methods are not applicable to them.

The main difficulty in global optimization is to escape from the current local minimizer to a better one. One of the most efficient methods for dealing with this issue is the filled function method, which was proposed by Ge [1] [2]. The generic framework of the filled function method can be described as follows:

1) An arbitrary point is taken as an initial point to minimize the objective function by using a local optimization method, and a minimizer of the objective function is obtained.

2) Based on the current minimizer of the objective function, a filled function is designed, and a point near the current minimizer is used as an initial point to minimize the filled function. As a result, a minimizer of the filled function will be found. This minimizer falls into a better region (called a basin) of the original objective function.

3) The minimizer of the filled function obtained in step 2 is taken as an initial point to minimize the objective function, and a better minimizer of the objective function will be found.

4) By repeating steps 2 and 3, the number of remaining local minimizers will be gradually reduced, and a global minimizer will eventually be found.
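The four steps above can be sketched in one dimension as follows. This is only an illustration under stated assumptions: the objective `double_well`, the stand-in filled function `1 / (1 + (x - xs)**2)`, the early exit as soon as a point with a lower objective value is reached, and all function names are hypothetical choices made for this sketch. None of them is the filled function or the algorithm proposed in this paper.

```python
def double_well(x):
    """Illustrative 1-D objective with two local minimizers (not from the paper)."""
    return x**4 - 4.0 * x**2 + x


def derivative(g, x, h=1e-6):
    """Central-difference estimate of g'(x)."""
    return (g(x + h) - g(x - h)) / (2.0 * h)


def local_min(g, x, lo, hi, step=0.01, iters=20000):
    """Projected gradient descent on g inside the box [lo, hi]."""
    for _ in range(iters):
        x_new = min(hi, max(lo, x - step * derivative(g, x)))
        if abs(x_new - x) < 1e-10:
            return x_new
        x = x_new
    return x


def escape(f, xs, direction, lo, hi, delta=0.1, step=0.01, iters=50000):
    """Descend a stand-in filled function starting from xs + delta * direction.

    Returns a point x with f(x) < f(xs), or None if the iterates leave the box
    (or the iteration budget runs out) before such a point is reached.
    """
    def filled(x):
        # Hypothetical stand-in: strict local maximum at xs, no other
        # stationary point. It is NOT the paper's formula (1).
        return 1.0 / (1.0 + (x - xs) ** 2)

    x = xs + delta * direction
    for _ in range(iters):
        if not lo <= x <= hi:
            return None
        if f(x) < f(xs) - 1e-8:
            return x
        x -= step * derivative(filled, x)
    return None


def filled_function_search(f, x0, lo, hi):
    """Generic loop: local search, escape via the filled function, repeat."""
    xs = local_min(f, x0, lo, hi)                 # step 1
    while True:
        for direction in (+1.0, -1.0):            # step 2
            x = escape(f, xs, direction, lo, hi)
            if x is not None:
                break
        else:
            return xs                             # no lower basin was reached
        xs = local_min(f, x, lo, hi)              # step 3, then repeat (step 4)


if __name__ == "__main__":
    print(filled_function_search(double_well, 2.0, -3.0, 3.0))
```

Starting from `x0 = 2.0`, the local search first stops in the shallower right-hand well; the escape phase then moves away from that minimizer until the objective drops below its value there, and the second local search settles in the deeper left-hand well.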

Although the filled function method is an efficient global optimization method and different filled functions have been proposed, the existing filled functions have some drawbacks, such as having more than one parameter to control, being sensitive to the parameters, and being ill-conditioned. For example, the filled functions proposed in [1] [2] contain exponential or logarithmic terms, which cause ill-conditioning; the filled functions proposed in [3] [4] are non-smooth, so the usual classical local optimization methods cannot be applied to them; the filled functions proposed in [1] [5] [6] have more than one parameter, which makes them difficult to adjust. To overcome these shortcomings, a new filled function with only one parameter is presented. Although it is not a smooth function, it can be approximated uniformly by a continuously differentiable function, so its minimizer can be easily obtained. Based on this new filled function, a new filled function method is proposed.

The remainder of this paper is organized as follows. Related concepts of the filled function method are given in Section 2. In Section 3, a new filled function is proposed and its properties are analyzed. Furthermore, an approximate function of the proposed filled function is given. Finally, the method for avoiding numerical difficulty is presented. In Section 4, a new filled function method is proposed and numerical experiments on several test problems are carried out. Finally, some concluding remarks are drawn in Section 5.

2. The Related Concepts

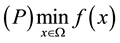

Consider the following global optimization problem with a box constraint:

where  is a twice continuously differentiable function on

is a twice continuously differentiable function on  and

and . Generally, we

. Generally, we

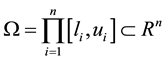

assume that  has only a finite number of minimizers and the set of the minimizers is denoted as

has only a finite number of minimizers and the set of the minimizers is denoted as

in

in  (

( is the number of minimizers of

is the number of minimizers of ).

).

Some useful concepts and notations are introduced as follows:

![]() : A local minimizer of ![]() on ![]() found so far;

![]() : Set ![]() ;

![]() : Set ![]() ;

![]() : A constant satisfying ![]() ;

![]() : A constant satisfying ![]() .

Assumption. All of the local minimizers of ![]() fall into the interior of ![]() .

Definition 1 The basin [7] of ![]() at an isolated minimizer ![]() is a connected domain ![]() which contains ![]() , and in which the steepest descent sequences of ![]() starting from any point in ![]() converge to ![]() , while the minimization sequences of ![]() starting from any point outside of ![]() do not converge to ![]() . Correspondingly, if ![]() is an isolated maximizer of ![]() , the basin of ![]() at ![]() is defined as the hill of ![]() at ![]() .

It is obvious that if ![]() , then ![]() . If there is another minimizer ![]() of ![]() and ![]() or ![]() , then the basin ![]() of ![]() at ![]() is said to be lower (or higher) than ![]() of ![]() at ![]() .

The concept of the filled function was first introduced by Ge [1] [2]. Since then, different filled functions have been given (e.g., [8] [9]). A new definition of the filled function, which is easier to understand, was presented in [8]. It can be described as follows:

Definition 2 A function ![]() is said to be a filled function of ![]() at ![]() , if it satisfies the following properties:

1) ![]() is a strict local maximizer of ![]() over ![]() ;

2) ![]() has no stationary point in the set ![]() ;

3) if the set ![]() is not empty, then there exists a point ![]() such that ![]() is a local minimizer of ![]() .

Based on definition 2, we present a new filled function with only one parameter in Section 3.

3. A New Filled Function and Its Properties

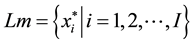

Assume that a local minimizer ![]() of ![]() has been found so far. Consider the following function for problem (P):

![]() (1)

where ![]() is a parameter.

The following theorems show that the function defined by (1) is a filled function satisfying Definition 2.

Theorem 1 Suppose ![]() is a local minimizer of ![]() and ![]() is defined by (1), then ![]() is a strict local maximizer of ![]() for all ![]() .

Proof. Since ![]() is a local minimizer of ![]() , there exists a neighborhood ![]() of ![]() , ![]() such that ![]() for all ![]() . For all ![]() , ![]() , one has

![]() (2)

Thus, ![]() is a strict local maximizer of ![]() . □

Theorem 2 Suppose ![]() is a local minimizer of ![]() , ![]() is a point in set ![]() , then ![]() is not a stationary point of ![]() for all ![]() .

Proof. Due to ![]() , one has ![]() and ![]() , so

![]()

Namely, ![]() is not a stationary point of ![]() . □

Theorem 3 Suppose ![]() is a local minimizer of ![]() but not a global minimizer of ![]() , which means ![]() is not empty, then there exists a point ![]() such that ![]() is a local minimizer of ![]() when ![]() .

Proof. Since ![]() is a local minimizer of ![]() , and ![]() is not a global minimizer of ![]() , there exists another local minimizer ![]() of ![]() such that ![]() .

By the definition of ![]() and continuity of ![]() , there exists a point ![]() in rectangular area ![]() , such that

![]() (3)

So

![]() (4)

Since ![]() is a local minimizer of ![]() , there exists a point ![]() such that ![]() . Then, there exists a point ![]() which is a minimizer of ![]() .

Furthermore, by Theorem 2, one has ![]() . Consequently, Theorem 3 is true. □

From Theorems 1, 2 and 3, we know that if there is a better local minimizer ![]() of ![]() than ![]() , then there exists a point ![]() which is a minimizer of ![]() . It falls into a lower basin. This means that if one minimizes ![]() with initial point ![]() , a better minimizer of ![]() will be found.

We can find that if ![]() is not a global minimizer of the objective function, then ![]() is non-differentiable at some point in ![]() . Gradient-based algorithms for local optimization cannot be used to obtain the minimizer of ![]() . A smoothing technique [10] to approximate ![]() is employed here as follows.

Let

![]() (5)

where ![]() is a positive parameter. It is obvious that ![]() is differentiable. Further, because

![]()

and

![]()

we have that the inequality

![]() (6)

holds. From the above discussion, we can see that ![]() uniformly converges to ![]() as ![]() tends to infinity. Therefore, by selecting a sufficiently large ![]() , the minimization of ![]() can be replaced by

![]() (7)
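The uniform convergence argument can be illustrated numerically. Formula (5) is not visible in this copy of the text, so the sketch below assumes, purely for illustration, that the non-smooth piece is a maximum of two values and that the smoothing of [10] has the log-sum-exp (aggregate function) form. Under that assumption the gap between the smooth function and the true maximum lies between 0 and ln 2 / p, so it shrinks uniformly as p grows. The names `smooth_max` and `worst_gap` are hypothetical.

```python
import math


def smooth_max(a, b, p):
    """Log-sum-exp approximation of max(a, b) with smoothing parameter p > 0."""
    return math.log(math.exp(p * a) + math.exp(p * b)) / p


def worst_gap(p, grid):
    """Largest value of smooth_max(a, b, p) - max(a, b) over pairs from grid."""
    return max(smooth_max(a, b, p) - max(a, b) for a in grid for b in grid)


grid = [i / 10.0 for i in range(-20, 21)]

if __name__ == "__main__":
    for p in (1.0, 10.0, 100.0):
        # The observed worst gap should not exceed the bound ln(2) / p.
        print(p, worst_gap(p, grid), math.log(2.0) / p)
```

The bound is attained when the two arguments are equal, which is why the grid deliberately contains repeated values.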

In order to obtain a more precise minimizer of ![]() by solving ![]() , ![]() in ![]() should be large enough. However, if the value of p is too large, it will cause overflow of the function values ![]() . To prevent this, a shrinkage factor r is introduced to ![]() as follows.

First of all, it is necessary to estimate ![]() and give a fixed and sufficiently large ![]() (e.g., take it as ![]() ) which guarantees that ![]() accurately approximates ![]() .

Then, in order to prevent the difficulty of numerical computation, a large ![]() can be taken. Finally, ![]() can be rewritten as

![]() (8)

By doing so, the existing shortcomings can be overcome.
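The overflow issue is easy to reproduce. Equation (8) and the exact role of the shrinkage factor r are not visible in this copy of the text, so the sketch below does not implement the paper's remedy. It swaps in a different, standard technique, the max-shift used for log-sum-exp, and again assumes a log-sum-exp smoothing of a two-term maximum. The function names are hypothetical.

```python
import math


def smooth_max_naive(a, b, p):
    """Direct log-sum-exp; math.exp overflows once p * max(a, b) is too large."""
    return math.log(math.exp(p * a) + math.exp(p * b)) / p


def smooth_max_stable(a, b, p):
    """Same quantity with the maximum factored out, so every exponent is <= 0."""
    m = max(a, b)
    return m + math.log(math.exp(p * (a - m)) + math.exp(p * (b - m))) / p


if __name__ == "__main__":
    try:
        smooth_max_naive(1.0, 0.5, 1000.0)
    except OverflowError as err:
        print("naive version failed:", err)
    print(smooth_max_stable(1.0, 0.5, 1000.0))
```

Both functions compute the same mathematical expression; the second only rearranges it so that large values of p lead to harmless underflow instead of overflow.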

4. A New Filled Function Algorithm and Numerical Experiments

4.1. A New Filled Function Algorithm

Based on the theorems and discussions in the previous section, a new filled function algorithm for finding a global minimizer of ![]() will be proposed, and then some explanations on the algorithm will be given. The details are as follows.

Step 1 (Initialization). Choose the initial values ![]() (e.g., ![]() ), a shrinkage factor ![]() (e.g., ![]() ), a lower bound of A (denote it as Lba), sufficiently large p and ![]() . Some directions ![]() are also given in advance, where ![]() and ![]() , ![]() , ![]() is the dimension of the optimization problem. Set ![]() .

Step 2 Minimize ![]() starting from an initial point ![]() and obtain a minimizer ![]() of ![]() .

Step 3 Construct

![]()

Set ![]() .

Step 4 If ![]() , then set ![]() and go to Step 5; otherwise, go to Step 6.

Step 5 Use ![]() as an initial point for minimization of ![]() . If the minimization sequences of ![]() go out of ![]() , set ![]() and go back to Step 4; otherwise, a minimizer ![]() of ![]() will be found in ![]() ; set ![]() , ![]() , ![]() and go back to Step 2.

Step 6 If ![]() , the algorithm stops and ![]() is taken as the global minimizer of ![]() ; otherwise, decrease ![]() by setting ![]() and go to Step 3.

Before we go to the experiments, we have to give some explanations on the above filled function algorithm.

1) In the minimization of ![]() and ![]() , we need to select a local optimization method first. In the proposed algorithm, the trust region method is employed.

2) In Step 4, a small ![]() needs to be selected carefully. In our algorithm, ![]() is selected to guarantee that ![]() is greater than a threshold (e.g., take the threshold as ![]() ).

3) Step 5 means that if a local minimizer ![]() of ![]() is found in ![]() with ![]() , then a better local minimizer of ![]() will be obtained by using ![]() as the initial point to minimize ![]() .

4.2. Numerical Experiment

In this section, the proposed algorithm is tested on some benchmark problems taken from the literature.

Problem 1. (Two-dimensional function)

![]()

where ![]() . The global minimum solution satisfies ![]() for all ![]() .

Problem 2. (Three-hump back camel function)

![]()

The global minimum solution is ![]() and ![]() .

Problem 3. (Six-hump back camel function)

![]()

The global minimum solution is ![]() or ![]() , and ![]() .

Problem 4. (Treccani function)

![]()

The global minimum solutions are ![]() and ![]() , and ![]() .
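As a quick sanity check on this test problem, the sketch below evaluates the Treccani function in the form in which it is commonly given in the benchmark literature. The formula image is missing from this copy, so it is an assumption that the paper uses exactly this form, and the function name `treccani` is hypothetical.

```python
def treccani(x1, x2):
    """Treccani function as commonly defined: x1^4 + 4 x1^3 + 4 x1^2 + x2^2."""
    return x1**4 + 4.0 * x1**3 + 4.0 * x1**2 + x2**2


if __name__ == "__main__":
    # The two commonly cited global minimizers.
    print(treccani(0.0, 0.0), treccani(-2.0, 0.0))
```

Because the expression equals `x1**2 * (x1 + 2)**2 + x2**2`, it is never negative, which is consistent with two global minimizers sharing the same objective value.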

Problem 5. (Goldstein and Price function)

![]()

where ![]() , and ![]() . The global minimum solution is ![]() and ![]() .
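The Goldstein and Price function can be checked in the same way. The sketch below uses the standard form found in the benchmark literature; since the formula image is missing here, treating it as the paper's exact definition is an assumption, and the function name `goldstein_price` is hypothetical.

```python
def goldstein_price(x1, x2):
    """Goldstein-Price function in its standard two-factor form."""
    first = 1.0 + (x1 + x2 + 1.0) ** 2 * (
        19.0 - 14.0 * x1 + 3.0 * x1**2 - 14.0 * x2 + 6.0 * x1 * x2 + 3.0 * x2**2
    )
    second = 30.0 + (2.0 * x1 - 3.0 * x2) ** 2 * (
        18.0 - 32.0 * x1 + 12.0 * x1**2 + 48.0 * x2 - 36.0 * x1 * x2 + 27.0 * x2**2
    )
    return first * second


if __name__ == "__main__":
    # Evaluate at the commonly cited global minimizer (0, -1).
    print(goldstein_price(0.0, -1.0))
```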

Problem 6. (Two-dimensional Shubert function)

![]()

This function has 760 minimizers in total. The global minimum value is ![]() .
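The following sketch evaluates the two-dimensional Shubert function in its usual product form. The formula image and the numerical value are missing from this copy, so both the form below and the evaluation point, one of the global minimizers reported in the benchmark literature, are assumptions rather than values taken from this paper. The function names are hypothetical.

```python
import math


def shubert_term(x):
    """One-dimensional factor: sum of i * cos((i + 1) * x + i) for i = 1..5."""
    return sum(i * math.cos((i + 1) * x + i) for i in range(1, 6))


def shubert(x1, x2):
    """Two-dimensional Shubert function as a product of two 1-D factors."""
    return shubert_term(x1) * shubert_term(x2)


if __name__ == "__main__":
    # One of the global minimizers commonly reported for this function.
    print(shubert(-1.42513, -0.80032))
```

The product structure explains the large number of local minimizers: every pairing of a minimizer of one factor with a maximizer of the other gives a stationary point of the product.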

Problem 7. (Hartman function)

![]()

where ![]() is the ![]() th element of vector ![]() , ![]() and ![]() are the elements at the ![]() th row and the ![]() th column of matrices ![]() and ![]() , respectively.

![]()

For ![]() ,

![]()

The known global minimizer is ![]() so far.

For ![]() ,

![]()

![]()

The known global minimizer is ![]() so far.

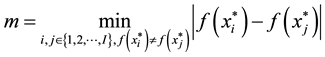

Generally, in order to illustrate the performance of the filled function method, it is necessary to record the total number of function evaluations of ![]() and ![]() until the algorithm terminates. The numerical results of the proposed algorithm are summarized in Table 1 for the above 7 problems.

Additionally, the proposed algorithm is compared with the algorithm presented in [11] . A series of minimizers obtained by the above two algorithms are recorded in Tables 2-14 for all testing problems.

The symbols used in the following tables are given first.

![]() : The initial point which satisfies ![]() .

![]() : An approximate global minimizer obtained by the proposed algorithm.

![]() : The total number of function evaluations of ![]() and ![]() until the algorithm terminates.

The initial value of ![]() is taken as 1 for all problems.

![]() : The iteration number in finding the ![]() th local minimizer of the objective function;

![]() : The ![]() th local minimizer;

![]() : The function value of ![]() ;

![]() : The algorithm proposed in this paper;

![]()

Table 1. Numerical results of all testing problems.

![]()

Table 2. Computational results for problem 1 with ![]() .

![]()

Table 3. Computational results for problem 1 with ![]() .

![]()

Table 4. Computational results for problem 1 with ![]() .

![]()

Table 5. Computational results for problem 2 with initial point ![]() .

![]()

Table 6. Computational results for problem 2 with initial point ![]() .

![]()

Table 7. Computational results for problem 3 with initial point ![]() .

![]() : The algorithm proposed in reference [11].

According to Tables 2-13, our algorithm is effective, and it is affected by the initial value of ![]() and the selection of ![]() . The larger the initial value of ![]() , the fewer local minimizers will be found and the lower the computational cost will be; meanwhile, if the function value of the current local minimizer is close to that of the global minimizer, then a sufficiently small ![]() is necessary, while a relatively large initial value of ![]() will increase the number of iterations. Therefore, the initial value of ![]() and ![]() need to be selected carefully. The selection of ![]() ensures the accuracy of the global minimizer, so a sufficiently small ![]() and an appropriately small initial ![]() should be selected, or ![]() contained in the algorithm should be taken as small as possible.

![]()

Table 8. Computational results for problem 3 with initial point ![]() .

![]()

Table 9. Computational results for problem 3 with initial point ![]() .

![]()

Table 10. Computational results for problem 4.

![]()

Table 11. Computational results for problem 5.

![]()

Table 12. Computational results for problem 6.

![]()

Table 13. Computational results for problem 7.

![]()

Table 14. Computational results for problem 7 with ![]() .

5. Concluding Remarks

The filled function method is an efficient approach to global optimization. The existing filled functions have some drawbacks: some are non-differentiable, some contain more than one adjustable parameter, and some contain ill-conditioned terms. These drawbacks may cause the algorithm to fail or to have difficulty in searching for the global optimal solution. In order to overcome these shortcomings, a new filled function with only one parameter is proposed in this paper. Although the proposed filled function is non-differentiable at some points, it can be approximated uniformly by a continuously differentiable function. The inherent shortcomings of the approximate function can be eliminated by simple treatment. The effectiveness of the new filled function method is demonstrated by numerical experiments on some test optimization problems.

Acknowledgements

This work was supported by the National Natural Science Foundation of China (No. 11161001, No. 61203372, No. 61402350, No. 61375121), the Research Foundation of Jinling Institute of Technology (No. jit-b-201314 and No. jit-n-201309) and the Ningxia Foundation for key disciplines of Computational Mathematics.