1. Introduction

The importance of biometric recognition grows every day, and different technologies have been developed to meet the differing requirements of users [1]. During the global epidemic, masks have become a necessity in order to reduce contact between people, so among biometric technologies palmprint recognition is easier to apply than face recognition. Palmprints were first used as a biometric feature for personal identification about a decade ago [2] and have since been widely studied for their uniqueness, cost-effectiveness, user-friendliness, and high accuracy [3]. Various palmprint recognition systems have been developed and many palmprint recognition algorithms have been proposed [4] [5] [6] [7]. Extraction of the palmprint region of interest (ROI) is also an important aspect of palmprint recognition research. Kumar et al. [8] applied a distance transform to the binarized palmprint image after correcting the deflection angle, took the point with the largest Euclidean distance as the center, and cropped a fixed-size rectangle (300 × 300) around it as the ROI. The principle of this method is simple, but the resulting ROI is too large and the center point is prone to positioning errors. Saliha et al. [9] calculated the boundaries by determining the limits of the palm contour on the four sides and extracted key points by calculating local minima. However, this method is prone to interference that corrupts the local minima, giving inaccurate key point positions and therefore ROI cropping errors. Lin et al. [10] proposed an inscribed-circle-based ROI extraction algorithm, in which the ROI is located and extracted using the maximum inscribed circle and the centroid method. Its disadvantage is that the largest inscribed circle of the palm sometimes cannot be detected accurately, resulting in poor ROI extraction performance. Each of these extraction methods leaves room for improvement, and different palmprint ROI extraction methods continue to be used in pursuit of better feature extraction.

In recent years the wavelet transform has been used as a powerful analysis tool in several fields and is widely applied in image processing and retrieval [11] [12]. Wavelet analysis overcomes the single-resolution limitation of the short-time Fourier transform: its multi-resolution analysis can effectively distinguish signal from noise, which makes it a necessary tool for image analysis and enables better image processing. With their good multi-scale and time-frequency localization characteristics, wavelets have played an important role in image analysis. Image retrieval based on wavelet properties has been applied in many ways [13] [14] [15]. For example, Prathibha et al. [16] applied wavelet transform retrieval to medical images, Wong et al. [17] combined wavelet transform retrieval with face images, and Qiang et al. [18] used the wavelet transform for tire pattern image retrieval. In this paper, the palmprint region of interest and the wavelet transform are combined for image retrieval.

In this paper, a two-dimensional wavelet transform is applied to the palmprint region of interest (ROI) to decompose it at different scales. The processed image is divided into a low-frequency part and high-frequency parts, and different feature vector combinations are obtained, which allows the palmprint image to be analyzed and retrieved more effectively. The rest of this paper is organized as follows. Section 2 presents the principles and techniques of the related work. Section 3 presents the experiments and results. Section 4 concludes the article.

2. Fundamental Principles and Critical Technologies

First, the desired palmprint image is acquired and pre-processed. The ROI is then extracted from the palmprint image, the extracted ROI is wavelet transformed, and feature vectors are extracted. Finally, the retrieval rate is calculated. The flow chart is shown in Figure 1.

During ROI extraction, the palmprint region of interest is located using the distance between the center of mass and the boundary, and rotation correction is performed on the basis of the tangent, which is defined as follows:

(1)

Wavelet analysis is a time-frequency analysis method developed on the basis of the Fourier transform and the short-time Fourier transform. When a Fourier spectrum is displayed directly as an image, the limited dynamic range of the display device frequently cannot meet the requirements, and many dark details are lost. A logarithmic transformation is therefore generally used to enhance the darker parts of the image, expanding the dark pixel values while compressing the high values. The expression for the logarithmic function is:

$$t = c \log(1 + s) \tag{2}$$

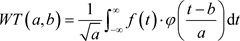

where c is the proportional scale constant, s is the source gray value and t is the transformed target gray value. It can be seen from the function curve that the slope is higher when the gray value is low, and the slope is low when the gray value is high. The wavelet transform inherits and develops the idea of time-frequency localization of the short-time Fourier transform. The expression of the wavelet transform is:

$$W_f(a, b) = \frac{1}{\sqrt{a}} \int_{-\infty}^{+\infty} f(t)\, \overline{\psi}\!\left(\frac{t - b}{a}\right) dt \tag{3}$$

where f(t) is the signal being analyzed; ψ is the wavelet function and the overline denotes its conjugate; a is the scale, which describes the dilation of the wavelet function; and b is the shift, which describes its translation. The reciprocal of the scale is proportional to the frequency, and the shift b corresponds to time.

Figure 1. Palmprint image retrieval procedure diagram.

The Haar function is one of the simplest and earliest orthogonal wavelet basis functions with compact support. It is formulated as follows:

$$\psi(t) = \begin{cases} 1, & 0 \le t < \tfrac{1}{2} \\ -1, & \tfrac{1}{2} \le t < 1 \\ 0, & \text{otherwise} \end{cases} \tag{4}$$

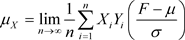

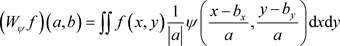

The two-dimensional wavelet transform is developed on the basis of the one-dimensional wavelet. The two-dimensional wavelet transform expression is:

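$$W_f(a; b_x, b_y) = \frac{1}{a} \iint f(x, y)\, \overline{\psi}\!\left(\frac{x - b_x}{a}, \frac{y - b_y}{a}\right) dx\, dy \tag{5}$$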

where bx and by are the translation factors in two dimensions. A two-dimensional wavelet decomposition is performed on the image, and the decomposition equation is as follows:

(6)

(7)

(8)

(9)

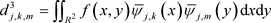

where $f(x, y)$ is the original image; $L^2(R^2)$ is the space of square-integrable two-dimensional functions; $\varphi$ is the scale basis function; $\psi$ is the wavelet basis function; $\overline{\varphi}$ is the conjugate of $\varphi$; $\overline{\psi}$ is the conjugate of $\psi$; d1, d2, d3 are the high-frequency components in the horizontal, vertical and diagonal directions, respectively; C is the low-frequency component; and j denotes decomposition to the j-th level.
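As a small illustration of the logarithmic transformation in Equation (2), the sketch below applies t = c log(1 + s) to a tiny grayscale array. The function name `log_transform` and the choice of c (picked so that the maximum gray level maps to itself) are assumptions made for this example; the paper does not state which value of c it uses.

```python
import math

def log_transform(gray, max_level=255):
    """Apply t = c * log(1 + s) to a 2D list of gray values in [0, max_level]."""
    # Assumption: c is chosen so that max_level is mapped back to max_level.
    c = max_level / math.log(1 + max_level)
    return [[round(c * math.log(1 + s)) for s in row] for row in gray]

# A tiny 2 x 3 "image": dark values are stretched, bright values are compressed.
image = [[0, 10, 50], [100, 200, 255]]
enhanced = log_transform(image)
```

With this choice of c the darkest and brightest levels are left in place, while low gray values are raised far more than high ones, which matches the slope behaviour described for the function curve.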

3. Experiments and Results

3.1. Image Acquisition

The images used in this paper come from the CASIA palmprint image database of the Institute of Automation, Chinese Academy of Sciences. All are grayscale images with a uniform black background and few interfering factors. The first 200 groups of left-hand palmprint images were used as the experimental images, with 6 images per group. The database images are shown in Figure 2. The images in this database were acquired at varying angles and positions, which is helpful for testing the feasibility of the program.

3.2. ROI Extraction

Palm images vary considerably in lighting, angle, and orientation during acquisition, and images in the same group may differ by rotation or translation. To keep the orientation of the extracted region of interest consistent and to reduce noise, the palm image must be pre-processed first. The preprocessing and ROI extraction procedure used in this experiment is as follows.

1) Binarization sets the gray level of every point of the palm image to 0 or 255, giving the whole image a distinct black-and-white appearance, as shown in Figure 3. An appropriate threshold is selected for the grayscale image with 256 brightness levels so that the binarized image still reflects the overall and local characteristics of the image. The purpose of binarization is to extract the edges of the palm image; the edge data are stored in an array.

2) Find the center-of-mass coordinates of the image, relate them to the edge coordinates of the hand, and calculate the Euclidean distance from each edge point to the center of mass:

$$d_i = \sqrt{(x_i - x_c)^2 + (y_i - y_c)^2} \tag{10}$$

where $(x_i, y_i)$ is the i-th edge point and $(x_c, y_c)$ is the center of mass.

3) The edge distance data are smoothed, and their maxima and the corresponding positions are found to obtain the rough coordinates of the region of interest. The top and bottom coordinates among those found are sorted and the Euclidean distance between them is calculated. The image is then rotated and corrected so that its orientation is consistent and tracks the center-of-mass trajectory, and the top and bottom coordinates are located again in the rotated image. Finally, the ROI position and size are determined, as shown in Figure 4.

Figure 3. Palmprint image binarization: (a) histogram of the original grayscale image; (b) binarized palm image.
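Steps 1) and 2) of the procedure above can be sketched in plain Python. This is only an illustration under stated assumptions: the function names, the fixed threshold, and the 4-neighbour definition of an edge pixel are hypothetical choices for the example, since the paper does not specify how the threshold or the contour is obtained.

```python
import math

def binarize(gray, threshold):
    """Set every pixel to 0 or 255 using a fixed threshold (assumed to be given)."""
    return [[255 if p > threshold else 0 for p in row] for row in gray]

def centroid(binary):
    """Center of mass (row, col) of the foreground pixels; needs at least one."""
    pts = [(r, c) for r, row in enumerate(binary) for c, p in enumerate(row) if p]
    n = len(pts)
    return (sum(r for r, _ in pts) / n, sum(c for _, c in pts) / n)

def edge_pixels(binary):
    """Foreground pixels with a 4-neighbour that is background or off the image."""
    h, w = len(binary), len(binary[0])
    edges = []
    for r in range(h):
        for c in range(w):
            if not binary[r][c]:
                continue
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                rr, cc = r + dr, c + dc
                if not (0 <= rr < h and 0 <= cc < w) or not binary[rr][cc]:
                    edges.append((r, c))
                    break
    return edges

def edge_distances(binary):
    """Euclidean distance from every edge pixel to the center of mass, Eq. (10)."""
    cr, cc = centroid(binary)
    return [(math.hypot(r - cr, c - cc), (r, c)) for r, c in edge_pixels(binary)]

# Toy 5 x 5 image: a bright 3 x 3 block on a black background.
gray = [[200 if 1 <= r <= 3 and 1 <= c <= 3 else 0 for c in range(5)]
        for r in range(5)]
binary = binarize(gray, threshold=128)
distances = edge_distances(binary)
farthest = max(distances)  # (distance, (row, col)) of the farthest edge point
```

On a real palm image the distance sequence would be taken along the contour and smoothed before searching for its maxima, as step 3) describes; the toy block here only shows the data flow.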

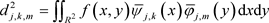

3.3. Wavelet Transform Retrieval

In this paper, two-dimensional wavelets are used to perform the transformation. The two-dimensional wavelet decomposition and reconstruction process is shown in Figure 5. The decomposition proceeds as follows. First, a 1D-DWT is performed on each row of the image to obtain the low-frequency component L and the high-frequency component H of the original image in the horizontal direction. Then a 1D-DWT is performed on each column of the transformed data to obtain four components: LL, which is low-frequency in both the horizontal and vertical directions; LH, which is low-frequency in the horizontal direction and high-frequency in the vertical direction; HL, which is high-frequency in the horizontal direction and low-frequency in the vertical direction; and HH, which is high-frequency in both directions. The reconstruction proceeds in reverse: first an inverse 1D-DWT is applied to each column of the transformed data, and then an inverse 1D-DWT is applied to each row to obtain the reconstructed image. The wavelet decomposition of an image is thus a process of separating the signal into a low-frequency part and directional high-frequency parts, and the LL component can be decomposed further as needed until the required scale is reached.

Figure 4. Rotationally corrected ROI image: (a) rotationally corrected image; (b) ROI frame selection; (c) ROI image.

Figure 5. Two-dimensional wavelet decomposition and reconstruction process diagram.
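The row-then-column decomposition and its inverse can be sketched with the orthonormal Haar wavelet in plain Python. This is a minimal illustration, not the implementation used in the experiments: the paper works in MATLAB with several filter banks (pkva, 9-7, 5-3, Burt, haar), whereas the sketch uses only the Haar filter, and all function names are hypothetical. Image width and height are assumed to be even at every level.

```python
import math

S = math.sqrt(2.0)

def haar_1d(x):
    """One level of the orthonormal 1D Haar DWT; len(x) must be even."""
    low = [(x[i] + x[i + 1]) / S for i in range(0, len(x), 2)]
    high = [(x[i] - x[i + 1]) / S for i in range(0, len(x), 2)]
    return low, high

def ihaar_1d(low, high):
    """Inverse of haar_1d."""
    out = []
    for l, h in zip(low, high):
        out += [(l + h) / S, (l - h) / S]
    return out

def transpose(m):
    return [list(col) for col in zip(*m)]

def haar_2d(img):
    """Rows first (giving L and H), then columns (giving LL, LH, HL, HH)."""
    row_pairs = [haar_1d(row) for row in img]
    L = [lo for lo, _ in row_pairs]
    H = [hi for _, hi in row_pairs]

    def columns(block):
        col_pairs = [haar_1d(col) for col in transpose(block)]
        return (transpose([lo for lo, _ in col_pairs]),
                transpose([hi for _, hi in col_pairs]))

    LL, LH = columns(L)  # horizontal low; vertical low / high
    HL, HH = columns(H)  # horizontal high; vertical low / high
    return LL, LH, HL, HH

def ihaar_2d(LL, LH, HL, HH):
    """Reconstruction: invert the column transform, then the row transform."""
    def icolumns(lo, hi):
        cols = [ihaar_1d(l, h) for l, h in zip(transpose(lo), transpose(hi))]
        return transpose(cols)

    L = icolumns(LL, LH)
    H = icolumns(HL, HH)
    return [ihaar_1d(l, h) for l, h in zip(L, H)]

def haar_pyramid(img, levels):
    """Decompose LL repeatedly; returns the final LL and per-level details."""
    details, ll = [], img
    for _ in range(levels):
        ll, lh, hl, hh = haar_2d(ll)
        details.append((lh, hl, hh))
    return ll, details

img = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
LL, LH, HL, HH = haar_2d(img)
restored = ihaar_2d(LL, LH, HL, HH)
coarse, details = haar_pyramid(img, levels=2)
```

Calling `haar_pyramid` with `levels=3` or `levels=4` on a larger ROI image would correspond to the three-scale and four-scale decompositions discussed in this section, again only for the Haar case.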

In this work, the palmprint region-of-interest images are transformed at three scales and four scales with pyramidal directional filter bank decomposition. Statistical features are then computed from the wavelet-decomposed data: standard deviation, smoothness, absolute value mean, variance, roughness, and kurtosis. The features for each scale are extracted, and the Canberra distance between feature vectors is calculated in order to obtain the average retrieval rate. For two n-dimensional feature vectors x and y, the Canberra distance is:

$$d(x, y) = \sum_{i=1}^{n} \frac{|x_i - y_i|}{|x_i| + |y_i|} \tag{11}$$
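The feature computation and the Canberra distance of Equation (11) can be sketched as follows. Only four of the six statistics are shown (standard deviation, absolute value mean, variance, kurtosis), using their usual population definitions; smoothness and roughness are omitted because the paper does not give their formulas. The function names and the convention of skipping terms whose denominator is zero are assumptions of this example.

```python
import math

def features(coeffs):
    """[std, mean |value|, variance, kurtosis] of a flat list of coefficients."""
    n = len(coeffs)
    mean = sum(coeffs) / n
    var = sum((v - mean) ** 2 for v in coeffs) / n
    std = math.sqrt(var)
    mean_abs = sum(abs(v) for v in coeffs) / n
    # Kurtosis as the fourth central moment divided by the squared variance.
    kurt = (sum((v - mean) ** 4 for v in coeffs) / n) / var ** 2 if var > 0 else 0.0
    return [std, mean_abs, var, kurt]

def canberra(x, y):
    """Canberra distance between two equal-length feature vectors, Eq. (11)."""
    total = 0.0
    for a, b in zip(x, y):
        denom = abs(a) + abs(b)
        if denom:  # assumption: a 0/0 term contributes nothing
            total += abs(a - b) / denom
    return total

# Two hypothetical subband coefficient lists standing in for two images.
query_features = features([1, 1, 3, 3])
other_features = features([2, 2, 6, 6])
distance = canberra(query_features, other_features)
```

In a full pipeline one such feature vector would be built per subband and per scale and the vectors concatenated before the distance is computed.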

The proposed scheme is implemented in MATLAB to retrieve images from the database. The database used contains palmprints of 200 different people, with 6 palmprint images per person, and the size of the images is 192 × 192 pixels. First, the feature vector of each image is calculated and multi-feature vector combinations are established. Next, distances are calculated both for single features and for multi-feature vectors. Finally, the retrieval rate is calculated from the Canberra distances. The retrieval rate is evaluated within the 100 most similar images at cutoffs of 6, 20, 30, 40, 50, 60, 70, 80, 90, and 100 images, and the average retrieval rate is taken at each cutoff. In the experiments, different wavelet scales and filter combinations were tested. The filters are pkva, 9-7, 5-3, Burt, and haar. Table 1 compares the retrieval rates of different filter banks for three-scale transformations, and Table 2 compares the retrieval rates of different filter banks for four-scale transformations. In the tables, R1, R2, R3, R4, R5, and R6 represent standard deviation, smoothness, absolute value mean, variance, roughness, and kurtosis, respectively.
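The retrieval rate calculation can be sketched as below. The paper does not define the rate formally, so the definition used here is an assumption: for each query, the images are ranked by distance (the query itself included), and the rate is the fraction of the query's own class found among the top N results, averaged over all queries. The function name and the toy data are hypothetical.

```python
def retrieval_rate(dist, labels, top_n):
    """Average fraction of each query's class retrieved among its top_n nearest images."""
    rates = []
    for q, row in enumerate(dist):
        # Indices of the top_n images closest to query q (q itself has distance 0).
        ranked = sorted(range(len(row)), key=lambda i: row[i])[:top_n]
        hits = sum(1 for i in ranked if labels[i] == labels[q])
        rates.append(hits / labels.count(labels[q]))
    return sum(rates) / len(rates)

# Toy data: four images from two people, described by a single feature value.
values = [0.0, 0.1, 1.0, 1.2]
labels = [0, 0, 1, 1]
dist = [[abs(a - b) for b in values] for a in values]

rate_top2 = retrieval_rate(dist, labels, top_n=2)
rate_top1 = retrieval_rate(dist, labels, top_n=1)
```

For the experiments described here, `dist` would hold Canberra distances between the feature vectors of all 1200 images, each class would contain 6 images, and `top_n` would take the values 6, 20, 30, and so on up to 100.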

The average retrieval rates for different feature vector combinations differ slightly, and in this scheme the retrieval rates of all three-scale wavelet transforms are lower than those of the four-scale transforms. The feature combination of smoothness, absolute value mean, variance, roughness, and kurtosis gives a higher image retrieval rate than the other feature combinations. Combining haar with pkva, 9-7, or 5-3 gives the same retrieval rate. Among all filter combinations, Burt with pkva has the lowest retrieval rate. The highest retrieval rate, about 0.94, is obtained with the pkva and pkva filter combination at four scales, using the feature vector combination of smoothness, absolute value mean, variance, roughness, and kurtosis.

Table 1. Comparison of retrieval rates of different filter banks at three scales.

Table 2. Comparison of retrieval rates of different filter banks at four scales.

4. Conclusion

In this paper, a wavelet transform ROI palmprint image retrieval system based on image processing is proposed. The system determines the palmprint region of interest, decomposes it with a two-dimensional wavelet transform, obtains the low-frequency and high-frequency components through three-scale and four-scale decomposition, extracts the ROI feature vectors under different filter combinations, applies a distance criterion to the extracted feature vectors, and combines different feature vectors to calculate the retrieval rate. The experimental results show that the highest retrieval rate, 0.9374, is obtained with the feature vector combination of absolute value mean, kurtosis, variance, roughness, and smoothness for ROI images transformed at four scales with the pkva and pkva filter combination. The retrieval system is intended to extract feature vectors more effectively and to lay a foundation for palmprint image recognition and its applications. The system still has considerable room for improvement: both the speed of palmprint processing and the retrieval rate could be raised by further improving the wavelet transform and the feature extraction methods.