An Ensemble Learning Recommender System for Interactive Platforms ()

1. Introduction

Nowadays, interactive platforms such as Youtube, Amazon and Deezer generate a huge amount of data. Those data are often taken either from the clicks or the views or the comments of the users during their sessions of interactions with the system. Due to the overwhelmingness of the amount of those data, it becomes more difficult to compute the best items to recommend to users. But on another hand, it is a gift because, the more we have historical interactions of users, the more we could be precise in predictions. Such Recommender Systems that make use of the past interactions of users are generally classified in the collaborative filtering group [1] [2] [3]. In this paper, we suggest a combination of two techniques of this group in order to improve the accuracy of the resulting system. Such an approach is called an Ensemble Learning approach [4] [5]. The research purposes are to set up a system of recommendations based on hybrid filtering to reduce the user’s effort in finding items that would interest him, to offer items that he would not have thought of, to increase the time spent by the user on the system, to promote unpopular items and to assist the user in the choice of items. Hence, this paper is organized as follows: A state of the art of Collaborative Filtering techniques is presented in Section 2, Section 3 presents our algorithms, and Section 4 presents our results and finally a conclusion with some remarks and prospects.

2. State of Art of Collaborative Filtering

Before starting with the algorithms, let’s introduce the following conventions. We denote:

•

: the set of users;

•

: the set of items;

• R: the

matrix of ratings (so

represents the rating of the user

to the item

) (note that for use, in R lines represent the users, and in columns we have the items. And when a user has not already rated an item,

);

•

: the predicted rating of the user

to the item

;

•

: the set of items rated by the user

;

•

: the set of users that rated the item

;

•

: the average of ratings for the user

(calculated on

);

•

: the average of ratings for the item

(calculated on

).

2.1. Memory-Based Filtering

Memory-based filtering approaches compute predicted ratings using the similarities between either users or items [6] [7]. They are divided into two groups, user-based filtering and item-based filtering.

The user-based filtering consists in using the similarities between a given user and the others to predict his rating for a given item. The prediction is given by the following formula.

[8] (1)

where

represents the similarity between the user

and the user b, N can be the set of users whose similarities with the user

are positive.

The item-based filtering consists in using the similarities between a given item and the others to predict its rating from a given user. The prediction is given by the following formula.

[9] (2)

where

represents the similarity between the item

and the item p, M can also be the set of items which similarities with the item

are positive.

There are several ways to compute the similarity between elements. The most common is the Pearson Correlation Coefficient [9], the cosine similarity and the cosine adjusted similarity [8]. The Pearson Correlation Coefficient for the user-based filtering is given by the formula

(3)

where

is the set of items both rated by

and

.

Memory-based filtering has the advantage of being easy to implement and its database is relatively easy to update when getting new information. Nevertheless, it is very slow because it uses all the databases for every prediction and it produces imprecise predictions.

2.2. Model-Based Filtering

Model-based filtering consists in building a model that tends to approximate the rating patterns of users [10] [11], in order to predict their future ratings. It is usually based on Machine Learning techniques such as classification, clustering, neural networks, and so on. A model-based filtering technique could also rely on a probabilistic model. In this case, the model would use the historical interactions of users to build a probabilistic model that would help to compute the probability of certain interactions for a certain user, and based on those probabilities determine the most relevant items for users.

A common model-based filtering technique is matrix factorization. The idea here is to break down R into two Matrices, P a m × k matrix and Q a k × n matrix so that we have

for non-zero entries in R. After that, the zero entries in R are predicted by their corresponding entries in

. The optimal value of k is searched during the training of the model [7] [9] [12].

3. Our Approach

3.1. The Probabilistic Model

It consists first of all storing user activities by sessions. We classify a user’s activity into groups depending on the logic of the platform: the clicks, the research, the reviews and the bookmarks if it exists in the platform. Thus, we evaluate the probability that an item gets interesting for a user based on his historical actions. This probability is given by the law of total probability. Thus, for each user, we have a list of items (with the probability of interest). This list is ranked in descending order of probability. Let’s define the following events:

: “The user u is interested in the item o”;

: “The user u clicks on at least one item”;

: “The user u bookmarks at least one item”;

: “The user u makes a review about at least one item”.

Therefore, by assuming that the events

are independent, the probability that an item o is among the recommendations of a user u during his session defined by:

(4)

We consider that the number of occurrences of each of the actions

follow a poisson distribution. So we have to estimate the parameter

of each of these distributions.

For that, we consider the samples

where

and

is the random variable corresponding to the number of occurrences of the action i by the user u during the session j. We assume that given an user u and an action i, the random variables

are independent and identically distributed.

So, by the maximum likelihood estimator of the parameter of a poisson distribution, we have

(5)

Then,

(6)

3.2. The Matrix Factorization

It consists of grouping in matrix form the explicit notes that users have given to items. It will be using this matrix whose number of lines represent the number of users, and the number of columns that of items; to predict a note to items that have not been voted by the users i.e. to assign a non-zero value (if possible) to the matrix cells having the value 0. The resulting matrix of the prediction will have to take many parameters to know:

• Average marks awarded to all items;

• The average of the scores attributed to a given item;

• The average of the ratings voted by a given user;

• Influence areas of a point of interest.

So, we use the latent factor method which is a type of matrix factorization. The factorization of the matrix (M) consists in proposing two matrices, one (P) representing the users and the other the items (Q), which, multiplied together, will produce approximately this matrix. So, if a matrix is m × n, where m is the number of users and n is the number of items, we need an m × d matrix and a d × n matrix as factors, where d is chosen small enough for the calculation to be effective. After, we have taken d = 3. The score predicted by a given user for a given item is then the scalar product of the vector representing the user and the vector representing the item. This is expressed as follows:

[13] (7)

represents the explicit note that the user u gave to the item o. Then,

.

By adding a bias in the mix, the corrected prediction is given by:

[13] (8)

The formula introduces three new variables:

•

: the average score given on all the items by all users;

•

: the bias introduced by item. This is the difference

between and all the notes given to the item o;

•

: the bias introduced by the user. This is the difference between

and all the notes given to the user u.

But, the predictions do not take yet into account the location description above. The final equation is:

(9)

is a vector which represents the activity areas of user u. Each

indicates the possibility that a user u will appear in the location k of items. Thus,

indicates the influence of a point of interest o at a location k. It is given by standard normal distribution.

[14] (10)

is given by Haversine formula [15] knowing coordinates points of an item place and location k.

So, get value of

and

means to minimize the following equation:

(11)

is the explicit note the useru gave at the item o. If the user does not rate that item,

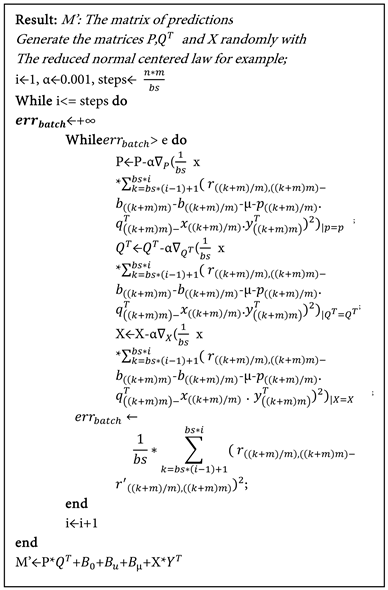

is equal to 0. We can easily minimize with the fixed pitch gradient. The main disadvantage of this algorithm is that updating the model so frequently is more computationally expensive and takes a lot longer to build models with large data sets. A much efficient algorithm is the descent of the stochastic gradient called “mini-batch gradient descent”. It consists in dividing the learning data set into small fixed-size batches used to calculate the error of the model coefficients (Algorithm 1).

While considering the following annotations:

•

: The matrix of predictions noted

;

• M: matrix of explicit notes. These are the notes of items voted by users noted

;

•

: matrix which each column represents a vector which represents the influence areas of point-of-interest noted

;

Algorithm 1. Custom mini bath gradient descent.

•

: vector which the average marks awarded to items;

•

: vector which represents the average explicit user notes;

•

: total average of items;

•

: matrix with the element have the same value

;

•

: the tolerance strictly greater than 0 and very small;

• u represents a user; i represents an item; n represents the number of users;

• m represents the number of items;

• bs represents the size of batch;

•

is mathematical expression which means of f in x applied in a.

3.3. The Combination Approach

The recommendation system uses a combination of the probabilistic model and the matrix factorization approach. Before predicting an o item on a user u, the probabilistic model predicts

score; and the matrix factorization predicts

score. The combination will predict

score which consists of averaging all predicted scores for the recommendation of item o to user u (Algorithm 2).

Algorithm 2. Top recommends.

While considering the following annotations:

• u: a user;

• o: an item;

•

: list of the items predicted by the matrix factorization to the user u. We denote

a score of item o of this list;

•

: list of the items predicted by the probabilistic model to the user u. We denote

a score of item o of this list;

•

: list of the items predicted by the combination approach to the user u at the last session. We denote

a score of item o of this list;

•

: list of the items predicted by the combination approach to the user u at a new the session. We denote

a score of item o of this list;

•

and

are the minimum and maximum value of

respectively;

n is the number of top items to recommend to the user u.

4. Our Results

The quality of a recommendation algorithm can be evaluated using different types of measurement

• Statistical accuracy metrics, which evaluate the accuracy of a filtering technique by comparing the predicted ratings directly with the actual user rating. One most popular measures for estimating the accuracy of grade predictions is Root Squared Mean Error (RMSE). Let I be the set of items and U that of the users. Let

be a test set of items,

a user note prediction u for item i and

the actual score assigned by the user u for the item i.

[11] (12)

• Decisions support accuracy metrics. We focuse on the following metrics: accuracy, precision, recall and f1 Score. These metrics help users in selecting items that are of very high quality out of the available set of items. Table 1 represents the possible outcomes after the recommendation process is applied.

• Accuracy is a ratio of correctly predicted observations to the total observations. Precision is the ratio of correctly predicted positive observations to the total predicted positive observations. Recall is the ratio of correctly predicted positive observations to the total useful predicted observations and f1 Score is the weighted average of Precision and Recall.

[11] (13)

[11] (14)

[11] (15)

[11] (16)

The assessment of the resulting model is made solely by the model produced by the matrix factorization method. So the problem we encountered was in the number of latent vectors. The first problem concerns the RSME error as a function of the number of latent vectors. And the second concerns the RMSE error as a function of the number of learning iterations.

From Figure 1, it is clear that the lower the number of latent vectors, the better we have RMSE error (Root Mean Squared Error). In Figure 2, we visualize the error RMSE according to the number of iterations for 3 latent vectors after each 100 iterations. And in Figure 3, we visualize the error RMSE according to the number of iterations for 10 latent vectors after each 50 iterations. We observe these two figures, the diminution of the error. Also, this decrease is almost similar. But in Figure 1, we notice a better RMSE error for 3 latent vectors compared to 10 latent vectors. This leads us to fix the number of latent vectors at 3; which is a wise choice since the number of latent vectors chosen must be the smallest possible. Knowing that we have opted for 3 latent vectors, Figure 4 gives

![]()

Table 1. Outcomes after the applied of recommendation process.

Legend: Appreciated: properly predicted model; Not appreciated: improperly predicted model; Recommended: exact outcome; Not recommended: incorrect outcome.

![]()

Figure 1. RMSE according to the number of latent vectors.

![]()

Figure 2. RMSE based on the number of learning iterations for 3 latent vectors.

![]()

Figure 3. RMSE based on the number of learning iterations for 10 latent vectors.

![]()

Figure 4. Value between 0 and 1 depending on the type of evaluation measures for 3 latent vectors.

us the evaluation measures for this collaborative filtering. We find better values for rmse, precision, recall and f1 score. The evaluation measure accuracy is bad because the matrix factorization method merely predicts a matrix so as to minimize a certain error. So it is possible to find no good prediction since this method is concerned to minimize a certain error in a local minimum.

5. Discussion

In the family of collaborative filtering techniques in recommender systems, the most popular is the matrix factorization technique. Most of the time, a bias is added to the technique. This bias is to adjust the fact that some users give ratings more elevated than others and also the fact that some items have more ratings than others [16]. In this work, we suggested a combination of a matrix factorization algorithm with a bias like the one used in [16] to a probabilistic model. This aims to get the best of both of these techniques in order to improve the recommendation accuracy. We got a better result than the matrix factorization alone, but we need to improve our probabilistic model in order to consider more events from the user or to get a better estimation of the law followed by those events.

6. Conclusion

In this paper, we suggested a combination of two standard approaches to recommender systems in order to improve the accuracy of their predictions. Indeed, we got better results than the standard techniques that we combined which were the matrix factorization and a probabilistic model. However, this work has some limitations. In fact, the set of events used to compute the probability in our probabilistic model is not a full set of events and also a parallelization of our matrix factorization algorithm could reduce the execution time.

Acknowledgements

We also thank Pr. Thomas BOUETOU BOUETOU, head of the Department of Computer Engineering for providing us with an adequate working area. We also thank the anonymous referees for their useful suggestions.