Algorithm for Gesture Recognition Using an IR-UWB Radar Sensor

1. Introduction

Impulse radio ultra-wideband (IR-UWB) technology detects moving objects by transmitting and receiving impulse signals of extremely wide bandwidth [1]. IR-UWB has many attractive features, such as high range resolution, good penetrability [2], and low power consumption. Owing to these features, IR-UWB has received a great deal of attention in many application fields, for example ranging and positioning systems [3] [4], human detection [5] [6], and vital sign monitoring [7].

Human gesture recognition has long been a popular research topic. Gesture recognition algorithms using other sensors, such as cameras and RFID, have been studied for years, and many of them are already in practical use. These approaches, however, raise problems for users, for example privacy concerns and inconvenience. IR-UWB radar can avoid these problems. Nevertheless, research on gesture recognition using IR-UWB radar has so far been rare. Given the features of IR-UWB technology, gesture recognition using IR-UWB radar is very likely achievable. In this paper, a gesture recognition algorithm using an IR-UWB radar sensor is presented. The algorithm mainly observes and analyzes the moving direction and moving distance of the hand, and the frontal surface area of the hand facing the IR-UWB radar sensor. The algorithm can recognize 6 different hand gestures and performs well according to the experimental results.

The rest of this paper is organized as follows. Section 2 describes the signal model. Section 3 introduces our gesture recognition algorithm. Section 4 analyzes the experimental results. Finally, Section 5 concludes the paper.

2. Signal Model

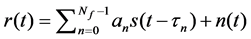

The signal received from the IR-UWB radar sensor can be represented as a summation of the multipath signals, target signals, and additive white Gaussian noise (AWGN) as follows [1]:

$$r(t) = \sum_{n=1}^{N_{path}} a_n \, s(t - \tau_n) + n(t) \qquad (1)$$

In Equation (1), $a_n$ and $\tau_n$ represent the amplitude and time delay of the nth received signal, respectively, $n(t)$ is the white Gaussian noise of the channel, $s(t)$ is the transmitted template signal, and $N_{path}$ is the number of signal paths.
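To make the signal model concrete, the following minimal sketch synthesizes a received signal as a sum of scaled, delayed copies of a template pulse plus white Gaussian noise. The Gaussian pulse shape, the pulse width, the sampling grid, and the function names `gaussian_pulse` and `received_signal` are assumptions chosen for illustration; they are not taken from the paper or from the radar hardware.

```python
import numpy as np

def gaussian_pulse(t, width=0.5e-9):
    """Hypothetical template signal: a Gaussian-shaped pulse (assumed shape)."""
    return np.exp(-(t / width) ** 2)

def received_signal(t, amplitudes, delays, noise_std, rng):
    """Sum of scaled, delayed template copies plus white Gaussian noise."""
    r = np.zeros_like(t)
    for a_n, tau_n in zip(amplitudes, delays):
        r += a_n * gaussian_pulse(t - tau_n)   # one term per signal path
    return r + rng.normal(0.0, noise_std, size=t.shape)

rng = np.random.default_rng(0)
t = np.linspace(0.0, 20e-9, 2001)              # 20 ns window, 10 ps steps
amplitudes = [1.0, 0.6, 0.3]                   # assumed path amplitudes
delays = [4e-9, 9e-9, 15e-9]                   # assumed path delays in seconds

clean = received_signal(t, amplitudes, delays, noise_std=0.0, rng=rng)
noisy = received_signal(t, amplitudes, delays, noise_std=0.05, rng=rng)
```

Setting `noise_std` to zero isolates the multipath and target terms, which makes it easy to check that each path contributes a peak at its own delay.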

3. Gesture Recognition Algorithm

The gesture recognition algorithm in this paper is based on the moving direction of the hand and the change in the frontal surface area of the hand facing the IR-UWB radar sensor. The algorithm defines different gestures performed with only one hand and, while this hand moves, analyzes the location change and energy change of the hand part of the signal received from the radar, which is called the raw signal. Removing the clutter signal from the raw signal gives the background-subtracted signal.
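The paper does not state which clutter removal method is used. As one possible illustration, the sketch below estimates the clutter with an exponential moving average over successive frames and subtracts it from each raw frame. The function name `subtract_background`, the smoothing factor `alpha`, and the frame layout are assumptions, not the authors' implementation.

```python
import numpy as np

def subtract_background(frames, alpha=0.9):
    """Return background-subtracted frames using a moving-average clutter estimate.

    frames: 2-D array of shape (num_frames, num_range_bins) holding raw signals.
    alpha:  assumed smoothing factor; values near 1 update the clutter slowly.
    """
    frames = np.asarray(frames, dtype=float)
    clutter = frames[0].copy()              # start from the first raw frame
    out = np.empty_like(frames)
    for k, frame in enumerate(frames):
        out[k] = frame - clutter            # remove the current clutter estimate
        clutter = alpha * clutter + (1.0 - alpha) * frame
    return out

# Static scene for three frames, then a target appears in range bin 1.
raw = np.tile([1.0, 2.0, 3.0], (5, 1))
raw[3:, 1] += 5.0
subtracted = subtract_background(raw)
```

With this kind of estimator a static scene is cancelled out, while a newly appearing object stands out in the background-subtracted frames until it is gradually absorbed into the clutter estimate.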

To enter the main part of the gesture recognition algorithm, a person first has to stand still for a few seconds (to be distinguished from people who just walk by). After that, the person has to hold a hand out towards the radar sensor for a while, arm straight or bent, palm facing the radar sensor. This is considered the ready action, which distinguishes the user from people who just stand still in the observation range without doing anything. After these steps, the person can perform the further gestures discussed below. All of the gestures we designed for the proposed algorithm are 2-step gestures.

Detecting the presence of a human and a hand is the first important part of the gesture recognition algorithm. Here we compare the signal with a threshold that we determine. If a moving object appears in the observation range, the signal of that part exceeds the threshold, and we say that something moving is detected. If this lasts for a while and the part of the signal that exceeds the threshold is about the size of a human body, we say that a human body is detected. When analyzing the change in the frontal surface area of the hand facing the radar sensor, we use information from the raw signal such as the peak amplitude and the energy. By comparing the location change and energy change of the moving object in the raw signal, the gesture recognition algorithm can distinguish 6 kinds of gestures. The logic flow of the gesture recognition algorithm is as follows:

1) Human detection. Keep comparing the received signal with the threshold. When the moving object stays in one place for more than a few seconds, we recognize this object as a human body and go to step 2.

2) Ready action detection. Ignore the signals of the human body and the part behind it. Then, in the same way as in step 1, if the hand ready action is detected, go to step 3.

3) Hand gesture recognition. The 6 kinds of gestures we propose are all 2-step gestures, which means that after the ready action is detected, the hand should move two more times for the whole gesture process to be recognized by the gesture recognition system.

a) In the first sub-step, we analyze the moving direction of the hand. The hand can move forwards, move backwards, or stay in the same place during the next few seconds (keeping the palm facing the radar all the time).

b) In the second sub-step, we analyze the change in the frontal surface area of the hand (more details are given in the example below).

4) By combining the changes mentioned in step 3, many multi-step gestures can be created; in this paper we use our algorithm to recognize only 6 basic ones.
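The first two steps of the logic flow above can be sketched as a small state machine. This is a simplified illustration under stated assumptions, not the authors' implementation: the names `State`, `first_detection`, and `run_detection`, the dwell count `dwell_frames`, and the range-bin `tolerance` are hypothetical, and the body-size check described in the text is omitted.

```python
import numpy as np
from enum import Enum, auto

class State(Enum):
    WAIT_HUMAN = auto()   # step 1: waiting for a human who stands still
    WAIT_READY = auto()   # step 2: waiting for the hand ready action
    GESTURE = auto()      # step 3: ready for the 2-step gesture analysis

def first_detection(frame, threshold, stop=None):
    """Nearest range bin whose magnitude exceeds the threshold, or None."""
    hits = np.flatnonzero(np.abs(frame[:stop]) > threshold)
    return int(hits[0]) if hits.size else None

def run_detection(frames, threshold, dwell_frames, tolerance=2):
    """Run steps 1 and 2; return the state reached, the body bin, the hand bin."""
    state, human_bin, hand_bin = State.WAIT_HUMAN, None, None
    last_bin, dwell = None, 0
    for frame in frames:
        # In step 2, ignore the human body and everything behind it.
        stop = None if state is State.WAIT_HUMAN else human_bin
        bin_ = first_detection(frame, threshold, stop)
        # Count consecutive frames in which the detection stays in place.
        if bin_ is not None and last_bin is not None and abs(bin_ - last_bin) <= tolerance:
            dwell += 1
        else:
            dwell = 0
        last_bin = bin_
        if dwell >= dwell_frames:
            if state is State.WAIT_HUMAN:
                state, human_bin = State.WAIT_READY, bin_
            else:
                state, hand_bin = State.GESTURE, bin_
                break
            last_bin, dwell = None, 0   # restart the dwell count for step 2
    return state, human_bin, hand_bin

# Synthetic frames: a body at range bin 30, then a hand held out at bin 20.
demo = np.zeros((11, 50))
demo[:, 30] = 1.0
demo[6:, 20] = 1.0
result = run_detection(demo, threshold=0.5, dwell_frames=3)
```

Restricting the search to bins in front of the body is one simple way to realize "ignore the signals of the human body and the part behind it"; a real system would also need to tune the threshold and dwell time to the frame rate.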

Here we give a detailed description, with figures, taking one of the 6 gestures as an example. Figure 1 shows the experimental environment and the signal when no moving object is present in the observation range. Figures 2-7 show the 6 gesture processes step by step. We take the gesture process in Figure 2 as an example and explain it.

In Figure 2, a person stands in front of the IR-UWB radar sensor. After the human body is recognized, he holds out his arm with his palm facing the radar at position P1, as shown in the first picture in Figure 2. We define this position as P1 and the half-arm position as P2. After a while this ready action is recognized, and he then moves his palm to P2, as shown in the second picture. The system recognizes that the position of the hand has changed. He then flattens his palm within a few seconds, as shown in the third picture; we define this sub-gesture as G3. After all these steps are recognized, the result shows “P1−>P2−>G3”. If, at the last step of the gesture, he instead lowers the whole arm, as shown in the third picture in Figure 3, this sub-gesture is defined as End and the result is “P1−>P2−>End”.

Figure 1. Experimental environment and the signal when there is no moving object.

By combining these sub-gestures, we created 6 kinds of gestures. We define them as follows.

・ Gesture A: P1−>P2−>G3

・ Gesture B: P1−>P2−>End

・ Gesture C: P2−>P1−>G3

・ Gesture D: P2−>P1−>End

・ Gesture E: P1−>P1−>G3

・ Gesture F: P1−>P1−>End
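The six definitions above amount to a lookup from a recognized sequence of sub-gestures to a gesture label. A minimal sketch of that lookup follows; the names `GESTURES` and `classify` are hypothetical and only illustrate the mapping, not how the sub-gestures themselves are detected.

```python
# Mapping from (ready position, position after the first sub-step,
# final sub-gesture) to the gesture label defined in the list above.
GESTURES = {
    ("P1", "P2", "G3"): "A",
    ("P1", "P2", "End"): "B",
    ("P2", "P1", "G3"): "C",
    ("P2", "P1", "End"): "D",
    ("P1", "P1", "G3"): "E",
    ("P1", "P1", "End"): "F",
}

def classify(ready_position, next_position, final_sub_gesture):
    """Return the gesture label for a recognized sequence, or None if undefined."""
    return GESTURES.get((ready_position, next_position, final_sub_gesture))

print(classify("P1", "P2", "G3"))   # prints: A
```

Sequences outside the six defined combinations, such as a hand that starts and stays at P2, return `None` in this sketch because the paper does not assign them a gesture.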

4. Experimental Results

Experimental results of the gesture recognition algorithm are shown in Table 1. The experiments were performed in a classroom at Hanyang University. The NVA6100 IR-UWB chip from Novelda was used to generate the impulse signals, together with a commercial pseudo-monostatic antenna with an opening angle of 45°. The observation range in the experiments was 2 meters. We performed 50 trials for each of the proposed gestures. The success rates were high in general.

Table 1. Experimental results of the 6 proposed gestures.

5. Conclusion

We have proposed a gesture recognition algorithm using an IR-UWB radar sensor. In this algorithm, we analyze the direction change and the frontal surface area of the hand facing the radar sensor, and we proposed 6 kinds of gestures. Through experiments, we demonstrated that the algorithm recognizes the gestures well, with success rates ranging from 86% at the lowest to 96% at the highest.

Acknowledgements

This research was supported by “Development of convenience improvement technology for passengers in the metro station (15RTRP-B067918-03)” of the Railway Technology Research of the Ministry of Land, Infrastructure and Transport (MOLIT).