A New Conjugate Gradient Projection Method for Solving Stochastic Generalized Linear Complementarity Problems ()

Received 2 May 2016; accepted 10 June 2016; published 13 June 2016

1. Introduction

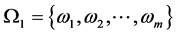

Suppose  is a probability space with

is a probability space with ; P is a known probability distribution. The stochastic generalized linear complementarity problems (denoted by SGLCP) is to find

; P is a known probability distribution. The stochastic generalized linear complementarity problems (denoted by SGLCP) is to find , such that

, such that

(1)

(1)

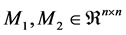

where  and

and  for

for , are random matrices and vectors. When

, are random matrices and vectors. When , stochastic generalized linear complementarity problems reduce to the classic Stochastic Linear Complementarity Problems (SLCP), which has been studied in [1] - [7] . Generally, they usually apply the Expected Value (EV) method and Expected Residual Minimization (ERM) method to solve this kind of problem.

, stochastic generalized linear complementarity problems reduce to the classic Stochastic Linear Complementarity Problems (SLCP), which has been studied in [1] - [7] . Generally, they usually apply the Expected Value (EV) method and Expected Residual Minimization (ERM) method to solve this kind of problem.

If  only contains a single realization, then (1) reduces to the following standard Generalized Linear Complementarity Problem (GLCP), which is to find a vector

only contains a single realization, then (1) reduces to the following standard Generalized Linear Complementarity Problem (GLCP), which is to find a vector  such that

such that

,

,

where  and

and .

.

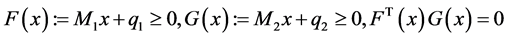

In this paper, we consider the following generalized stochastic linear complementarity problems. Denote , to find an

, to find an  such that

such that

![]()

![]() (2)

(2)

Let![]() , where

, where![]() ,

, ![]() ,

,![]() . Then (2) is equivalent to (3) and (4)

. Then (2) is equivalent to (3) and (4)

![]() (3)

(3)

![]() (4)

(4)

In the following of this paper, we consider to give a new conjugate gradient projection method for solving (2). The method is based on a suitable reformulation. Base on the Fischer-Burmeister function, x is a solution of (3)![]() , where

, where

![]() .

.

Define

![]() .

.

Then solving (3) is equivalent to find a global solution of the minimization problem

![]() .

.

So, (3) and (4) can be rewritten as

![]() , (5)

, (5)

where

![]() ,

,

![]() is slack variable with

is slack variable with![]() ,

,![]() .

.

Let![]() , where

, where ![]() and

and![]() . Then we know that

. Then we know that ![]() has

has ![]() equations with

equations with ![]() variables.

variables.

Let ![]() and define a merit function of (5) by

and define a merit function of (5) by

![]() .

.

If (2) has a solution, then solving (5) is equivalent to find a global solution of the following minimization problem

![]() (6)

(6)

![]()

where![]() .

.

2. Preliminaries

In this section, we give some Lemmas, which are taken from [8] - [10] .

Lemma 1. Let P be the projection onto Ω, let ![]() for given

for given ![]() and

and![]() , then

, then

1)![]() , for all

, for all![]() .

.

2) P is a non-expansive operator, that is, ![]() for all

for all![]() .

.

3)![]() .

.

Lemma 2. Let ![]() be the projected gradient of θ at

be the projected gradient of θ at![]() .

.

1)![]() .

.

2) The mapping ![]() is lower semicontinuous on Ω, that is, if

is lower semicontinuous on Ω, that is, if![]() , then

, then

![]() .

.

3) The point ![]() is a stationary point of problem (6) Û

is a stationary point of problem (6) Û![]() .

.

3. The Conjugate Gradient Projection Method and Its Convergence Analysis

In this section, we give a new conjugate gradient projection method and give some discussions about this method.

Given an iterate![]() , we let

, we let![]() ,

,

![]() , (7)

, (7)

where![]() . Inspired by the literature [8] - [11] , we take

. Inspired by the literature [8] - [11] , we take

![]() , (8)

, (8)

with![]() .

.

And ![]() is defined by

is defined by

![]() . (9)

. (9)

Method 1. Conjugate Gradient Projection Method (CGPM)

Step 0: Let![]() ,

, ![]() ,

, ![]() ,

, ![]() ,

, ![]() , set

, set![]() .

.

Step 1: Compute![]() , such that

, such that

![]() ,

,

![]() .

.

Set![]() .

.

Step 2: If![]() , stop,

, stop,![]() .

.

Step 3: Let![]() , and go to Step 1.

, and go to Step 1.

In order to prove the global convergence of the Method 1, we give the following assumptions.

Assumptions 1

1) ![]() has a lower bound on the level set

has a lower bound on the level set![]() , where t1 is initial point.

, where t1 is initial point.

2) ![]() is continuously differentiable on the L0, and its gradient is Lipschitz continuous, that is, there exists a positive constant L such that

is continuously differentiable on the L0, and its gradient is Lipschitz continuous, that is, there exists a positive constant L such that

![]() .

.

Lemma 3. If tk is not the stability point of (6), ![]() , then search direction dk generated by (9) descent

, then search direction dk generated by (9) descent

direction, which is![]() .

.

Proof. From (7), Lemma 1, and (8), we have

![]()

Lemma 4. [11] Suppose that Assumptions 1 holds. Let ![]() continuously differentiable and lower bound on the Ω,

continuously differentiable and lower bound on the Ω, ![]() is uniformly continuous on the Ω and

is uniformly continuous on the Ω and ![]() is bounded, then

is bounded, then ![]() generated by Method 1 are satisfied

generated by Method 1 are satisfied

![]() ,

,![]() .

.

Theorem 1. Let ![]() continuously differentiable and lower bound on the Ω,

continuously differentiable and lower bound on the Ω, ![]() is uniformly conti-

is uniformly conti-

nuous on the Ω, ![]() is a sequence generated by Method 1, then

is a sequence generated by Method 1, then![]() , and any accumulation

, and any accumulation

point of ![]() is a stationary point of (6).

is a stationary point of (6).

Proof. By Lemma 2, we have![]() ,

, ![]() ,

, ![]() , satisfy

, satisfy

![]() , (10)

, (10)

for![]() , by Lemma 1, we know that

, by Lemma 1, we know that![]() , and we have

, and we have

![]() , so,

, so,

![]() . (11)

. (11)

Let![]() ,

, ![]() , from (11), we have

, from (11), we have

![]() .

.

By the above formula, (8) and Lemma 1, we get

![]()

Taking limit on both sides and by Lemma 4, we know that

![]() . (12)

. (12)

Because

![]() (13)

(13)

and Lemma 4, we have

![]() . (14)

. (14)

By (12), (13), (14) and ![]() is uniformly continuous on the Ω, we get

is uniformly continuous on the Ω, we get

![]() .

.

By (10), we know that

![]() . (15)

. (15)

Let![]() , where

, where![]() , by Lemma 2 and (15), we have

, by Lemma 2 and (15), we have

![]() .

.

From Lemma 2 3), we get any accumulation point of ![]() is a stationary point of (6).

is a stationary point of (6).

4. Numerical Results

In this section, we give the numerical results of the conjugate gradient projection method for the following given test problems, which are all given for the first time. We present different initial point t0, which indicates that Method 1 is global convergence.

Throughout the computational experiments, according to Method 1 for determining the parameters, we set the parameters as

![]() .

.

The stopping criterion for the method is ![]() or

or![]() .

.

In the table of the test results, t0 denotes initial point, ![]() denotes the solution, val denotes the final value of

denotes the solution, val denotes the final value of

![]() , Itr denotes the number of iteration.

, Itr denotes the number of iteration.

Example 1. Considering SGLCP with

![]() ,

, ![]() ,

,

![]() ,

, ![]() ,

,

![]() and

and![]() ,

,![]() .

.

The test results are listed in “Table 1” using different initial points.

![]()

Table 1. Results of the numerical Example 1-2 using method 1.

Example 2. Considering SGLCP with

![]() ,

, ![]() ,

,

![]() ,

, ![]() ,

,

![]() and

and![]() ,

,![]() .

.

The test results are listed in “Table 1” using different initial points.

5. Conclusion

In this paper, we present a new conjugate gradient projection method for solving stochastic generalized linear complementarity problems. The global convergence of the method is analyzed and numerical results show that Method 1 is effective. In future work, large-scale stochastic generalized linear complementarity problems need to be studied and developed.

Acknowledgements

This work is supported by National Natural Science Foundation of China (No. 11101231, 11401331), Natural Science Foundation of Shandong (No. ZR2015AQ013) and Key Issues of Statistical Research of Shandong Province (KT15173).