Some Results for Exact Support Recovery of Block Joint Sparse Matrix via Block Multiple Measurement Vectors Algorithm ()

1. Introduction

Compressed sensing [1] [2] [3] theory is a theoretical method to solve sparse solutions of large underdetermined linear systems, which has received lots of attention in the last decades and it has been applied to many research domains, including signal processing [4] , radar system [5] , medical imaging [6] , image compression [7] and so on. Suppose a signal

, we aim to reconstruct it from linear measurements

(1)

where

is called measurement vector,

denotes a measurement matrix with

, and

is the noise vector. Because the signal

we need to reconstruct is a vector, (1) is named as single measurement vector (SMV) model. Since the solution of (1) is not unique, some additional constraints on

and

are needed to reconstruct

uniquely. What we are interested in is that

is sparse.

is the

norm. When

, we say signal

is K-sparse. To reconstruct such a signal

from noisy model (1), a intuitive idea is to find a solution of

minimization

(2)

where

and

are known. The problem mentioned above is called the SMV problem, and it is also the most common problem model in the field of signal recovery. The optimization problem (2) has a lot of efficient and effective algorithms that use greed strategy to recover

, such as orthogonal matching pursuit (OMP) [8] , stagewise OMP [9] , regularized OMP [10] , compressive sampling matching pursuit [11] , subspace pursuit [12] , generalized OMP (gOMP) [13] , optimized OMP [14] , backtracking-based adaptive OMP [15] , etc. And various researches see [16] [17] [18] .

If the signal we need to recover is not a vector but a matrix

, then model (1) is transformed to multiple measurement vector (MMV) model as follows.

(3)

where

and

.

In many applications, including reconstruction multiband signals [19] and face recognition [20] , the matrix X has a few nonzero rows with blocks, i.e., we call block joint sparse matrix. In terms of how to measure the block joint sparsity, we can use a series of row blocks to present X. As in [21] and [22] , we assume that each block has the same length and is

. Therefore, for rewriting X, we firstly define

for

, where

denotes the i-th row of X. Then, X is represented as:

If there are no more than K blocks

in

, X is a block K-joint sparse matrix. The measurement matrix

can be rewritten as:

where

for

, and

denotes the i-th column of

.

Thus, to reconstruct X, we turn to solve the following optimization problem, which is called MMV problem.

(4)

where the

represents the set of nonzero blocks.

To discuss the performance of the reconstruction algorithm for solving (2), restricted isometry property (RIP) [23] is very common. Then, to study (4), block RIP (bRIP) as a generalization of RIP was proposed in [24] . Particularly, we say that matrix

obeys the bRIP with parameter

if

(5)

for any block K-sparse vectors

. The smallest one among all

meeting (5) is called the block restricted isometry constant (bRIC) of A with order K. For simplicity of writing, throughout this paper, we still use

to represents the bRIC whenever there is no confusion.

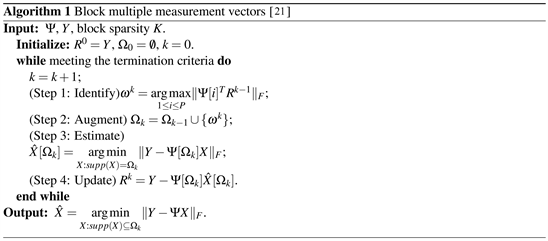

Based on the MMV algorithm [25] , Fu et al. firstly proposed a new greedy algorithm in [22] , which is called block MMV (BMMV), to recover the block joint sparse matrices by thinking about block joint sparsity. Simply, the BMMV algorithm is a block multiple measurement vectors version of OMP. For

, we use

to denote the cardinality of

. Let

denote the submatrix of the vector X whose rows indices are restricted to

and the submatrix of

that whose columns indices are only restricted to the set

is denoted by

. The BMMV algorithm selects the block index closest to the residual to add to the estimated support at each iteration. The estimated support is used to solve a least square problem to get the estimated original signal. We present the detailed framework of the BMMV algorithm which is described in Algorithm 1.

Like other signal reconstruction algorithms, many results about performance of recovering block MMV problem via BMMV are studied. It is represented in [22] that if

obeys

, then the block K-joint sparse matrices can be accurately reconstructed in K iterations from noiseless model

by BMMV. In [21] , the condition in [22] was improved to

which also is proved to be sharp. As far as we know, there are no researches on support recovery under noisy model (3) with block structure. We complete this part of content that has not yet been studied. It is necessary to study the recovery performance of the BMMV algorithm in noisy case because it is inevitably contaminated by noise in practical applications. Specifically, we firstly prove that if

and

, then the BMMV algorithm can perfectly recover

with stopping criteria

under

. Moreover, our sufficient condition is optimal if noisy model degenerates to noiseless model. Besides, when

and

, the BMMV algorithm turns to the OMP algorithm, and the sufficient condition we mentioned above degenerates to ( [17] , Theorem 1).

2. Preliminaries

2.1. Notation

Let

and

denote the

and Frobenius norm of a vector and matrix, respectively. The zero and identity matrix are denoted by

and I, respectively. The j-th element of vector

is denoted by

. Let

denotes the support of a matrix X with block structure, then

for any matrix X which is block K-joint sparse, where

denote the transpose of

. P as the number of blocks of X is assumed for simplity. Let

represents a set which elements are indexed by

and are not contained in set

for any set

. Similarly, we use

and

represent the complementary of set

and

, respectively. Let

denotes the pseudoinverse of

if the rank of the columns of

is full, where the inverse of a square matrix is represented by

. Therefore, the projector and its orthogonal complement on the columns space of

can be denoted by

and

, respectively.

2.2. Some Useful Lemmas

For proving our subsequent theoretical studies, we first present some useful lemmas for our theoretical analysis.

Lemma 1 ( [26] , Lemma 1) Suppose

obeys both the bRIP of orders

and

, then

with

.

Lemma 2 ( [11] , Proposition 3.1) Suppose

is a set with

and

obeys the bRIP of order K. Then

where

.

Lemma 3 ( [26] , Lemma 2) Let

and

satisfy

. Suppose

obeys

-order bRIP, then

where

.

Lemma 4 ( [27] , Lemma 1) Let

and

. Then

3. Main Results

Lemma 5 is proposed and plays a very important role in the subsequent theoretical proof.

Lemma 5 Let

with

, then

Proof 1 The skill of proof is similar to the results in ([21, Lemma 4, Lemma 5). To prove Lemma 5, we first show that

(6)

By assumption, it is easy to get

,

, and

, then

(i) is because

(7)

and (ii) is from Lemma 4 with

, and

. Thus, (6) holds. So, by (6), we can easily get that

(8)

Let

then, by some sample calculations, we obtain

(9)

(10)

To simplify the notation, for given

, it is necessary to introduce a new matrix

, and the p-th column of Z is defined as

(11)

where

is the p-th column of

. Furthermore, we define

(12)

(13)

(14)

Then

(15)

and

(16)

(17)

Moreover,

(18)

where (iii) is because (12)-(15), (iv) follows from (7), and (v) is from (11). Therefore, for any

, by applying (18), we can get

(19)

and

(20)

By the (19) and (20) are mentioned before, we can have

(21)

where the last equality because of (9). It is not hard to check that

(22)

where (vi) follows Lemma 3 and (12), (vii) is due to (16) and (17), and (viii) is from (10). By (15), (21), (22), and the fact that

, we have

Combining the aforementioned Equation with (8), we obtain

which implies that

Thus, we complete the proof.

Remark 6 Specially, Lemma 5 is a general block version of ( [28] , Theorem 3.2). The main difference between our Lemma 5 and ( [28] , Theorem 3.2) exist in two aspects. First, our model is block version of multiple measurement vectors. Second, the ways of calculating

are different.

Based on the Algorithm 1 and lemmas we presented above, we show our main results in this section. We provide a sufficient guarantee for the exact support recovery of block joint sparse matrices with the BMMV algorithm under the

bounded noise from noisy model (3).

Theorem 7 Consider (3). Assume that

and matrix X is block K-joint sparse. Provided that measurement matrix

obeys the bRIP of order

with

(23)

and X satisfies

(24)

Then based on the stopping criteria

, the BMMV algorithm can accurately recover the

in K iterations.

Proof 2 For proving Theorem 7, there are two points that need to be shown, namely, one is to prove that the BMMV algorithm selects a correct block index from

in each iteration, and the second is that the BMMV algorithm will select all the indices in

in all K iterations. The proof consists of two parts. First, we illustrate that all correct indices are identified in all iteration via BMMV. Second, we show that the process of identifying terminates after it is executed just

iterations. In the first step the mathematical induction method is used. Suppose that in the first

iterations, the BMMV algorithm has selected the correct indices, which means that

and

. Since

, it obviously holds when

. Therefore, we only have to prove that a correct index is selected by the BMMV algorithm at the k-th iteration, i.e.,

.

By the Step 3 and 4 in Algorithm 1, we can obtain that the orthogonal relationship between

and the columns space of

, then we have

for any

, so we get that

. For proving

, by the Step 1 of BMMV, we turn to proof

(25)

By the estimating step of the BMMV algorithm, we obtain

(26)

Then, from Step 4 of the BMMV algorithm and (26),

(27)

where (τ1) is because the definition of

is used, (τ2) is based on

and

, and (τ3) is due to

. Consequently, to show (25), we try to use (27) to obtain the lower and upper bound on the left and right side of (25), separately. For any

and

, by the triangle inequality, it is obtained that

(28)

and

(29)

Therefore, by (28) and (29), to show (25), we only need to prove

(30)

Next, we try to find a lower bound on the left side of (30). Using the inductive assumption

and

. Hence, we obtain

(31)

Since

and

, according to Lemma 5, we obtain

(32)

where (τ4) is due to the assumption of X in this paper and the fact that

and (τ5) is from Lemma 1, (23) and (31). Then, we deduce a upper bound on the right side of (30). There are

and

that make

Hence, we obtain

(33)

where (τ6) is because

is a matrix with two

subvectors and (τ7) are due to Lemma 2 and

(34)

respectively. Based on (32) and (33), (30) holds when

that is,

In addition, by (23), we obtain

. Hence, once (24) is satisfied, a correct index is identified by the BMMV algorithm in each iteration.

Next, the BMMV algorithm needs to be specified to be performed exact

iterations, that equals to prove that we always have

for all

and

. Since only one correct index is selected by the BMMV algorithm at a iteration under (24), by (27) and triangle inequality, for all

, we obtain

where (τ8) is from (34) and Lemma 3, and (τ9) is because of Lemma 1 and (31). Hence, if

(35)

then

for each

. After some transformations, it is easily get

(36)

which is from

and

. Therefore, from (35) and (36), if (24) holds,

for each

, i.e., it does not terminate until the K-th iteration is completed. Likewise, from (27), we have

where (τ10) is due to

. Due to the termination criteria, the BMMV algorithm stops after the K-th iteration. Hence, the BMMV algorithm just executes K iterations. Our proof is complete.

When

, the X turns to a block K-sparse vector

and V turns to a vector

. For block K-sparse vector, there are already many researches on recovery performance of block reconstruction algorithms, for example, block OMP (BOMP) ( [26] [29] ) and block gOMP (BgOMP) ( [30] [31] ).

Corollary 8 Taking

in (3) and Theorem 7. Assume that

and vector

is block K sparse. Provided that measurement matrix

obeys the bRIP of order

with

, and

satisfies

Then based on the stopping criteria

(

is a residual vector), the BMMV algorithm can accurately recover

in K iterations.

Remark 9 The results in the Corollary 8 are consistent with the results of [26] , which is a sharp sufficient condition. However, [29] gives a new analysis for support recovery that is less restrictive for

. For more details, please refer to [29] .

If the length of blocks

, the block K-joint sparse is equivalent to K-row sparse. In [32] gives two theorems to show the bound

is optimal in noiseless case. More details are in [32] , which means the bound based on bRIP we proposed is optimal in the noiseless case.

Corollary 10 Taking

and

in (3) and Theorem 7, X reduces to

, V reduces to

. Assume that

and vector

is K sparse. Provided that measurement matrix

obeys the bRIP of order

with

, and

satisfies

Then based on the stopping criteria

, the BMMV algorithm can accurately recover

in K iterations.

Remark 11 The Corollary 10 is same as ( [17] , Theorem 1), which is a sharp condition. [33] gives a less restrictive bound on

. This means that our result have room for improvement.

4. Conclusions and Future Works

In this paper, studies on the performance of support recovery of block K-joint sparse matrix via the BMMV algorithm from

are analyzed. We obtained that if

satisfies bRIP with

and

, then BMMV can accurately reconstruct the

in K iterations. With the help of ( [32] , Theorem 2), we also show the RIP-based condition is optimal when the problem reduces to SMV problem under the noiseless case.

Because our result about

is not yet optimal. Thus, one of future directions is to continue to refine this result and continue to study theoretical improvement of the related algorithms. Because the block length considered in this paper is a fixed value for simplicity, it is also worth considering that the block length is a variable. Then, the support recovery conditions are theoretical, and how it applies to practical applications is also a question. The BMMV algorithm selects only one index for each iteration, which means that the recovery program will directly fail once a wrong index is added to the estimation support. It is interesting to propose an improved algorithm to solve the problem.