Optimization Scheme Based on Differential Equation Model for Animal Swarming

1. Introduction

Let $f(x)$ be a real valued continuous function defined for $x \in \mathbb{R}^D$, where $D$ is a positive integer. The problem of finding the minimal value

$$\min_{x \in \mathbb{R}^D} f(x)$$

is called a D-dimensional optimization problem for $f$. Such an optimization problem is one of the fundamental problems in science and technology, and various methods have already been presented.

When  is a

is a  function, the point

function, the point  which hits the minimal value

which hits the minimal value  is obtained in the set of solutions of the functional equation

is obtained in the set of solutions of the functional equation . But, in general, it is not so easy to solve this functional equation. So, approximate solutions become more important. The steepest descent method is a method of obtaining a sequence

. But, in general, it is not so easy to solve this functional equation. So, approximate solutions become more important. The steepest descent method is a method of obtaining a sequence  in

in  which approaches to

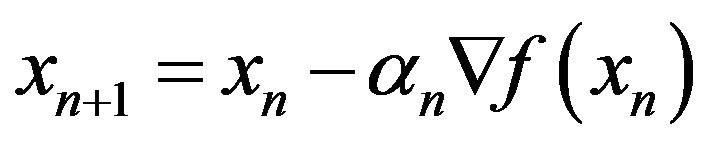

which approaches to  by using the recurrence formula

by using the recurrence formula

with some suitable coefficient

with some suitable coefficient . This method is very convenient and it is easily observed that

. This method is very convenient and it is easily observed that  converges to a limit

converges to a limit  such that

such that . But

. But  may hit very often some local minimal value of

may hit very often some local minimal value of  and not the very minimal value. So, when

and not the very minimal value. So, when  possesses many local minimal values, it is very difficult to find out the point

possesses many local minimal values, it is very difficult to find out the point  by this method.

by this method.
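The trapping behavior described above can be seen in a small sketch. The one-dimensional test function, the starting point 3.3, the step size and the iteration count below are all assumptions chosen for illustration; they are not taken from the paper.

```python
import math


def f(x):
    # Hypothetical 1-D test function with many local minima
    # (global minimum 0 at x = 0).
    return x * x + 10.0 * (1.0 - math.cos(2.0 * math.pi * x))


def df(x):
    # Derivative of f.
    return 2.0 * x + 20.0 * math.pi * math.sin(2.0 * math.pi * x)


def steepest_descent(x, step=0.001, iters=5000):
    # Recurrence x_{n+1} = x_n - step * f'(x_n).
    for _ in range(iters):
        x -= step * df(x)
    return x


x_end = steepest_descent(3.3)
print(x_end, f(x_end))
```

Started at 3.3, the iteration settles in the local minimum close to x = 3 and never reaches the global minimizer x = 0, which is the difficulty the paragraph above points out.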

Recently, the development of numerical computation techniques has yielded a new paradigm of optimization using a collection of particles in $\mathbb{R}^D$ which interact with each other and move in search of the point $x^*$. Such a method is called particle optimization. One typical particle optimization method was devised by Kennedy and Eberhart [1]. They consider a swarm of particles that not only fly in $\mathbb{R}^D$ like birds but also behave as intelligent individuals, each of which can memorize its personal best over the whole past and can also know the swarm’s global best at each instant. In each step, processing such personal and swarm information, the particles move to a suitable position. The phase space in which the particles move about is therefore an abstract multi-dimensional space which is collision-free. They called their method particle swarm optimization. Afterward, particle swarm optimization has been developed extensively and applied to various problems. We quote here only some of them [2-4]. A survey of the method has recently been published by Eslami, Shareef, Khajehzadeh and Mohamed [5].

In this paper, we want to compose another type of particle optimization which is inspired more directly by animal swarming such as fish schooling, bird flocking, or mammal herding. Our particles move according to the animals’ behavioral rules for forming a swarm. We first set a D-dimensional physical space $\mathbb{R}^D$ in which the particles move about. We then assume two kinds of interactions among individuals, attraction and collision avoidance. These interactions will be formulated by generalized gravitation laws. Regarding $f$ as an environmental potential, we also assume that the particles are sensitive to the gradient of $f$ at their positions and tend to move in the direction of steepest descent of $f$. But they cannot know which mate has the global best position of the swarm at any moment. We will also incorporate uncertainty in their information processing and execution. The phase space of particles therefore consists of pairs $(x_i, v_i)$, where $(x_i, v_i)$ means that at that moment the i-th particle is at position $x_i$ with velocity $v_i$. As for the animals’ behavioral rules, we follow those presented by Camazine, Deneubourg, Franks, Sneyd, Theraulaz and Bonabeau ([6], Chapter 11). That is:

1) The swarm has no leaders and each individual follows the same behavioral rules;

2) To decide where to move, each individual uses some form of weighted average of the position and orientation of its nearest neighbors;

3) There is a degree of uncertainty in the individual’s behavior that reflects both the imperfect information-gathering ability of an animal and the imperfect execution of its actions.

(We also refer to a similar idea due to Reynolds [7].) The authors in fact tried to model these mechanisms in the previous paper [8] by the stochastic differential Equations (2.1) and (2.2) below.

Our optimization scheme is composed on the basis of the continuous model Equations (2.1), (2.2), but ignoring velocity matching (i.e., setting its coefficient to zero) and setting the external force as the sum of resistance and the gradient of the potential function to be optimized. At each step, each particle has a velocity determined by the sum of centering with nearby mates and acceleration by the external force. Its position is renewed by the sum of the velocity and a noise which reflects the imperfectness of information-gathering and execution of actions. In the first stage, the particles move in search of the global minimal value while, on the one hand, keeping a swarm and, on the other hand, keeping a territorial distance from other mates. The noise helps the swarm to escape from the traps of local minimal values and to reach a neighborhood of the global one. In the second stage, the movement of particles slows down. The particles go to an equilibrium state in which some particle attains the swarm’s best value.

In order to investigate the swarm behavior of particles, we shall apply our optimization scheme to a few benchmark problems. When $f$ has many local minimal values, the territorial distance must be chosen with a suitable length to find a good approximate solution. A suitable strength of noise is also required. If it is too small, the swarm is easily trapped by a local minimum; on the contrary, if it is too large, the particles cannot keep swarming because of the strong dispersion. If these are suitably set, then our scheme can show very high performance even in 12-dimensional problems.

2. Continuous Model

We begin by reviewing the continuous model presented in [8].

We consider the motion of I animals in the physical space $\mathbb{R}^3$. The position of the i-th animal is denoted by $x_i(t)$, and its velocity by $v_i(t)$. The model equations are then written as

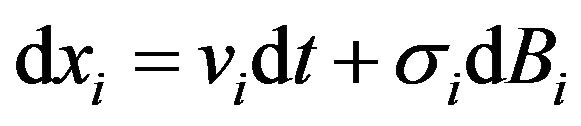

(2.1)

(2.1)

(2.2)

(2.2)

where  and

and . The first Equation (2.1) is a stochastic equation for xi, here

. The first Equation (2.1) is a stochastic equation for xi, here

denotes a system of independent 3-dimensional Brownian motions defined on a complete probability space with filtration

denotes a system of independent 3-dimensional Brownian motions defined on a complete probability space with filtration  (see [9]). The term

(see [9]). The term  therefore denotes a noise resulting from the imperfectness of information-gathering and action of the i-th animal,

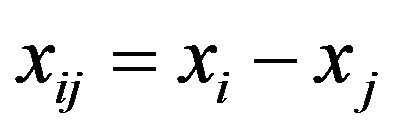

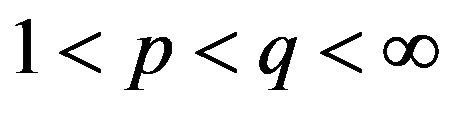

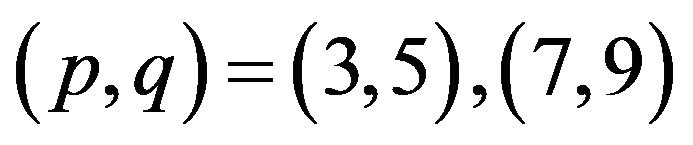

therefore denotes a noise resulting from the imperfectness of information-gathering and action of the i-th animal,  being some coefficient. In the meantime, (2.2) is a deterministic equation for vi. The first term in the right hand side denotes the centering and the collision avoidance of animal. The animals have tendency to stay nearby their mates and at the same time avoid colliding each other. As p and q are such that

being some coefficient. In the meantime, (2.2) is a deterministic equation for vi. The first term in the right hand side denotes the centering and the collision avoidance of animal. The animals have tendency to stay nearby their mates and at the same time avoid colliding each other. As p and q are such that , if

, if , then the

, then the  -th animal moves toward the j-th; to the contrary, if

-th animal moves toward the j-th; to the contrary, if , then it acts in order to avoid collision with the other. The number

, then it acts in order to avoid collision with the other. The number  therefore denotes a critical distance. If p is large, then, as the distance

therefore denotes a critical distance. If p is large, then, as the distance  increases, its power

increases, its power  decreases quickly. Hence, the larger p is, the shorter the range of centering is. The second term of (2.2) denotes the effect of velocity matching with nearby mates. Finally, the term

decreases quickly. Hence, the larger p is, the shorter the range of centering is. The second term of (2.2) denotes the effect of velocity matching with nearby mates. Finally, the term  denotes an external force imposed to the i-th animal at time t which is a given function for xi and vi. In the subsequent section, we take a function of the form

denotes an external force imposed to the i-th animal at time t which is a given function for xi and vi. In the subsequent section, we take a function of the form

(2.3)

where one term denotes a resistance for motion and the other denotes an external force determined by a potential function.
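For orientation, the verbal description in this section is consistent with a system of the following shape. This is a sketch written under assumptions, not a quotation of (2.1)-(2.3): the symbols $\alpha$, $\beta$, $r$, $\sigma_i$, $w_i$, $k$, $U$ and the exact form of each term are assumed here and should be checked against [8].

```latex
\begin{aligned}
dx_i &= v_i\,dt + \sigma_i\,dw_i(t), \\
dv_i &= \Big[ -\alpha \sum_{j \neq i}
          \Big( \frac{r^p}{\|x_i - x_j\|^p} - \frac{r^q}{\|x_i - x_j\|^q} \Big)(x_i - x_j) \\
     &\qquad - \beta \sum_{j \neq i}
          \Big( \frac{r^p}{\|x_i - x_j\|^p} + \frac{r^q}{\|x_i - x_j\|^q} \Big)(v_i - v_j)
          + F_i(t, x_i, v_i) \Big]\,dt, \\
F_i(t, x_i, v_i) &= -k\,v_i - \nabla U(x_i), \qquad 1 < p < q .
\end{aligned}
```

With $p < q$, the bracket in the first sum is positive when $\|x_i - x_j\| > r$ (attraction toward the j-th animal) and negative when $\|x_i - x_j\| < r$ (repulsion), which matches the role of the critical distance described above.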

3. Optimization Scheme

Let $f$ be a real valued $C^1$ function defined for $x \in \mathbb{R}^D$ with positive integer $D$. Consider the optimization problem

$$\min_{x \in \mathbb{R}^D} f(x) \qquad (3.1)$$

On the basis of (2.1), (2.2), we introduce an optimization algorithm for (3.1). Let $x_i$ ($i = 1, \dots, I$) denote the positions of I particles moving about in the space $\mathbb{R}^D$, and let $v_i$ ($i = 1, \dots, I$) denote their velocities. We ignore velocity matching of particles, namely, we set its coefficient to zero, and take the external force function in (2.2) in the form (2.3) above. Our scheme is then described by

(3.2)

(3.3)

where […]. In addition, […] is a family of independent stochastic functions defined on a complete probability space with values in $\mathbb{R}^D$ whose distributions are normal with mean 0 and variance […]. And […] is given by

[…].

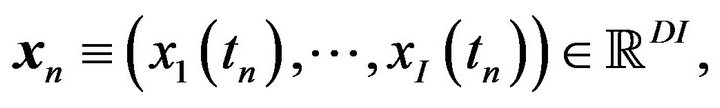

Set initial positions and initial velocities […], respectively. Then, the algorithm (3.2), (3.3) defines a discrete trajectory

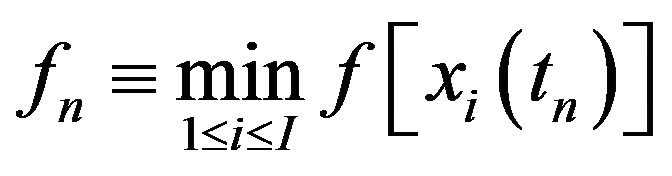

where $n$ denotes the step number. In each step $n$, we compute the minimal value of $f$ over the positions of all particles and memorize this value together with the point attaining it. After repeating the iteration $N$ times, the smallest memorized value is an approximate value of (3.1), and the point attaining it is the approximate solution given by our scheme.
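As a rough illustration of how a scheme of this kind can be stepped, the following sketch applies an explicit Euler-type update to positions and velocities and records the swarm's best value at every step. It is not the authors' implementation: the function name `swarm_minimize`, the attraction-repulsion weight, the resistance coefficient `k`, the clipping of very small distances, and all default parameter values are assumptions made for this example.

```python
import math
import random


def swarm_minimize(f, grad_f, x0, steps=2000, dt=0.01, alpha=1.0, r=0.5,
                   p=2, q=3, k=2.0, sigma=0.05, seed=0):
    """Hypothetical swarm search; returns (best value, best point) seen."""
    rng = random.Random(seed)
    n, dim = len(x0), len(x0[0])
    x = [list(xi) for xi in x0]            # positions
    v = [[0.0] * dim for _ in range(n)]    # velocities start at rest
    best_val, best_pt = min((f(xi), list(xi)) for xi in x)

    for _ in range(steps):
        new_v = []
        for i in range(n):
            # External force: resistance plus descent along the gradient.
            g = grad_f(x[i])
            acc = [-k * v[i][d] - g[d] for d in range(dim)]
            for j in range(n):
                if j == i:
                    continue
                diff = [x[i][d] - x[j][d] for d in range(dim)]
                dist = math.sqrt(sum(c * c for c in diff))
                # Assumption: clip tiny distances so repulsion stays finite.
                s = max(dist, 0.5 * r)
                # Positive beyond distance r (attraction), negative inside it.
                w = (r / s) ** p - (r / s) ** q
                for d in range(dim):
                    acc[d] -= alpha * w * diff[d]
            new_v.append([v[i][d] + dt * acc[d] for d in range(dim)])

        # Position update: old velocity plus Gaussian noise with
        # standard deviation sigma * sqrt(dt) in each coordinate.
        for i in range(n):
            for d in range(dim):
                noise = sigma * math.sqrt(dt) * rng.gauss(0.0, 1.0)
                x[i][d] += dt * v[i][d] + noise
        v = new_v

        # Memorize the swarm's best value and the point attaining it.
        val, pt = min((f(xi), list(xi)) for xi in x)
        if val < best_val:
            best_val, best_pt = val, pt
    return best_val, best_pt
```

A call such as `swarm_minimize(f, grad_f, starts)` takes the objective, its gradient, and a list of starting points (one list of coordinates per particle). The running minimum plays the role of the memorized best value described above.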

As the approximate solution $\hat{x}$ is one of the members of a swarm whose particles interact with one another, it may not satisfy the condition $\nabla f(\hat{x}) = 0$. This means that there is a point $\tilde{x}$ in a neighborhood of $\hat{x}$ which hits a local minimum of $f$, i.e., $\nabla f(\tilde{x}) = 0$. In this case, $\tilde{x}$, which can easily be obtained by classical methods (e.g., the steepest descent method), gives a better approximate solution of (3.1) than $\hat{x}$.
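The refinement step mentioned above can be sketched as a short steepest descent started from the swarm's best point. The function name `polish`, the step size, the tolerance and the iteration cap are assumptions for illustration only.

```python
import math


def polish(grad_f, x, step=0.1, tol=1e-10, max_iter=10000):
    """Steepest descent from x until the gradient norm falls below tol."""
    x = list(x)
    for _ in range(max_iter):
        g = grad_f(x)
        if math.sqrt(sum(c * c for c in g)) < tol:
            break
        # Move against the gradient with a fixed step size.
        x = [xi - step * gi for xi, gi in zip(x, g)]
    return x
```

Because the starting point is already close to a local minimum, a fixed small step is usually enough here; the step size still has to be small relative to the curvature of $f$ for the iteration to converge.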

4. Numerical Experiments

We show some numerical experiments to expose the behavior of the particles under our scheme. It is expected that the particles crowd around a point $x^*$ giving the global minimum of $f$ or are dispersed into a number of neighborhoods of points giving local minima. The behavior may change heavily depending on the choice of parameters […] and […].

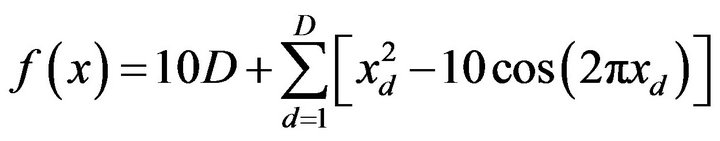

We here use three well known benchmark problems, namely, Problem (3.1) with Sphere function, Rastrigin function and Rosenbrock function. Problem (3.1) with the Sphere function

(4.1)

(4.1)

is the simplest problem. The optimal point is obviously given by . The following function

. The following function

(4.2)

(4.2)

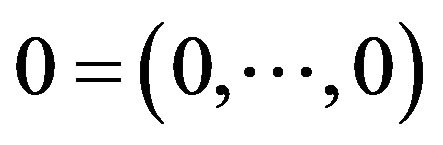

is called the Rastrigin function. Its optimal point is also given by  with the global minimum

with the global minimum

. It is easily seen that this function possesses many local minimums; indeed, at every lattice point,

. It is easily seen that this function possesses many local minimums; indeed, at every lattice point,  has a local minimum. Therefore, Problem (3.1) with function (4.2) is very difficult to treat especially in a high dimension

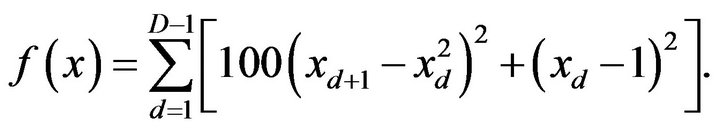

has a local minimum. Therefore, Problem (3.1) with function (4.2) is very difficult to treat especially in a high dimension . Finally, the Rosenbrock function is formulated by

. Finally, the Rosenbrock function is formulated by

(4.3)

(4.3)

This function takes its global minimum 0 at the lattice point
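For readers who want to experiment, the three benchmarks can be written down in their commonly used textbook forms. These definitions are assumptions: the paper's own formulas (4.1)-(4.3) are not reproduced in this text and may differ in constants or scaling, and the function names are chosen here for illustration.

```python
import math


def sphere(x):
    # Sum of squares; global minimum 0 at the origin.
    return sum(c * c for c in x)


def rastrigin(x, a=10.0):
    # Common form with amplitude a = 10; global minimum 0 at the origin,
    # with many local minima near the integer lattice points.
    return a * len(x) + sum(c * c - a * math.cos(2.0 * math.pi * c) for c in x)


def rosenbrock(x):
    # Common chained form; global minimum 0 at (1, ..., 1).
    return sum(100.0 * (x[i + 1] - x[i] ** 2) ** 2 + (1.0 - x[i]) ** 2
               for i in range(len(x) - 1))
```

Each function accepts a list of D coordinates, so the same definitions serve both the 2-dimensional and the 12-dimensional experiments.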

As shown below, the behavior of the particles depends on the model parameters. We set […] and […]. The step size is fixed by […] and the total step number is […]. As for D, we consider 2-dimensional and 12-dimensional problems.

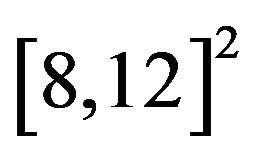

When $D = 2$, the initial position […] is taken in a region […] which contains no optimal point and is, moreover, far away from the optimal point. In addition, for the 2-dimensional Rosenbrock problem, we will take […], too, because the Rosenbrock function defined by (4.3) is not symmetric with respect to the transformation […].

When $D = 12$, the initial position […] is taken randomly in […], but it is very difficult to find the optimal point in a higher dimensional search space.

4.1. Sphere Function

Figure 1 shows the numerical results for the Sphere function. The parameters are set as […]. For […], the computed result is given by Figure 1(a); similarly for […], by Figure 1(b).

In the first stage […], the swarm’s best value decreases rapidly, which means that all the particles strike out for the optimal point 0 in a group, influenced by the force due to the gradient of $f$. In the second stage, since the interaction among particles becomes dominant over the potential force, the decrease of this value becomes slow. This means that the particles make local searches in a neighborhood of the global optimum. However, the interaction may prevent the best particle from attaining the global minimum exactly. Thus our method makes it possible to search both a wide range and a small range by using the same scheme. On the other hand, finding a better combination of parameters, one that suitably balances the global search and the local search, remains an important problem.

4.2. Rastrigin Function

Figure 2 shows the numerical results for the Rastrigin