1. Introduction

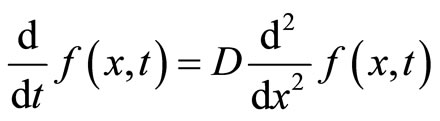

When a free particle is at a non-zero temperature, it is composed of a spectrum of frequencies that evolve at different rates, which causes the probability distribution of where one can find the particle to spread. We will show that the entropy rate associated with this spreading distribution is equal to twice the particle’s temperature.

$$R = \frac{2 k_B T}{\hbar} \tag{1}$$

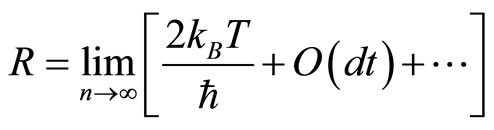

The rate, R, is calculated below using the natural logarithm, and thus the units for the rate are natural units of information per second when the temperature (T) is expressed in kelvin, Boltzmann’s constant ($k_B$) is expressed in joules per kelvin, and hbar (ħ), Planck’s constant divided by 2π, is expressed in joule-seconds.

This equation tells us the minimum amount of information we need, each second, in order to track a diffusing free particle to the highest precision that nature requires. By quantifying the amount of information needed to follow a free particle for a certain time, and showing it is finite, we are able to guarantee that a computer (or other discrete state-space machine with finite memory) can store a particle’s initial state and trajectory.

What is unique about this result is that there is no dependence on the mass of the particle or any other variable except the temperature.
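As a rough numerical illustration of the stated result, the sketch below evaluates R = 2 k_B T / ħ at a few temperatures. The function name `entropy_rate` and the sample temperatures are illustrative choices, not part of the original text; the constants are the exact SI (CODATA 2018) values.

```python
import math

# Exact SI values (CODATA 2018).
K_B = 1.380649e-23       # Boltzmann's constant, J/K
HBAR = 1.054571817e-34   # reduced Planck constant, J*s

def entropy_rate(temperature_kelvin: float) -> float:
    """Entropy rate R = 2 k_B T / hbar, in nats per second."""
    return 2.0 * K_B * temperature_kelvin / HBAR

# Illustrative temperatures only; note that no mass enters the formula.
for temperature in (2.725, 77.0, 300.0):
    rate = entropy_rate(temperature)
    print(f"T = {temperature:8.3f} K -> R = {rate:.3e} nats/s "
          f"({rate / math.log(2):.3e} bits/s)")
```

At room temperature the rate is of order 10^14 natural units per second, and it scales linearly with T.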

2. Assumptions

We prove this primary result by making the following three assumptions:

1) The continuous diffusion of a free particle can be modeled as a discrete process with a time step $dt$ that is much smaller than the de-coherence time $\tau$,

$$dt \ll \tau = \frac{\hbar}{2 k_B T},$$

where T is the temperature.

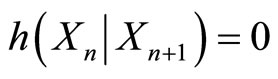

2) Knowing the particle’s location at time step n+1 allows one to determine the location of the particle at the previous time step n; i.e., conditional entropy is zero,

$$h(X_n \mid X_{n+1}) = 0,$$

where $X_n$ is the random variable that represents where the particle can be found at time step n.

3) At each time step the minimum uncertainty wavepacket is localized around its new location, and thus the conditional entropy of the $n^{th}$ step, given all previous steps, is the same as the conditional entropy of the 1st step given its initial state,

$$h(X_n \mid X_{n-1}, \ldots, X_0) = h(X_1 \mid X_0).$$

These three assumptions taken together are reasonable and give insight into the behavior of the system.

Assumption 1 is aided by the analysis found in [1] which shows the time step of discrete diffusion is

and thus for non-relativistic particles, the assumption holds.

Assumption 2 says that there is no entropy beyond the minimum uncertainty wave-packet after a measurement of the particle’s location was made.

Assumption 3 says that the vacuum localizes the diffusing particle up to the minimum uncertainty wavepacket at each step in the process. Even though an undisrupted particle’s wave packet will spread, at the vacuum level the particle is re-initialized at each step, like in quantum nondemolition measurements [2].

3. Setup

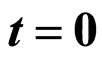

At $t = 0$, a free particle in vacuum is initialized into a minimum uncertainty Gaussian wave-packet with a spatial variance equal to $\sigma_x^2$. As time increases so does its variance and thus its entropy.

To calculate the entropy rate of this process, it is helpful to think of time as occurring in discrete units of a small size dt (assumption 1).

We can look at a Venn diagram of this process, figure 1. $X_0$ (or X0 in the figure) is a random variable, drawn from $\rho(x, 0)$, that describes the location of where the particle can be found at time $t = 0$. $X_1$ (X1) is a random variable, drawn from $\rho(x, dt)$, that describes the location of where the particle can be found at time $t = dt$. $X_2$ (X2) is drawn from $\rho(x, 2\,dt)$ and so on up to $X_n$ which is drawn from $\rho(x, t)$ where $t = n\,dt$.

As hinted at in the diagram (but explicitly stated here as assumption 2 and assumption 3), we will assume that the conditional entropy of each step is constant;

$$h(X_n \mid X_{n+1}) = 0$$

and $h(X_n \mid X_{n-1}, \ldots, X_0) = h(X_1 \mid X_0)$, where h is the differential entropy $h(X_n) = -\int \rho(x, n\,dt) \ln \rho(x, n\,dt)\, dx$ and where $\rho(x, n\,dt)$ is the distribution which determines $X_n$. This essentially means that knowing the location of the particle at any time allows one to calculate where it was in the previous time step and that the minimum uncertainty wave-packet maintains its coherence as its first moment (or average value) diffuses via a process with a variance as given by equation (2).

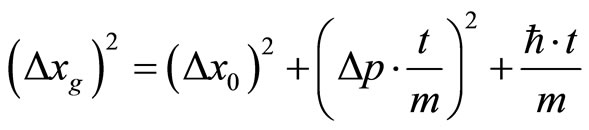

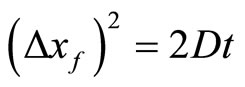

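In Section 5, we show that as time increases, a free particle diffuses such that the variance of where the particle can be found (if localized) is

$$\sigma^2(t) = \left(\sigma_x + \frac{\sigma_p\, t}{m}\right)^2 \tag{2}$$

where $\sigma_x$ and $\sigma_p$ are the standard deviations of the minimum uncertainty state in position and momentum and m is the mass of the particle. Thus $X_n$ (or simply $X$) is a Gaussian random variable with variance

$$\sigma^2(n\,dt) = \left(\sigma_x + \frac{\sigma_p\, n\, dt}{m}\right)^2.$$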

4. Entropy Rate

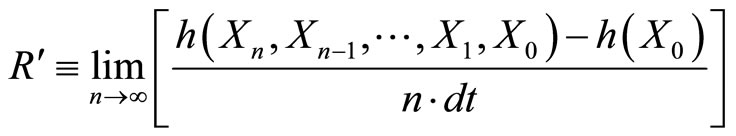

We can calculate the entropy rate of this process using the definition of the entropy rate. We will use the entropy rate, R, as calculated by taking the limit as the number of steps goes to infinity of the conditional entropy of the last step given all previous steps divided by the time step [3].

$$R = \lim_{n \to \infty} \frac{h(X_n \mid X_{n-1}, \ldots, X_0)}{dt} \tag{3}$$

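To solve for R, we first notice that since $h(X_{n-1} \mid X_n) = 0$ (assumption 2) we can show by induction that

$$h(X_{n-1}, \ldots, X_0 \mid X_n) = 0 \tag{4}$$

Due to the symmetric nature of mutual information, we can prove the equation below [2].

$$h(X_n \mid X_{n-1}, \ldots, X_0) = h(X_n) - h(X_{n-1}, \ldots, X_0) = h(X_n) - h(X_{n-1}) \tag{5}$$

The last equality uses the chain rule, $h(X_{n-1}, \ldots, X_0) = h(X_{n-1}) + h(X_{n-2}, \ldots, X_0 \mid X_{n-1})$, whose second term vanishes by equation (4). This brings us to the equation for R below

$$R = \lim_{n \to \infty} \frac{h(X_n) - h(X_{n-1})}{dt} \tag{6}$$

Next, we use assumption 3 to re-write the difference in entropy at time step n and n-1 as equal to the difference in entropy at time step 1 and the initial state.

$$R = \frac{h(X_1) - h(X_0)}{dt} \tag{7}$$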

Since the Xn’s are Gaussian, we can easily calculate the differential entropy of each step using equation (2) and the differential entropy of the Gaussian distribution [2] (see Equation (8) below)

$$h(X) = \frac{1}{2} \ln\left(2 \pi e\, \sigma^2\right) \tag{8}$$

Figure 1. Venn diagram of the conditional entropies of the diffusion process.

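$$R = \frac{1}{dt}\left[\frac{1}{2}\ln\left(2\pi e\left(\sigma_x + \frac{\sigma_p\, dt}{m}\right)^2\right) - \frac{1}{2}\ln\left(2\pi e\, \sigma_x^2\right)\right] = \frac{1}{dt}\ln\left(1 + \frac{\sigma_p\, dt}{m\, \sigma_x}\right) \tag{9}$$

Using equations (31) and (32), $\sigma_p^2 = m k_B T$ and $\sigma_x = \hbar / (2\sigma_p)$, this is re-written

$$R = \frac{1}{dt}\ln\left(1 + \frac{2 k_B T}{\hbar}\, dt\right) \tag{10}$$

We are assured by assumption 1 that $2 k_B T\, dt / \hbar \ll 1$. Thus, we can Taylor expand the logarithm giving the first term plus the terms that are O(dt) or smaller.

$$R = \frac{2 k_B T}{\hbar} + O(dt) \tag{11}$$

Ignoring the terms of O(dt) or smaller, we get our primary result

$$R = \frac{2 k_B T}{\hbar} \tag{12}$$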
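The Taylor-expansion step can be checked numerically. The sketch below compares the discrete rate ln(1 + 2 k_B T dt/ħ)/dt with its limiting value 2 k_B T/ħ as dt shrinks. The function names and the reference time scale ħ/(2 k_B T) used to choose the step sizes are assumptions of this illustration.

```python
import math

K_B = 1.380649e-23       # Boltzmann's constant, J/K
HBAR = 1.054571817e-34   # reduced Planck constant, J*s

def discrete_rate(temperature_kelvin: float, dt: float) -> float:
    """(h(X_1) - h(X_0)) / dt = ln(1 + 2 k_B T dt / hbar) / dt, in nats/s."""
    x = 2.0 * K_B * temperature_kelvin * dt / HBAR
    return math.log1p(x) / dt   # log1p stays accurate for small x

def limiting_rate(temperature_kelvin: float) -> float:
    """Leading term of the expansion, 2 k_B T / hbar."""
    return 2.0 * K_B * temperature_kelvin / HBAR

T = 300.0                          # illustrative temperature
scale = HBAR / (2.0 * K_B * T)     # assumed reference time scale
for fraction in (1.0, 1e-1, 1e-2, 1e-4):
    ratio = discrete_rate(T, fraction * scale) / limiting_rate(T)
    print(f"dt = {fraction:g} * hbar/(2 k_B T): discrete/limit = {ratio:.6f}")
```

The ratio approaches one as dt becomes small compared with ħ/(2 k_B T), which is the regime assumption 1 requires.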

The other method to calculate the entropy rate is $\lim_{n \to \infty} h(X_n, \ldots, X_0) / (n\, dt)$, which equals the limit as n goes to infinity of the entropy of all the Xn’s divided by n times dt [3]. Since we are looking at the rate of generation of the entropy (not the initial conditions), we subtract the entropy of the initial state h(X0). This also assures that R is in the correct units.

$$R = \lim_{n \to \infty} \frac{h(X_n, \ldots, X_0) - h(X_0)}{n\, dt} \tag{13}$$

Since $h(X_{n-1} \mid X_n) = 0$ (assumption 2), we know that

$$h(X_n, \ldots, X_0) = h(X_n) + h(X_{n-1}, \ldots, X_0 \mid X_n) = h(X_n),$$

thus

$$R = \lim_{n \to \infty} \frac{h(X_n) - h(X_0)}{n\, dt} \tag{14}$$

We can insert zero into the limit, so that

$$R = \lim_{n \to \infty} \frac{h(X_n) - h(X_{n-1}) + h(X_{n-1}) - \cdots - h(X_1) + h(X_1) - h(X_0)}{n\, dt} \tag{15}$$

becomes

$$R = \lim_{n \to \infty} \frac{1}{n\, dt} \sum_{i=1}^{n} \left[h(X_i) - h(X_{i-1})\right] \tag{16}$$

Assumption 3 now lets us rewrite this as

$$R = \lim_{n \to \infty} \frac{1}{n\, dt} \sum_{i=1}^{n} \left[h(X_1) - h(X_0)\right] \tag{17}$$

We see that

$$R = \lim_{n \to \infty} \frac{n \left[h(X_1) - h(X_0)\right]}{n\, dt} = \frac{h(X_1) - h(X_0)}{dt} \tag{18}$$

We can safely conclude that

$$R = \frac{2 k_B T}{\hbar} \tag{19}$$

In this view, the temperature acts as an average energy and generates information (or entropy) at a rate equal to twice the average energy divided by ħ.

5. The Variance of Xn

Given the wave particle duality, which states that a free particle is both a wave and a particle, we see that our free particle undergoes both quantum mechanical diffusion of the wave and classical diffusion of the particle.

Introducing $X_{QM}$, $X_c$, $\rho_{QM}(x,t)$ and $f(x,t)$ makes this more clear. $X_{QM}$ is a random variable drawn from $\rho_{QM}(x,t) = |\psi(x,t)|^2$, the probability distribution associated with the quantum mechanical wave-function, which is the solution to the quantum diffusion equation, equation (33). $X_c$ is a random variable drawn from $f(x,t)$, which is the solution to the real diffusion equation, equation (42).

If $X$ were an observation of where the particle is located, it would be the sum of a sample $X_{QM}$ drawn from $\rho_{QM}(x,t)$ and the uncorrelated sample $X_c$, drawn from $f(x,t)$.

$$X = X_{QM} + X_c \tag{20}$$

Thus the action of $X_c$ is to translate the center of the wavefunction, $\psi(x,t)$, by a sample of $f(x,t)$.

As we know from probability theory, the resulting distribution, $\rho(x,t)$, is equal to the convolution of $\rho_{QM}(x,t)$ and $f(x,t)$ over the x variable (30) [4].

$$\rho(x,t) = \int_{-\infty}^{\infty} \rho_{QM}(x - y, t)\, f(y, t)\, dy \tag{21}$$

Since both $\rho_{QM}(x,t)$ and $f(x,t)$ are Gaussian distributions, it is easy to show that the convolution of the two is again a Gaussian distribution with an expected value being equal to the sum of the two expected values (which in this case is zero) and a variance that is equal to the sum of the variances of the individual distributions.

$$E[X] = E[X_{QM}] + E[X_c] = 0 \tag{22}$$

$$\mathrm{Var}(X) = \mathrm{Var}(X_{QM}) + \mathrm{Var}(X_c) \tag{23}$$

As shown in Equation (40), the variance of $X_{QM}$ is $\sigma_{QM}^2(t)$.

$$\sigma_{QM}^2(t) = \sigma_x^2 + \frac{\sigma_p^2\, t^2}{m^2} \tag{24}$$

In this equation t is the amount of time that has passed since the particle was initialized in the minimum uncertainty state, $\sigma_x$ is the standard deviation of the minimum uncertainty state, $\sigma_p$ is the standard deviation of the minimum uncertainty state in the momentum domain and m is the mass of the particle.

As shown in Equation (48), the variance of $X_c$ is $\sigma_c^2(t)$.

$$\sigma_c^2(t) = 2 D t = \frac{\hbar\, t}{m} \tag{25}$$

Thus we get $\sigma^2(t) = \sigma_{QM}^2(t) + \sigma_c^2(t)$.

$$\sigma^2(t) = \sigma_x^2 + \frac{\sigma_p^2\, t^2}{m^2} + \frac{\hbar\, t}{m} \tag{26}$$

Inserting into the last term the Heisenberg Uncertainty principle (32), $\hbar = 2 \sigma_x \sigma_p$, we can group.

$$\sigma^2(t) = \sigma_x^2 + \frac{2 \sigma_x \sigma_p\, t}{m} + \frac{\sigma_p^2\, t^2}{m^2} = \left(\sigma_x + \frac{\sigma_p\, t}{m}\right)^2 \tag{27}$$
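The grouping step can be verified numerically: with the minimum uncertainty relation σ_x σ_p = ħ/2, the sum of the initial variance, the thermal-drift variance (σ_p t/m)² and the classical-diffusion variance ħt/m equals the perfect square (σ_x + σ_p t/m)². The sketch below is a minimal check; the function names, the electron mass and the 1 nm initial width are illustrative assumptions.

```python
import math

HBAR = 1.054571817e-34          # reduced Planck constant, J*s
ELECTRON_MASS = 9.1093837015e-31  # kg, used only as an example mass

def variance_as_sum(sigma_x: float, t: float, mass: float) -> float:
    """Initial + thermal-drift + classical-diffusion variances."""
    sigma_p = HBAR / (2.0 * sigma_x)       # minimum uncertainty state
    initial = sigma_x ** 2
    drift = (sigma_p * t / mass) ** 2
    diffusion = HBAR * t / mass            # 2 D t with D = hbar / (2 m)
    return initial + drift + diffusion

def variance_grouped(sigma_x: float, t: float, mass: float) -> float:
    """The same variance written as a perfect square."""
    sigma_p = HBAR / (2.0 * sigma_x)
    return (sigma_x + sigma_p * t / mass) ** 2

sigma_x = 1e-9  # metres, illustrative initial width
for t in (0.0, 1e-15, 1e-12, 1e-9):
    a = variance_as_sum(sigma_x, t, ELECTRON_MASS)
    b = variance_grouped(sigma_x, t, ELECTRON_MASS)
    print(f"t = {t:.0e} s: sum = {a:.6e} m^2, grouped = {b:.6e} m^2")
```

The two expressions agree to floating-point precision only because the cross term 2 σ_x σ_p t/m equals ħt/m for a minimum uncertainty state; for a wider initial state the identity would not hold.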

To understand the model, it is helpful to look at Equation (26). $\sigma^2(t)$ is the sum of three variances. The first is from the Heisenberg Uncertainty Principle of the initialized state, the second is from the thermal drift of the center of the minimum uncertainty wavepacket moving with a group momentum taken as a sample of the momentum domain, and the third is from the classical diffusion of the center of the wave-function on top of the other two.

It is also possible to derive Equation (27) by assuming no force on the particle, which lets you deduce

$$x(t) = x(0) + \frac{p}{m}\, t.$$

Squaring and taking the ensemble average is all you need [5].

6. The Imaginary Diffusion Equation

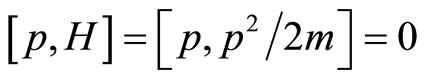

The Kinetic Energy Hamiltonian characterizes the wave packet of a free particle in one dimension, where H is the Hamiltonian, p is the momentum along the x direction, and m is the mass of the particle [6].

$$H = \frac{p^2}{2m} \tag{28}$$

Given that the momentum commutes with the Hamiltonian,

$$[H, p] = 0,$$

each eigenvalue of the momentum is a constant of motion and thus the variance in momentum space does not grow with time. It is possible to learn the width of the momentum distribution by looking at the equipartition of energy [7], which equates the average kinetic energy of this degree of freedom to one half the temperature times Boltzmann’s constant.

$$\frac{\langle p^2 \rangle}{2m} = \frac{1}{2} k_B T \tag{29}$$

Since we will assume that the average momentum is zero, we can solve for the variance of the momentum.

$$\sigma_p^2 = \langle p^2 \rangle - \langle p \rangle^2 = \langle p^2 \rangle \tag{30}$$

$$\sigma_p^2 = m k_B T \tag{31}$$

Also from the Heisenberg Uncertainty Principle, we can solve for the standard deviation of the wave-function in the spatial domain in terms of its width in the momentum space.

$$\sigma_x = \frac{\hbar}{2 \sigma_p} \tag{32}$$
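To make the two widths concrete, the sketch below computes the momentum width from equipartition, σ_p = sqrt(m k_B T), and the position width from the minimum uncertainty relation, σ_x = ħ/(2 σ_p), for two example particles. It also checks that the combination σ_p/(m σ_x) equals 2 k_B T/ħ regardless of mass. The function names and the choice of electron and proton masses are illustrative assumptions.

```python
import math

K_B = 1.380649e-23       # Boltzmann's constant, J/K
HBAR = 1.054571817e-34   # reduced Planck constant, J*s

# Example masses in kg (CODATA 2018), chosen only for illustration.
MASSES = {"electron": 9.1093837015e-31, "proton": 1.67262192369e-27}

def momentum_std(mass: float, temperature_kelvin: float) -> float:
    """sigma_p from equipartition: <p^2> / (2 m) = k_B T / 2."""
    return math.sqrt(mass * K_B * temperature_kelvin)

def position_std(mass: float, temperature_kelvin: float) -> float:
    """sigma_x of the minimum uncertainty state: sigma_x * sigma_p = hbar / 2."""
    return HBAR / (2.0 * momentum_std(mass, temperature_kelvin))

T = 300.0  # illustrative temperature
for name, mass in MASSES.items():
    sigma_p = momentum_std(mass, T)
    sigma_x = position_std(mass, T)
    rate = sigma_p / (mass * sigma_x)   # mass cancels: equals 2 k_B T / hbar
    print(f"{name}: sigma_p = {sigma_p:.3e} kg m/s, "
          f"sigma_x = {sigma_x:.3e} m, sigma_p/(m sigma_x) = {rate:.3e} 1/s")
```

The last column is identical for both particles, which is the numerical counterpart of the statement that the entropy rate does not depend on mass.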

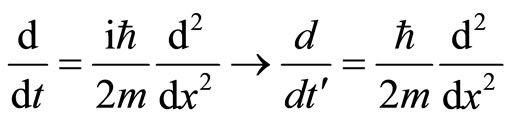

With these dependencies stated, we can move onto the imaginary diffusion equation, which takes the original Hamiltonian and rewrites it in terms of operators. Interpreting the Hamiltonian as the imaginary time derivative operator and the momentum as the negative imaginary spatial derivative operator we can take equation (28) and arrive at the imaginary diffusion equation

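$$i\hbar \frac{\partial \psi}{\partial t} = -\frac{\hbar^2}{2m} \frac{\partial^2 \psi}{\partial x^2}, \quad \text{i.e.} \quad \frac{\partial \psi}{\partial t} = \frac{i\hbar}{2m} \frac{\partial^2 \psi}{\partial x^2} \tag{33}$$

Don’t forget that we still have the eigenvalue Equations (34), (35) where H and p are the operators and $\hbar\omega$ and $\hbar k$ are the eigenvalues.

$$H \psi = \hbar \omega\, \psi \tag{34}$$

$$p\, \psi = \hbar k\, \psi \tag{35}$$

We can calculate the different eigenvalues, $\hbar\omega$ and $\hbar k$, through equations (28), (34), (35) and as we should expect arrive at the equation for kinetic energy.

$$\hbar \omega = \frac{\hbar^2 k^2}{2m} \tag{36}$$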

To solve equation (33), we will begin in the momentum domain, $\phi(\kappa)$, and take the inverse Fourier Transform to observe how $\psi(x,t)$ evolves over time [8]. We use $\kappa = k / 2\pi$ (the wavenumber divided by $2\pi$) as the independent variable because we want both $\phi(\kappa)$ and $\psi(x,t)$ to be normalizable to one.

$$\phi(\kappa) = \left(2 \pi \sigma_\kappa^2\right)^{-1/4} \exp\left(-\frac{\kappa^2}{4 \sigma_\kappa^2}\right), \qquad \sigma_\kappa = \frac{\sigma_p}{2 \pi \hbar} \tag{37}$$

Our assumption that the wave-function of the free particle in the momentum space is a Gaussian wave-packet is quite reasonable given the nice properties of the Gaussian. Similarly, this assumption is already implicit in the equipartition of energy which was used to find the width of the initial wave-packet. Because the equipartition theorem is derived from the perfect gas law (where particles are modeled using the binomial distribution, of which the Gaussian is the limit), the Gaussian is the right distribution to start with.

To properly account for the evolution of $\psi(x,t)$ governed by equation (33), $e^{i(2\pi\kappa x - \omega t)}$ is used as the kernel for the inverse Fourier Transform.

$$\psi(x,t) = \int_{-\infty}^{\infty} \phi(\kappa)\, e^{i(2\pi\kappa x - \omega t)}\, d\kappa \tag{38}$$

Using equation (36) to substitute in for ω you can solve equation (38) by completing the square to get $\psi(x,t)$ [8]. $\psi(x,t)$ is in Gaussian form; to calculate the variance, we need to take the magnitude squared of the wave-function and get the distribution of the particle.

$$|\psi(x,t)|^2 = \frac{1}{\sqrt{2 \pi \sigma_{QM}^2(t)}} \exp\left(-\frac{x^2}{2 \sigma_{QM}^2(t)}\right) \tag{39}$$

Where

$$\sigma_{QM}^2(t) = \sigma_x^2 + \frac{\sigma_p^2\, t^2}{m^2} \tag{40}$$

and

(41)

This is, of course, the well known result from quantum mechanics where the variance of the particle is the sum of the initial variance from the Heisenberg Uncertainty Principle and the associated variance of the momentum domain imparting a thermal group velocity $\sigma_p / m$ [9].

7. The Real Diffusion Equation

When the diffusion constant of a diffusion process is real and does not vary with position, the resulting diffusion equation is as below [10].

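$$\frac{\partial f}{\partial t} = D \frac{\partial^2 f}{\partial x^2} \tag{42}$$

Of course the solution to this real diffusion equation is the Gaussian with variance equal to 2Dt [11].

$$f(x,t) = \frac{1}{\sqrt{4 \pi D t}} \exp\left(-\frac{x^2}{4 D t}\right) \tag{43}$$

$$\sigma_c^2(t) = 2 D t \tag{44}$$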

To find D, we will start with the imaginary diffusion operator and using analytical continuation, perform a Minkowski transformation [6]. The imaginary diffusion operator (33) is

$$\frac{\partial}{\partial t} = \frac{i\hbar}{2m} \frac{\partial^2}{\partial x^2} \tag{45}$$

Upon applying the Minkowski transformation, imaginary time is replaced with real time, $it \to t$ [12]. Applied on the imaginary diffusion operator, the Minkowski transformation brings out the real diffusion constant we are looking for.

$$\frac{\partial}{\partial t} = \frac{\hbar}{2m} \frac{\partial^2}{\partial x^2} \tag{46}$$

By observation we see that

$$D = \frac{\hbar}{2m} \tag{47}$$

We can also derive $D = \hbar / 2m$ from kinematic arguments as was shown in [1]. We can calculate the variance of f(x,t).

$$\sigma_c^2(t) = \int_{-\infty}^{\infty} x^2 f(x,t)\, dx = 2 D t = \frac{\hbar\, t}{m} \tag{48}$$
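The variance 2Dt of the real diffusion process can be illustrated with a simple Monte Carlo random walk. The sketch below is not taken from the original derivation: it assumes dimensionless units in which ħ/m = 1 (so D = 1/2 and the expected variance equals t), uses independent Gaussian steps of variance 2 D dt, and the function name, walker count and seed are arbitrary choices.

```python
import math
import random

def simulated_variance(diffusion_constant: float, dt: float,
                       n_steps: int, n_walkers: int, seed: int = 0) -> float:
    """Sample variance of walker positions after n_steps Gaussian steps."""
    rng = random.Random(seed)
    step_std = math.sqrt(2.0 * diffusion_constant * dt)  # per-step std dev
    finals = []
    for _ in range(n_walkers):
        x = 0.0                      # every walker starts at the origin
        for _ in range(n_steps):
            x += rng.gauss(0.0, step_std)
        finals.append(x)
    mean = sum(finals) / n_walkers
    return sum((x - mean) ** 2 for x in finals) / (n_walkers - 1)

HBAR_OVER_M = 1.0                    # assumed dimensionless units
D = HBAR_OVER_M / 2.0                # D = hbar / (2 m)
dt, n_steps = 0.01, 100
t = n_steps * dt
estimate = simulated_variance(D, dt, n_steps, n_walkers=4000)
print(f"simulated variance = {estimate:.3f}, expected 2 D t = {2.0 * D * t:.3f}")
```

With a few thousand walkers the sample variance should land within a few percent of 2Dt; the agreement tightens as the number of walkers grows.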

8. Entropy at $t = 0$

It is important to ask what the entropy of the initial state is. We find that at $t = 0$ the entropy is 1 natural unit. Since the wavefunction and associated probability distribution are continuous, we calculate the entropy using the equation for differential entropy. One might object that the differential entropy is only accurate up to a scale factor. However I argue (and so did Hirschman [13]) that if you add the differential entropy in the dual domain, the scale factor cancels out because of the scale property of the Fourier Transform and the result is an absolute measure. As before we will use the position and the wavenumber divided by $2\pi$ as the dual domains.

$$H = -\int |\psi(x,0)|^2 \ln |\psi(x,0)|^2\, dx - \int |\phi(\kappa)|^2 \ln |\phi(\kappa)|^2\, d\kappa \tag{49}$$

Hirschman [13] showed that this entropy for any wavefunction is $\geq \ln(e/2)$ and $= \ln(e/2)$ when the wavefunction is Gaussian.

While we are working with a Gaussian initial state, the answer appears to be a little more complex than just $\ln(e/2)$. We learn from [1] that when solving for the quantum and relativistic length scales of dark particles, particles come in pairs. With only one particle and no reference frame there is no way of knowing the position or the momentum, even if there were universal measuring sticks.

We get around this with two particles and a measuring stick/clock by determining the relative displacement and speed. Thus we need to look at the entropy of the relative difference of position and momentum of the two particles.

Define $\rho_1(x)$ as the probability distribution on the location of particle 1 at $t = 0$ and similarly $\rho_2(x)$ for particle 2. For the momentum space define $\gamma_1(\kappa)$ as the probability distribution on the wavenumber divided by $2\pi$ for the first particle and $\gamma_2(\kappa)$ for the second particle.

The probability distributions on the relative displacement and wavenumber, $x_1 - x_2$ and $\kappa_1 - \kappa_2$, are $\rho_r(x)$ and $\gamma_r(\kappa)$, respectively, and will be Gaussian assuming both the reference particle and the initial particle have Gaussian wave-functions. Since differential entropy is invariant to the first moment we can assume, without loss of generality, that the first moment of the reference wave-function is zero. The second moments of $\rho_r(x)$ and $\gamma_r(\kappa)$ will be the sum of the respective second moments of the particle and reference particle if the two are not correlated.

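We can go even further and show that the reference particle should have the same second moments as the particle we are measuring if we minimize the entropy. Thus, we arrive at the distributions for both domains for $\rho_r(x)$ and $\gamma_r(\kappa)$

$$\rho_r(x) = \frac{1}{\sqrt{4 \pi \sigma_x^2}} \exp\left(-\frac{x^2}{4 \sigma_x^2}\right) \tag{49}$$

$$\gamma_r(\kappa) = \frac{1}{\sqrt{4 \pi \sigma_\kappa^2}} \exp\left(-\frac{\kappa^2}{4 \sigma_\kappa^2}\right) \tag{50}$$

where $\sigma_\kappa = \sigma_p / (2\pi\hbar)$, so that $\sigma_x \sigma_\kappa = 1/(4\pi)$ by the Heisenberg Uncertainty Principle (32). Thus, the total absolute entropy of the initial state, $H_0$, is

$$H_0 = h(\rho_r) + h(\gamma_r) \tag{51}$$

$$H_0 = \frac{1}{2} \ln\left(4 \pi e\, \sigma_x^2\right) + \frac{1}{2} \ln\left(4 \pi e\, \sigma_\kappa^2\right) = \ln\left(4 \pi e\, \sigma_x \sigma_\kappa\right) \tag{52}$$

$$H_0 = \ln(e) = 1 \tag{53}$$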
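The two entropy values quoted in this section can be reproduced from the Gaussian differential entropy, (1/2) ln(2πe σ²). The sketch below assumes a minimum uncertainty state in the position and wavenumber-over-2π domains, for which σ_x σ_κ = 1/(4π), and doubles both variances for the relative (two-particle) distributions. The function names and the symbol σ_κ are notation introduced for this illustration.

```python
import math

def gaussian_entropy(variance: float) -> float:
    """Differential entropy of a Gaussian, in natural units."""
    return 0.5 * math.log(2.0 * math.pi * math.e * variance)

def initial_state_entropies(sigma_x: float) -> tuple[float, float]:
    """Return (single-particle, relative two-particle) dual-domain entropy."""
    # Minimum uncertainty in (x, k / 2 pi): sigma_x * sigma_kappa = 1 / (4 pi).
    sigma_kappa = 1.0 / (4.0 * math.pi * sigma_x)
    single = gaussian_entropy(sigma_x ** 2) + gaussian_entropy(sigma_kappa ** 2)
    # Uncorrelated particle and reference with equal widths: variances add.
    relative = (gaussian_entropy(2.0 * sigma_x ** 2)
                + gaussian_entropy(2.0 * sigma_kappa ** 2))
    return single, relative

for sigma_x in (1e-9, 1.0, 3.7):     # arbitrary widths; the result is scale free
    single, relative = initial_state_entropies(sigma_x)
    print(f"sigma_x = {sigma_x:g}: single = {single:.6f} nats, "
          f"relative = {relative:.6f} nats")
```

The single-particle sum equals ln(e/2) and the relative sum equals 1 natural unit for every choice of σ_x, which illustrates the cancellation of the scale factor between the dual domains.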

There are 2 things of note relative to the rate, $R$, calculated above. First we see that since the rate, $R$, from above, is the difference between the entropy at two times, the impact of the wider distribution of $\rho_r$ vs. $\rho_1$ is negated. Thus we could have done the analysis above using $\rho_r$ and $\gamma_r$ instead of $\rho_1$ and $\gamma_1$ and the result would be the same. Second, we see that the entropy of the initial state is equal to the additional entropy generated by the diffusion process during the de-coherence time, $\tau = \hbar / (2 k_B T)$.

9. Conclusions

We have seen that by making three assumptions about the thermal diffusion of a free particle, we are able to show that entropy is generated at a rate equal to twice the particle’s temperature (when expressed in the correct units).

This result will be applicable to all studies on free particles and other environments that are governed by similar equations. Also a myriad of applications exist in computer modeling, including but not limited to the following: finite difference time domain methods, Bloch’s equations for nuclear magnetic resonance imaging, and plasma and semiconductor physics.

To check the primary result, one would perform a quantum non-demolition measurement on the quantum state of an ensemble of free particles. The minimum bit rate needed to describe the resulting string of numbers that describe the trajectory would be the entropy rate and should be equal to twice the temperature.

However, even before an experiment can be conducted, this result is useful by suggesting the use of different information theoretical techniques to examine problems with de-coherence and might give a different perspective on the meaning of temperature.

This result is interesting as a stand-alone data point, that the entropy rate is equal to twice the temperature. However, if we could go further and more generally say that temperature is the same as entropy rate, it would change the way we view temperature and entropy.

10. Acknowledgements

JLH thanks Thomas Cover for sharing his passion for the Elements of Information Theory, and McKinsey & Co. for the amazing environment where this article was written.