Relating Optimization Problems to Systems of Inequalities and Equalities ()

1. Introduction

Quantitative decision analysis involves notions of comparison and optimality. The result is that the mathematical models used to make decisions frequently involve an optimization problem to determine the values of the decision variables, a system S of possibly nonlinear inequalities and equalities to restrict these variables, or both. The solution of such a system S and optimization problems is thus essential to decision analysis. In this note we relate a general nonlinear programming problem to a system S to provide a solution of either by solving the other—with certain limitations. In particular, we present a method for either obtaining a solution for S or else establishing that a solution does not exist by using existing computational techniques. Our method generalizes phase 1 of the two-phase linear programming simplex method to nonlinear programming. We also present an algorithm to solve a general nonlinear programming problem as a series of such systems S by generalizing the “sliding objective” method for geometrically solving a two-dimensional linear programming problem.

As background, we note that systems of linear equations have been considered for at least three millennia. By then the Chinese had organized linear systems of equations in a matrix-like form and solved them with a procedure equivalent to Gaussian Elimination [1]. In the third century BCE, Archimedes [2] formulated his well-known cattle problem as a system of linear equations that required an integer solution. In the seventeenth century Descartes introduced systems of linear equations in geometry, and later that century Leibniz developed a systematic method of finding solution using determinants [3]. In the nineteenth century Cramer used Leibniz’s work to establish a way of getting explicit solutions via his eponymous Cramer’s rule, and Grassman began to synthesize these developments into what is now called linear algebra [4].

The history of the theory of linear inequalities is more recent and developed through the interactions between mathematics and other disciplines [5]. In the nineteenth century Fourier proposed the idea of constructing a mathematical theory for systems of linear inequalities. Shortly thereafter, Farkas developed a theory of systems of linear inequalities with respect to analytical mechanics that led to Farkas Lemma. At the end of the nineteenth century, Minkowski—independent of Farkas—derived a theory of linear inequalities with respect to convexity [5]. In the twentieth century Lovitt introduced the preferential voting problem as a set of inequalities and sought a geometric solution. Later, Dines approached the voting problem in an algebraic way [5]. The ideas behind his solution method led to the development of a theory of systems of linear inequalities [6]. He also examined the relation between the matrix of the coefficients of a system of linear inequalities, the existence of their solutions, and the characteristics of solutions. Further studies by Kuhn and Tucker [7] [8], for example, refined these results but did not relate optimization problems to linear systems as done here in the general case.

An efficient computational method to solve a system of linear inequalities and equalities did not exist until Dantzig [9] suggested a phase 1 involving artificial variables to start the simplex method. Subsequent work involving optimization has focused on the solvability of convex inequality constraints in a convex programming problem [11], solving systems of linear interval inequalities [12] [13], or considering variational inequalities [14] [15], for example.

Here we generalize Dantzig’s approach to systems of nonlinear inequalities and equalities S by considering an associated nonlinear programming problem. We then extend the geometric “sliding objective function method” [9] for solving a two-variable linear programming problem to solving a general nonlinear programming problem. Our approach requires determining the solvability of a nonlinear systems S at each iteration.

The paper is organized as follows. In Section 2, a correspondence is established between the solvability of a nonlinear system S and an associated non-linear programming minimization problem. We then present an algorithm for solving a general nonlinear programming minimization problem, to any degree of accuracy, as a series of systems S. In Section 3, examples are given. Conclusions are stated in Section 4.

2. Basic Results

For real-valued functions f, g, h, consider the system S of inequalities and equalities (1)-(3) and the minimization problem T below, where X is a set in which

is required to satisfy further requirements. For example, if nonnegativity restrictions

are automatically applied by the solver to be used, then X could be the set

. X could also be the set

, where

is the set of nonnegative integers. We note that each equality in (2) could be replaced by two inequalities in opposite directions so that S without (2) remains a general formulation.

(1)

(2)

(3)

(T) Minimize

subject to

(4)

(5)

(6)

(7)

(8)

The variables

in T but not in S are called slack variables as in linear programming. They represent the nonnegative difference between the left and right sides of (1). Similarly, the variables

, are called artificial variables and should each have the value 0 if (1)-(2) are to hold. The main result relating S and T is now stated.

Proposition 1. System S has a solution

if and only if problem T has a solution

for which

. In particular, a solution

to T for which

, determines the solution

to S.

Proof. Suppose that system S has a solution

. Then

satisfies (1)-(3). In particular,

(9)

(10)

It follows from (9) that for all

, there exists

such that

. Set

. Then

satisfies (4)-(8) and thus solves T since

, which is the minimum possible value of the objective function of T. Next suppose that

solves T and

. Then immediately

solves S, so the proof is complete.

Proposition 1 has two immediate corollaries.

Corollary 1. System S has no solution if and only if problem T has no solution for which

.

Corollary 2. Proposition 1 remains true for any one or more of the following modifications to T:

1) Any

artificial variables have been added in (4)-(5) but not necessarily one for each equation,

2) The coefficient of an added artificial variable in (4)-(5) is any nonzero scalar,

3) The objective function of T is the sum of the added artificial variables with any positive scalar coefficients.

Corollary 1 is simply an equivalent restatement of Proposition 1 in terms of a necessary and sufficient condition for the nonexistence of a solution to S. Proposition 1 and Corollary 1 together fully address the solvability of S. Corollary 2 generalizes the problem T under which Proposition 1 is valid. Corollary 2 is established with a proof similar to that of Proposition 1.

Observe that the efficiency of solving the problem T in either Proposition 1 or Corollary 2 depends both on the nature of T and the computational method used to solve it. For example, Proposition 1 can be applied to a Diophantine equation—a polynomial equation with integral coefficients, usually in two or more variables, for which integer solutions are required. It is well known that there are undecidable Diophantine equations [10]; that is, there is no possible algorithm to determine in finite time if a solution exists. It follows that a problem T associated with a system S consisting of a single Diophantine equation may be undecideable.

Now consider the following system

of inequalities and equalities (11)-(14) and the minimization problem

, where z is a variable representing the scalar

.

(11)

(12)

(13)

(14)

Minimize

subject to

(15)

(16)

(17)

Assume that

solves T' with

. Then

is obviously a solution to system

. On the other hand, a solution

to

is not necessarily a solution to

since it is possible that

. However, the minimum value

of the objective function

for

can be obtained to any degree of accuracy with the following algorithm by solving a sequence of systems

, each with a different value for z.

![]()

Algorithm 1 is an extension of the “sliding objective function method” for solving a two-variable linear programming problem [9]. More generally, it is a level-set method since

is a sublevel set [16] of f, a concept used extensively in quasiconvex minimization [17]. The difficulty in solving

by Algorithm 1 is that one must solve a series of systems

via Proposition 1 or Corollary 2. But advancing computer techniques [18] may allow a computer to “visualize” the m-dimensional sublevel sets of Algorithm 1 and thus determine at least an approximate solution to

geometrically in a manner analogous to finding the zeros of a real-valued function with graphing software.

Algorithm 1 may also be construed as an inverse approach for nonlinear problems to the linear active-set constraint selection method in m-dimensions described in [19] [20] [21] [22]. There, for a minimization problem, z increases at each iteration upon adding more active constraints until all constraints are active. In contrast, z decreases in Algorithm 1 until there are no solutions to

. In the former case, the additional constraints and resulting smaller feasible region cause z to increase. In the latter case, a smaller z gives a smaller feasible region for

. The significance of this comparison is that an approach related to Algorithm 1 has been efficiently implemented for large-scale linear programming problems

.

3. Applications

3.1. Example of Solving Nonlinear Inequalities and Equalities

Consider the following system S of inequalities and equalities:

(18)

(19)

(20)

(21)

To find a solution for S or else determine that a solution does not exist for (18)-(21), we apply Proposition 1. The associated problem problem

is

(P1) Minimize

subject to

Problem P1 is then solved by the nonlinear programming solver BARON in the General Algebraic Modeling System (GAMS) [23], which gives to two decimal places

,

,

,

,

,

with an objective function value of 0. It follows from Proposition 1 that

solves (18)-(21).

We note that applying the same approach to the system

(22)

(23)

(24)

(25)

gives a nonzero optimal objective function value after putting (22) in the standard inequality direction (1). Hence no solution exists for system (22)-(25).

3.2. Example of Finding the Core of a Cooperative Game

In cooperative game theory, the solution concept called the core of a game [24] reduces to the solution of a system of linear inequalities and equalities. A cooperative game is one in which players form coalitions, coordinate their strategies, and share the payoffs. Given a cooperative n-person game, let

denote the set of players. For each subset S of N, the characteristic function

of the game gives the amount

that the members of S can be certain of receiving if they form a coalition. A reward vector

stipulates the amount

that player i receives. If for

, a reward vector

satisfies

then

is called an imputation. The core of an n-person game is the set of all undominated imputations. An imputation

is in the core of an n-person game if and only if for each subset S of N the sum of its players’ rewards is at least

.

The following example is adapted from [24]. Let

be a 3-player game with characteristic function

and

. Then a reward vector

is an imputation if and only if

(26)

(27)

(28)

(29)

An imputation

will be in the core if and only if

also satisfies

(30)

(31)

(32)

(33)

To find the core defined by (26)-(33), we remove the redundant constraint (33). In addition, let

to avoid adding slack and artificial variables to the nonnegativity restrictions. We then find the solutions of the system

(34)

(35)

(36)

(37)

(38)

in the standard form (1)-(3) for S.

The associated minimization problem T for (34)-(38) is

(P2) Minimize

subject to

Solving P2 with the CPLEX solver in GAMS, we obtain the unique solution

,

,

,

. Thus the system (34)-(38) has a solution

according to Proposition 1, and the core of G1 is

.

3.3. Example of Solving a Linear Goal Program

Consider the following goal programming advertising model adapted from [25]. There are four resource constraints and two goals, where

and

are the nonnegative number of minutes for radio and television ads, respectively, to be bought for advertising some product. The resource constraints impose limitations on developing the ads. The first goal is that the ads should reach at least 45 million people, while the second goal is that the total cost spent on both ads should be no more than 100 thousand dollars. The explicit resource and goal constraints are given as the system

(39)

(40)

(41)

(42)

(43)

(44)

We apply Corollary 2 to solve (39)-(44) for

or else to determine that both goals cannot be satisfied. We include the nonnegativity constraints (41)-(42) in the set

to avoid adding slack variables to them. We then change the direction of (43) to ≤ as in (1), add slack variables to all inequalities, but only add artificial variables to the goal constraints. We do not distinguish between the relative importance of the goals. Problem T in Corollary 2 thus becomes

(P3) Minimize

subject to

The CPLEX solver in GAMS gives that P3 has no solution and hence that (39)-(44) cannot be jointly satisfied. However, a slight modification of P3 yields further information. We now subtract artificial variables instead of adding them in the goal constraints of P3, as allowed by Corollary 2. In this case, we get a solution

,

,

,

,

. The conclusion from Proposition 1 is again that (39)-(44) cannot be satisfied. But now

is the amount by which (43) cannot be met for

. This point satisfies (44), however, since

. Such information is available if artificial variables are used only in the goal constraints of (4) and are subtracted rather than added. In that case, the slack and artificial variables in a goal constraint act as a pair of deviational variables [26].

Reference [26] also discusses weighting the artificial variables differently in the objective function of T. Such a weighting can account for the relative importance of the different goals as well as normalize the goal constraints to a comparable scale. T would then provide a more accurate model.

3.4. Example of Solving a Nonlinear Goal Program

A nonlinear goal programming problem has either a nonlinear goal or a nonlinear resource constraint. Consider the following nonlinear two-goal programming problem, where

where

(45)

(46)

(47)

(48)

(49)

After taking the negative of (47) to add a slack variable and put (47) into the standard form (1), we use Proposition 1 to formulate (45)-(49) as a minimization problem and obtain

(P4) Minimize

subject to

The Baron solver in GAMS gives the solution

,

,

,

,

,

and

with an objective function value of 0. The zero objective function value indicates that

satisfies (45)-(49). Weighting the artificial variables in the objective function of problem T is also possible for nonlinear goal programming.

3.5. Example of Finding a Nash Equilibrium (NE)

Let G2 be the two-person nonzero sum game with the payoff matrix of Table 1. According to [27], the following system (50)-(58) is both necessary and sufficient for

and

to be a mixed NE for Players 1 and 2, respectively. Let

. The auxiliary variables

and

are needed in these conditions but not required to express the NE of G2.

(50)

(51)

(52)

(53)

(54)

(55)

(56)

(57)

(58)

Thus problem T of Proposition 1 is now

(P5) Minimize

subject to

The BARON solver in GAMS gives the solution

,

,

,

,

,

, with all the

and

equal to 0. It is well known that a mixed NE always exists [28] for a noncooperative game with a finite number of players having a finite number of strategies. This fact is confirmed here, and the mixed strategies of Players 1 and 2 for G2 are (0.6, 0.4) and (0.4, 0.6), respectively.

For

, necessary and sufficient conditions given in [29] can be similarly used to find an NE. Likewise, Berge [30] and more general equilibria can be found from the necessary and sufficient conditions stated in [31]. The reasoning behind these sets of conditions is that any equilibrium in noncooperative game theory is implicitly defined by an optimization problem with inequality and equality constraints. This fact results from the properties associated with the general meaning of an equilibrium. The system (50)-(58), for example, is just a way to simplify this optimization problem by writing it as a system of inequalities and equalities with auxiliary variables.

3.6. Example of Solving an Optimization Problem with Algorithm 1

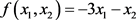

Consider the following problem with nonnegative integer variables.

(P6) Minimize

subject to

where

. Associated with the problem

of Algorithm 1 is the system

(59)

(60)

(61)

We apply Algorithm 1 to (59)-(61) with the following steps.

Step 1. We use Proposition 1 and the BARON solver in GAMS to find the point (1, 2) satisfying (60)-(61). Set

,

, and

.

Step 2. Set

. Using Proposition 1 for (59)-(61) with

, GAMS determines a solution. In general, Step 2 only requires information as to the existence or nonexistence of solutions to

for the current value of z.

Step 3. Set

.

Step 2. Set

. Then GAMS determines a solution for (59)-(61) with

.

Step 3. Set

.

Step 2. Set

. Now GAMS cannot find a solution to (59)-(61).

Step 4. The optimal objective function value of P6 lies in . Since the coefficients of the objective function

. Since the coefficients of the objective function  are integers, its minimum value must be −7 occurring at (2, 1).

are integers, its minimum value must be −7 occurring at (2, 1).

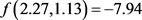

Figure 1 illustrates the application of Algorithm 1 to P6. For each fixed z, the set of  both satisfying (60)-(61) and lying to the right of the line

both satisfying (60)-(61) and lying to the right of the line  are feasible points to P6 that give smaller values of

are feasible points to P6 that give smaller values of . The solution to P6 is the point

. The solution to P6 is the point  as noted by the single dot with

as noted by the single dot with . The solution to two decimal places without the integer restriction is

. The solution to two decimal places without the integer restriction is  with

with .

.

![]()

Figure 1. Graphical Illustration of Solving P6 by Algorithm 1.

In general, the sublevel sets of the objective function f of the problem (T') solved by Algorithm 1 are considerably more complicated in higher dimensions, with more constraints, and for a nonlinear objective function than the level sets of P6. As seen in Figure 1, the sublevel sets for P6 are simple half-spaces. The two-dimensional level (not sublevel) sets of [18] provide more interesting visual examples in a context not involving optimization.

4. Conclusions

In this paper, we have related a general nonlinear programming problem to a system S of nonlinear inequalities and equalities in two ways. In the first, we solved S or else determined that a solution did not exist by solving an associated nonlinear programming problem. In particular, we used artificial variables and generalize phase 1 of the two-phase simplex method for the purpose of examining the solvability of S. Examples were given for a system of nonlinear inequalities and equalities, in cooperative and non-cooperative game theory, and in goal programming.

In the second way, we developed an algorithm to solve an a general nonlinear programming problem to any degree of accuracy by determining if a solution exists for each of a series of systems S, i.e., for a series of subproblems. The fact that an optimization problem can solve a given system S, but not vice versa, simply indicates that an optimization problem must essentially solve S as part of the optimization process. In this second approach, we generalized to nonlinear programming the “sliding objective function” method of linear programming, and an example was presented to illustrate its geometrical interpretation. We noted that in linear programming a sequential active-set method with an inverse interpretation of Algorithm 1 uses the simplex algorithm for each subproblem and has proved efficient in solving large-scale linear programming problems. This observation also emphasizes that both of our approaches here rely on existing computational techniques and thus might be construed as meta-approaches.