Robust Regression Diagnostics of Influential Observations in Linear Regression Model

1. Introduction

Multiple regression assesses the relationship between one dependent variable and a set of independent variables. The Ordinary Least Squares (OLS) estimator is the most widely used estimator of the parameters of a regression model. It has some very attractive statistical properties, which have made it one of the most powerful and popular estimators of the regression model. A common violation of the assumptions of the classical linear regression model is the presence of outliers. An outlier is an observation that appears to be inconsistent with the other observations in a set of data [1]. In regression, outliers can occur in three different forms: 1) outliers in the response variable; 2) outliers in the explanatory variables, called leverage points; and 3) outliers in both the response and explanatory variables. An outlier can either be influential or not. An influential observation is one that would cause some important aspects of the regression analysis (the regression estimates or the standard errors) to change substantially if it were removed from the data set [2].

The detection of outliers is an important problem in model building, inference and analysis of a regression model. The presence of outliers can lead to biased estimation of the parameters, misspecification of the model and inappropriate predictions [3] .

Regression diagnostics are therefore necessary in regression analysis in order to detect the presence of outliers and influential points. These measures use either the OLS residuals or some functions of the OLS residuals (standardized and studentized residuals) to detect outliers in the Y-direction, and the diagonal elements of the hat matrix to detect high leverage points (X-direction). It has been noted that the raw OLS residuals are not appropriate for diagnostic purposes, and scaled versions of the residuals were therefore introduced [3]. However, all these measures are still based on the ordinary least squares estimator.

Robust regression is an important estimation technique for analyzing data that are contaminated with outliers or that have a non-normal error term. It is often used for parameter estimation to provide resistant (stable) results in the presence of outliers. Several robust estimators have been proposed, including the M, MM, LTS and S estimators. A diagnostic measure based on the robust M estimator was introduced as an alternative to the OLS-based measures for detecting influential points [4]. The M estimator had earlier been observed to perform well when there are outliers in the Y-direction [5].

In this paper, the use of the robust MM, S and LTS estimators is proposed and considered as an alternative to the ordinary least squares (OLS) and robust M estimators.

2. Background

Consider the multiple linear regression model

(1)

(1)

where Y is an n × 1 vector of response variable, X is an n × p full rank matrix of known regressors variables augmented with a column of ones. β is p × 1 vector of the unknown regression coefficients and ε is the n × 1 vector of error terms with  and

and  and

and  is an n × n matrix of identity matrix.

is an n × n matrix of identity matrix.

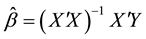

The OLS estimator is defined as:

$$\hat\beta = (X'X)^{-1}X'Y \tag{2}$$

Some useful properties of $\hat\beta$ are that it is an unbiased estimator, $E(\hat\beta) = \beta$, and that the Gauss-Markov theorem [6] guarantees that it is the best linear unbiased estimator (BLUE) when the assumptions of the classical regression model are not violated.
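As a small illustration of the OLS estimator in (2), the following sketch computes the coefficients with NumPy on simulated data. The data, the seed and the function name `ols_fit` are assumptions made for this example only; a least-squares solver is used instead of an explicit matrix inverse, which gives the same estimate when X has full rank.

```python
import numpy as np

def ols_fit(X, y):
    """OLS coefficients; equal to (X'X)^{-1} X'y when X has full column rank."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta

# Simulated data (illustrative only): intercept 1, slope 2, small noise.
rng = np.random.default_rng(0)
n = 50
x = rng.normal(size=n)
X = np.column_stack([np.ones(n), x])   # column of ones for the intercept
y = 1.0 + 2.0 * x + rng.normal(scale=0.1, size=n)

beta_hat = ols_fit(X, y)
# The same estimate obtained from the normal equations X'X b = X'y.
beta_normal_eq = np.linalg.solve(X.T @ X, X.T @ y)
```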

2.1. Robust Estimators

2.1.1. M Estimators

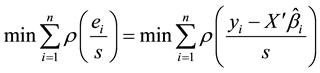

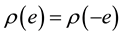

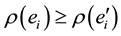

The most common general method of robust regression is M-estimation, introduced by Huber [7] . It is nearly as efficient as OLS. Rather than minimizing the sum of squared errors as the objective, the M-estimate minimizes a function ρ of the errors. The M-estimate objective function is

(3)

(3)

where s is an estimate of scale often formed from linear combination of the residuals. The function ρ gives the contribution of each residual to the objective function. A reasonable ρ should have the following properties: ,

,  ,

,  , and

, and  for

for

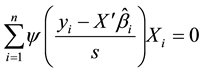

The system of normal equations to solve this minimization problem is found by taking partial derivatives with respect to β and setting them equal to 0, yielding,

$$\sum_{i=1}^{n}\psi\left(\frac{y_i - x_i'\beta}{s}\right)x_i = 0 \tag{4}$$

where ψ is the derivative of ρ. The choice of the ψ function is based on the preference of how much weight to assign to outliers. Newton-Raphson and Iteratively Reweighted Least Squares (IRLS) are the two methods used to solve the nonlinear normal equations of the M-estimate. IRLS expresses the normal equations as:

$$X'WX\beta = X'WY \tag{5}$$

where W is an n × n diagonal matrix of weights $w_i = \psi(u_i)/u_i$, with $u_i = (y_i - x_i'\beta)/s$, that is recomputed at each iteration.
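The IRLS idea can be sketched in a few lines. The version below is a minimal illustration, not the implementation used in this paper: it assumes Huber weights with the conventional tuning constant k = 1.345, re-estimates the scale at each step from the median absolute deviation of the residuals, and starts from the OLS fit. The function names and the simulated data are hypothetical.

```python
import numpy as np

def huber_weights(u, k=1.345):
    """Huber weights psi(u)/u: 1 for |u| <= k and k/|u| otherwise."""
    a = np.abs(u)
    return np.where(a <= k, 1.0, k / np.maximum(a, 1e-12))

def m_estimate_irls(X, y, k=1.345, tol=1e-8, max_iter=100):
    """Huber M-estimate by iteratively reweighted least squares."""
    beta = np.linalg.lstsq(X, y, rcond=None)[0]            # OLS starting value
    for _ in range(max_iter):
        r = y - X @ beta
        s = np.median(np.abs(r - np.median(r))) / 0.6745   # MAD scale (assumption)
        s = max(s, 1e-12)
        w = huber_weights(r / s, k)
        XtW = X.T * w                                      # X'W for diagonal W
        beta_new = np.linalg.solve(XtW @ X, XtW @ y)       # solve X'WX b = X'WY
        if np.max(np.abs(beta_new - beta)) < tol:
            return beta_new
        beta = beta_new
    return beta

# Simulated line with one gross outlier in the Y-direction (illustrative only).
rng = np.random.default_rng(0)
n = 30
x = np.linspace(0.0, 10.0, n)
X = np.column_stack([np.ones(n), x])
y = 1.0 + 2.0 * x + rng.normal(scale=0.2, size=n)
y[15] += 50.0

beta_ols = np.linalg.lstsq(X, y, rcond=None)[0]
beta_m = m_estimate_irls(X, y)
```

In this example the outlier shifts the OLS intercept noticeably, while the M-estimate stays close to the generating values because the outlying case receives a very small weight.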

2.1.2. S Estimator

The S estimator [8] is derived from a scale statistic in an implicit way, corresponding to $s(\beta)$, where $s(\beta)$ is a certain type of robust M-estimate of the scale of the residuals $e_1(\beta), \ldots, e_n(\beta)$. S estimators are defined by minimization of the dispersion of the residuals: minimize $S(e_1(\beta), \ldots, e_n(\beta))$, with final scale estimate $\hat\sigma = S(e_1(\hat\beta), \ldots, e_n(\hat\beta))$. The dispersion $S(e_1(\beta), \ldots, e_n(\beta))$ is defined as the solution s of

$$\frac{1}{n}\sum_{i=1}^{n}\rho\left(\frac{e_i}{s}\right) = K \tag{6}$$

where K is a constant and $\rho(e_i/s)$ is the residual function. Tukey’s biweight function [8] was suggested and is defined as:

$$\rho(x) = \begin{cases} \dfrac{x^2}{2} - \dfrac{x^4}{2c^2} + \dfrac{x^6}{6c^4}, & |x| \le c \\[2ex] \dfrac{c^2}{6}, & |x| > c \end{cases} \tag{7}$$

Setting c = 1.5476 and K = 0.1995 gives 50% breakdown point [9] .
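To make the scale equation concrete, the sketch below evaluates Tukey’s biweight ρ with c = 1.5476 and solves $\frac{1}{n}\sum\rho(e_i/s) = K$ for s with K = 0.1995. The fixed-point update and the starting value are assumptions chosen for illustration (a common way of solving M-scale equations), and the residuals are simulated. Note that K is half of the maximum value of ρ, $c^2/6 \approx 0.399$, which is what yields the 50% breakdown point.

```python
import numpy as np

C = 1.5476   # tuning constant quoted in the text
K = 0.1995   # right-hand side constant quoted in the text

def rho_biweight(x, c=C):
    """Tukey's biweight rho: polynomial for |x| <= c, constant c^2/6 beyond."""
    x = np.asarray(x, dtype=float)
    inside = x**2 / 2 - x**4 / (2 * c**2) + x**6 / (6 * c**4)
    return np.where(np.abs(x) <= c, inside, c**2 / 6)

def m_scale(e, c=C, k=K, tol=1e-10, max_iter=200):
    """Solve mean(rho(e_i / s)) = k for s by a fixed-point iteration."""
    e = np.asarray(e, dtype=float)
    s = np.median(np.abs(e)) / 0.6745          # starting value (assumption)
    for _ in range(max_iter):
        s_new = s * np.sqrt(np.mean(rho_biweight(e / s, c)) / k)
        if abs(s_new - s) <= tol * s:
            return s_new
        s = s_new
    return s

# Simulated standard normal "residuals" (illustrative only).
rng = np.random.default_rng(0)
e = rng.normal(size=2000)
s_hat = m_scale(e)
```

For standard normal residuals the resulting scale should be close to 1, since these constants are chosen to make the scale estimate consistent at the normal distribution.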

2.1.3. MM Estimator

MM-estimation is a special type of M-estimation [10]. MM-estimators combine the high asymptotic relative efficiency of M-estimators with the high breakdown point of the class of estimators called S-estimators. It was among the first robust estimators to have these two properties simultaneously. The "MM" refers to the fact that multiple M-estimation procedures are carried out in the computation of the estimator. The MM-estimator is described in three stages as follows:

Stage 1. A high breakdown estimator is used to find an initial estimate, which we denote $\tilde\beta$. The estimator need not be efficient. Using this estimate, the residuals $r_i(\tilde\beta) = y_i - x_i'\tilde\beta$ are computed.

Stage 2. Using these residuals from the robust fit and $\frac{1}{n}\sum_{i=1}^{n}\rho\left(\frac{r_i}{s}\right) = K$, where K is a constant and ρ is the objective function, an M-estimate of scale with 50% breakdown point (BDP) is computed. This $s(r_1(\tilde\beta), \ldots, r_n(\tilde\beta))$ is denoted $s_n$. The objective function used in this stage is labeled $\rho_0$.

Stage 3. The MM-estimator is now defined as an M-estimator of β using a redescending score function, $\psi_1(u) = \partial\rho_1(u)/\partial u$, and the scale estimate $s_n$ obtained from Stage 2. So an MM-estimator $\hat\beta$ is defined as a solution to

$$\sum_{i=1}^{n} x_{ij}\,\psi_1\left(\frac{y_i - x_i'\beta}{s_n}\right) = 0, \quad j = 1, \ldots, p \tag{8}$$

2.1.4. LTS Estimator

Extending from the trimmed mean, LTS regression minimizes the sum of trimmed squared residuals [11]. The estimator is given by

$$\hat\beta_{LTS} = \arg\min_{\beta}\sum_{i=1}^{h} e_{(i)}^2 \tag{9}$$

where $e_{(1)}^2 \le e_{(2)}^2 \le \cdots \le e_{(n)}^2$ are the ordered squared residuals and h is defined in the range $\frac{n}{2} + 1 \le h \le \frac{3n + p + 1}{4}$, with n and p being the sample size and the number of parameters respectively.

The largest squared residuals are excluded from the summation in this method, which allows those outlying data points to be excluded completely. Depending on the value of h and the outlier data configuration, LTS can be very efficient. In fact, if exactly the outlying data points are trimmed, this method is computationally equivalent to OLS applied to the remaining observations.
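The trimmed objective can be illustrated with a short sketch. Computing the exact LTS estimate is combinatorial, so the code below uses a simple approximate search that is not described in this paper: random elemental starts followed by concentration steps (refitting OLS on the h cases with the smallest squared residuals), in the spirit of FAST-LTS. The function names, the number of starts, the value h = 30 and the simulated data are all assumptions for illustration.

```python
import numpy as np

def lts_objective(beta, X, y, h):
    """Sum of the h smallest squared residuals for a given coefficient vector."""
    r2 = (y - X @ beta) ** 2
    return np.sort(r2)[:h].sum()

def lts_fit(X, y, h, n_starts=200, n_csteps=20, seed=0):
    """Approximate LTS fit: random p-point starts plus concentration steps."""
    rng = np.random.default_rng(seed)
    n, p = X.shape
    best_beta, best_obj = None, np.inf
    for _ in range(n_starts):
        idx = rng.choice(n, size=p, replace=False)
        beta = np.linalg.lstsq(X[idx], y[idx], rcond=None)[0]
        for _ in range(n_csteps):
            keep = np.argsort((y - X @ beta) ** 2)[:h]     # h best-fitting cases
            beta = np.linalg.lstsq(X[keep], y[keep], rcond=None)[0]
        obj = lts_objective(beta, X, y, h)
        if obj < best_obj:
            best_beta, best_obj = beta, obj
    return best_beta, best_obj

# Simulated line with eight bad leverage points (illustrative only).
rng = np.random.default_rng(0)
n = 40
x = np.linspace(0.0, 10.0, n)
X = np.column_stack([np.ones(n), x])
y = 1.0 + 2.0 * x + rng.normal(scale=0.2, size=n)
y[-8:] = 0.0                      # outlying responses at the largest x values
h = 30                            # illustrative coverage, below the 32 clean cases

beta_lts, obj_lts = lts_fit(X, y, h)
beta_ols = np.linalg.lstsq(X, y, rcond=None)[0]
```

Because the eight contaminated cases are trimmed, the LTS slope stays near the generating value, whereas the OLS slope is pulled down by the leverage points.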

2.2. Influential Measures in Least Squares

2.2.1. Cook’s Distance Measures

Cook’s distance measure [12], denoted by $D_i$, considers the influence of the ith case on all n fitted values. It is an aggregate influence measure, showing the effect of the ith case on all fitted values:

$$D_i = \frac{(\hat\beta - \hat\beta_{(i)})'X'X(\hat\beta - \hat\beta_{(i)})}{p\,\hat\sigma^2} \tag{10}$$

where $\hat\beta$ and $\hat\beta_{(i)}$ are, respectively, the estimate based on all n data points and the estimate obtained after the ith observation is deleted, and $\hat\sigma^2$ is the residual mean square of the full fit. Cook’s distance measure has been observed to relate to the F(p, n − p) distribution, and hence its percentile value can be ascertained. If the percentile value is less than about 10 or 20 percent, the ith case has little apparent influence on the fitted values. If, on the other hand, the percentile value is near 50 percent or more, the fitted values obtained with and without the ith case should be considered to differ substantially, implying that the ith case has a major influence on the fit of the regression function. An equivalent algebraic expression of Cook’s D measure is given by:

$$D_i = \frac{r_i^2}{p}\cdot\frac{h_{ii}}{1 - h_{ii}} \tag{11}$$

where $h_{ii}$ is the ith diagonal element of the hat matrix and $r_i$ is the ith internally studentized residual. It was suggested that observations for which ![]() warrant attention [12].
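The two expressions for Cook’s distance can be checked against each other numerically. The sketch below, written under the standard OLS definitions, computes the measure once from the leverages and internally studentized residuals and once by explicitly deleting each case and refitting. The function names and the simulated data, which include one planted high-leverage outlier, are assumptions for illustration.

```python
import numpy as np

def cooks_distance(X, y):
    """Cook's D from leverages and internally studentized residuals."""
    n, p = X.shape
    XtX_inv = np.linalg.inv(X.T @ X)
    h = np.einsum("ij,jk,ik->i", X, XtX_inv, X)   # diagonal of the hat matrix
    e = y - X @ (XtX_inv @ (X.T @ y))             # OLS residuals
    mse = e @ e / (n - p)
    r = e / np.sqrt(mse * (1 - h))                # internally studentized residuals
    return r**2 / p * h / (1 - h)

def cooks_distance_by_deletion(X, y):
    """Cook's D from the change in coefficients when each case is deleted."""
    n, p = X.shape
    beta = np.linalg.lstsq(X, y, rcond=None)[0]
    e = y - X @ beta
    mse = e @ e / (n - p)
    d = np.empty(n)
    for i in range(n):
        keep = np.arange(n) != i
        beta_i = np.linalg.lstsq(X[keep], y[keep], rcond=None)[0]
        diff = beta - beta_i
        d[i] = diff @ (X.T @ X) @ diff / (p * mse)
    return d

# Simulated data with case 0 made a high-leverage outlier (illustrative only).
rng = np.random.default_rng(1)
n = 25
x = rng.normal(size=n)
x[0] = 4.0
X = np.column_stack([np.ones(n), x])
y = 1.0 + 2.0 * x + rng.normal(scale=0.3, size=n)
y[0] += 5.0

d_closed = cooks_distance(X, y)
d_deleted = cooks_distance_by_deletion(X, y)
```

The closed form needs only one fit, which is why it is preferred in practice; the deletion version is included only to confirm the equivalence.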

2.2.2. DFFITS

DFFITS is a diagnostic measure that reveals how influential a point is in a regression. It is defined as the change in the predicted value for a point when that point is left out of the regression, divided by the estimated standard deviation of the fit at that point.

A useful measure of the influence that case i has on the fitted value $\hat Y_i$ is given by:

$$DFFITS_i = \frac{\hat Y_i - \hat Y_{i(i)}}{\sqrt{MSE_{(i)}\,h_{ii}}} \tag{12}$$

where $\hat Y_i$ is the predicted value for the ith case when all cases are used, $\hat Y_{i(i)}$ is the predicted value for the ith case obtained when the ith case is omitted in fitting the regression function, and $MSE_{(i)}h_{ii}$ is the estimated mean square error of $\hat Y_i$, with $MSE_{(i)}$ computed from the fit that omits the ith case. Thus, DFFITS is the standardized change in the fitted value of a case when it is deleted. It can also be expressed as:

$$DFFITS_i = t_i\sqrt{\frac{h_{ii}}{1 - h_{ii}}}, \qquad t_i = \frac{e_i}{\hat\sigma_{(i)}\sqrt{1 - h_{ii}}} \tag{13}$$

where $\hat\sigma_{(i)}$ is the estimate of σ obtained with the ith case deleted, $h_{ii}$ is the ith diagonal element of the hat matrix and $t_i$ is the studentized residual (also called the externally studentized residual).

It was suggested that observations for which $|DFFITS_i| > 2\sqrt{p/n}$ warrant attention for large data sets, and observations for which the absolute value of DFFITS exceeds 1 warrant attention for small to medium data sets [13].
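The definition of DFFITS and its single-fit expression can be compared numerically. The sketch below assumes the standard OLS forms: the deletion version refits the model without each case, and the closed form uses the externally studentized residual and the leverage. Function names and the simulated data, with one planted influential case, are hypothetical.

```python
import numpy as np

def dffits(X, y):
    """DFFITS from one fit, via externally studentized residuals and leverages."""
    n, p = X.shape
    XtX_inv = np.linalg.inv(X.T @ X)
    h = np.einsum("ij,jk,ik->i", X, XtX_inv, X)         # leverages h_ii
    e = y - X @ (XtX_inv @ (X.T @ y))                   # OLS residuals
    mse_del = (e @ e - e**2 / (1 - h)) / (n - p - 1)    # MSE with case i deleted
    t = e / np.sqrt(mse_del * (1 - h))                  # externally studentized
    return t * np.sqrt(h / (1 - h))

def dffits_by_deletion(X, y):
    """DFFITS from its definition: refit without case i and compare fits."""
    n, p = X.shape
    XtX_inv = np.linalg.inv(X.T @ X)
    h = np.einsum("ij,jk,ik->i", X, XtX_inv, X)
    fitted = X @ (XtX_inv @ (X.T @ y))
    out = np.empty(n)
    for i in range(n):
        keep = np.arange(n) != i
        beta_i = np.linalg.lstsq(X[keep], y[keep], rcond=None)[0]
        res_i = y[keep] - X[keep] @ beta_i
        mse_i = res_i @ res_i / (n - 1 - p)
        out[i] = (fitted[i] - X[i] @ beta_i) / np.sqrt(mse_i * h[i])
    return out

# Simulated data with case 0 made a high-leverage outlier (illustrative only).
rng = np.random.default_rng(1)
n = 25
x = rng.normal(size=n)
x[0] = 4.0
X = np.column_stack([np.ones(n), x])
y = 1.0 + 2.0 * x + rng.normal(scale=0.3, size=n)
y[0] += 5.0

dffits_closed = dffits(X, y)
dffits_deleted = dffits_by_deletion(X, y)
flagged = np.flatnonzero(np.abs(dffits_closed) > 1.0)   # small-sample guideline
```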

2.2.3. DFBETAS

DFBETAS is a measure of the influence of the ith case on each regression coefficient $\hat\beta_k$. It is obtained by computing the difference between the estimated regression coefficient $\hat\beta_k$ based on all n cases and the regression coefficient obtained when the ith case is omitted, denoted by $\hat\beta_{k(i)}$. Dividing this difference by an estimate of the standard deviation of $\hat\beta_k$ gives the measure DFBETAS:

$$DFBETAS_{k(i)} = \frac{\hat\beta_k - \hat\beta_{k(i)}}{\sqrt{MSE_{(i)}\,c_{kk}}} \tag{14}$$

where $c_{kk}$ is the kth diagonal element of $(X'X)^{-1}$.

The error term variance, $\sigma^2$, is estimated by $MSE_{(i)}$, which is the mean square error obtained when the ith case is deleted in fitting the regression model. A large absolute value of $DFBETAS_{k(i)}$ is indicative of a large impact of the ith case on the kth regression coefficient. The guideline for identifying influential cases is an absolute value of DFBETAS exceeding 1 for small to medium data sets and $2/\sqrt{n}$ for large data sets [13].
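A direct way to see what DFBETAS measures is to compute it by deleting each case in turn. The sketch below does this for OLS under the standard definition, scaling each coefficient change by the deleted-case mean square error and the corresponding diagonal element of $(X'X)^{-1}$. The function name and the simulated data are assumptions for illustration.

```python
import numpy as np

def dfbetas(X, y):
    """DFBETAS matrix: row i holds the scaled coefficient changes for case i."""
    n, p = X.shape
    XtX_inv = np.linalg.inv(X.T @ X)
    c = np.diag(XtX_inv)                          # c_kk values
    beta = XtX_inv @ (X.T @ y)                    # fit on all n cases
    out = np.empty((n, p))
    for i in range(n):
        keep = np.arange(n) != i
        Xi, yi = X[keep], y[keep]
        beta_i = np.linalg.lstsq(Xi, yi, rcond=None)[0]
        res_i = yi - Xi @ beta_i
        mse_i = res_i @ res_i / (n - 1 - p)       # MSE with case i deleted
        out[i] = (beta - beta_i) / np.sqrt(mse_i * c)
    return out

# Simulated data with case 0 made a high-leverage outlier (illustrative only).
rng = np.random.default_rng(1)
n = 25
x = rng.normal(size=n)
x[0] = 4.0
X = np.column_stack([np.ones(n), x])
y = 1.0 + 2.0 * x + rng.normal(scale=0.3, size=n)
y[0] += 5.0

db = dfbetas(X, y)
# Cases exceeding the small-sample guideline for at least one coefficient.
influential = np.flatnonzero((np.abs(db) > 1.0).any(axis=1))
```

Each column of `db` corresponds to one coefficient, so a case can be influential for the slope without being influential for the intercept, and vice versa.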

2.3. Influential Measures in Robust Regression

Robust versions of Cook’s distance and the DFFITS measure based on the Huber M estimator were introduced to measure influential points. The least squares estimator $\hat\beta$ in (2) was replaced with $\hat\beta_R$, the M estimator of β, and the least squares estimate $\hat\sigma$ was replaced with the robust scale estimate $\hat\sigma_R$ of σ. The robust version of Cook’s distance is defined as:

$$D_i^{R} = \frac{(\hat\beta_R - \hat\beta_{R(i)})'X'X(\hat\beta_R - \hat\beta_{R(i)})}{p\,\hat\sigma_R^2} \tag{15}$$

where $\hat\beta_R$ is the robust estimate of β, $\hat\beta_{R(i)}$ is the same estimate computed with the ith case deleted, and $\hat\sigma_R$ is the robust scale estimate of σ.

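The robust DFFITS is defined as:

$$DFFITS_i^{R} = \frac{x_i'\hat\beta_R - x_i'\hat\beta_{R(i)}}{\hat\sigma_{R(i)}\sqrt{h_{ii}}} \tag{16}$$

where $h_{ii}$ is the ith diagonal element of the hat matrix, $\hat\beta_R$ and $\hat\beta_{R(i)}$ are the robust estimates of β with and without the ith case, and $\hat\sigma_{R(i)}$ is the robust scale estimate obtained with the ith case deleted.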

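A robust version of the DFBETAS measure is proposed. It can be expressed as follows:

$$DFBETAS_{k(i)}^{R} = \frac{\hat\beta_{R,k} - \hat\beta_{R,k(i)}}{\hat\sigma_{R(i)}\sqrt{c_{kk}}} \tag{17}$$

where $\hat\beta_{R,k}$ and $\hat\beta_{R,k(i)}$ are the robust estimates of the kth coefficient with and without the ith case, $\hat\sigma_{R(i)}$ is the robust scale estimate obtained with the ith case deleted, and $c_{kk}$ is the kth diagonal element of $(X'X)^{-1}$.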

Consequently, in this paper, we consider the robust versions of Cook’s D, DFFITS and DFBETAS and apply them not only to the robust M estimator but also to the MM, LTS and S estimators.

3. Application to Real Life Data Sets

Real life data sets are used to illustrate the performance of the influential statistics. The results are as follows.

3.1. Application to Longley Data

Table 1 and Table 2 provide the summary of results of the application of robust diagnostics measures to the Longley data.

From Table 1, robust diagnostics based on OLS revealed that case 10 is an outlier. Robust diagnostics based on the M estimator revealed that cases 10, 14, 15 and 16 are outliers. Robust diagnostics based on the MM and S estimators revealed that cases 14, 15 and 16 are outliers, while the robust diagnostic measure based on the LTS estimator revealed that cases 5, 14, 15 and 16 are outliers. The influential points among these outliers were then identified in Table 2. The robust diagnostic measures identified more influential points than outliers; this might be because the Longley data suffer from both multicollinearity and outlier problems.

From Table 2, Cook’s D based on OLS revealed that cases 5, 16, 4, 10, and 15 (in this order) were the most influential cases. The robust version of the Cook’s D statistic based on the M estimator identified cases 5, 10, 1, 16, 4, 15 and 6 as influential. The points identified by Cook’s D based on the MM, S and LTS estimators are not different from the points identified by OLS, although the root mean square errors differ slightly.

Table 1. Summary of outlier results using Longley data.

Table 2. Summary of influential points using Longley data.

WIP: regression estimates after removing influential points. IP: influential points.

The influential points identified by DFFITS based on OLS are not different from the cases identified using Cook’s D. Also, the cases identified by DFFITS based on the S and LTS estimators are not different from the points identified by their respective Cook’s D. The only exception is that DFFITS based on MM identified more cases (5, 10, 16, 15, 1, 6, and 2) than its Cook’s D. The robust version of the DFFITS statistic based on the M estimator identified cases 5, 10, 16, 15, 1, 6 and 2 as influential.

Of all the influential points identified by Cook’s D and DFFITS, the observations enclosed in parentheses are reported to influence the regression coefficients: DFBETAS based on OLS (5, 10), DFBETAS based on M (5, 16, 15, 6), DFBETAS based on MM (5), DFBETAS based on S (5), and DFBETAS based on LTS (10, 16). The cases identified by the MM and S estimators are the same.

Having removed the influential cases, it can be observed that the M estimator is the most efficient ($RMSE_{D}$ = 20.00, $RMSE_{DFFITS}$ = 6.42, $RMSE_{DFBETAS}$ = 145.53). However, the MM, S and LTS estimators could not provide results, probably because of the small sample size (n = 16). Moreover, MM and S are modified M estimators.

3.2. Application to Scottish Hills Data

Table 3 and Table 4 provide the summary of results of the application of robust diagnostics measures to the Scottish Hills data.

From Table 3, robust diagnostics based on OLS revealed that cases 7, 18, 31, 33, 35 are outliers. Robust diagnostics based on the robust estimators (M, MM, LTS and S estimators) revealed that cases 7, 11, 17, 18, 31, 33, 35 are outliers. The influential points from these outliers were then identified in Table 4.

From Table 4, Cook’s D based on OLS revealed that cases 7, 11, 18 (in this order) were the most influential cases. The robust version of the Cook’s D statistics based on the M estimator identified cases 7, 31, 33 and 35 as influential. The points identified by Cook’s D based on MM, S and LTS estimators are the same but with different root mean square error.

DFFITS based on OLS revealed that cases 7 and 18 (in this order) were the most influential cases. Case 11, identified by Cook’s D, was not identified. Also, the cases identified by DFFITS based on the MM, S and LTS estimators are the same as the cases identified by their respective Cook’s D.

Of all the influential points identified by Cook’s D and DFFITS, DFBETAS based on OLS revealed that cases 7 and 18 affect the regression coefficients. The robust DFBETAS measures based on M, MM, S and LTS revealed that none of the influential points identified by DFFITS and Cook’s D affect the regression coefficients. It is concluded that the diagnostic measures computed using OLS are not reliable. For this data set, the diagnostic measures based on the M estimator identified more influential points than those based on the other robust estimators. This might be because its RMSE is smaller than that of the other estimators considered. However, the MM, S and LTS estimators provided similar results.

Having removed the influential cases, it can be observed that the M estimator is the most efficient ($RMSE_{D}$ = 274.31, $RMSE_{DFFITS}$ = 274.31, $RMSE_{DFBETAS}$ = 286.87).

3.3. Application to Hussein Data

Table 5 and Table 6 provide the summary of results of the application of robust diagnostics measures to Hussein data.

Table 3. Summary of outlier results using Scottish Hills data.

Table 4. Summary of influential points using Scottish Hills data.

Table 5. Summary of outlier results using Hussein data.

Table 6. Summary of influential points using Hussein data.

From Table 5, robust diagnostics based on OLS revealed that cases 15, 16, 20, 21, 30, 31 are outliers. Robust diagnostics based on M and S estimators revealed that cases 12, 14, 15, 16, 17, 18, 19, 20, 21, 30, 31 are outliers. Robust diagnostics based on MM and LTS estimators revealed that cases 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 30, 31 are outliers. The influential points from these outliers were then identified in Table 6.

From Table 6, Cook’s D based on OLS revealed that cases 15, 16, 31, 30, and 20 (in this order) were the most influential cases. The robust version of the Cook’s D statistics based on the M estimator identified cases 30, 31, 16, 21 and 14 as influential. The robust version of the Cook’s D statistics based on the MM estimator identified cases 15, 30, 31, 14, 12, 13, 11, 9, 1, 3, 10 and 18 as influential. The robust version of the Cook’s D statistics based on the S estimator identified cases 30, 31, 16 and 21. The robust version of the Cook’s D statistics based on the LTS estimator identified cases 15, 30, 31, 12, 14, 13, 20 and 11 as influential.

The cases identified by DFFITS based on OLS are not different from the ones identified using Cook’s D. The cases identified by DFFITS based on the M and S estimators are 30, 31 and 36. The robust version of the DFFITS statistic based on the MM estimator identified cases 31, 15, 30, 14, 12, 13, 11 and 9 as influential. The robust version of the DFFITS statistic based on the LTS estimator identified cases 15, 30, 31, 12, 14 and 13 as influential.

Of all the influential points identified by Cook’s D and DFFITS, DFBETAS based on OLS revealed that cases 15 and 16 affect the regression coefficients. The robust DFBETAS measures based on M, MM, S and LTS revealed that none of the influential points identified by DFFITS and Cook’s D affected the regression coefficients.

It is concluded that the diagnostics measure computed using OLS is not reliable. For this data set, the diagnostic measures based on MM estimator identified more influential points than other robust estimators. This might be because the RMSE is smaller than other considered estimators.

4. Conclusions

In this paper, it was established that a point identified as an outlier is not necessarily influential. In the application to the Longley data, more influential points than outliers were identified; this might be because the Longley data suffer from both multicollinearity and outlier problems. Robust versions of Cook’s distance, the Welsch-Kuh distance (DFFITS) and DFBETAS are proposed to measure influential points. Diagnostic measures based on OLS do not give reliable results compared with those based on the other estimators; they suffer more from swamping and masking effects. The performance of the robust versions of the influence statistics is largely dependent on the root mean square error. The performances of the Cook’s D and DFFITS measures are not too different except in a few cases. Inflated standard error is reported in this study as one of the consequences of outliers. It is observed that the root mean square error decreases as the influential points are identified and removed. The DFBETAS measure shows that not all cases reported to be influential exert undue influence on the regression coefficients. The diagnostic measures based on the robust estimators perform better than those based on the OLS estimator, whether or not the influential points are removed. Also, the proposed robust diagnostic measure based on MM performs better than that based on the M estimator in the application to the Hussein data.

Finally, the proposed robust diagnostic measures based on the MM and M estimators are generally the more efficient for the data sets considered.