1. Introduction

There is a basic technique (expounded in detail by M. S. Bartlett—see [1] [2] and [3] —nicely summarized in [4]) for constructing confidence regions (intervals) for parameters of a specific distribution (assuming a regular case, meaning the distribution’s support is not a function of any of the distribution’s parameters) which rests on the fact that

(1)

has approximately the chi-square distribution with K degrees of freedom (K is the number of parameters to be estimated), where

(2)

is the set of n observations, individually denoted

(allowing for a possibility of a multivariate distribution),

denotes the corresponding probability density function,

is the vector of the resulting maximum-likelihood (ML) estimators of the parameters, and

represents their true (even though unknown) values.

The proof rests on expanding the LHS of following K-component equation

(3)

with respect to

at

to a linear (in

) accuracy, making the answer equal to

and solving for

, thereby getting

(4)

where

(5)

and

(6)

Note that

is a K-component vector, while

represents a symmetric, positive-definite K by K matrix (the small circle stands for a direct product of two vectors).

Similarly expanding (1) and utilizing (4) we get

(7)

where

has (by Central Limit Theorem), approximately, a K-variate Normal distribution with the mean of

and the variance-covariance matrix of

; this implies that (7) has, to the same level of approximation, the

distribution (since the components of

are then asymptotically independent, each having the mean of 0 and the variance of 1).

From all this, it then follows that an approximate confidence region is found by first finding the maximum likelihood estimators

, then making (1) equal to a critical

value and solving (usually, only graphically) for

.

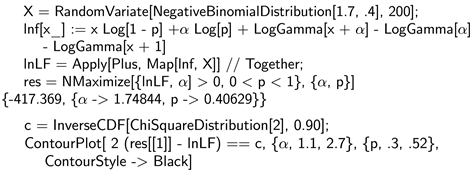

We demonstrate this by generating a random independent sample of 200 observations from a Negative Binomial distribution (with parameters

and p) and constructing the corresponding 90% confidence region for the two parameters; a simple, self-explanatory Mathematica code to do exactly that looks like this:

The resulting confidence region is displaced in Figure 1.

Similarly, to test a null hypothesis which claims specific values for the K parameters, we evaluate (1) with

being the hypothesized (rather than the true) values, and check the result against the critical value of

; something this article will not elaborate on any further.

2. Partial Confidence Regions

The aim of this article is to show how to construct a confidence region (called partial) for only some parameters of distribution, even though all of its parameters are unknown and need to be estimated by the maximum-likelihood technique.

We should mention that there is some existing literature on using partial likelihood functions (LF), for example [5], but its goals and results bear little resemblance to ours. Similarly, articles on marginal LF (for example [6]) and conditional LF deal with only rather specialized issues while our approach is fully general; the only shared feature is the occasional use of identical terminology.

![]()

Figure 1. 90% confidence region for α and p.

Extending the technique delineated in our Introduction, we now need to find an approximate distribution of

(8)

where only some components of

are equal to the true values of the corresponding (we call them pivotal) parameters, while the rest (the nuisance parameters; a term introduced by [7] and further explored by [8]) are set to their

values. Knowing this distribution will then enable us to construct confidence regions (or test hypotheses) for the pivotal parameters only while ignoring the estimates of the nuisance parameters.

Since we already have a good approximation to (1), namely (7), or equivalently

(9)

we now need a similar approximation for

(10)

and then, for the corresponding difference. To approximate (10), we go back to the LHS of (7) and replace

by

(i.e. keeping the nuisance components of

and setting the pivotal components to 0) while

, computed from (4), remains unchanged. This results in

(11)

where

is the original

matrix with all pivotal-by-pivotal elements set to 0 (a notation to be used with other matrices as well).

This can be shown by rearranging the parameters to start with the pivotal and be followed by the nuisance ones, and visualizing the corresponding 2 by 2 block structure of the symmetric matrix

. In such representation, the previous equation reads

where a full square

indicates keeping the original block of the

matrix, while each

represents a zero sub-matrix of the corresponding dimensions.

Subtracting (11) from (9) then yields the desired approximation to (8), namely

(12)

Introducing

, which we know from our Introduction to be approximately K-variate Normal, having zero means and the variance-covariance matrix of

, we can now find the moment generating function (MGF) of (12) by

(13)

where

is the K by K identity matrix. The last equality follows from the fact that

(14)

and

(15)

make the same contribution to the determinant in (13), where

is now the L by L identity matrix (L being the number of pivotal parameters). In (14), we also use the fact that

(16)

where the

and

subscripts refer to the corresponding pivotal and/or nuisance block of the matrix.

It is easy to see that the result of (13) does not change after replacing

(the variance-covariance matrix of

) by the corresponding correlation matrix

, where

is the main-diagonal matrix of the corresponding variances, and correspondingly replacing

by

(recall that the 0 subscript indicates setting all pivotal-pivotal elements equal to 0), since clearly

(17)

Similarly, we can replace the asymptotic variance-covariance matrix of

, namely

, by its correlation matrix

and

by

without affecting the value of the determinant.

Summary

Based on (14), the MGF of (12) is given by

(18)

where

are the eigenvalues of

(note that

can be replaced by

or

, whichever is more convenient). The resulting PDF is then that of a convolution of the individual Gamma (

) distributions.

It is important to note that, when there are more pivotal than nuisance parameters (i.e. when

), the L by L matrix

in (16) is of rank

only (this is now determined by the number of columns of

), implying that

of its eigenvalues are equal to 0, and correspondingly simplifying the eigenvalues of

(

of which will be equal to 1). Furthermore, the remaining eigenvalues of

are the same as those of

; this is based on a general result stating that, when

is an n by m matrix while

is m by n,

and

will share all their non-zero eigenvalues, while the extra eigenvalues, if any, will be equal to 0.

This implies that, when

, we can replace the eigenvalues of

by the eigenvalues of

(a smaller matrix), knowing that each of the remaining

eigenvalues is equal to 1.

The final note: some steps of the resulting procedure for constructing partial confidence regions may be carried out analytically, while the rest require a numerical approach. This can be observed in our subsequent examples: some avoid explicit formulas entirely, performing each step numerically (always an available option), while our last example is almost completely analytical, to facilitate investigation of the technique’s accuracy.

3. Multivariate Normal Distribution

The most important multi-parameter distribution is the Normal distribution of several (say n) random variables

, collectively denoted

. It is fully specified by the following parameters: n individual means (collectively denoted

), n standard deviations

, and

correlation coefficients

(

) usually collected in a symmetric matrix

(each

will

appear twice, on both sides of the main diagonal, whose elements are all equal to 1). This section is a review of basic formulas relating to ML estimation of these parameters.

The natural logarithm of the corresponding PDF (aka likelihood function) is given by

(19)

where

is a main-diagonal matrix of the n values of

. Note that

, explicitly expanded, yields the following vector

.

To find the corresponding

matrix, we use the following well-known formulas

(20)

(21)

where Tr indicates taking the matrix’ trace. Note that all but two elements of

(22)

are equal to 0 (the remaining two elements are equal to 1), where

stands for a vector of

zeros with only its kth component equal to 1.

Differentiating (19) twice with respect to

and changing the sign yields

(23)

which represents the

by

block of

, while the

by

and

by

blocks are both zero sub-matrices, since

—that goes for the

by

and

by

blocks as well.

To find the

by

block, we first differentiate (19) with respect to

and then with respect to

(assuming

), getting

(24)

Reversing the sign and taking the expected value yields the following expression for the off-diagonal elements of the

by

block

(25)

since

.

When differentiating with respect of

twice, the corresponding second derivative is

(26)

Reversing the sign and taking the expected value then yields

(27)

for the main-diagonal elements of the

by

block (note that

).

This means that

(28)

is the resulting expression for both the diagonal and off-diagonal elements of the

by

block.

To find the

-

element of the

matrix, we first need the corresponding second derivative of (19), namely

(29)

Reversing the sign and taking the expected value results in

(30)

Finally, to get the

-

element of

, the corresponding differentiation of (19) yields

(31)

which, after changing the sign and taking the expected value, results in

(32)

having a non-zero value only when

or

.

4. Case of Asymptotic Independence

This section discusses the situation in which all elements of the pivotal-nuisance and (consequently) nuisance-pivotal blocks of

(and, correspondingly, of

and

) are equal to 0. In that case,

has the following simple form: its nuisance-nuisance block is the identity matrix, the remaining three blocks are all zero sub-matrices (implying that

of (13) has an identity matrix in the pivotal-pivotal block, and zero sub-matrices elsewhere). The resulting MGF of (8) thus equals to

, where L is the number of pivotal parameters, which means that the asymptotic distribution of (8) is

, making a construction of the corresponding partial confidence region fairly routine.

Let us add that such asymptotic independence is not uncommon; for example, when a symmetric (with respect to its mean) distribution has a location and scale parameters only, the corresponding ML estimators are then always asymptotically independent.

To see why, note that, in this case, the PDF of the sampled distribution can be expressed as

(33)

where

and

are the location and scale parameters respectively, and

is a parameter-free PDF, symmetric with respect to 0. The off-diagonal element of

is then proportional to

(34)

due to (6); the integrand is clearly an anti-symmetric (odd) function of y, resulting in a zero integral. This enables us to find a partial confidence interval for either

of for

(while ignoring the other parameter) by using the

distribution for (8).

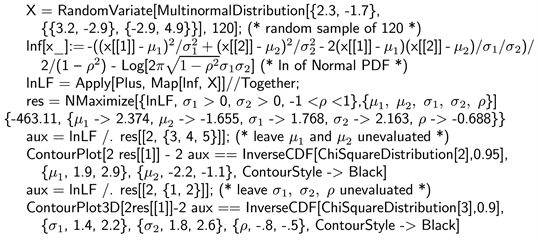

Similarly, in the case of a bivariate Normal distribution, the ML estimators of the two means on one hand, and of the two standard deviations and the correlation coefficient on the other, form two such mutually independent sets as well; this is clear from what we learned in the previous section but, due to the importance of this example, let us be more explicit and quote the well-known asymptotic correlation matrix of

, namely

(35)

where the five parameters (collectively denoted

) are the usual

and

(in that order); the respective asymptotic variances are

and

.

Constructing a confidence region for either set of parameters is then quite simple (knowing that

has the above block-diagonal structure is all we need, the individual elements are irrelevant) as the following Mathematica program demonstrates.

The program produces the output presented in Figure 2 and Figure 3; the first graph is the resulting partial 95% confidence region for

and

, the second one is the 90% confidence region for

and

. This assumes that one is interested only in one or the other (not both)—if a confidence region for all five parameters is desired, one would use the basic procedure of our Introduction.

5. General Case

In general (and with no asymptotic independence to help) things get more complicated. We now go over all possible cases involving up to five parameters.

![]()

Figure 2. Confidence region for μ1 and μ2.

![]()

Figure 3. Confidence region for σ1, σ2 and ρ.

5.1. Two Parameters

In this situation, the only possibility is constructing a confidence interval for one of the two parameters, while ignoring the other. Since the

(or

) matrix will always have the following form

(36)

we get

(37)

which implies that the distribution of (8) is that of a

random variable, further divided by

. This means that, to construct a

confidence interval (CI) for the pivotal parameter, we make (8) equal to the corresponding critical value of

, also divided by

, substitute the ME estimate of the nuisance parameter, and solve for the pivotal one to get the two CI boundaries.

The following Mathematica program demonstrates the construction of a 90% confidence interval for the

parameter of the Gamma (

) distribution.

Note that in this case

, based on

(38)

where

is the second derivative of

, called “PolyGamma[1, α]” by Mathematica; to evaluate it, we had to use the ML estimate of

, instead of its true value. That is how we deal with this problem in general; this does not change the asymptotic distribution of (8).

5.2. Three Parameters

A three-parameter situation requires discussing two possibilities:

When constructing a CI for a single parameter (say

), (8) has again the

distribution; this follows from

having only one element (its only eigenvalue), and from

. Note that this result (when interested in only one parameter) is true for any K.

When finding a confidence region for

and

, the distribution of (8) is a convolution (i.e. an independent sum) of

and another

, the latter multiplied

; this is based on the arguments following (18). The corresponding PDF is

(39)

where

denotes the modified Bessel function of the first kind; note that using this PDF to find a critical value can be done only numerically (there is no analytic expression for the corresponding CDF, let alone for its inverse).

As an example, we assume sampling a distribution with the following PDF

(40)

(each of the three parameters must be positive) and constructing a 95% confidence region for

and

only. Leaving out routine details, the expression for s turns out to be

(41)

where

is the second derivative of

, evaluated at

.

The following Mathematica code demonstrates the algorithm.

![]()

The confidence region for the

and

thus produced is displayed in Figure 4.

![]()

Figure 4. Confidence region for β and γ.

5.3. Four Parameters

A CI for

results in (8) having the

distribution, as discussed previously.

The complementary task of constructing a confidence region for

and

then leads to a convolution of the previous distribution and that of

(to account for the two extra eigenvalues of

, both equal to 1); this convolution has a PDF given by

(42)

Finally, to build a confidence region for

and

requires using the following PDF for (8):

(43)

where

and

are the two eigenvalues of

; using

instead of

is still possible, but has no longer any advantage, since critical values of (42) and (43) can again be found only numerically.

To show how to use the last formula, we assume sampling a mixture of Exponential and Normal distributions (the four parameters are: the mean of the Exponential distribution, denoted b, and its weight in the mixturea, followed by the mean c and standard deviation d of the Normal distribution). Note that in this example we also bypass an analytic solution for elements of the

matrix—these can be easily computed by substituting values of the ML estimates for

in (6), and only then computing, by numerical integration, the corresponding expected value. The following Mathematica program demonstrates the complete algorithm.

![]()

Producing the 90% confidence region displayed in Figure 5 for the mean (horizontal scale) and standard deviation (vertical scale) of the Normal-distribution part of the mixture.5.4. Five ParametersAs in all previous cases, a confidence interval for only one (say

) of the parameters requires using the

distribution, further multiplied by

.

![]()

Figure 5. Confidence region for c and d.

For the complementary task of building a (four-dimensional) confidence region for

and

, the distribution of (8) becomes a convolution of

and

; the corresponding PDF is

(44)

A confidence region for

and

is found using the PDF of (43), found in the case of four parameters.

The PDF used for the three-dimensional confidence region of the true values of

and

is then a convolution of the last PDF and that of an independent

; no explicit formula for the resulting PDF exists, but the following example demonstrates how to bypass this problem.

This time, we assume sampling a tri-variate Normal distribution having identical means (denoted

) and identical standard deviations (denoted

). We now construct a three-dimensional confidence region for

and

(while ignoring

and

), using the following Mathematica code.

![]()

The resulting confidence region is shown in Figure 6.

![]()

Figure 6. Confidence region for μ, σ and ρ12.

6. Technique’s Accuracy

In this section, we go over a rather special example, allowing us to analytically find not only all ML estimators, but also an exact expression for (8). These are then used to build (still analytically) formulas for boundaries of a confidence interval for one of the parameters. Furthermore, the exact distribution of the estimator is also known, which gives us a unique opportunity to compute the error of our technique.

Let us now proceed with the actual example of constructing a confidence interval for the correlation coefficient of a bivariate Normal distribution, and computing its exact level of confidence (while ignoring the remaining four parameters).

Firstly, it is well known what the ML estimators of the two means (

and

), the two standard deviations (

and

), and the correlation coefficient

are; we will denote them

and r respectively. The likelihood function, once we replace all five parameters by their estimators, then reads

(45)

When similarly replacing the first four parameters only (keeping the exact value of

), the likelihood function becomes

(46)

making (8) equal to

(47)

It is easy to find that

, where

is the matrix of (35); this means that the random variable (47) has, approximately, the

distribution further multiplied by

. Making (47) less than the critical value of this distribution (say

) has, approximately, the probability of

of being correct. Since the sampling distribution of r is known, we can also compute the exact probability of the same inequality, thus getting the error of the approximation. This is done by the following Mathematica program (for any chosen set of values for

and

)

where the first two lines (a continuation of a single Mathematica statement) spell out the exact PDF of r. The program then specifies

and

, makes (47) equal to

, and solves for r (note that this is the reverse of what is done when finding boundaries of a confidence interval for

, given a value of r); the last line then evaluates the corresponding exact probability. Note that the PDF of r is notoriously slow in reaching its Normal limit (on which our approximation is based), making the errors of confidence intervals for

atypically large; this example is thus close to presenting the worst-case scenario.

By executing the program using various values of

and

, we get errors (in percent) presented in Table 1 (these are quoted for

and 100 respectively).

Based on these results, we can make the following observations:

• The exact confidence level is always less than the claimed one.

• The error does not change much with the value of

(surprisingly, the error is usually the largest at

and decreases slightly towards both extremes).

• It decreases as the confidence level goes up, but increases relative to

.

• It decreases, to a good approximation, with 1/n.

![]()

Table 1. List of the technique’s errors (in %).

7. Conclusion

We have shown how to construct confidence regions for parameters of interest while ignoring one or more additional (nuisance) parameters. This is done based on ML estimates of all parameters, utilizing the corresponding likelihood function and formulas of this article. The error of the resulting procedure behaves similarly (i.e. decreasing with the first power of the sample size) to the error of ordinary confidence regions based on the

distribution of (1). Our explicit examples have covered situations involving up to five parameters in total, but a general approach for dealing with any number of parameters has been clearly delineated as well. Future research will undoubtedly supply specific details of any such multi-parameter situation, and come up with a way of simplifying, at least numerically, the resulting distributions.

Appendix: Notation and Abbreviations

•

: the Likelihood Function;

is the set of observations,

are the distribution parameters.

• LF: likelihod function.

• ML: maximum likelihood.

• LHS: left hand side.

• MGF: moment generatin function.

• PDF: probability density function.

•

: the chi-square distribution with K degrees of freedom.

•

: a symbol for taking expected value.

•

and

: identity and zero matrix, respectively.

•

: the variance-covariance matrix of multivariate Normal distribution.

•

: the previous matrix with all pivotal by pivotal elements set to 0.

•

: the pivotal by nuisance (or incidental) block of

.

•

: a vector with kth component equal to 1, the rest equal to 0.

•

: a matrix with

and

components equal to 1, the rest equal to 0.