Received 21 May 2016; accepted 17 June 2016; published 21 June 2016

1. Introduction

Genetic Algorithms (GAs) were introduced by Holland [1] and further described by Goldberg [2] as optimization approaches to find a global or near-global optimal solution. GAs are randomized heuristic search algorithm based on the evolutionary principles of natural selection and genetics. GAs represent smart exploitation of the random search which used for solving optimization problems. GAs are designed to simulate processes in natural systems necessary for evolution (i.e. it follows the principles first laid down by Charles Darwin of “survival of the fittest”) [3] - [8] . GA starts with a set of potential solutions (chromosomes). Next, genetic operators (selection, mutation and crossover) are applied one after another to obtain a new generation of chromosomes. This process is repeated until the termination criterion is met. In addition, GA uses only a simple scalar performance measure, so it is easy to use and implement [9] . Data mining techniques comprise three components model, preference criterion and search algorithm. The most common functions in data mining techniques are association rule mining, classification, clustering, regression, sequence, link analysis and dependency modelling. The flexibility of the model for representing the underlying data and the interpretability of the model in human terms is determined by Model representation. This includes decision trees and rules, linear and nonlinear models, probabilistic graphical dependency models, relational attribute models and example-based techniques such as the NN-rule and case-based reasoning [10] . The preference criterion is used for determining which model to use for mining, depending on the underlying dataset, by associating some measure of goodness with the model functions. It tries to generating a model function with a large number of degrees of freedom (i.e. avoid over-fitting of the underlying data). After selecting the model and the preference criterion, specification of the search algorithm is defined. Descriptive and predictive are two mainly kinds of data mining tasks. The descriptive techniques provide a summary of the data, while the predictive techniques learn from the current data to make predictions about the behaviour of new datasets. One of the popular descriptive data mining techniques is Clustering; where it is used for partitioning an input dataset into a number of clusters. Clustering or grouping is an exploratory procedure that searches for “natural” structure within a data set. This process involves sorting the data cases or objects, into groups or clusters, so that objects in the same cluster are more like one another than they are like objects in other clusters. Sorting occurs in the basis of similarities calculated from the data. Clustering is useful for data reduction, developing classification schemes, and suggestion or supporting hypotheses about the structure of the data [11] .

2. Clustering

Clustering techniques have been used in a wide range of disciplines such as: A novel approach of cluster based optimal ranking of clicked URLs using genetic algorithm for effective personalized web search [12] , Optimal clustering of microgrids based on droop controller for improving reliability using a hybrid of harmony search and GAs [13] , Cluster analysis using optimization algorithms with newly designed objective functions [14] , and GA optimization of defect clusters in crystalline materials [15] .

The most popular clustering tool used in scientific and industrial applications is The K-means clustering algorithm [16] - [20] . It proceeds as follows: Firstly, K objects are randomly selected; where each of which initially represents a cluster mean or center. Secondly, for each of the remaining objects, based on the distance between the object and the center, an object is assigned to the cluster to which it is the most similar. Finally, the new center for each cluster is computed and the process iterates until the criterion function converges. Typically, the squared-error criterion is used.

K-means works well when the clusters are compact clouds that are rather well separated from one another by adopting the squared-error criterion. On the other hand, when clusters have widely different sizes, densities, or non-spherical shapes it has difficulty detecting the natural clusters [21] . It will divide the objects in one cluster into two or more clusters to attempt to minimize the square-error criterion. In addition to that, the optimal cluster corresponds to the extremum when applying this criterion to evaluate the clustering results. Moreover, the algorithm will terminate at local optimum if the results of initialization are exactly near the local minimal point. So, this paper intends to present hybrid GA with K-means cluster algorithm that efficiently eliminates this drawbacks of K-means.

The rest of this paper is organized as follows: Previous work in clustering is presented in Section 2. GA is de- scribed in Section 3. Hybrid genetic algorithm with k-means is introduced in Section 4. The experimental results are discussed in Section 5. Finally, we conclude the paper in Section 6.

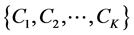

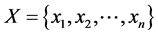

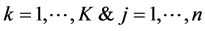

Clustering [22] - [26] is an important unsupervised classification technique where a set of patterns are grouped into clusters in such a way that patterns in the same cluster are similar in some sense and patterns in different clusters are dissimilar in the same sense. Clustering in  (d-dimensional Euclidean) space is the process of partitioning a given set of n points into a number, say K, of clusters

(d-dimensional Euclidean) space is the process of partitioning a given set of n points into a number, say K, of clusters  based on some similarity/dissimilarity metric. The value of K may or may not be known a priori. The main objective of any clustering technique is to produce a

based on some similarity/dissimilarity metric. The value of K may or may not be known a priori. The main objective of any clustering technique is to produce a  partition matrix

partition matrix  of the given dataset X consisting of n patterns,

of the given dataset X consisting of n patterns,

. The partition matrix may be represented as

. The partition matrix may be represented as ,

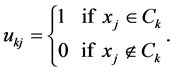

, ; where

; where  is the membership of pattern

is the membership of pattern  to cluster

to cluster . In the case of hard or crisp partitioning,

. In the case of hard or crisp partitioning,

(1)

(1)

In the following, some popular distance measures are described; which are used to characterize the clusters.

2.1. Distance Measure

A distance measure is used to compute the amount of dissimilarity between two data points. The choice of distance function plays an important role in cluster analysis. Suppose the given dataset X contains n points, i.e.,

. If d is the dimension of the data, then

. If d is the dimension of the data, then  denotes the value of the i-th point in the j-th dimension. The distance between two data points

denotes the value of the i-th point in the j-th dimension. The distance between two data points  and

and  is denoted by

is denoted by ![]() and should be satisfying the following properties:

and should be satisfying the following properties:

1) ![]() for all

for all![]() , and

, and ![]() only if i = j.

only if i = j.

2) ![]() for all

for all![]() ; and

; and

3) ![]() for all

for all![]() .

.

Two widely used distance measures, Euclidean distance and correlation-based distance [11] , are defined as follows.

2.1.1. Euclidean Distance Measure

The Euclidean distance ![]() between two feature vectors

between two feature vectors ![]() and

and ![]() is computed as

is computed as

![]() (2)

(2)

2.1.2. Pearson Correlation-Based Distance Measure

The Pearson correlation coefficient ![]() between two feature vectors

between two feature vectors ![]() and

and ![]() is computed as:

is computed as:

![]() (3)

(3)

where ![]() and

and ![]() represent the arithmetic means of the components of the feature vectors

represent the arithmetic means of the components of the feature vectors ![]() and

and![]() , respectively. The Pearson correlation coefficient is a measure of similarity between two points in the feature space. The distance between two points

, respectively. The Pearson correlation coefficient is a measure of similarity between two points in the feature space. The distance between two points ![]() and

and ![]() is computed as

is computed as![]() , which represents the dissimilarity between those two points.

, which represents the dissimilarity between those two points.

Clustering methods can be broadly classified into hierarchical, partitional and density-based. In the following, some basic clustering techniques from each category are described.

2.2. Hierarchical Clustering

In hierarchical clustering, the clusters are generated in a hierarchy, where every level of the hierarchy provides a particular clustering of the data, ranging from a single cluster to n clusters. For generating hierarchical clustering, there are two basic approaches either agglomerative or divisive.

Agglomerative: start with singleton clusters, and merge the two least distant clusters at each step, and hence the number of clusters reduces by 1. This proceeds iteratively in a hierarchy, providing a possible partitioning of the data at every level. The algorithm is terminated when the target number of clusters K is achieved. Single, average and complete linkage agglomerative algorithms differ only in the linkage metric used. The distance

![]() between two clusters

between two clusters ![]() and

and ![]() for the three linkage algorithms are defined as follows:

for the three linkage algorithms are defined as follows:

For single linkage,

![]() (4)

(4)

For average linkage,

![]() (5)

(5)

For complete linkage,

![]() (6)

(6)

where ![]() denotes the distance between the data points x and y, and

denotes the distance between the data points x and y, and ![]() denotes the number points in cluster

denotes the number points in cluster![]() .

.

Divisive clustering just follows the reverse process, i.e., it starts from a single cluster containing all the points. At each step, the biggest cluster is divided into two clusters until the target number of clusters is achieved.

One of the main advantages of hierarchical clustering is that it offers flexibility regarding the levels of granularity and it can adopt any kind of distance metric easily. While the drawbacks of this clustering technique are its inability to improve already constructed clusters and the problem of choosing suitable stopping criteria.

2.3. Partitional Clustering

The partitional clustering algorithms obtain a single clustering solution for a dataset instead of a hierarchical clu- stering structure. Partitional methods are more computationally efficient compared to hierarchical techniques. K-means [26] and its fuzzy version [27] are the two partitional clustering algorithms that are widely used. K-means clustering is described in following subsection.

K-Means Clustering

The K-means clustering algorithm consists of the following steps:

1) K initial cluster centers ![]() are chosen randomly from the n points

are chosen randomly from the n points ![]()

2) Assign point ![]() to cluster

to cluster ![]() iff

iff

![]() (7)

(7)

3) New cluster centers ![]() are computed as follows:

are computed as follows:

![]() (8)

(8)

where ni is the number of elements belonging to cluster Ci.

4) If ![]() then terminate. Otherwise continue from Step 2.

then terminate. Otherwise continue from Step 2.

K-means algorithm may converge to values that are not optimal [28] . Also, for large problems global solutions cannot be found with a reasonable amount of computation effort [29] .

2.4. Density-Based Clustering

In density-based clustering methods, clusters are considered as zones in the data set space, where the points are densely situated, and which are separated by regions of low point density (noise). These zones may have arbitrary shapes and the interior points may be arbitrarily distributed.

Density-based spatial clustering of applications with noise D-BSCAN [30] is a popularly used density-based clustering method, where a cluster is defined using the notion of density-reachability. A point y is said to be directly density-reachable from another point x if it is within a given distance ε (i.e., y is a part of x’s ε-neighbor- hood), and if x is surrounded by sufficiently many points in its ε-neighborhood such that x and y may be considered as a part of a cluster. Again, y is called indirectly density-reachable from x if there is a sequence of points ![]() with

with ![]() and

and ![]() in which each

in which each ![]() is directly density-reachable from

is directly density-reachable from![]() . Two input parameters are required as the input of D-BSCAN: ε (neighborhood radius of a point) and the minimum number of points (Min-pts) required to form a cluster. First, it starts with an arbitrary starting data point that has not been visited yet. This point’s ε-neighborhood radius is found, and if it contains at least Min-pts points, a cluster formation is started. Otherwise, the point is considered as noise. It should be noted that this point may later be found within the ε-neighborhood radius of another point that contains at least Min-pts points. Hence the point can be assigned to some cluster at a later stage. D-BSCAN clusters a dataset by a single scan over all the data points. D-BSCAN can be implemented for non convex, non-uniformly, large scale sized clusters. Also, D-BSCAN can be used to find the number of clusters and it can work efficiently in the presence of noise in the data set. On the other hand, the behavior of D-BSCAN is highly depends on the input parameters (neighborhood radius of a point and the minimum number of points required to form a cluster).

. Two input parameters are required as the input of D-BSCAN: ε (neighborhood radius of a point) and the minimum number of points (Min-pts) required to form a cluster. First, it starts with an arbitrary starting data point that has not been visited yet. This point’s ε-neighborhood radius is found, and if it contains at least Min-pts points, a cluster formation is started. Otherwise, the point is considered as noise. It should be noted that this point may later be found within the ε-neighborhood radius of another point that contains at least Min-pts points. Hence the point can be assigned to some cluster at a later stage. D-BSCAN clusters a dataset by a single scan over all the data points. D-BSCAN can be implemented for non convex, non-uniformly, large scale sized clusters. Also, D-BSCAN can be used to find the number of clusters and it can work efficiently in the presence of noise in the data set. On the other hand, the behavior of D-BSCAN is highly depends on the input parameters (neighborhood radius of a point and the minimum number of points required to form a cluster).

3. Genetic Algorithm (GA)

GAs were introduced by Holland [1] and further described by Goldberg [2] as optimization approaches to find a global or near-global optimal solution. GA starts with a set of potential solutions (chromosomes). Next, genetic operators (selection, mutation and crossover) are applied one after another to obtain a new generation of chromosomes. This process is repeated until the termination criterion is met. Algorithmically, the basic steps of GAs are outlined as below [3] - [7] :

Step I [Start]: random population of chromosomes is generated, that is, suitable solutions for the problem.

Step II [Fitness]: the fitness of each chromosome in the population is evaluated.

Step III [New population]: a new population is created by repeating the following steps:

1) Selection: Select two parents (chromosomes) from a population according to their fitness value. The chance for each chromosome to be selected, as a parent, is determined according to its fitness.

2) Crossover: According to the crossover probability (Pc), new offspring (children) is generated from parents.

3) Mutation: According to mutation probability (Pm), new offspring at each locus (position in chromosome) is mutated.

4) Accepting: new offspring is placed in the new population.

Step IV [Replace]: Use new generated population for a further run of the algorithm.

Step V [Test]: If the end condition is satisfied, return the best solution in current population and stop.

Step VI [Loop]: Go to step II.

The Flowchart of Simple GAs is shown in Figure 1.

4. Hybrid Genetic Algorithm with K-Means for Clustering Problems

The K-means cluster algorithm is one of the most widely used clustering algorithms and has been applied in many fields of science and technology. The major problems of the K-means algorithm are that:

・ It may produce empty clusters depending on initial centre vectors.

・ May converge to values that are not optimal.

・ For large problems global solutions cannot be found with a reasonable amount of computation effort.

This paper presents hybrid genetic algorithm with K-means cluster algorithm that efficiently eliminates this drawbacks.

4.1. Phase I: K-Means Algorithm

Step 1: K initial cluster centres ![]() are chosen randomly from the n observations

are chosen randomly from the n observations![]() .

.

Step 2: A point ![]() is assigned to cluster

is assigned to cluster ![]() iff

iff

![]() (9)

(9)

Step 3: New cluster centres ![]() are computed as follows:

are computed as follows:

![]() (10)

(10)

where ![]() is the number of elements belonging to cluster

is the number of elements belonging to cluster![]() .

.

Step 4: If ![]() then terminate, otherwise continue from step 2.

then terminate, otherwise continue from step 2.

After this phase we get an initial centre for all predetermined clusters.

4.2. Phase II: Genetic algorithm

Step 1: Population initialization

Each individual represent a row-matrix ![]() where n is the number of observation, each gene contain integer

where n is the number of observation, each gene contain integer ![]() which represent the cluster which this observation belongs to it. E.g., let there is ten observation

which represent the cluster which this observation belongs to it. E.g., let there is ten observation ![]() which must be assigned to four cluster

which must be assigned to four cluster![]() . Figure 2 shows the structure of the individual. Also Figure 3 represents the four clusters classification.

. Figure 2 shows the structure of the individual. Also Figure 3 represents the four clusters classification.

Step 2: Evaluation

Evaluate the desired objective function; where the task is to searching for appropriate cluster classifications such that the fitness function is minimized. The clustering fitness function for the K clusters ![]() is given by

is given by

![]() (11)

(11)

![]()

Figure 2. The structure of the individual.

![]()

Figure 3. Classification of the four clusters.

Step 3: Selection

The aim of selection is to direct GA search towards promising regions in the search space. We employ roulette wheel selection in this study; where the individuals on each generation are selected for survival into the next generation according to a probability value. The probability of variable selection is proportional to its fitness value in the population, according to the formula below:

![]() (12)

(12)

where![]() , selection probability of a string x in a population

, selection probability of a string x in a population ![]() and

and

![]() (13)

(13)

Step 4: crossover operator

In this step, we present a modified uniform crossover as shown in Figure 4, where the crossover is done on each individual, in such a way that offspring is constructed by choosing the individual with a probability![]() .

.

Step 5: mutation operator

For each individual, mutation operator is implemented as follows, first select two columns randomly from ith individual and then generate two new columns as shown in Figure 5.

Step 6: Keep the best solutions of the existing population; where GA keeps the best solutions found so far during the process.

4.3. Implementation Results

The experimental results for the proposed algorithm are provided for 4 problems containing artificial data set (problem 1, problem 2, problem 3, and problem 4) which described as follows:

4.3.1. Problem 1

This problem containing a no overlapping data which has two attribute (two-dimensional data), where the number of cluster is varied from two to five![]() , it has 1000 points as shown in Figure 6. Figures 7-10 show the results for

, it has 1000 points as shown in Figure 6. Figures 7-10 show the results for ![]()

4.3.2. Problem 2

This problem containing a no overlapping, data which has two attribute (two-dimensional data), where the num- ber of clusters is five![]() . Figure 11 shows the data set for problem 2 while, Figure 12 shows the results for

. Figure 11 shows the data set for problem 2 while, Figure 12 shows the results for![]() .

.

4.3.3. Problem 3

This problem containing a no overlapping, data which has two attribute (two-dimensional data), where the num- ber of cluster is three![]() . It has 300 data points. The ranges for the three classes are as follows:

. It has 300 data points. The ranges for the three classes are as follows:

![]()

Figure 7. Results for k = 2 for problem 1.

![]()

Figure 8. Results for k = 3 for problem 1.

![]()

![]()

Figure 9. Results for k = 4 for problem 1.

Figure 13 shows the data set for problem 3 while, Figure 14 shows the results for![]() .

.

4.3.4. Problem 4

This problem containing a no overlapping, data which has two attribute (two-dimensional data), where the number of cluster is![]() , It has 900 data points, having nine classes where all the classes are

, It has 900 data points, having nine classes where all the classes are

assumed to have equal a priori probabilities. The ranges for the nine classes [31] are as follows:

![]()

Thus the domain for the triangular distribution for each class and for each axis is 2.6. The data set is shown in Figure 15, while Figures 16-18 show the results for ![]()

5. Conclusion

This paper proposes a hybrid version of the well-known K-means clustering algorithm with GA. The modified

algorithm maintains all important characteristic features of the basic K-means and at the same time eliminates the possibility of generation of empty clusters. Experimental results show that the proposed clustering algorithm is able to solve the clustering problem. Results of simulation experiments using several data sets prove our claim.

NOTES

![]()

*Corresponding author.