1. Introduction

Spectral estimation technology is widely used in the fields of electronic countermeasures, radar, sonar and mobile communication. In this paper, we mainly consider the line spectral estimation in compressed sensing. Considering the problem of the line spectral estimation using a pre-specified discrete Fourier transform matrix, the sparse solution we obtained may not close to the real sparse vectors when the true frequency components may not lie on the pre-specified frequency grid. This error, referred as grid mismatch, results in performance degradation or even recovery failure. Therefore, in this paper, we treat the dictionary parameters as the unknown variable along with the sparse signal, and complete the optimization of the dictionary parameters when we estimate the sparse vector through the iterative way.

Rather than applying the traditional compressed sensing theory, an increasing number of scholars have concentrated on the grid mismatch problem instead. For example, work [1] focused on the impact of the basis mismatch on the reconstruction error by treating the error as a perturbation between the presumed and the actual dictionaries. In work [2] , to handle the grid mismatch, the true dictionary is approximated as a summation of a presumed dictionary and a structured parametrized matrix via the Taylor expansion. A highly coherent dictionary was used to approximate the real dictionary in [3] , and a class of greedy algorithms that use the technique of band exclusion was proposed. On the other hand, in [4] [5] [6] , the grid mismatch problem was studied by proposing an atomic norm-minimization approach to handle an infinite dictionary with continuous atoms. Bayesian statistics has also been applied to solve the grid mismatch problem. In work [7] and [8] , Bayesian approaches were proposed to iteratively refine the dictionary by treating the sparse signals as hidden variables. The work [8] used a generalized expectation-maximization (EM) algorithm to solve the dictionary parameters and determine the sparse vector. Work [9] proved that the problem of compressed sensing using a logarithmic penalty can be transformed into an iterative reweighted

norm regression problem by providing a special surrogate function.

In addition, we analyze the first-order optimal condition of the original problem and then prove that the problem can be transformed into a series of reweighted lasso [10] problems by using the iterative method. In each step of the iteration, the derivative of the anti-triangular penalty function forms the weight of the

norm. Compared with the algorithms proposed in [9] and [11] , our method is more adaptive with regard to the choice of penalty function and the calculation method for the weight, additionally, the sparse effect of the

norm is also better than the

norm. However, it is well known that there is no explicit solution to the problem of the

penalty regression. In this study, we use a Bayesian lasso approach to determine the optimal solution for each step.

The remainder of the paper is organized as follows. Section 2 is the description of the line spectral estimation problem, which we formulate as the penalty least squares problem with dictionary parameters. In Section 3, we provide a theoretical analysis and propose the iterative reweighted

algorithm. In Section 4, we present several sets of numerical experiments to demonstrate that the iterative reweighted

method is better than other state-of-the-art algorithms in many cases. Section 5 concludes the paper and provides some ideas for future work.

2. Model Overview

2.1. Line Spectral Estimation

Assume the line spectral estimation problem where the observed signal is a summation of a number of complex sinusoids:

(1)

And we write it in the form of a matrix expression:

(2)

where

represents the observed value.

is unknown parameters represent the frequency.

determined by parameter

. The covariate in the model

represents the amplitude of the corresponding frequency.

represents a random error term, assuming that they are independent.

2.2. Penalty Least Squares Regression

In the process of signal reconstruction, the dimension of Y is much smaller than the number of measurements (

). Since the signal is sparse, the Equation (2) would be transformed into an optimization problem (3):

(3)

where

stands for the number of the non-zero components of

. The optimization (3), however, is an NP-hard problem (which is difficult to find the solution in polynomial time). We can transform optimization (3) into a penalty least squares problem:

(4)

The optimization (4) can be formulated as an unconstrained optimization problem by removing the constraint and adding a penalty term to the objective function:

(5)

where

represents the adjustable penalty parameter. Different penalty functions form different regularization parameters in the iterative process. We find that the penalty function of the inverse trigonometric function has better properties than other common penalty function such as logarithmic penalty function. In the next section we propose an iterative reweighted

sparse algorithm with anti-trigonometric function penalties.

3. Iterative Reweighted l1 Sparse Algorithm

3.1. Algorithm Description

We now develop an iterative reweighted algorithm for joint dictionary parameter learning and sparse signal recovery. Consider the line spectral estimation with anti-trigonometric penalty function:

(6)

We consider the first derivative of the problem (6). Since the absolute value is involved, we summarize the following derivative functions:

(7)

(8)

The penalty function

cannot be guided at zero,

represents its sub-gradient at zero which is a set of real number:

(9)

If we have the iteration value of step t:

, combine with (7) we can estimate

by solving the next weighted lasso problem:

(10)

Here we use the ideal of Bayesian lasso [11] (which we will briefly introduce later in this article) to find

. Similar to (7), the first derivative of (10) can be summarized as:

(11)

represents the sub-gradient of

at zero which is also a set of real number:

(12)

The next step is to find

. In this situation we do not need to calculate the optimal solution, instead we are going to find the estimation

which satisfied:

(13)

The stop condition of the algorithm is controlled by tolerance value

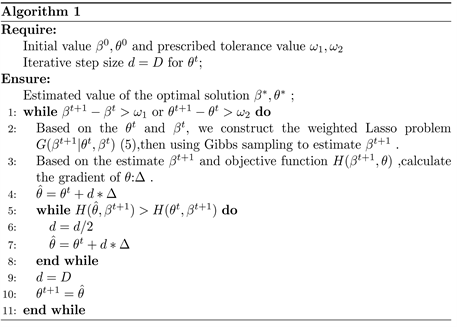

. In this paper we set the tolerance value equals 0.02 in the numerical simulation. Based on the discussion above, we summarise our algorithm as follows:

3.2. Theoretical Analysis

First we want to prove that the objective function (6) is guaranteed to be non-increasing at each iteration:

(14)

Since we obtain

by the gradient descent method, it is obvious that

.

On the other hand, we prove

using the next lemma which has been introduced by [12] :

LEMMA: Given that the adjust parameter

, then we have the following inequality:

(15)

proof:

We first denote

and let

, then by the mean value theorem we have:

, where

between

and

.

Since

is an increasing function and

is a decreasing function, the following inequality:

is always holds for any non-negative value

and

. If we let

and

, the inequality (15) would be certainly proved.

Next we consider the following equality:

(16)

using the lemma above we can yields:

(17)

where

is the optimal solution of problem (10), which satisfied:

(18)

Substituting (18) to (17), we show that we have the inequality:

(19)

in different situations:

When:

:

(20)

then:

(21)

By the fact that

on the condition of

, the inequality (19) has proved to be correct.

When:

:

(22)

then:

(23)

By the fact that

on the condition of

, the inequality (19) has proved to be correct.

When:

:

The set of sub-gradient at

(which is

) should contains 0 when

is the critical point, thus we have the following inequality:

(24)

Consider the inequality we want to prove, we can easily formulate:

(25)

The discussion above proves that we can ensure that the function value keeps non-increasing at each iteration. In addition we want to illustrate that

convergence to

and

convergence to

on the limit situation

and

.

As

is the optimal solution of (10), we define:

.

Consider the first-order condition of

which satisfied:

(26)

On the other hand we have the following conclusion in the situation of

:

(27)

Compare the above two equalities we can conclude that

.

As for the situation when

, consider the inequality from (18):

(28)

Since

, we can easily conclude:

(29)

After the discussion before we can summarize that

always satisfied the first-order condition (7) when

and

. Thus we demonstrate the limit of:

. When

, consider the gradient of

:

(30)

Thus the value of

remains

.

3.3. The Bayesian Lasso

In this article we use the ideal of Bayesian lasso to estimate the optimal solution of problem (10).

Assuming that the prior distribution of the parameter

follows the Laplace distribution:

(31)

Combined with the likelihood function we can get the posterior probability:

(32)

Solving problem (10) is equivalent to solving the maximal probability of posterior probability, which we can obtain from Gibbs sampling [13] .

As Laplace distribution is difficult to directly derive intuitive full condition posterior distribution, the following integral (33) provides an effective solution:

(33)

Using the above integral we can rewritten the Laplace prior distribution

by introducing the intermediate parameter v:

(34)

In problem (32) we let

.

Then we can motivate the following hierarchical Bayesian lasso model:

(35)

where

corresponds to

and

.

For Bayesian inference, the full condition distribution of

and v is:

(36)

where

, the distribution

represent inverse Gaussian

distribution with

,

and

. The density of inverse

Gaussian as follows:

(37)

By repeated sampling, we will form a Markov chain contains a series of point:

.

Since each iteration will lead to a Markov chain, we will get a long sample of

through the whole algorithm:

(38)

And we have demonstrated that

in the above. The simulation results are shown in the next section.

4. Simulation Results

In this section, we carry out a series of experiments to illustrate the performance of our proposed

iterative reweighted algorithm (denoted to as l1-IR). In our simulations, we compare our proposed algorithm with other existing state-of-the-art methods, including the sparse Bayesian learning with dictionary refinement algorithm (denoted as DicRefCS) [7] , the sparse Bayesian learning with dictionary estimation (denoted as SBL-DE) [8] , and especially the super-resolution iterative reweighted algorithm (denoted as SR-IR) [11] .

In order to control the noise level at some of the experiments, we first give the definition of observation quality by the peak-signal-to-noise ratio (PSNR):

(39)

where

represents the variance of noise. We calculate the signal-to-noise ratio (PSNR) to complete the recovery effect comparison:

(40)

where

represents the original sparse signal and

represents the signal recovered by the algorithm. Parameters

and

have the same effect as regularization parameters, we choose

and select optimal parameters

by cross validation.

In the following, we examine the behaviour of respective algorithms under different scenarios. First we control the noise level

, which means

. Figure 1 shows the changes in PSNR of the various algorithms with the change of sparseness S (the sparseness S represents the number of non-zero values in original sparse signal). We keep

and the sparseness S values range from 1 to 10. We set the number of measurements

in order to control the variables. Each data point is averaged by repeated tests.

Figure 1 indicates that the performance of the

iterative reweighted algorithm is better than other state-of-the-art methods. With a low sampling number (

), the performances of the DicRefCS and SBL-DE algorithms deteriorated quickly and failed when the sparseness S was higher than 5. On the other hand, our proposed algorithm still performed well, even when the sparseness S reached 8. It is worth mentioning that the SR-IR algorithm also performed better than the other two Bayesian algorithms but not as well as the L1-IR algorithm. The reasons are that the

norm is sparser than the

norm and we chose a better weight function.

Next, we illustrate the influence of the sample size N on the recovery effect using another set of experiments. We keep

, the sparseness

and the PSNR maintains at 20. We change the number of measurements N from 6 to 32. For each N, Figure 2 shows the performance of respective algorithms.

It can be observed from Figure 2 that, with the increase in N, the recovery performance of the respective algorithms is increased to a relatively high level but our proposed algorithm outperforms the other methods for a small number

![]()

Figure 1. RSNR in different S while K = 64, n = 20, PSNR = 20.

of measurements. This means that our method performs better for the recovery of sparse signals when the number of samples is limited.

Our last experiment tested the recovery performance of respective algorithm under different noise level PSNR. According to the definition of PSNR, we set the variance of noise from 0.01 to 1, which makes the PSNR changed from 20 to 0. At this experiment use signals of length

contains

complex sinusoids and set the number of measurements

. We take 20 points evenly between 0 and 20 and record the performance of respective algorithm in each point. Each data point is averaged by repeated tests.

Relatively speaking, the L1-IR algorithm and the SR-IR algorithm were more stable than the other algorithms as the noise level increased. Figure 3 indicates that the PSNR of all algorithms deteriorated quickly when the noise was very strong. In the case of relatively strong noise, some trials lead to failure results and the failure rate increases with an increasing noise level. Here, a trial was considered successful if the PSNR was higher than 15 dB. Table 1 shows the success rate of the algorithms for different levels of PSNR (at each level, we repeated 20 trials) in the last experiment. The success rate of DicRefCS and SBL-DE is obviously less than that of l1-IR and SR-IR when

is large than 0.2.

The validity, superiority, and stability of the l1-IR algorithm are illustrated by these experiments, indicating that the algorithm is worth applying in some practical cases.

5. Conclusion

In this paper, we treated the real dictionary parameters as unknown variables, and studied the line spectral estimation problem with unknown dictionary parameters. Based on the ideal of Bayesian lasso and the analysis of the first-order

![]()

Figure 2. RSNR in different n while K = 64, S = 3, PSNR = 20.

![]()

Figure 3. RSNR in different noise level while K = 64, S = 3, n = 20.

![]()

Table 1. Successful rate of different algorithms.

condition of the optimal solution, we proposed an iterative reweighted

penalty regression algorithm. We proved that in each step of the iterative process, the function value is continuously reduced until the approximate solution of the real sparse vector is obtained. The numerical results in Section 4 illustrated that the performance of our algorithm is better than other state-of-the-art algorithms in some cases. The disadvantage is that our method is more time-consuming, partly because in each sampling step it is necessary to ensure convergence, resulting in a sampling length that cannot be effectively reduced. Future studies will focus on this problem.