The Prediction of Stock Price Based on Improved Wavelet Neural Network

1. Introduction

Stock prices are multivariate time series whose relationships are non-linear, time-varying and uncertain, and their prediction has always been a challenge to both economists and researchers [1] [2].

A variety of models have been established to predict stock prices by observing regularities in the data, such as the Autoregressive Integrated Moving Average (ARIMA) model [3], the support vector machine (SVM) [4], the neural network (NN) and the wavelet neural network (WNN) [5]. ARIMA is a typical linear time series analysis method, but the stock market is a large nonlinear dynamic system and the share price index is irregular, so when an ARIMA model is applied to such complex time series the forecasting results are often not ideal. SVM is a non-traditional nonlinear forecasting technique based on the structural risk minimization principle. By minimizing the structural risk it can avoid problems such as over-fitting, the curse of dimensionality and local minima. However, the regression cannot approximate every function.

With the rapid development of artificial intelligence technology, NN has been employed as a nonlinear predictor for financial time series. Although its prediction results usually outperform those of ARIMA models, traditional NN models have several disadvantages: they depend on a large number of model parameters, they are easily trapped in local minima, and they tend to over-fit the training data, which results in poor generalization ability.

Wavelet analysis theory developed in the mid-1980s, and WNN was first proposed by Qinghua Zhang and has since been widely applied [6]. WNN has the following advantages. First, because of the low correlation among wavelet neurons, WNN converges quickly. Second, the stretching and movement factors of the wavelet function make the approximation capability of the network more powerful. Third, WNN matches the signal with good local characteristics and multiresolution learning, so it can express the characteristics of a function and achieves higher forecast accuracy [7]. However, WNN still has two disadvantages. First, the learning rate is constant, so if it is too large the training may overshoot and fail to converge. Second, WNN is usually trained with the stochastic gradient algorithm or gradient descent, which consider only the correction direction at the nth step and ignore the directions of the previous steps, so training can become trapped in local minima [8].

To address these shortcomings of WNN, this paper proposes modeling and forecasting the stock market with an improved WNN. Momentum terms are added to the parameter adjustment and learning of the network; at the same time, the learning rate and the momentum factor are self-adaptive, and the form of the decreasing factor of the learning rate is connected with the forecasting error. Applying the improved WNN to predict the Shanghai stock market shows that the improved WNN is superior to WNN.

2. Models

2.1. Wavelet Neural Network (WNN)

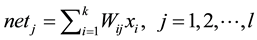

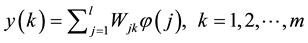

WNN is neural network model composed by wavelet function as its activation function. Structural diagram of three-layer wavelet neural network is shown in Figure 1.  are input of network,

are input of network,  are output,

are output,  represents the weight between input layer and hidden layer,

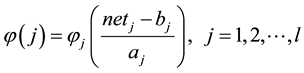

represents the weight between input layer and hidden layer,  is weight between hidden layer and output layer. In this time, the input and output of hidden layer as follows,

is weight between hidden layer and output layer. In this time, the input and output of hidden layer as follows,

the output of network as follows,

is wavelet function,

is wavelet function,  is movement parament,

is movement parament,  is stretching parameter, the number of hidden layer

is stretching parameter, the number of hidden layer

nodes is  is the number of input layer nodes and m is the output layer nodes’.

is the number of input layer nodes and m is the output layer nodes’.
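As a reading aid, the forward computation described above can be sketched in the form commonly used for three-layer wavelet networks. The notation is an assumption, not taken from the paper: $x_i$ for the inputs, $y_k$ for the outputs, $\omega_{ij}$ and $\omega_{jk}$ for the two sets of weights, $\psi$ for the wavelet function, $b_j$ and $a_j$ for the movement and stretching parameters, and $p$ and $l$ for the numbers of input and hidden nodes (only $m$ is the paper's symbol).

```latex
% Assumed notation (hypothetical, see lead-in): x_i, y_k, \omega_{ij}, \omega_{jk},
% \psi, b_j (movement), a_j (stretching), p inputs, l hidden nodes, m outputs.
\begin{align}
  s_j &= \sum_{i=1}^{p} \omega_{ij}\, x_i, & j &= 1,\dots,l, \\
  h_j &= \psi\!\left(\frac{s_j - b_j}{a_j}\right), & j &= 1,\dots,l, \\
  y_k &= \sum_{j=1}^{l} \omega_{jk}\, h_j, & k &= 1,\dots,m.
\end{align}
```

Here $s_j$ is the input and $h_j$ the output of hidden node $j$; the movement parameter shifts and the stretching parameter rescales the argument of the wavelet.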

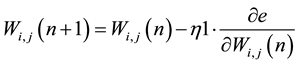

The weights, movement parameters and stretching parameters are corrected according to the prediction error of the network. The correction formulas involve the error of the network output, the expected output and the learning rate.
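A generic gradient-descent form of such a correction is sketched here for orientation. It assumes a squared-error objective and the hypothetical symbols $d_k$ (expected output), $y_k$ (network output), $\eta$ (learning rate) and $\theta$ (any adjustable parameter: a weight, a movement parameter or a stretching parameter). The paper's own formulas may differ in detail.

```latex
% Assumed notation (hypothetical): d_k expected output, y_k network output,
% \eta learning rate, \theta any adjustable parameter, n the iteration index.
\begin{align}
  E &= \frac{1}{2}\sum_{k=1}^{m} \left(d_k - y_k\right)^2, \\
  \theta^{(n+1)} &= \theta^{(n)} - \eta\, \frac{\partial E}{\partial \theta}\bigg|_{\theta = \theta^{(n)}}.
\end{align}
```

With a constant $\eta$, each step depends only on the current gradient, which is the limitation the improved model is meant to address.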

2.2. Improved Wavelet Neural Network

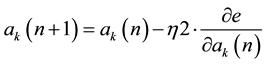

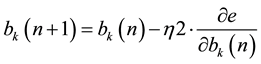

Because the learning rate is constant, training may overshoot and fail to converge if it is too large. At the same time, WNN is usually trained with the stochastic gradient algorithm or gradient descent, which consider only the correction direction at the nth step and ignore the directions of the previous steps, so training is easily trapped in local minima. To overcome these shortcomings, we add a momentum term to the correction of each group of parameters (the two sets of weights, the stretching parameters and the movement parameters). The corrections use the learning rate at the nth step and the momentum factor at the nth step, both of which are adjusted during training.

The learning rate and the momentum factor are adjusted according to the change in the learning error between successive iterations. If the learning error increases, it is better to decrease the learning rate and remove the momentum; if the learning error decreases, the direction of correction is right, so the learning rate and the momentum are kept unchanged.
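The rule above can be illustrated with a minimal Python sketch of one correction step. The function name `adaptive_momentum_step` and the parameters `lr_decay` and `base_momentum` are hypothetical. In particular, the paper ties the decreasing factor of the learning rate to the forecasting error, whereas this sketch simply assumes a fixed factor, and it assumes that "removing" the momentum means using zero momentum for that step only.

```python
def adaptive_momentum_step(theta, grad, prev_delta, lr, err, prev_err,
                           lr_decay=0.5, base_momentum=0.9):
    """One momentum-based correction of a list of parameters.

    theta, grad and prev_delta are equal-length lists of floats.
    lr_decay and base_momentum are illustrative assumptions, not values
    or formulas taken from the paper.
    """
    if err > prev_err:
        # Learning error increased: shrink the learning rate and drop momentum.
        lr = lr * lr_decay
        momentum = 0.0
    else:
        # Learning error did not increase: keep the learning rate and momentum.
        momentum = base_momentum
    # Correction = gradient step plus a fraction of the previous correction.
    delta = [-lr * g + momentum * d for g, d in zip(grad, prev_delta)]
    new_theta = [t + d for t, d in zip(theta, delta)]
    return new_theta, delta, lr


# Usage: the error rose from 1.0 to 2.0, so the rate is halved and momentum dropped.
theta, delta, lr = adaptive_momentum_step(
    theta=[1.0], grad=[2.0], prev_delta=[0.1], lr=0.1, err=2.0, prev_err=1.0)
```

Returning the updated learning rate lets the caller carry it into the next iteration, so a reduction persists until the error rises again.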

2.3. The Training Process by Improved WNN Model

Step 1. When the initial parameters are not suitably selected, WNN training is slow or may even diverge [9], so a genetic algorithm (GA) is used to optimize the initial weights, stretching parameters and movement parameters [10].

Step 2. Set the initial learning rate and the initial momentum factor.

Step 3. Divide the sample into a training sample and a test sample. The training sample is used to train the network and the test sample is used to test the prediction accuracy.

Step 4. Feed the training sample to the network and calculate the error between the expected output and the actual output.

Step 5. Use the error to adjust the weights, stretching parameters and movement parameters so that the output of the network approaches the expected output.

Step 6. Judge whether the algorithm has ended. If not, return to Step 3.

3. Case Study

3.1. Data Processing

Taking the Shanghai stock market as an example, the chosen time series is the daily closing index of the Shanghai Stock Exchange from March 23rd, 2012 to November 28th, 2014. This yields a total of 607 data samples, of which the first 507 are selected as training samples and the last 100 as test samples. To improve the prediction accuracy, the data are normalized using the maximum and the minimum values in the data.
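A minimal sketch of such a normalization is given below. It assumes the usual min-max form that maps the data to the interval [0, 1]; the paper's exact formula is not reproduced here, and the function names are hypothetical.

```python
def min_max_normalize(series):
    """Scale a list of numbers to [0, 1] using its minimum and maximum.

    Assumes the common form (x - x_min) / (x_max - x_min); the exact
    formula used in the paper may differ (for example, a [-1, 1] range).
    """
    lo, hi = min(series), max(series)
    if hi == lo:
        raise ValueError("series is constant; min-max scaling is undefined")
    return [(x - lo) / (hi - lo) for x in series], lo, hi


def denormalize(value, lo, hi):
    """Map a normalized value back to the original scale."""
    return value * (hi - lo) + lo


# Usage with a toy series (not the Shanghai index data).
scaled, lo, hi = min_max_normalize([2.0, 4.0, 6.0])
```

Keeping `lo` and `hi` makes it possible to convert network outputs back to index values before computing errors on the original scale.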

3.2. Training of Network

Taking into account the fact that the stock market has five trading days a week, a WNN forecast model with a five-dimensional input [11] and a one-dimensional output is established, and the number of neurons in the hidden layer is set to 6. We choose a three-layer network because it can approximate an arbitrary function. The structure of the WNN is therefore 5-6-1. Different wavelet functions used as the activation function give different performance [12]; in this paper, we choose the Morlet wavelet function. The two learning rates are 0.01 and 0.001, respectively. The expected error is 0.001 and the number of iterations is 100. The improved WNN has the same structure as the WNN. Its two initial learning rates are also 0.01 and 0.001, respectively; the initial momentum factor is 0.9; the expected error is 0.001; and the number of iterations is 100. GA is used to optimize the initial weights, stretching parameters and movement parameters of both the WNN and the improved WNN. The number of iterations is 50. In GA, the population size is 40, the number of generations is 50, the crossover probability is 0.2 and the mutation probability is 0.1.
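To make the 5-6-1 structure concrete, the following Python sketch builds five-day input windows and evaluates one forward pass. It is an illustration under assumptions: the Morlet wavelet is taken in the real-valued form cos(1.75 t) exp(-t^2 / 2) that is common in the WNN literature, and all function and variable names are hypothetical rather than the authors' implementation.

```python
import math


def morlet(t):
    """Real-valued Morlet wavelet in a commonly used form (an assumption)."""
    return math.cos(1.75 * t) * math.exp(-t * t / 2.0)


def make_windows(series, width=5):
    """Pair each run of `width` consecutive values with the value that follows."""
    count = len(series) - width
    inputs = [series[i:i + width] for i in range(count)]
    targets = [series[i + width] for i in range(count)]
    return inputs, targets


def wnn_forward(x, w_in, a, b, w_out):
    """Forward pass of a single-output three-layer WNN.

    w_in[j][i] weights input i into hidden node j; a[j] and b[j] are the
    stretching and movement parameters; w_out[j] weights hidden node j.
    """
    hidden = []
    for j in range(len(a)):
        s = sum(w_in[j][i] * x[i] for i in range(len(x)))
        hidden.append(morlet((s - b[j]) / a[j]))
    return sum(w_out[j] * hidden[j] for j in range(len(hidden)))


# Usage: a 5-6-1 network with placeholder parameters (not GA-optimized values).
inputs, targets = make_windows([0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7])
w_in = [[0.0] * 5 for _ in range(6)]
prediction = wnn_forward(inputs[0], w_in, a=[1.0] * 6, b=[0.0] * 6, w_out=[0.5] * 6)
```

In the paper the initial weights, stretching parameters and movement parameters come from GA; the constants above only show the shapes involved.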

3.3. Simulation and Evaluation

The paper uses the root mean square error (RMSE) and the mean absolute percentage error (MAPE) as the evaluation standards of the network, which are defined as

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - d_i\right)^2}$$

$$\mathrm{MAPE} = \frac{1}{n}\sum_{i=1}^{n}\left|\frac{y_i - d_i}{d_i}\right| \times 100\%$$

where n refers to the number of test samples, $y_i$ refers to the actual output of the network, and $d_i$ refers to the desired output.
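Both measures are straightforward to compute. The sketch below uses the standard definitions, with MAPE expressed in percent and taken relative to the desired output; the function and argument names are illustrative.

```python
import math


def rmse(actual, desired):
    """Root mean square error between network outputs and desired outputs."""
    n = len(desired)
    return math.sqrt(sum((y - d) ** 2 for y, d in zip(actual, desired)) / n)


def mape(actual, desired):
    """Mean absolute percentage error, in percent, relative to desired outputs.

    Desired values must be non-zero.
    """
    n = len(desired)
    return 100.0 * sum(abs((y - d) / d) for y, d in zip(actual, desired)) / n


# Usage with toy numbers (not results from the paper).
example_rmse = rmse([1.0, 2.0], [1.0, 4.0])
example_mape = mape([90.0, 110.0], [100.0, 100.0])
```

RMSE is on the scale of the data and penalizes large deviations more heavily, while MAPE is scale-free, which is why reporting both gives a fuller comparison of two models.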

Figure 2 shows the forecasting results of WNN and improved WNN, whose initial parameters were optimized by GA. Their RMSE and MAPE are shown in Table 1.

From Figure 2 we can see that, after optimization by GA, the improved WNN has a better forecasting ability than WNN.

Figure 2. WNN forecasting and Improved WNN forecasting.

Table 1. Comparison of forecasting error in different methods.

Table 1 summarizes the performance indicators of the forecasting errors of the two models. Comparing the two methods, it can be seen that the improved WNN improves forecast accuracy: it reduces the RMSE by about 20% and the MAPE by about 19%.

4. Conclusion

In this paper, the application of an improved wavelet neural network to stock market prediction is studied. Because the forecasting accuracy is easily affected by the initial parameters, GA is first used to optimize them. The forecasting simulation results on Shanghai index data show that the improved WNN method is effective and that the stock market model has good prediction performance.
