1. Introduction

Target tracking in video sequences is an important research subject in computer vision. It has been widely applied in video surveillance, aerospace systems, intelligent traffic management, medical diagnosis, military applications and so on. In recent years, researchers at home and abroad have studied this field extensively and proposed many different tracking methods. Meanshift [1] [2] is a popular, fast, nonparametric matching algorithm based on density estimation, and it has been widely used. However, because it lacks template updating and uses a fixed kernel, the target can be lost [3] when its scale changes. Bradski [4] proposed the Camshift algorithm, which runs Meanshift tracking on each frame. The algorithm adapts to size changes automatically, but tracking fails under strong light, interference from a similarly colored background, or occlusion. To reduce the influence of illumination on tracking, Camshift first transforms the image from RGB color space to HSV color space [5], builds a color histogram from the H component of the image, replaces each pixel value of the original image with the corresponding histogram statistic, and requantizes the result to obtain the color probability distribution image. A color histogram reflects only the statistical information of the target's color and not the spatial information of the target. When the ambient light is unstable, Camshift cannot keep tracking [6]. Feature matching is another common approach to target tracking. Speeded-Up Robust Features (SURF), which are built on scale space, are locally invariant to translation, rotation, scaling and so on. SURF also outperforms the scale-invariant feature transform (SIFT) [7] and other algorithms in repeatability, robustness and distinctiveness, and it is both fast and precise. Ta et al. studied a continuous, fast target tracking and recognition algorithm named SURFTrack [8] [9] that uses a local feature description. Experiments show that it has good tracking performance. Reference [10] combines color features and SURF features to locate the target precisely and updates the result according to the Bhattacharyya coefficient between the candidate region and the template region. This effectively handles target deformation, scale change and color interference. However, because SURF tracks targets by matching feature points, it fails easily [11] when the target is small or its features are monotonous, and it cannot meet real-time requirements on large images. In [12], a Kalman filter (KF) is introduced into the Camshift algorithm to predict the position of the target and improve the accuracy and real-time performance of tracking. However, the KF is only suitable for linear systems.

To address the above problems, an improved Camshift algorithm combined with the Unscented Kalman Filter (UKF) [13] [14] is proposed. First, the color model of the target image is converted from RGB to HSV, and the H component of the HSV color space is used to establish the color model of the target. Because SURF features are invariant, combining the Camshift result with the SURFTrack result by weighting reduces the influence of illumination and similar backgrounds on Camshift. To counter the influence of occlusion, the UKF is introduced into the improved Camshift to predict the target's position and keep tracking accurate and real-time.

2. Algorithm Principle

2.1. Camshift Algorithm

Camshift is an improved algorithm based on Meanshift. It mainly consists of back projection, the Meanshift algorithm and the Camshift algorithm itself [15]. Camshift first converts the color model of the image from RGB to HSV and uses the H component to establish the target's color model. It then replaces each pixel value of the original image with the corresponding histogram statistic and requantizes the result to obtain the color probability distribution image.
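The back projection step can be illustrated with a minimal sketch. It assumes 8-bit RGB pixels, a 16-bin hue histogram scaled so that its peak is 1.0, and no saturation or value masking; the function names `hue_histogram` and `back_project` and the toy image are illustrative and do not come from the paper.

```python
import colorsys

def hue_histogram(pixels, bins=16):
    """Hue histogram of (r, g, b) pixels in 0..255, scaled so the peak bin is 1.0."""
    hist = [0.0] * bins
    for r, g, b in pixels:
        h, _, _ = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
        hist[min(int(h * bins), bins - 1)] += 1.0
    peak = max(hist)
    return [v / peak for v in hist] if peak > 0 else hist

def back_project(image, hist):
    """Replace each pixel with the histogram value of its hue bin."""
    bins = len(hist)
    out = []
    for row in image:
        out_row = []
        for r, g, b in row:
            h, _, _ = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
            out_row.append(hist[min(int(h * bins), bins - 1)])
        out.append(out_row)
    return out

# Toy example (assumed data): a red target on a blue background
RED, BLUE = (255, 0, 0), (0, 0, 255)
image = [[BLUE, BLUE, BLUE, BLUE],
         [BLUE, RED, RED, BLUE],
         [BLUE, RED, RED, BLUE],
         [BLUE, BLUE, BLUE, BLUE]]
target_hist = hue_histogram([RED, RED, RED, RED])
prob = back_project(image, target_hist)
```

In this toy case the red pixels receive probability 1.0 and the blue pixels 0.0, which is the kind of distribution image that Meanshift then searches.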

Camshift runs the Meanshift operation on every frame of the video sequence and uses the center position and size of the search window computed for one frame as the initial values of the Meanshift search window in the next frame. Repeating this iteration tracks the target.
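One iteration of this scheme on a single frame might look like the sketch below. It assumes a probability image with values in [0, 1], a square window, and the commonly used resize rule of side length 2·sqrt(M00); these choices and the names `window_moments` and `camshift_step` are assumptions for illustration, not the paper's implementation.

```python
import math

def window_moments(prob, x0, y0, w, h):
    """Zeroth and first moments of prob inside the window, clipped to the image."""
    rows, cols = len(prob), len(prob[0])
    m00 = m10 = m01 = 0.0
    for y in range(max(0, y0), min(rows, y0 + h)):
        for x in range(max(0, x0), min(cols, x0 + w)):
            p = prob[y][x]
            m00 += p
            m10 += x * p
            m01 += y * p
    return m00, m10, m01

def camshift_step(prob, window, max_iter=20):
    """Mean shift until the window stops moving, then resize it from M00."""
    x0, y0, w, h = window
    center = None
    m00 = 0.0
    for _ in range(max_iter):
        m00, m10, m01 = window_moments(prob, x0, y0, w, h)
        if m00 == 0.0:
            return (x0, y0, w, h), None  # no target probability in the window
        center = (m10 / m00, m01 / m00)
        # Re-center the window on the centroid (round half up)
        nx0 = int(math.floor(center[0] - (w - 1) / 2.0 + 0.5))
        ny0 = int(math.floor(center[1] - (h - 1) / 2.0 + 0.5))
        if (nx0, ny0) == (x0, y0):
            break
        x0, y0 = nx0, ny0
    # Assumed resize rule: side = 2 * sqrt(M00) for probabilities in [0, 1]
    side = max(1, int(math.floor(2.0 * math.sqrt(m00) + 0.5)))
    x0 = int(math.floor(center[0] - (side - 1) / 2.0 + 0.5))
    y0 = int(math.floor(center[1] - (side - 1) / 2.0 + 0.5))
    return (x0, y0, side, side), center

# Toy probability image (assumed data): a 2 x 2 blob at rows 4-5, columns 4-5
prob = [[0.0] * 8 for _ in range(8)]
for y in (4, 5):
    for x in (4, 5):
        prob[y][x] = 1.0
window, center = camshift_step(prob, (1, 1, 4, 4))
```

The returned window would serve as the initial search window for the next frame.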

2.2. UKF Algorithm

To handle the nonlinear problems in maneuvering target tracking and improve the filtering effect, Julier [16] presented a Kalman filter based on the unscented transform, namely the UKF, which has been used for video tracking [17]. On the premise that the mean and covariance of the random vector are preserved, a set of sample points (sigma points) is chosen and passed through the nonlinear function. The mean and covariance are then estimated from the statistics of the transformed sigma points, which avoids the error caused by linearization.

2.2.1. Establish UKF Model

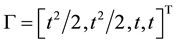

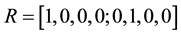

UKF used for target tracking can be divided into two parts, namely, state transform model and state observation model. The state is the state of target, observation is for sequence image; target state includes center position’s coordinates and speed of target. Target’s speed changes randomly, assuming that its acceleration is  and

and  obeys the Gauss distribution

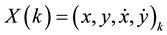

obeys the Gauss distribution . Set the target state vector:

. Set the target state vector: .

.  and

and  represent the coordinates of the target’s center position,

represent the coordinates of the target’s center position,  and

and  are respectively derivative of

are respectively derivative of  and

and , representing the target’s speed. Observation variable

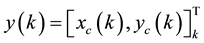

, representing the target’s speed. Observation variable  (

( and

and  represent observation value of target location). UKF state transition model and observation model are respectively:

represent observation value of target location). UKF state transition model and observation model are respectively:

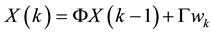

(1)

(1)

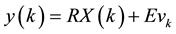

(2)

(2)

;

; ;

; ;

;

where ![]() and ![]() respectively represent the Gaussian white noise of the state transition model and the observation model; ![]() is the interval between two adjacent frames, ![]().

Assuming ![]() and ![]() are the coordinates of the target's center, which is initialized by manually positioning the target area, ![]().
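A constant-velocity model is one simple form consistent with this description. The sketch below assumes the state order [x, y, vx, vy], omits the noise terms, and uses a frame interval of one time unit; these choices and the function names are illustrative assumptions, and the exact matrices of Equations (1) and (2) are not reproduced here.

```python
def cv_transition(state, dt):
    """Constant-velocity transition for state = [x, y, vx, vy] (noise omitted)."""
    x, y, vx, vy = state
    return [x + vx * dt, y + vy * dt, vx, vy]

def observe(state):
    """Observation model: only the center position (x, y) is measured."""
    return [state[0], state[1]]

# Assumed example: a manually selected center with a guessed initial velocity
initial_state = [10.0, 20.0, 2.0, -1.0]
predicted = cv_transition(initial_state, dt=1.0)
measured = observe(predicted)
```

With a manually positioned target, the velocity components would typically start at zero and be corrected by the filter over the first frames.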

2.2.2. UKF Filter

The unscented transform is the core of the UKF algorithm and an important method for nonlinear state estimation. Assume that the mean and variance of the state vector with ![]() dimensions at time ![]() are ![]() and ![](). The state vector of the UKF is an augmented vector ![]() composed of ![]() and the noise, and the corresponding vector of sigma points is ![](). The concrete implementation steps of UKF filter estimation are as follows:

1) Initialization

![]() (3)

![]() (4)

![]() (5)

![]() (6)

where ![]() is the noise covariance of the state transition model and ![]() is the observation noise.

2) Calculate sigma points using Equation (7)

![]() (7)

where ![](); ![](), ![]() is a candidate parameter, ![]().

3) Time update. Pass the sigma points through the state transition Equation (8) and the observation Equation (9), and calculate the mean of the state vector at time ![]() by Equation (10)

![]() (8)

![]() (9)

![]() (10)

where ![]() is the state transition equation, ![]() is the observation equation, ![]() is the weight coefficient for the mean, ![](); ![](); ![]().

4) Observation update. Substitute the results of Equation (8) and Equation (9) into Equation (11) and Equation (12), and calculate the gain by Equation (13)

![]() (11)

![]() (12)

![]() (13)

![](), ![](), where ![](); ![]().

Substitute the gain into Equation (14) and Equation (15) to update the mean and variance of the state vector.

![]() (14)

![]() (15)

The UKF filter predicts the position of the fast-moving target. Because both the moving object and the motion model are uncertain, the Camshift tracking result is fed back to the UKF at each step to update and correct its state model.
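The sigma point construction and the weighted statistics used in the time update can be sketched as follows. This is the basic unscented transform with a single parameter `kappa` and without the augmented noise state, so it is a simplification of the steps above rather than the paper's exact formulation; the gain computation is not shown, and all names are illustrative.

```python
import math

def cholesky(a):
    """Lower-triangular L with L * L^T = a (a symmetric positive definite)."""
    n = len(a)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                low[i][j] = math.sqrt(a[i][i] - s)
            else:
                low[i][j] = (a[i][j] - s) / low[j][j]
    return low

def sigma_points(mean, cov, kappa=1.0):
    """2n + 1 sigma points and their weights (basic form, parameter kappa)."""
    n = len(mean)
    scale = n + kappa
    low = cholesky([[scale * c for c in row] for row in cov])
    points = [list(mean)]
    for j in range(n):
        col = [low[i][j] for i in range(n)]
        points.append([m + c for m, c in zip(mean, col)])
        points.append([m - c for m, c in zip(mean, col)])
    weights = [kappa / scale] + [0.5 / scale] * (2 * n)
    return points, weights

def weighted_stats(points, weights):
    """Weighted mean and covariance of a set of points."""
    n = len(points[0])
    mean = [sum(w * p[i] for p, w in zip(points, weights)) for i in range(n)]
    cov = [[sum(w * (p[i] - mean[i]) * (p[j] - mean[j])
                for p, w in zip(points, weights))
            for j in range(n)] for i in range(n)]
    return mean, cov

def unscented_transform(f, mean, cov, kappa=1.0):
    """Propagate mean and covariance through f using sigma points."""
    points, weights = sigma_points(mean, cov, kappa)
    return weighted_stats([f(p) for p in points], weights)

# Assumed example: constant-velocity motion over one frame, state [x, y, vx, vy]
def motion(s):
    return [s[0] + s[2], s[1] + s[3], s[2], s[3]]

identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
pred_mean, pred_cov = unscented_transform(motion, [0.0, 0.0, 1.0, 2.0], identity)
```

For a linear function such as `motion`, the transform reproduces the exact propagated mean and covariance; its benefit over linearization appears when the function is nonlinear.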

3. Tracking Algorithm Based on SURF Matching

3.1. SURF Feature Extraction and Target Description

SURF features describe the target's texture and spatial information well, and their performance is roughly the same as that of SIFT. Owing to the use of box filters and integral images, SURF computes nearly four times faster than SIFT, which gives it good real-time performance. The process of SURF feature extraction is shown in Figure 1.

SURF feature extraction proceeds as follows. First, an integral image is established for each frame, the window size of the box filter is increased gradually, and fast convolution is performed on the integral image to construct the pyramid scale space. Next, adjacent images on each layer of the pyramid are subtracted to obtain the differential scale space. Each point of this space is compared with all points in its 3 × 3 × 3 neighborhood across the same and adjacent scales, extreme points are obtained with the Hessian matrix, and interpolation and optimization yield the stable feature points. Then the main direction around each feature point is found, a region is constructed, the Haar wavelet transform is applied to points in the region, and the SURF descriptor vector is extracted. Finally, the Euclidean distance between the feature vectors of the two images is calculated and the features are matched by nearest-neighbor matching.
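The reason box filters are cheap on an integral image is that the sum over any rectangle needs only four lookups, regardless of the filter size. A minimal sketch, with illustrative function names and a toy 3 × 3 image:

```python
def integral_image(img):
    """Integral image with one extra row and column of zeros on the top and left."""
    rows, cols = len(img), len(img[0])
    ii = [[0] * (cols + 1) for _ in range(rows + 1)]
    for y in range(rows):
        row_sum = 0
        for x in range(cols):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii

def box_sum(ii, x0, y0, x1, y1):
    """Sum of pixels with x0 <= x < x1 and y0 <= y < y1, from four lookups."""
    return ii[y1][x1] - ii[y0][x1] - ii[y1][x0] + ii[y0][x0]

img = [[1, 2, 3],
       [4, 5, 6],
       [7, 8, 9]]
ii = integral_image(img)
total = box_sum(ii, 0, 0, 3, 3)   # whole image: 45
corner = box_sum(ii, 1, 1, 3, 3)  # 5 + 6 + 8 + 9 = 28
```

Increasing the box filter size therefore changes only the lookup coordinates, not the cost per response.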

3.2. Target Locating and Tracking

![]()

Figure 1. Process of SURF feature extraction.

In this paper, the target tracking template is first acquired from the video frames. The SURF feature points of the target template are then extracted, and the position and size of the target are initialized. Assume that the target's center in the last frame is ![]() and the scale of the tracking window is ![](). The relationship between two adjacent frames can be described by an affine transformation model. This model is established from the feature points of the two adjacent frames matched by SURF, and the affine transformation matrix between them is calculated to correct the target's position and size. The affine transformation gives the target's center position as follows:

![]() (16)

where ![]() and ![]() represent the scaling (stretch) of the target tracking window in the horizontal and vertical directions, respectively, ![]() and ![]() are the corresponding offsets, and ![]() and ![]() can be obtained by the RANSAC method.

The new target center ![]() and the target window size ![]() in the current frame can be obtained by Equation (16). When there are too many matched points between adjacent frames, this method is time-consuming. In that case, the centroid of the successfully matched feature points is taken as the center position in the current frame, and the scale offset of the target is set to the larger of the changes in the horizontal and vertical directions.

![]() (17)

![]() (18)

where ![]() is the number of feature points matched successfully between two adjacent frames.
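The centroid shortcut can be sketched as below. The center is the mean of the matched points in the current frame. The scale rule is an assumption made for illustration: it measures the spread of the matched points along each axis in both frames and keeps the ratio that deviates most from 1, which is only one possible reading of "the larger of the changes". The function names and sample points are hypothetical.

```python
def centroid(points):
    """Mean position of a list of (x, y) points."""
    n = len(points)
    return (sum(x for x, _ in points) / n, sum(y for _, y in points) / n)

def spread(points, c):
    """Mean absolute deviation of the points from c along x and along y."""
    n = len(points)
    return (sum(abs(x - c[0]) for x, _ in points) / n,
            sum(abs(y - c[1]) for _, y in points) / n)

def locate_from_matches(prev_pts, curr_pts):
    """Center from the current centroid; scale from the axis that changed most."""
    c_prev, c_curr = centroid(prev_pts), centroid(curr_pts)
    sx_prev, sy_prev = spread(prev_pts, c_prev)
    sx_curr, sy_curr = spread(curr_pts, c_curr)
    ratio_x = sx_curr / sx_prev if sx_prev > 0 else 1.0
    ratio_y = sy_curr / sy_prev if sy_prev > 0 else 1.0
    # Assumed rule: keep the ratio that deviates most from 1 (no change)
    scale = ratio_x if abs(ratio_x - 1.0) >= abs(ratio_y - 1.0) else ratio_y
    return c_curr, scale

# Hypothetical matched points in the previous and current frames
prev_pts = [(0.0, 0.0), (2.0, 0.0), (0.0, 2.0), (2.0, 2.0)]
curr_pts = [(10.0, 10.0), (14.0, 10.0), (10.0, 13.0), (14.0, 13.0)]
center, scale = locate_from_matches(prev_pts, curr_pts)
```

Compared with a RANSAC affine fit, this costs a single pass over the matched points.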

4. The Algorithm’s Improvement and Implementation Steps

The traditional Camshift algorithm transforms the color space from RGB to HSV and uses the H component as the histogram template to obtain the color probability distribution, which reduces the influence of illumination on target tracking. However, when the background color is similar to the target's, tracking accuracy decreases greatly. SURF features are rotation and scale invariant and adapt well to illumination changes, and they retain these advantages under partial occlusion or in cluttered scenes. This paper therefore proposes an improved target tracking algorithm that fuses Camshift and SURF. The block diagram of the improved Camshift algorithm is shown in Figure 2.

Implementation steps of the improved Camshift algorithm:

1) Initialize the first frame to determine the target template, set the size and position of the tracking window, and extract the SURF features and the histogram of the target template;

2) Find the centroid in the search window with the Camshift algorithm, and obtain the new size ![]() and center position ![]() of the search window, together with the Bhattacharyya coefficient between the color histograms of the target image and the current frame;

3) At the same time, use the SURF feature matching tracker to find the center position ![]() and scale ![]() of the target's new search window in the current frame, and calculate the Bhattacharyya coefficient ![]() between the color histograms of the search area and the template;

4) After both trackers have run, use ![]() and ![]() to weight the two tracking results and take the weighted result as the target in the current frame. This amounts to correcting the Camshift result with the SURF result, since the Camshift result is not very accurate under illumination change or background interference. The corrected tracking result is as follows:

![]()

In the next frame of the video, initialize the position and size of the search window with the values from Step 4, then jump to Step 2 and continue.
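The weighting in Step 4 can be sketched as follows. The Bhattacharyya coefficient is the standard sum of the square roots of the products of corresponding normalized histogram bins. The fusion rule shown, a weighted average with the two coefficients normalized to sum to 1, is an assumption for illustration, since the paper's fusion equation is not recoverable here; the function names and numbers are hypothetical.

```python
import math

def bhattacharyya(p, q):
    """Bhattacharyya coefficient of two histograms, normalized internally."""
    sp, sq = float(sum(p)), float(sum(q))
    if sp == 0.0 or sq == 0.0:
        return 0.0
    return sum(math.sqrt((a / sp) * (b / sq)) for a, b in zip(p, q))

def fuse(cam, surf, rho_cam, rho_surf):
    """Weighted average of two (cx, cy, size) results (assumed fusion rule)."""
    total = rho_cam + rho_surf
    if total == 0.0:
        return tuple(cam)
    w_cam, w_surf = rho_cam / total, rho_surf / total
    return tuple(w_cam * a + w_surf * b for a, b in zip(cam, surf))

# Hypothetical results: (center x, center y, window size) from each tracker
cam_result = (10.0, 10.0, 20.0)
surf_result = (20.0, 30.0, 40.0)
fused = fuse(cam_result, surf_result, rho_cam=0.25, rho_surf=0.75)
same = bhattacharyya([1, 1], [2, 2])      # identical shapes
disjoint = bhattacharyya([1, 0], [0, 1])  # no overlap
```

Under this rule, the tracker whose search area matches the template histogram better pulls the fused window toward its own estimate.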

The improved Camshift algorithm is combined with the UKF filter to counter the influence of occlusion, nonlinear motion, fast motion and other factors on tracking. The block diagram of the algorithm is shown in Figure 3.

First, the initial state ![]() of the UKF filter is initialized with the centroid of the search window. The position of the window is then predicted according to Equation (1), and the predicted result is corrected by Equation (2). Finally, the state obtained from the UKF state correction equation is used as the input of the improved Camshift algorithm at the next time step.

5. Experimental Results and Analysis

![]()

Figure 2. Block diagram of improved Camshift algorithm.

![]()

Figure 3. Block diagram of improved Camshift algorithm combined with UKF.

The algorithm in this paper is implemented on the MATLAB2013a software platform. The experimental video sequences are in AVI format, the video acquisition speed is 20 ![]() and the resolution is ![](). The initial state of the car is ![]() and the initial state of the ball is ![](). The tracking performance of the algorithm under color interference and occlusion is verified by comparison with the traditional Camshift algorithm. The experimental results are shown in Figures 4-7.

Figure 4 shows the tracking results of the traditional Camshift algorithm. The window drifts further as the target gets closer to the black car, and tracking tends to fail when the car turns. Figure 5 shows the tracking results of the improved Camshift algorithm. Because the Camshift result is corrected by linearly weighting it with the SURF result, the tracker considers not only color information, which keeps tracking effective, but also texture features, which strengthen tracking accuracy. The result figures show that the improved Camshift algorithm tracks the moving target accurately under background color interference. The tracking performance in Figure 4 and Figure 5 is compared in Table 1.

![]()

Figure 4. Traditional camshift algorithm.

![]()

Figure 6. Tracking results of improved camshift algorithm.

Figure 6 and Figure 7 show the tracking results of the improved Camshift algorithm and of the algorithm in this paper when the moving ball is occluded. In Figure 6, the tracking window fails to catch up with the ball promptly after the ball rolls past the box. In Figure 7, when the ball is occluded, the algorithm in this paper keeps tracking through the occlusion, and the tracking window still follows the ball accurately and promptly. Because the UKF is introduced to predict the target, the time needed to locate the target is reduced and the accuracy is increased. The tracking performance in Figure 6 and Figure 7 is compared in Table 2.

The experimental results show that, under color interference from a similar background and under occlusion, the improved Camshift combined with the UKF tracks noticeably better than the traditional Camshift algorithm and achieves accurate, real-time tracking of the moving target. The algorithm in this paper also tracks better than the improved Camshift algorithm alone, and it can track the moving target accurately and in real time when the target is occluded.

6. Conclusion

![]()

Figure 7. Tracking results of improved camshift algorithm combined with UKF.

![]()

Table 1. Performance comparison of traditional Camshift and improved Camshift.

![]()

Table 2. Performance comparison of improved Camshift algorithm and algorithm in this paper.

The algorithm in this paper first tracks the moving target with the Camshift algorithm, then linearly weights the Camshift result with the SURF tracking result to reduce the influence of similar background interference, and finally combines the improved Camshift algorithm with the UKF to solve the occlusion problem, because the UKF can predict the target even when it is occluded or moving nonlinearly. Since the UKF gives accurate tracking results even when the target moves in an uncertain direction with strong randomness, it may be used for multiple-target tracking in the future. SURF, however, cannot track a target accurately when its texture features are uniform, so future work on multiple-target tracking should pay attention to the choice of tracking features.

Acknowledgements

The author would like to thank the student, Lv Haidong, who has helped in implementing the proposed algorithm and making this work possible.
