Journal of Mathematical Finance

Vol. 05, No. 05 (2015), Article ID: 61616, 25 pages

10.4236/jmf.2015.55040

Efficient Density Estimation and Value at Risk Using Fejér-Type Kernel Functions

Olga Kosta¹, Natalia Stepanova²

¹Decision Economics Group, HDR, Inc., Ottawa, Canada

²School of Mathematics and Statistics, Carleton University, Ottawa, Canada

Copyright © 2015 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

Received 29 September 2015; accepted 27 November 2015; published 30 November 2015

ABSTRACT

This paper presents a nonparametric method for computing the Value at Risk (VaR) based on efficient density estimators with Fejér-type kernel functions and empirical bandwidths obtained from Fourier analysis techniques. The kernel-type estimator with a Fejér-type kernel was recently found to dominate all other known density estimators under the $L_p$-risk, $1 \le p < \infty$. This theoretical finding is supported via simulations by comparing the quality of the density estimator in question with other fixed kernel estimators using the common $L_2$-risk. Two data-driven bandwidth selection methods, cross-validation and the one based on the Fourier analysis of a kernel density estimator, are used and compared to the theoretical bandwidth. The proposed nonparametric method for computing the VaR is applied to two fictitious portfolios. The performance of the new VaR computation method is compared to the commonly used Gaussian and historical simulation methods using a standard back-test procedure. The obtained results show that the proposed VaR model provides more reliable estimates than the standard VaR models.

Keywords:

Value at Risk, Kernel-Type Density Estimator, Fejér-Type Kernel, Asymptotic Minimaxity, Mean Integrated Squared Error, Fourier Analysis

1. Introduction

Financial institutions monitor their portfolios of assets using the Value at Risk (VaR) to mitigate their market risk exposure. The VaR was popularized in the early nineties by the U.S. investment bank J.P. Morgan in response to the infamous financial disasters of the time, and it has since been adopted by the financial sector worldwide through the Basel Committee on Banking Supervision. By definition, the VaR is a risk measure of the worst expected loss of a portfolio over a defined holding period at a given probability. The time horizon and the loss probability are specified by financial managers depending on the purpose at hand. Typically, the VaR is computed over short time horizons of one hour, two hours, one day, or a few days, while the loss probability can range from 0.001 to 0.1 depending on the risk aversion of the investors. Financial institutions then use the VaR to determine the capital and cash reserves to set aside as coverage against potential losses in the event of severe or prolonged adverse market movements.

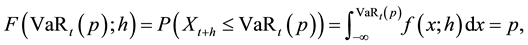

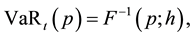

Formally, the Value at Risk of a portfolio, $\mathrm{VaR}_p$, is the $p$-th quantile of the distribution of portfolio returns over a given time horizon $h$ that satisfies the following expression:

$$p = P\left(R_t \le \mathrm{VaR}_p\right) = \int_{-\infty}^{\mathrm{VaR}_p} f(x)\,dx,$$

where $R_t$ is the portfolio return between time $t$ and $t+h$, and $f$ is the probability density function (pdf) of returns. Equivalently,

$$\mathrm{VaR}_p = F^{-1}(p),$$

where $F^{-1}(p) = \inf\{x : F(x) \ge p\}$ is the inverse of the distribution function $F$, which is continuous from the right. The time horizon $h$ and loss probability $p$ are specified parameters. In our analysis, we use a time horizon of one day and probability levels ranging from 0.005 to 0.05. For a more in-depth discussion of the origins of the VaR and its many uses, see [1].

In practice, there exists a variety of computational methods for the VaR. The two most commonly used approaches are the parametric normal and the nonparametric historical simulation summarized below. The following models rely on the assumption of independent and identically distributed (iid) daily portfolio returns.

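1. Normal method. For normally distributed returns, with $\mu$ as the expected return on a portfolio and $\sigma^2$ as the variance of portfolio returns, the VaR is the $p$-th quantile of the normal distribution function given by

$$\mathrm{VaR}_p = \mu + \sigma z_p,$$

where $z_p = \Phi^{-1}(p)$ is the $p$-th quantile of the standard normal distribution function $\Phi$. Given the observed returns $X_1, \dots, X_n$, a natural VaR estimator is

$$\widehat{\mathrm{VaR}}_p = \bar{X}_n + s_n z_p, \quad (1)$$

where $\bar{X}_n$ and $s_n$ are the sample mean and the sample standard deviation of the returns.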

The normal method for estimating the VaR is widely used among financial institutions due to its familiar properties. It is not realistic, however, to assume that portfolio returns are normally distributed, since high-frequency financial data have heavier tails than the normal distribution can explain. As a result, this method generally underestimates the magnitude of the true VaR.

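2. Historical simulation. Let $X_{(1)} \le X_{(2)} \le \dots \le X_{(n)}$ be the order statistics of the $n$ most recent daily portfolio returns. The historical simulation VaR estimator is the empirical $p$-th quantile

$$\widehat{\mathrm{VaR}}_p = X_{(\lceil np \rceil)}, \quad (2)$$

where $\lceil a \rceil$ denotes the smallest integer greater than or equal to $a$.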

The main strengths of the historical simulation method are its simplicity and the fact that it does not require any distributional assumptions on the portfolio returns, as the VaR is determined by the actual price level movements. One has to be careful when selecting the data so as neither to exclude relevant data nor to include irrelevant data. For instance, large samples of historical financial data can be disadvantageous. The portfolio composition is based on current circumstances; therefore, it may not be meaningful to evaluate the portfolio using data from the distant past, since the distribution of past returns is not always a good approximation of expected future returns. Also, if new market risks are added, there may not be enough historical data to reflect them, so the VaR may be underestimated. Another drawback is that the discrete approximation of the true distribution at the extreme tails can cause biased results.
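The two standard estimators (1) and (2) can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code: the function names are hypothetical, the divisor $n-1$ used by `statistics.stdev` is an assumption (the text does not fix the variance estimator), and the order-statistic convention $X_{(\lceil np \rceil)}$ is the one written in (2).

```python
import math
from statistics import NormalDist, fmean, stdev


def normal_var(returns, p):
    """Normal-method VaR (1): sample mean plus sample std times z_p = Phi^{-1}(p).

    Assumption: statistics.stdev uses the n - 1 divisor.
    """
    z_p = NormalDist().inv_cdf(p)  # negative for p < 0.5, so the VaR sits in the left tail
    return fmean(returns) + stdev(returns) * z_p


def historical_var(returns, p):
    """Historical-simulation VaR (2): the empirical p-th quantile X_(ceil(np))."""
    ordered = sorted(returns)
    k = max(math.ceil(len(ordered) * p), 1)  # 1-based index of the order statistic
    return ordered[k - 1]
```

For a list of daily log returns `r`, `normal_var(r, 0.01)` and `historical_var(r, 0.01)` give the two competing 99% VaR forecasts; both are returned on the return scale, so a loss appears as a negative number.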

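A more general and sophisticated nonparametric method for estimating the pdf of daily portfolio returns is kernel density estimation. Let $X_1, \dots, X_n$ be the observed daily portfolio returns with unknown pdf $f$; a kernel density estimator of $f$ has the form $f_n(x) = (nh)^{-1}\sum_{i=1}^{n} K\left((x - X_i)/h\right)$, where $K$ is a kernel function and $h > 0$ is a bandwidth (see Section 3).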

In this paper, we introduce a nonparametric approach for computing the VaR based on quantile estimation with the Fejér-type kernel and a nearly optimal bandwidth obtained from Fourier analysis techniques. To do so, we first conduct a simulation study to support the theoretical finding that the kernel-type density estimator in hand has the best performance with respect to the $L_p$-risk.

The paper is organized as follows. Section 2 provides some background on assessing the goodness of a nonparametric estimator. Section 3 gives a brief overview of kernel density estimation and demonstrates how to obtain the empirically selected bandwidths. The density estimator with the Fejér-type kernel is presented in Section 4 along with its properties. Section 5 presents a simulation study comparing the kernel-type density estimator in question with other fixed kernel estimators in the literature. The proposed VaR computation method is introduced in Section 6. In Section 7, we use the new VaR model to estimate the VaR for two fictitious portfolios and compare the results to those of the commonly used VaR models by means of a back-test. Section 8 concludes the paper with a discussion and analysis of the results.

The following notation is used throughout the paper. We use the symbol

surely is indicated by

that there exist constants

2. Common Approaches to Measuring the Quality of Density Estimators

Let

arbitrary estimator of

The performance of a density estimator can be evaluated through a risk function that measures the expected loss of choosing

When

speak of the

The

The quality of a density estimator is often measured by a minimax criterion. The idea is to protect statisticians from the worst that can happen. The minimax risk is given by

where the infimum is taken over all estimators

An estimator

That is, for large sample sizes, the maximum risk of

In nonparametric regression analysis, work on asymptotically minimax estimators of smooth regression curves with respect to the

A more precise approach for finding efficient estimators is local asymptotic minimaxity (for a more detailed description of the origins of this method, see [7]). An estimator

where

In kernel density estimation, the LAM ideology differs significantly from both the asymptotically minimax and rate optimality approaches. When constructing LAM estimators of f, one has to pay close attention to the choice of both the bandwidth h and the kernel K. Indeed, the usual bias-variance tradeoff approach, in which the variance and bias terms of an optimal estimator are balanced by a good choice of h, is no longer appropriate. In several papers, it is shown that with a careful choice of kernel the bias of

In a recent paper of Stepanova [2] , a kernel-type estimator for densities belonging to a class of infinitely smooth functions is shown to have the

3. Kernel Density Estimation

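Let $X_1, \dots, X_n$ be independent and identically distributed random variables with cumulative distribution function (cdf) $F$ and density $f$, both unknown. A kernel density estimator of $f$ is

$$f_n(x) = \frac{1}{nh}\sum_{i=1}^{n} K\left(\frac{x - X_i}{h}\right), \quad x \in \mathbb{R},$$

where the parameter $h = h_n > 0$, called the bandwidth, satisfies $h \to 0$ and $nh \to \infty$ as $n \to \infty$, and $K$ is a kernel function.

Under certain nonrestrictive conditions on $K$, the above assumptions on $h$ imply the consistency of $f_n$.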
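A direct transcription of the kernel density estimator defined above is shown next. It is a sketch for illustration only: the Gaussian kernel is chosen merely because it is familiar, and the function names are hypothetical.

```python
import math


def gaussian_kernel(u):
    """Standard normal density, used here as an illustrative kernel K."""
    return math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)


def kde(x, data, h, kernel=gaussian_kernel):
    """Kernel density estimate f_n(x) = (1 / (n h)) * sum_i K((x - X_i) / h)."""
    if h <= 0:
        raise ValueError("bandwidth h must be positive")
    return sum(kernel((x - xi) / h) for xi in data) / (len(data) * h)
```

Any other kernel can be passed through the `kernel` argument; with a kernel that integrates to one, the estimate integrates to one as well.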

A more general class of density estimators includes the kernel-type estimators whose kernel functions,

Some classical examples of kernels together with their Fourier transforms (see formula (6)) are listed in Table 1 and presented graphically in Figure 1. These kernel functions are the most commonly applied in practice, most likely because they are nonnegative, so that the corresponding estimators are themselves density functions. The kernels listed in Table 2 and presented in Figure 2, along with their Fourier transforms, are well known in statistical theory and are generally more asymptotically efficient than the standard kernels in Table 1, since they were shown to achieve better rates of convergence in the works of [2] [9]. These kernel functions alternate between positive and negative values, except for the Fejér kernel. For these kernels, the positive part estimator

$$f_n^+(x) = \max\{f_n(x), 0\}, \quad x \in \mathbb{R},$$

can be used to maintain the positivity of a density estimator. Throughout our analysis, we shall be using the positive part of all the kernel density estimators under study.
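To see why the positive part is needed, consider the sinc kernel $K(u) = \sin(u)/(\pi u)$, one of the efficient kernels: it takes negative values, so an estimate built from it can dip below zero. The hypothetical helpers below compute such an estimate and its positive part; this is an illustrative sketch, not the code used in the study.

```python
import math


def sinc_kernel(u):
    """Sinc kernel K(u) = sin(u) / (pi u), with K(0) = 1 / pi; it takes negative values."""
    if u == 0.0:
        return 1.0 / math.pi
    return math.sin(u) / (math.pi * u)


def kde(x, data, h, kernel):
    """Kernel-type estimate f_n(x) = (1 / (n h)) * sum_i K((x - X_i) / h)."""
    return sum(kernel((x - xi) / h) for xi in data) / (len(data) * h)


def positive_part_kde(x, data, h, kernel):
    """Positive part estimator f_n^+(x) = max(f_n(x), 0)."""
    return max(kde(x, data, h, kernel), 0.0)
```

With a single observation at 0 and $h = 1$, the sinc estimate at $x = 4$ equals $\sin(4)/(4\pi) < 0$, while its positive part is 0.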

The most popular approach for judging the quality of an estimator in the literature and in practice is the Mean Integrated Squared Error (MISE). Observe that the MISE is the $L_2$-risk of $f_n$:

$$\mathrm{MISE}(f_n) = \mathbb{E}_f \int_{-\infty}^{\infty} \big(f_n(x) - f(x)\big)^2\,dx. \quad (5)$$

Figure 1. Some standard kernel functions and their Fourier transforms.

Figure 2. Some efficient kernel functions and their Fourier transforms.

Table 1. Some standard kernel functions and their Fourier transforms.

Table 2. Some efficient kernel functions and their Fourier transforms.

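By the Fubini theorem, the right-hand side (RHS) of (5) can be further expanded to represent the variance-bias decomposition of the density estimator:

$$\mathrm{MISE}(f_n) = \int_{-\infty}^{\infty} \mathrm{Var}_f\big(f_n(x)\big)\,dx + \int_{-\infty}^{\infty} \big(\mathbb{E}_f f_n(x) - f(x)\big)^2\,dx.$$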

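Notice also that the MISE of the positive part estimator never exceeds that of $f_n$: since $f \ge 0$, we have $|\max\{f_n(x), 0\} - f(x)| \le |f_n(x) - f(x)|$ for all $x \in \mathbb{R}$.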

3.1. Fourier Analysis of Kernel Density Estimators

In nonparametric estimation, the use of Fourier analysis often makes it easier to study the statistical properties of estimators. It can be noted from Figure 1 and Figure 2 that the Fourier transforms of the efficient kernels have a simpler form than those of the standard kernels. This simplifies the analysis of density estimators under certain settings when using efficient kernel functions. We begin by providing a few basic definitions and properties related to the Fourier transform (see, for example, Chapter 9 of [10]).

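The Fourier transform $\hat g$ of a function $g \in L_1(\mathbb{R})$ is defined by

$$\hat g(t) = \int_{-\infty}^{\infty} e^{itx} g(x)\,dx, \quad t \in \mathbb{R}, \quad (6)$$

where $i = \sqrt{-1}$.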

Using also the notation

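The Fourier transform of a density $f$ is known to be the characteristic function defined by

$$\varphi(t) = \mathbb{E}_f\, e^{itX_1} = \int_{-\infty}^{\infty} e^{itx} f(x)\,dx, \quad t \in \mathbb{R}.$$

The corresponding empirical characteristic function is

$$\varphi_n(t) = \frac{1}{n}\sum_{j=1}^{n} e^{itX_j}, \quad t \in \mathbb{R}.$$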

for all

In

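Continuing from (10) and relations (9), the MISE of the kernel estimator $f_n$ can be written as

$$\mathrm{MISE}(f_n) = \frac{1}{2\pi}\left[\int_{-\infty}^{\infty} \big|1 - \hat K(ht)\big|^2 |\varphi(t)|^2\,dt + \frac{1}{n}\int_{-\infty}^{\infty} \big|\hat K(ht)\big|^2\,dt\right] - \frac{1}{2\pi n}\int_{-\infty}^{\infty} |\varphi(t)|^2 \big|\hat K(ht)\big|^2\,dt, \quad (11)$$

where $\hat K$ is the Fourier transform of $K$ and $\varphi$ is the characteristic function of $X_1$.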

Formula (11) provides a more suitable way of expressing the MISE than some classical approaches that derive upper bounds on the integrated squared risk (see [12], Section 2.1.1). Unlike in the classical approaches, the assumptions required to obtain formula (11) are not very restrictive, which allows for the derivation of better kernels. Indeed, most of the general properties of a kernel in (4), such as $K$ integrating to one or being an integrable function, need not hold. Also, the expression for the MISE in (11) implies that if

$$\mathrm{Leb}\big(\{t \in \mathbb{R} : \hat K(t) \notin [0,1]\}\big) > 0,$$

where $\mathrm{Leb}(A)$ denotes the Lebesgue measure of a set $A$, then $K$ is inadmissible. As seen from Figure 1, the Epanechnikov and uniform kernel functions are inadmissible since the set $\{t : \hat K(t) \notin [0,1]\}$ has positive Lebesgue measure. This is another argument as to why the efficient kernels listed in Table 2 as well as the family of Fejér-type kernels in (20) are preferred.
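Formula (11) can be evaluated numerically once $\hat K$ and $|\varphi|^2$ are known. The sketch below does this for a symmetric kernel with real Fourier transform and checks it on one pair where the answer is known exactly: the Gaussian kernel ($\hat K(t) = e^{-t^2/2}$) and the $N(0,1)$ density ($|\varphi(t)|^2 = e^{-t^2}$), for which evaluating the Gaussian integrals in (11) gives a closed form. The function names and the truncation point `t_max` are our own illustrative choices.

```python
import math


def simpson(g, a, b, m=20000):
    """Composite Simpson rule for the integral of g over [a, b] (m made even)."""
    if m % 2:
        m += 1
    step = (b - a) / m
    total = g(a) + g(b)
    for k in range(1, m):
        total += (4.0 if k % 2 else 2.0) * g(a + k * step)
    return total * step / 3.0


def mise_fourier(k_hat, phi_abs2, n, h, t_max=60.0):
    """Formula (11) for a real-valued k_hat; phi_abs2(t) = |phi(t)|^2.

    Integrals are truncated to [-t_max, t_max], which must be wide enough
    for k_hat(h t) and phi_abs2(t) to be negligible outside it.
    """
    bias = simpson(lambda t: (1.0 - k_hat(h * t)) ** 2 * phi_abs2(t), -t_max, t_max)
    var_main = simpson(lambda t: k_hat(h * t) ** 2, -t_max, t_max) / n
    var_corr = simpson(lambda t: k_hat(h * t) ** 2 * phi_abs2(t), -t_max, t_max) / n
    return (bias + var_main - var_corr) / (2.0 * math.pi)


def gauss_hat(t):
    """Fourier transform of the Gaussian kernel."""
    return math.exp(-0.5 * t * t)


def normal_abs2(t):
    """|phi(t)|^2 for the N(0, 1) density."""
    return math.exp(-t * t)


def mise_gaussian_closed_form(n, h):
    """Exact MISE for the Gaussian kernel and N(0, 1) data, obtained from (11)."""
    return (1.0 / (n * h) + (1.0 - 1.0 / n) / math.sqrt(1.0 + h * h)
            - 2.0 ** 1.5 / math.sqrt(2.0 + h * h) + 1.0) / (2.0 * math.sqrt(math.pi))
```

The same `mise_fourier` routine can be fed other kernels from Tables 1 and 2, provided their Fourier transforms are real and the truncation interval is adjusted to the bandwidth.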

3.2. Bandwidth Selection Based on Unbiased Risk Estimation and Fourier Analysis Techniques

Selecting an appropriate bandwidth

In theory, an optimal bandwidth can be obtained by minimizing the MISE with respect to h:

In practice, the RHS of (13) cannot be computed as the MISE depends on the unknown density f. Instead, an approximately unbiased estimator of the

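As we are only concerned with minimizing the MISE with respect to $h$, the term $\int f^2(x)\,dx$, which does not depend on $h$, can be dropped, and $\mathrm{MISE}(f_n) - \int f^2(x)\,dx$ is estimated without bias by

$$\mathrm{CV}(h) = \int_{-\infty}^{\infty} f_n^2(x)\,dx - \frac{2}{n}\sum_{i=1}^{n} f_{n,-i}(X_i), \quad (14)$$

where

$$f_{n,-i}(x) = \frac{1}{(n-1)h}\sum_{j \ne i} K\left(\frac{x - X_j}{h}\right)$$

is the leave-one-out estimator of $f$. Moreover,

$$\int_{-\infty}^{\infty} f_n^2(x)\,dx = \frac{1}{n^2 h}\sum_{i=1}^{n}\sum_{j=1}^{n} (K * K)\left(\frac{X_i - X_j}{h}\right),$$

where $*$ denotes the convolution. The cross-validation bandwidth is then

$$h_{\mathrm{CV}} = \arg\min_{h > 0} \mathrm{CV}(h). \quad (15)$$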

Cross-validation is perhaps the most common approach based on unbiased risk estimation for selecting $h$; however, many authors have noted that it has a slow rate of convergence towards the optimal bandwidth in (13).
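Least-squares cross-validation can be sketched for the Gaussian kernel, for which $K * K$ is simply the $N(0,2)$ density. The sketch below is an illustration under that kernel choice, with hypothetical names; it minimizes the criterion over a user-supplied grid rather than by continuous optimization, which is a simplification.

```python
import math


def gauss(u):
    """Gaussian kernel K."""
    return math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)


def gauss_self_conv(u):
    """(K * K)(u) for the Gaussian kernel, i.e. the N(0, 2) density."""
    return math.exp(-0.25 * u * u) / (2.0 * math.sqrt(math.pi))


def integral_fn_squared(data, h):
    """int f_n^2 = (1 / (n^2 h)) * sum_i sum_j (K * K)((X_i - X_j) / h)."""
    n = len(data)
    return sum(gauss_self_conv((xi - xj) / h) for xi in data for xj in data) / (n * n * h)


def cv_criterion(data, h):
    """Least-squares cross-validation criterion CV(h)."""
    n = len(data)
    loo_sum = 0.0
    for i, xi in enumerate(data):
        # leave-one-out estimate f_{n,-i}(X_i)
        loo_sum += sum(gauss((xi - xj) / h) for j, xj in enumerate(data) if j != i) / ((n - 1) * h)
    return integral_fn_squared(data, h) - 2.0 * loo_sum / n


def cv_bandwidth(data, grid):
    """Grid point minimising CV(h): an approximation to h_CV."""
    return min(grid, key=lambda h: cv_criterion(data, h))
```

The double sums make each evaluation $O(n^2)$, which is adequate for the sample sizes considered in Section 5.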

The MISE of interest is given in (11) and denoted by

where

Hence, minimizing

The corresponding kernel density estimator with the bandwidth

Under appropriate conditions,
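One way to turn (11) into a data-driven criterion, which we assume here for illustration (the exact form of (16) may differ), uses the identity $\mathbb{E}|\varphi_n(t)|^2 = 1/n + (1 - 1/n)|\varphi(t)|^2$: the quantity $\psi_n(t) = (n|\varphi_n(t)|^2 - 1)/(n-1)$ is an unbiased estimator of $|\varphi(t)|^2$. Substituting it into (11) and dropping the $h$-free term $\int|\varphi|^2$ gives, for a real $\hat K$,
$J_n(h) = \int \big[(1 - 1/n)\hat K^2(ht) - 2\hat K(ht)\big]\psi_n(t)\,dt + \frac{1}{n}\int \hat K^2(ht)\,dt$, whose expectation is $2\pi\,\mathrm{MISE}(h) - \int|\varphi|^2$. The sketch uses the sinc kernel ($\hat K = 1$ on $[-1,1]$) rather than the Fejér-type kernel, because the integral then has a closed form; all names are hypothetical.

```python
import math


def abs_ecf_squared(t, data):
    """|phi_n(t)|^2, the squared modulus of the empirical characteristic function."""
    n = len(data)
    c = sum(math.cos(t * x) for x in data)
    s = sum(math.sin(t * x) for x in data)
    return (c * c + s * s) / (n * n)


def fourier_criterion_sinc(data, h):
    """Assumed Fourier criterion J_n(h) for the sinc kernel.

    J_n(h) = -(1 + 1/n) * int_{|t| <= 1/h} psi_n(t) dt + 2 / (n h). The integral of
    psi_n equals 2 S / (n (n - 1)), S = sum_{j != k} sin(d_jk / h) / d_jk,
    d_jk = X_j - X_k (a tie d_jk = 0 contributes its limit 1 / h).
    """
    n = len(data)
    big_t = 1.0 / h
    s = 0.0
    for j in range(n):
        for k in range(n):
            if j != k:
                d = data[j] - data[k]
                s += big_t if d == 0.0 else math.sin(big_t * d) / d
    int_psi = 2.0 * s / (n * (n - 1))
    return -(1.0 + 1.0 / n) * int_psi + 2.0 / (n * h)


def fourier_bandwidth_sinc(data, grid):
    """Grid minimiser of J_n(h), a hypothetical stand-in for the Fourier bandwidth."""
    return min(grid, key=lambda h: fourier_criterion_sinc(data, h))
```

The closed form avoids numerical integration of the oscillating function $|\varphi_n|^2$; for kernels whose Fourier transform is not an indicator, the integral would have to be computed numerically.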

4. Density Estimators with Fejér-Type Kernel Functions

Suppose that

analytic on the interior of

We have for any

The functional class

The kernel-type estimator of

where

with

It is easy to see that the Fejér-type kernel as in (20) satisfies the properties in (4). The parameters in (21) are chosen to have

ensuring the consistency of

The Fourier transform of the Fejér-type kernel is given by (see [18] , p. 202)

The Fejér-type kernel

To apply the data-driven bandwidths given in (15) and (17), the unbiased estimators as in (14) and (16), denoted by

Table 3. Cases of the Fejér-type kernel.

Figure 3. The Fejér-type kernel function and its Fourier transform.

where

for

In the following section, we demonstrate numerically that the positive part of the kernel-type estimator in (19) with the Fejér-type kernel in (20) works well with respect to the $L_2$-risk.
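For readers who want to experiment with the Fejér-type family, the sketch below assumes that the kernel in (20) is, up to the $n$-dependent scaling fixed by the parameters in (21), of the form $K_\theta(u) = (\cos\theta u - \cos u)/(\pi(1-\theta)u^2)$, $0 \le \theta < 1$. Its Fourier transform is the trapezoid equal to 1 on $|t| \le \theta$, $(1-|t|)/(1-\theta)$ on $\theta < |t| \le 1$, and 0 otherwise. Setting $\theta = 0$ gives the Fejér kernel, $\theta \to 1$ gives the sinc kernel, and $\theta = 1/2$ gives the de la Vallée Poussin kernel after a rescaling of the argument. Names are hypothetical.

```python
import math


def fejer_type_kernel(u, theta):
    """K(u) = (cos(theta u) - cos u) / (pi (1 - theta) u^2), 0 <= theta < 1.

    The numerator is rewritten as 2 sin((1 + theta) u / 2) sin((1 - theta) u / 2)
    to avoid cancellation for small u; K(0) = (1 + theta) / (2 pi).
    """
    if not 0.0 <= theta < 1.0:
        raise ValueError("theta must lie in [0, 1)")
    if u == 0.0:
        return (1.0 + theta) / (2.0 * math.pi)
    num = 2.0 * math.sin(0.5 * (1.0 + theta) * u) * math.sin(0.5 * (1.0 - theta) * u)
    return num / (math.pi * (1.0 - theta) * u * u)


def fejer_type_fourier(t, theta):
    """Trapezoidal Fourier transform: 1 on |t| <= theta, linear down to 0 at |t| = 1."""
    a = abs(t)
    if a <= theta:
        return 1.0
    if a >= 1.0:
        return 0.0
    return (1.0 - a) / (1.0 - theta)
```

Because the Fourier transform takes values in $[0, 1]$, the admissibility condition discussed after (11) is satisfied for every $\theta$.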

5. Simulation Study: Comparison of Kernel Density Estimators

A simulation study is carried out to assess the quality of the positive part of the density estimator in (19) with the Fejér-type kernel in (20) using the MISE criterion. The finite-sample performance of (19) is compared to other density estimators that use the sinc, de la Vallée Poussin, and Gaussian kernels. These kernel functions were chosen since the sinc and de la Vallée Poussin are efficient kernels that are specific cases of the Fejér-type, while the Gaussian kernel is the most commonly used in practice. Three bandwidth selectors are applied to the kernel density estimators in hand. The bandwidth selection methods include the empirical approaches from cross-validation and Fourier analysis and the theoretical smoothing parameter

We generated 200 random samples, of a wide range of sample sizes, from the following four distributions: standard normal

Let us first assess the bandwidth selection methods under consideration. Figure 4 and Figure 5 capture the performance of each bandwidth selection method for each kernel density estimate under study by plotting the MISE estimates against samples of size 25 to 100 and 200 to 1000, respectively. The following can be observed from the figures. For a good choice of

Figure 4. MISE estimates for the cross-validation, Fourier, and theoretical bandwidth selectors with Fejér-type, sinc, dlVP, and Gaussian kernels that estimate the standard normal, Student’s

Figure 5. MISE estimates for the cross-validation, Fourier, and theoretical bandwidth selectors with Fejér-type, sinc, dlVP, and Gaussian kernels that estimate the standard normal, Student’s

the chi-square density, which is not in

Now, we assess the quality of the Fejér-type kernel estimator when using empirical bandwidths. Figure 6 and Figure 7 capture the performance of the kernel functions for each density estimate under a specified empirical bandwidth by plotting the MISE estimates against samples of size 25 to 100 and 200 to 1000, respectively. For estimation of the unimodal densities with data-dependent bandwidth methods, the Fejér-type kernel slightly improves on the other fixed efficient kernels and performs much better than the common Gaussian kernel for larger sample sizes. Also, we observe that, when estimating the bimodal density, the Fejér-type kernel performs much better than all of the competing kernels, especially for large sample sizes.

Figure 6. MISE estimates for the Fejér-type, sinc, dlVP, and Gaussian kernels with cross-validation and Fourier bandwidth selectors that estimate a standard normal, Student’s

In summary, for an appropriate choice of

Figure 7. MISE estimates for the Fejér-type, sinc, dlVP, and Gaussian kernels with cross-validation and Fourier bandwidth selectors that estimate a standard normal, Student’s

MISE, with competing kernel estimators, especially when estimating normal mixtures. The simulation results attest that, for a good choice of
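The MISE estimates reported in this section are averages of the integrated squared error over repeated samples. A minimal Monte Carlo sketch of that procedure is given below, assuming a trapezoidal rule on a fixed grid for the integral (the grid used in the study is not stated). The Gaussian kernel and the rule-of-thumb bandwidth $1.06\,n^{-1/5}$ in the usage note are illustrative choices only, picked because the exact MISE is then available for comparison.

```python
import math
import random


def gaussian_kde(data, h):
    """Return x -> f_n(x) for the Gaussian kernel (an illustrative choice)."""
    c = 1.0 / (len(data) * h * math.sqrt(2.0 * math.pi))
    return lambda x: c * sum(math.exp(-0.5 * ((x - xi) / h) ** 2) for xi in data)


def ise(estimate, density, lo=-6.0, hi=6.0, m=240):
    """ISE of the positive part estimate, trapezoidal rule on [lo, hi]."""
    step = (hi - lo) / m
    vals = [(max(estimate(lo + k * step), 0.0) - density(lo + k * step)) ** 2
            for k in range(m + 1)]
    return step * (sum(vals) - 0.5 * (vals[0] + vals[-1]))


def mise_monte_carlo(sampler, density, make_estimator, n, reps=200, seed=0):
    """Average ISE over `reps` independent samples of size n."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(reps):
        data = [sampler(rng) for _ in range(n)]
        total += ise(make_estimator(data), density)
    return total / reps
```

For example, `mise_monte_carlo(lambda r: r.gauss(0.0, 1.0), pdf, lambda d: gaussian_kde(d, h), 50)` estimates the MISE for standard normal samples of size 50, with `pdf` the standard normal density; any other sampler, density, and estimator factory can be plugged in the same way.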

6. VaR Model with Fejér-Type Kernel Functions

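Suppose that $X_1, \dots, X_n$ are iid daily portfolio returns with cdf $F$, and let

$$X_{(1)} \le X_{(2)} \le \dots \le X_{(n)}$$

denote the corresponding order statistics. Recall that VaR models are concerned with evaluating a quantile function

$$Q(p) = F^{-1}(p) = \inf\{x : F(x) \ge p\}, \quad 0 < p < 1,$$

for a general cdf $F$.

Let

$$F_n(x) = \frac{1}{n}\sum_{i=1}^{n} \mathbf{1}\{X_i \le x\}, \quad x \in \mathbb{R},$$

be the empirical distribution function. By Kolmogorov’s strong law of large numbers, the empirical distribution function is a strongly consistent estimator of the true distribution for any $x \in \mathbb{R}$:

$$F_n(x) \to F(x) \quad \text{almost surely as } n \to \infty.$$

Moreover, by the Glivenko-Cantelli theorem,

$$\sup_{x \in \mathbb{R}} |F_n(x) - F(x)| \to 0 \quad \text{almost surely as } n \to \infty.$$

A common definition for the empirical quantile function is

$$Q_n(p) = F_n^{-1}(p) = X_{(i)} \quad \text{for } \frac{i-1}{n} < p \le \frac{i}{n}, \quad i = 1, \dots, n.$$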

Therefore, for a probability level

where
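As a point of reference, one standard form of the kernel quantile estimator of Parzen [22], studied further in [23], smooths the empirical quantile function: $\sum_{i=1}^{n} X_{(i)} \int_{(i-1)/n}^{i/n} K_h(t - p)\,dt$ with $K_h(u) = h^{-1}K(u/h)$. The sketch below is not claimed to reproduce (23): it uses a Gaussian $K$ for brevity (so each weight is a difference of normal cdf values), the smoothing parameter acts on the probability scale, and it optionally renormalizes the weights to sum to one, a common boundary adjustment that matters for small $p$.

```python
from statistics import NormalDist


def kernel_quantile(data, p, h, normalize=True):
    """Parzen-type kernel quantile estimate: sum_i w_i X_(i),
    w_i = int_{(i-1)/n}^{i/n} K_h(t - p) dt, with a Gaussian K (an assumption).
    With normalize=True the weights are rescaled to sum to one.
    """
    ordered = sorted(data)
    n = len(ordered)
    std = NormalDist()
    weights = [std.cdf((i / n - p) / h) - std.cdf(((i - 1) / n - p) / h)
               for i in range(1, n + 1)]
    total = sum(weights) if normalize else 1.0
    return sum(w * x for w, x in zip(weights, ordered)) / total
```

As $h \to 0$ the estimate collapses to the empirical quantile $Q_n(p)$; larger $h$ averages neighbouring order statistics, which is the smoothing that distinguishes kernel quantile estimation from historical simulation.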

7. Application to Value at Risk

We assess the proposed nonparametric VaR computation method given by formula (23) and compare it to the common normal and historical simulation approaches as in (1) and (2). Each VaR computation method is evaluated by means of a statistical back-test procedure based on a likelihood ratio test.

7.1. Evaluation of VaR Computation Methods

To evaluate the adequacy of each VaR computation method, we perform a statistical test that systematically compares the actual returns to the corresponding VaR estimates. The number of observations that exceed the VaR of the portfolio should fall within a nonrejection region determined by a specified confidence level; otherwise, the model is rejected as it is not considered adequate for predicting the VaR of a portfolio. A back-test of this form was first used by Kupiec in 1995 (see [1], Chapter 6).

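Let $X = \sum_{t=1}^{m} I_t$ be the number of VaR violations, where $I_t = 1$ if the actual return on day $t$ falls below the VaR estimate for that day and $I_t = 0$ otherwise. Under the null hypothesis that the model is adequate, $X$ counts the violations out of an evaluation sample of $m$ observations and follows the binomial distribution

$$P(X = x) = \binom{m}{x} p^{x} (1-p)^{m-x},$$

for $x = 0, 1, \dots, m$.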

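A likelihood ratio test is carried out to determine whether or not to reject the null hypothesis $H_0$ that the model is adequate, that is, that the true violation probability equals $p$. The likelihood function for $p$ given the observed value $x$ is, up to a constant,

$$L(p \mid x) = p^{x} (1-p)^{m-x},$$

which is maximized by $\hat p = x/m$. The likelihood ratio statistic is

$$\mathrm{LR} = -2\ln\frac{L(p \mid x)}{L(\hat p \mid x)} = -2\ln\left[(1-p)^{m-x} p^{x}\right] + 2\ln\left[\left(1 - \frac{x}{m}\right)^{m-x}\left(\frac{x}{m}\right)^{x}\right].$$

Under $H_0$ and mild regularity conditions, the asymptotic distribution of the log-likelihood ratio statistic $\mathrm{LR}$ is chi-square with one degree of freedom, so we would reject $H_0$ at the 5% significance level if $\mathrm{LR} > \chi^2_{1,0.95} \approx 3.841$.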

In this study, we evaluate the test statistic
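The likelihood ratio test above (Kupiec's proportion-of-failures test) can be sketched as follows; the helper names are hypothetical. Scanning $x = 0, \dots, m$ for $\mathrm{LR} \le 3.841$ produces nonrejection regions of the kind reported in Table 4; for instance, $p = 0.01$ and $m = 1000$ give $5 \le x \le 16$.

```python
import math
from statistics import NormalDist

# 95% quantile of chi-square(1): the square of the 97.5% standard normal quantile (about 3.841)
CHI2_1_95 = NormalDist().inv_cdf(0.975) ** 2


def _xlogy(x, y):
    """x * ln(y) with the convention 0 * ln(0) = 0."""
    return 0.0 if x == 0 else x * math.log(y)


def kupiec_lr(x, m, p):
    """LR = -2 ln[(1-p)^(m-x) p^x] + 2 ln[(1-x/m)^(m-x) (x/m)^x]."""
    p_hat = x / m
    log_null = _xlogy(m - x, 1.0 - p) + _xlogy(x, p)
    log_alt = _xlogy(m - x, 1.0 - p_hat) + _xlogy(x, p_hat)
    return -2.0 * (log_null - log_alt)


def nonrejection_region(m, p, crit=CHI2_1_95):
    """Smallest and largest violation counts x with LR(x) <= crit."""
    accepted = [x for x in range(m + 1) if kupiec_lr(x, m, p) <= crit]
    return min(accepted), max(accepted)
```

A VaR model with `x` observed violations is rejected when `kupiec_lr(x, m, p) > CHI2_1_95`, which is equivalent to `x` falling outside `nonrejection_region(m, p)`.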

7.2. Comparative Study of VaR Computation Methods

We apply the normal, historical simulation, and newly proposed VaR computation methods defined in (1), (2), and (23), respectively, to estimate 1000 daily VaR forecasts from two portfolios. Probability levels of 0.05, 0.025, 0.01, and 0.005 are considered. Each VaR model is estimated using samples of 252, 504, and 1000 trading days. A back-test is then performed to evaluate the adequacy of each VaR model under consideration over an evaluation sample of 1000 trading days.
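The rolling-window procedure described above can be sketched as follows: for each of the last $m$ days, a VaR forecast is computed from the preceding `window` returns (252, 504, or 1000 in the study), and a violation is recorded when the realized return falls below the forecast. Historical simulation is plugged in as the default model purely for illustration; any function with the same `(window_returns, p)` signature could be passed instead. All names are hypothetical.

```python
import math


def historical_var(window, p):
    """Empirical p-th quantile X_(ceil(np)) of the window returns."""
    ordered = sorted(window)
    return ordered[max(math.ceil(len(ordered) * p), 1) - 1]


def rolling_backtest(returns, window, m, p, var_model=historical_var):
    """Count VaR violations over the last m days, each forecast using the
    `window` returns immediately preceding that day."""
    if len(returns) < window + m:
        raise ValueError("need at least window + m returns")
    violations = 0
    for t in range(len(returns) - m, len(returns)):
        var_t = var_model(returns[t - window:t], p)
        if returns[t] < var_t:
            violations += 1
    return violations
```

The resulting count is the observed $x$ that enters the likelihood ratio test of Section 7.1 with $m = 1000$.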

We have two fictitious investment portfolios, each consisting of a single well-known stock index: the Dow Jones Industrial Average (DJIA) and the S&P/TSX Composite Index. These indices were chosen for our fictitious portfolios because they have abundant publicly available historical data. Here, they are treated as representative stocks since, in reality, one cannot invest directly in an index, which is a mathematical construct. The raw values of the daily DJIA and S&P/TSX Composite indices are displayed in Figure 8 from June 28, 2007 to March 11, 2015. The effect of the 2008 financial crisis is visible in both indices; the DJIA shows a large decrease in points, reaching a low of approximately 6500 in the early months of 2009. This is followed by an increase in the level of both indices in recent years, particularly for the DJIA.

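The index values are used to evaluate the daily logarithmic returns as follows. If $P_t$ denotes the value of an index at the close of trading day $t$, the daily log return is $r_t = \ln(P_t / P_{t-1})$.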

Table 4. 95% nonrejection confidence regions for the likelihood ratio test under different VaR confidence levels and evaluation sample sizes.

Figure 8. Daily DJIA and S&P/TSX Composite indices from June 28, 2007 through March 11, 2015.

The autocorrelations of the daily log returns are plotted in Figure 9 for each index. We observe no significant autocorrelations, as almost all of them fall within the 95% confidence limits. A few lags slightly outside of the limits do not necessarily indicate non-randomness, as this can be expected from random fluctuations. In addition, there is no apparent pattern. Therefore, the returns of both portfolios may be considered random, and all the VaR computation methods in hand, which assume iid returns, may be applied.
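The computations behind Figure 9 can be sketched as follows: log returns $r_t = \ln(P_t/P_{t-1})$, the usual sample autocorrelations, and the approximate 95% limits $\pm 1.96/\sqrt{n}$ for white noise. That these limits were the ones drawn in Figure 9 is our assumption, and the function names are hypothetical.

```python
import math


def log_returns(prices):
    """Daily log returns r_t = ln(P_t / P_{t-1})."""
    return [math.log(b / a) for a, b in zip(prices, prices[1:])]


def acf(x, max_lag):
    """Sample autocorrelations r_1, ..., r_max_lag (standard biased estimator)."""
    n = len(x)
    mean = sum(x) / n
    dev = [v - mean for v in x]
    c0 = sum(d * d for d in dev)
    return [sum(dev[t] * dev[t + k] for t in range(n - k)) / c0
            for k in range(1, max_lag + 1)]


def lags_outside_band(x, max_lag, z=1.96):
    """Lags whose autocorrelation falls outside the approximate 95% band +-z/sqrt(n)."""
    bound = z / math.sqrt(len(x))
    return [k for k, r in enumerate(acf(x, max_lag), start=1) if abs(r) > bound]
```

Applied to the DJIA or S&P/TSX index values, `lags_outside_band(log_returns(prices), 30)` lists the lags that would be flagged; with 95% limits, roughly one lag in twenty is expected to fall outside by chance alone.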

The daily log returns and VaR estimates for every model under consideration are displayed in Figure 10 and Figure 11 for each stock index over a time period of one thousand trading days. Each row of plots corresponds to the VaR confidence level, while each column provides the results of the estimation sample used. Table 5 displays the back-test results of all the VaR models in question for each stock market index. The outcome of each test, that is whether or not to reject the model given the observed number of VaR violations, is reported for every VaR model. These outcomes are determined by the 95% nonrejection regions indicated in Table 4 when the evaluation sample size is 1000 days.

Table 5. Back-test results of all the VaR models under consideration applied to each stock index over an evaluation sample of 1000 days and 95% confidence regions.

Figure 9. Autocorrelation plots of the daily logarithmic returns for each stock market index.

The following can be observed from the aforementioned figures and tables. Overall, the empirical results of both stock indices are fairly similar. The back-test results in Table 5 show that the normal model has the poorest performance, as it is not considered adequate in most cases. The observed number of VaR violations is quite high for smaller probability levels, meaning that the mass in the tails of the distribution is underestimated. The only case in which the normal model is not consistently rejected is the probability level 0.05 with estimation samples of 252 and 504 observations. The historical simulation method generally performs well and shares similar results with the newly proposed VaR estimation method. It is, however, rejected for probability levels 0.005 and 0.025 when the number of observations is 252 in the S&P/TSX Composite Index portfolio. Finally, the VaR model of interest based on Fejér-type kernel quantile estimation is the most reliable, as it has the fewest rejections among all the tests considered.

Overall, none of the models performs well when the estimation sample is large, except in some cases the normal method at small probability levels. This is expected, as financial data from four years ago may no longer be relevant to the current market situation. Moreover, the performance of all the VaR computation methods is similar at the 95% confidence level.

For an illustration of the density of portfolio returns on a specific day see Figure 12 and Figure 13. The Fejér-type kernel density estimates with Fourier bandwidths are represented by the green curves while the normal densities have the red curves. The images are consistent with the assertion that the stock returns are heavy tailed. It can be clearly seen that the density estimates with Fejér-type kernels can account for heavy tails of the return distributions better than the normal densities.

In summary, the proposed method for computing the VaR based on density estimation with Fejér-type kernel functions and Fourier analysis bandwidth selectors provides more reliable results than the commonly used VaR computation methods. Density estimates with Fejér-type kernel functions can account for the heavy tails of the return distributions, unlike the normal density. The normal method for computing the VaR tends to underestimate the risk, especially at higher confidence levels. For the nonparametric models, one has to be careful in choosing a relevant estimation period; otherwise, they tend to overestimate the risk for large estimation samples.

8. Conclusion

The paper introduces a nonparametric method of VaR computation on portfolio returns. The approach relies on the kernel quantile estimator introduced by Parzen [22] . The kernel functions employed are Fejér-type kernel functions. We use these functions because they are known to produce asymptotically efficient kernel density estimators with respect to the

Figure 10. Daily log returns and VaR estimates of the DJIA at 95%, 97.5%, 99%, and 99.5% confidence levels under 252, 504, and 1000 observations over 1000 trading days for the VaR computation methods in consideration.

data-driven bandwidth obtained from the Fourier analysis of a kernel density estimator. The latter bandwidth is chosen for constructing the new VaR estimator,

Figure 11. Daily log returns and VaR estimates of the S&P/TSX Composite Index at 95%, 97.5%, 99%, and 99.5% confidence levels under 252, 504, and 1000 observations over 1000 trading days for the VaR computation methods in consideration.

numerical results. The resulting estimator is compared numerically with the two standard VaR estimators,

Figure 12. Normal, empirical, and positive part Fejér-type kernel densities of the DJIA daily returns based on 252, 504, and 1000 observations for the days 29/06/2011, 25/06/2013, and 30/09/2014. The 97.5% VaR of each model is illustrated along with the actual daily return.

convenient for practitioners because it does not require restrictive assumptions on the underlying distribution, as the normal method does. Our method also provides more accurate VaR estimates than the historical simulation method due to its smooth structure.

Figure 13. Normal, empirical, and positive part Fejér-type kernel densities of the S&P/TSX Composite daily returns based on 252, 504, and 1000 observations for the days 29/06/2011, 25/06/2013, and 30/09/2014. The 97.5% VaR of each model is illustrated along with the actual daily return.

Acknowledgements

This research was supported by an NSERC grant held by Natalia Stepanova at Carleton University.

Cite this paper

Olga Kosta, Natalia Stepanova (2015) Efficient Density Estimation and Value at Risk Using Fejér-Type Kernel Functions. Journal of Mathematical Finance, 05, 480-504. doi: 10.4236/jmf.2015.55040

References

- 1. Jorion, P. (2001) Value at Risk: The New Benchmark for Managing Financial Risk. 2nd Edition, McGraw-Hill, United States of America.

- 2. Stepanova, N. (2013) On Estimation of Analytic Density Functions in Lp. Mathematical Methods of Statistics, 22, 114-136. http://dx.doi.org/10.3103/S1066530713020038

- 3. Levit, B. and Stepanova, N. (2004) Efficient Estimation of Multivariate Analytic Functions in Cube-Like Domains. Mathematical Methods of Statistics, 13, 253-281.

- 4. Golubev, G.K., Levit, B.Y. and Tsybakov, A.B. (1996) Asymptotically Efficient Estimation of Analytic Functions in Gaussian Noise. Bernoulli, 2, 167-181. http://dx.doi.org/10.2307/3318549

- 5. Guerre, E. and Tsybakov, A.B. (1998) Exact Asymptotic Minimax Constants for the Estimation of Analytic Functions in Lp. Probability Theory and Related Fields, 112, 33-51. http://dx.doi.org/10.1007/s004400050182

- 6. Schipper, M. (1996) Optimal Rates and Constants in L2-Minimax Estimation. Mathematical Methods of Statistics, 5, 253-274.

- 7. Hájek, J. (1972) Local Asymptotic Minimax and Admissibility in Estimation. Proceedings of the Sixth Berkeley Symposium on Mathematical Statistics and Probability, Berkeley, 175-194.

- 8. Belitser, E. (1998) Efficient Estimation of Analytic Density under Random Censorship. Bernoulli, 4, 519-543. http://dx.doi.org/10.2307/3318664

- 9. Ibragimov, I.A. and Hasminskii, R.Z. (1983) On Estimation of the Density Function. Journal of Soviet Mathematics, 25, 40-57. http://dx.doi.org/10.1007/BF01091455

- 10. Rudin, W. (1987) Real and Complex Analysis. 3rd Edition, McGraw-Hill, Singapore.

- 11. Tsybakov, A.B. (2009) Introduction to Nonparametric Estimation. Springer Science, United States of America. http://dx.doi.org/10.1007/b13794

- 12. Kosta, O. (2015) Efficient Density Estimation Using Fejér-Type Kernel Functions. M.Sc. Thesis, Carleton University, Ottawa.

- 13. Cline, D.B.H. (1988) Admissible Kernel Estimators of a Multivariate Density. Annals of Statistics, 16, 1421-1427. http://dx.doi.org/10.1214/aos/1176351046

- 14. Härdle, W., Müller, M., Sperlich, S. and Werwatz, A. (2004) Nonparametric and Semiparametric Models. Springer, Heidelberg. http://dx.doi.org/10.1007/978-3-642-17146-8

- 15. Rudemo, M. (1982) Empirical Choice of Histograms and Kernel Density Estimators. Scandinavian Journal of Statistics, 9, 65-78.

- 16. Scott, D.W. and Terrell, G.R. (1987) Biased and Unbiased Cross-Validation in Density Estimation. Journal of the American Statistical Association, 82, 1131-1146. http://dx.doi.org/10.1080/01621459.1987.10478550

- 17. Golubev, G.K. (1992) Nonparametric Estimation of Smooth Densities of a Distribution in L2. Problems of Information Transmission, 23, 57-67.

- 18. Achieser, N.I. (1956) Theory of Approximation. Frederick Ungar Publishing, New York.

- 19. Golubev, G.K. and Levit, B.Y. (1996) Asymptotically Efficient Estimation for Analytic Distributions. Mathematical Methods of Statistics, 5, 357-368.

- 20. Mason, D.M. (2010) Risk Bounds for Kernel Density Estimators. Journal of Mathematical Sciences, 163, 238-261. http://dx.doi.org/10.1007/s10958-009-9671-0

- 21. Lepski, O.V. and Levit, B.Y. (1998) Adaptive Minimax Estimation of Infinitely Differentiable Functions. Mathematical Methods of Statistics, 7, 123-156.

- 22. Parzen, E. (1979) Nonparametric Statistical Data Modeling. Journal of the American Statistical Association, 74, 105-121. http://dx.doi.org/10.1080/01621459.1979.10481621

- 23. Marron, J.S. and Sheather, S.J. (1990) Kernel Quantile Estimators. Journal of the American Statistical Association, 85, 410-416. http://dx.doi.org/10.1080/01621459.1990.10476214

- 24. Roussas, G. (1997) A Course in Mathematical Statistics. 2nd Edition, Academic Press, United States of America.