Advances in Linear Algebra & Matrix Theory

Vol.05 No.02(2015), Article ID:56994,7 pages

10.4236/alamt.2015.52005

On Exact Determination of Eigen Vectors

Raghuram Prasad Dasaradhi1*, V. V. Haragopal2

1Department of Mathematics, Osmania University, Hyderabad, India

2Department of Statistics, Osmania University, Hyderabad, India

Email: *draghuramp@gmail.com, haragopalvajjha@gmail.com

Copyright © 2015 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 24 March 2015; accepted 6 June 2015; published 9 June 2015

ABSTRACT

In this article, we determine the Eigen values and Eigen vectors of a square matrix by a new approach. This considers all the roots with their multiplicities are known, using only the simple matrix multiplication of a vector. This process does not even require matrix inversion.

Keywords:

Characteristic Equation, Minimal Polynomia, Eigen Values, Eigen Vectors, Vander Monde Matrices, Jordan Reduction

1. Introduction

There are many algorithms to determine the Eigen values and Eigen vectors of a square matrix [1] -[4] . Basically they are iterative, they either determine sequentially the largest Eigen values and associated Eigen vectors, or as in the case of positive definite matrices go for simultaneous iterative determination of all Eigen values and Eigen vectors by a succession of orthogonal transformations [1] [3] . Also, when one has multiple roots, the usual iterative approaches generally fail to work, unless additional properties of Eigen vectors of repeated roots are exploited. However, it is theoretically possible (and hence, in practice achievable with some success) to obtain all the Eigen vectors (including the generalized Eigen vectors connected with Jordan reduction) of a matrix if all the roots with their multiplicities are known using only the simple matrix multiplication of a vector. This process does not even require matrix inversion.

In what follows, we shall present the procedure through illustrative examples. Since the theory behind it is rather simple and becomes almost obvious once the way is pointed out, we shall not prove any result. Rather we shall only state a relevant new theorem in matrix theory. Implication of this theorem and its extensions in the general contexts are dealt with in a separate study.

2. Basic Points

To place the results of the present paper in a proper perspective, it is necessary to make the following points explicit before going to the theorem.

1) This procedure does require the knowledge of minimal polynomial [5] and the numerical value of the Eigen value for which the Eigen vector is to be obtained.

2) The only matrix operation involved in obtaining the Eigen vector is multiplication of a matrix and a vector.

3) One can obtain, with equal ease, the Eigen vectors for each known root, including the generalized vectors for multiple roots when they exist. In other words, Eigen vectors can be obtained for each value by itself without needing to determine either other root or the associated vectors.

4) When a multiple Eigen root has many roots, one can get them all by starting with different initial vectors.

® For convenience, we shall employ the following convention and notations:

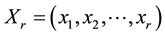

a) A is a square matrix of order n with Eigen roots ; unless stated otherwise, they are all assumed to be distinct.

; unless stated otherwise, they are all assumed to be distinct.

b)  is the matrix of Eigen columns of A.

is the matrix of Eigen columns of A.

c) 1)  is the initial or starting vector and

is the initial or starting vector and ,

, .

.

2)  is the n by r matrix with the

is the n by r matrix with the  as its columns.

as its columns.

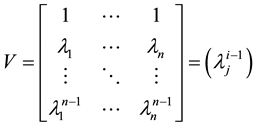

d)  is the Vander Monde matrix [6] of Eigen values

is the Vander Monde matrix [6] of Eigen values

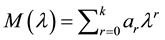

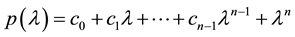

is the minimal polynomial of A and

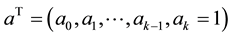

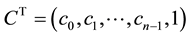

is the minimal polynomial of A and  is the vector of coeff- icients in

is the vector of coeff- icients in  in reverse order. When the

in reverse order. When the  are all distinct, as is being assumed for the present, this is also the characteristic polynomial

are all distinct, as is being assumed for the present, this is also the characteristic polynomial  with

with  as the corres- ponding coefficient vector. Thus,

as the corres- ponding coefficient vector. Thus,  in the present situation.

in the present situation.

As is well known, with the condition , a is unique if

, a is unique if

e)

3. Main Results

We can now state the new Eigen vector theorems and their obvious extensions which are at the heart of the procedures presented in the sequel.

THEOREM 1:

Proof is easy once it is noted that

vectors in general are unique upto scale and Eigen vectors associated with different Eigen values are linearly independent, the choice of

THEOREM 2: The vector

values

3.1. Illustrations

We shall now illustrate the application of the above theorems in the determination of

3.2. Illustration 1

Hence a, such that

Since

Similarly,

3.3. Illustration 2

Here,

Hence,

And

Taking

Taking

A second eigenvector associated with

We then get

and

As is to be expected, the

3.4. Illustration 3

Here again rank of

Since

Thus

One Eigen vector is got by taking

Defining

It is easily verified that

To obtain a second Eigen vector for

we start with a different

The new Eigen vectors are

With

Here,

Hence defining

We have

Thus,

Obviously, two independent Eigen vectors say,

3.5. Illustration 4

With

Since

But has only one Eigen vector. Defining

We get

Giving

As is to be expected, we have

3.6. Illustration 5

Since

Giving the minimal polynomial

Thus,

Since

Similarly with

We get

With

Thus, we have obtained four vectors for A; one more Eigen vector is yet to be obtained corresponding to the triple Eigen value

3.7. Case 1

With the starting

This is obviously of rank 2 only.

Solving the homogeneous equations X3y = 0 we get a polynomial

Using

And

To illustrate the complexity of the situation one has to be prepared to encounter, we shall in turn present the results with five different starting vectors.

3.8. Case 2

Has rank 2. Hence,

3.9. Case 3

Hence

Taking

And

3.10. Case 4

Hence

3.11. Case 5

With

Taking

It is also instructive to examine the result of multiplication of an X matrix with the coefficient vector got by dividing the characteristic polynomial by any of its factors. With the of Case 5, taking

For the matrix A, we have one representation

In Case 5, we have

Similarly, in Case 2, we have

Hence, as is to be expected, the X for this case viz,

Is of rank 2, giving with

Any Q which includes only

These results, of course, are the consequences of the second Eigen vector theorem presented elsewhere.

4. Summary

Some general observations regarding the problem of determining the Eigen values and corresponding Eigen vectors of a matrix are now in order. Though, theoretically obvious, significance of the procedure presented above in the case of computation of the same perhaps needs to be reiterated. However, in the present study we have not gone into the important questions regarding the approximations in practical computations and effect of consequent noise on the final results observed.

1) If one has the ability to solve a set of linear equations, one can obtain the characteristic polynomial of a matrix provided, it is also the minimal polynomial. This fact is, of course, well known. However, the same is true regarding the determination of minimal polynomial in general.

In the above notation, we compute sequentially the ranks of

This is the case when

If

2) Since the highest common factors of a polynomial and its derivative have each of the roots of the polynomial,

multiplicity of each being reduced by 1, it follows that

each of the roots

3) Since real symmetric matrices are fully diagonalizable by an orthogonal matrix, their minimal polynomial will have no repeated root. This fact is of great help especially in situations where a dispersion matrix has signal Eigen values which are relatively large and possible distinct and a “noise Eigen value” which is hopefully small and will be of high multiplicity. A good estimation of the minimal polynomial will be possible with relatively less computational effort by the present approach. Using the same computational product by

4) The present approach enables one also to tackle complex Eigen values and Eigen vectors, especially when A is real and hence Eigen values and vectors occur in conjugate pairs.

Acknowledgements

We are highly thankful to Late Prof. S. N. Narahari Pandit for suggesting this problem, we are indebted to him. The author 1, acknowledges UGC, India for financial support.

References

- Datta, K.B. (1991) Matrix and Linear Algebra. Prentice-Hall of India Private Limited, New Delhi,.

- Curtis, C.W. (1984) Linear Algebra an Introductory Approach. Springer-Verlag, New York.

- Proskuryakov, I.V. (1978) Problems in Linear Algebra. Mir Publishers, Moscow.

- Bellman, R. (1970) Introduction to Matrix Analysis. 2nd Edition, Tata Mcgraw-Hill Publishing Company Ltd., New Delhi.

- Cullen, C.G. (1990) Matrices and Linear Transformations. 2nd Edition, Dover Publications, New York.

- Horn, R.A. and Johnson, C.R. (1991) Topics in Matrix Analysis. Cambridge University Press, Cambridge. http://dx.doi.org/10.1017/CBO9780511840371

NOTES

*Corresponding author.