Push-Pull Finite-Time Convergence Distributed Optimization Algorithm ()

1. Introduction

Consider a network with N nodes. Each node on the network has its own cost function, expressed as

. It is strictly convex. All nodes cooperate to achieve the optimal value of the target cost function.

(1-1)

Among them,

is the optimal value of function

. Generally, a problem of the form (1-1) is called an unconstrained convex optimization problem [1] [2], and similar to it is the resource positioning problem [3], formation control [4], sensor scheduling [5] and distributed message routing [6], etc.

At present, a series of algorithms on problem (1-1) have been extensively studied. In general, these algorithms can be divided into two categories: discrete-time algorithms [1] [2] [7] [8] [9] and continuous-time algorithms [10] - [16]. Most of the former adopt iterative method, and based on the consistency of the dynamic system to achieve the goal. For example, in reference [1], the authors propose a non-gradient distributed random iterative algorithm, which can achieve asymptotic convergence with less information transmission, which is better than some existing gradient-based algorithms. In [2], the authors propose a new event-driven zero-gradient and algorithm that can be widely applied to most network models. It can achieve exponential convergence when the network topology is strongly connected and is a detail balance graph. The latter are mostly designed in continuous time, and the study of their convergence properties uses control theory as the main tool. In [10], the researchers proposed a distributed zero-gradient sum algorithm based on continuous time. The initial value of the algorithm is the optimal value of the cost function of each node. Exponential convergence can be achieved when the network is a connected and undirected fixed topology. In [13], the author pointed out that the algorithm can achieve exponential convergence when the local cost function of the node is strongly convex and the gradient meets the global Lipschitz continuity condition. However, most of the existing algorithms on problem (3-1) can only achieve asymptotic or exponential convergence. In real engineering systems, we all hope that the nodes can reach the optimal value x* in a certain time. Some effective methods have also been studied to improve the speed of consensus convergence, for example, by designing optimal topology and optimal communication weights [17] [18] [19] [20] [21]. Although these consensus algorithms have fast convergence speed, they cannot solve the problem in a limited time (1-1).

Based on the above research, a finite-time convergence algorithm is proposed in this chapter, using the Hessian inverse matrix to solve the problem (1-1). This algorithm was inspired by references [22] and [23], and extended the existing continuous-time exponential convergence ZGS algorithm to finite-time convergence. The convergence of the algorithm can be guaranteed by the Lyapunov method. Corresponding numerical simulations also verify the effectiveness of our algorithm.

1.1. Summary

Distributed optimization theory and applications have become one of the important development directions of contemporary systems and control science. Among them, the design of optimization algorithms, proof of convergence, and algorithm complexity analysis are several key issues in the research of optimization theory. According to whether the optimized objective function has convexity, it can be divided into two categories: distributed non-convex optimization and distributed convex optimization. Because convexity has many excellent characteristics, solving distributed convex optimization is relatively simpler than solving distributed non-convex optimization. Therefore, for non-convex optimization problems, we often use some methods to convert it into convex optimization to solve. For distributed convex optimization, their objective function is generally the sum of the local objective functions of the nodes in the network. Common research methods include gradient descent method (including hybrid steepest descent method [24], random gradient descent method [25]), distributed projection sub-gradient method [26] [27], incremental gradient method [28] [29], ADMM method [30] [31] and so on. Angelia Nedic proposed an overview of distributed first-order optimization methods for solving minimally constrained convex optimization problems in article [32], and can be widely used in distributed control, network node coordination, distributed estimation, wireless networks Signal processing issues. According to the structural characteristics of the network topology, the corresponding distributed convex optimization research algorithms can be divided into [10] based on fixed connected topology graphs, [33] on directed graphs, [11] on detail balance graphs, and time-varying topological graphs [7] [12] [34] of switching topology, etc. According to the time domain characteristics of the algorithm, it can be divided into discrete-time distributed convex optimization algorithms [1] [2] [7] [8] [9] and continuous-time distributed convex optimization algorithms [10] - [16]. According to the convergence characteristics of the distributed convex optimization algorithm, it can be divided into asymptotic convergence [10] and exponential convergence [13]. Event-driven scheduling algorithms have received widespread attention due to the advantages of fewer analog components and high algorithm execution speed. Therefore, many distributed convex optimization-related tasks have also taken event-driven scheduling into account [13] [35].

Consistency is the theoretical basis of distributed computing, an important performance indicator of distributed optimization and distributed cooperative learning, and convergence is a key indicator of consistency algorithms. However, most of the existing literature is about evolution Results of near-consistent convergence [10] [23]. With the in-depth study of collaborative control, the research on consistency issues has developed rapidly, and the corresponding references give various methods to achieve consistency [36] [37] [38]. From the perspective of time cost, it is very meaningful if the state of multiple agents can be consistent within a certain time. Therefore, the problem of finite-time consistency control of multi-agents has attracted widespread attention from scholars [39] [40].

For distributed learning, the learning speed is as important as the learning effect. At present, many algorithms are dedicated to finding an optimal learning strategy [41] [42] [43]. In reference [41], the author gives a distributed cooperative learning algorithm that can achieve exponential convergence. In reference [42], the authors propose a distributed optimization algorithm based on the ADMM method. Under this strategy, the algorithm can achieve global goal problems with asymptotic convergence speed. In [43], the authors proposed two distributed cooperative learning algorithms based on decentralized consensus strategy (DAC) and ADMM strategy. Algorithms based on the ADMM strategy can only achieve asymptotic convergence, but algorithms using the DAC strategy can achieve exponential convergence.

1.2. Major Outcomes

Based on the existing research results in related fields, this paper proposes a finite-time convergence distributed optimization algorithm and a fast-convergent distributed cooperative learning algorithm. The effectiveness of our algorithm is verified theoretically and experimentally. . First, a new distributed optimization method and its graph variants are used. Based on this, a neural network-based finite-time convergence algorithm is used to solve the distributed strong convex optimization based on the fixed-time undirected topology network's finite-time convergence problem. The proposed distributed convex optimization algorithm can clearly give the upper bound of the convergence time, which is closely related to the initial state of the algorithm, the algorithm parameters, and the network topology graph. Secondly, the proposed distributed cooperative learning algorithm is a privacy protection algorithm, and the global optimization goal can be solved by simply exchanging the learning weights of the neural network. Unlike previous distributed cooperative learning algorithms that can only achieve asymptotic or exponential convergence, this algorithm can achieve rapid convergence.

1.3. Organization of the Paper

We first give the basic assumptions of symbols and descriptions in Section 1.4. Then introduce the push-pull gradient algorithm in the second section and prove its convergence. An introduction to the finite-time convergence algorithm and proof of convergence are given in Section 3. In the fourth section, we introduce a push-pull fast convergence distributed cooperative learning algorithm, demonstrate its convergence, and give numerical simulation. Section 5 gives simulations and comparisons with other algorithms to prove their competitiveness, and gives the conclusion

1.4. Notation

Let’s start with a brief description of the symbols that will be used later.

and

represent the real number set and the non-negative real number set, respectively;

represents the Euclidean norm on the set

; Table ⊗ Real Kroneck Product,

, among them

,

,

is the unit matrix;

and

represent the gradient and Hessian matrix of function

, respectively.

is defined as

, among them

,

;

, and

means symbolic function;

is a constant.

Consider the following system

(1-2)

where

is continuous in an open neighborhood

containing the origin

. Suppose there is a continuous positive definite Lyapunov function

on the set

, where

is a neighborhood about the origin. If there exists a real number

such that

holds on the set U, then the system is stable in finite time, and the bound of its convergence time T

(1-3)

For a linear parameterized neural network with m-dimensional input, n-dimensional output, and l hidden neuron, it can be modeled as follows

(1-4)

where

represents the m-dimensional input vector,

represents the output of the i-th hidden node, and

is the neural network learning weight connecting the output node with the i-th hidden node.

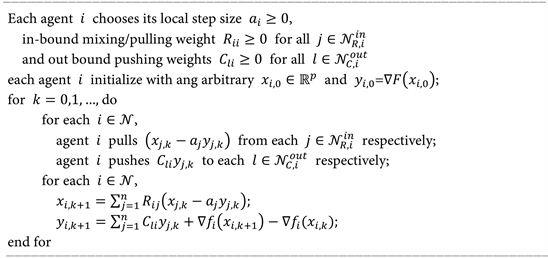

2. Push-Pull Gradient Method

In this section, the default vector is a column, let

, be a group of agents, each agent

, and it holds a local copy of the decision variable

and the auxiliary variable

of the average tracking gradient, and their iteration values are obtained by

,

, k respectively. Instead, use {∙} to represent the trajectory of the matrix by default. Make:

(2-1.a)

(2-1.b)

Define

as the sum function of local variables

(2-2)

Write it as

(2-3)

Definition 2.1 Given an arbitrary vector

on

, for any

we define

(2-5)

where

are members of the x column.

Assumption 2.1 is strongly convex and continuous for each node function

(2-6.a)

(2-6.b)

Under this assumption we studied, there is a problem of unique optimal solution.

For the interactive topology graph between the nodes to be used, we model it abstractly as a directed graph. A histogram

consisting of a pair of nodes

and ordered edge sets

. Here we think that if a message from node i reaches node j in the graph, and

is within the directed edge

, then i is defined as the parent node and j is the child node. Information can be passed from parent to child nodes. In graph

, a directed edge path is a subsequence of edges, such as

In addition, directed trees are directed graphs, in other words, each vertex has only one parent. A tree generated by a directed graph is a directed tree that will follow all vertices in the graph.

2.1. Detailed Push-Pull Gradient Method

The algebraic form of the push-pull gradient method can be written as:

(2-7.a)

(2-2.b)

where

is a non-negative diagonal matrix, and

We derive the hypothesis after this.

Assume 2.2, the matrix

is non-negative random, and

is also non-negative random, that is,

. In addition, we show that the diagonal terms of R and C are positive, that is,

,

for

.

Inductive by column random C

(2-8)

The above relationship has a very important relationship to the average tracking speed of the subset

.

Now, we give the graphs

and

derived from the matrices R and

, respectively. Here we want to explain that

and

are the same, but all edges are opposite.

Assume 2.3. For graphs

and

, each contains at least one spanning tree. In addition, at least one node is followed by a spanning tree of

and

, that is,

, and

is the set of all possible spanning tree roots in graph

.

For the choice of step size, we assume that at least one node in the range has a positive step size.

From the above prerequisites and assumptions we can get some constraints and the scope of the argument, which intuitively opens the way for the algorithm, so we explain our algorithm from another angle.

In order to show the feasibility of the push-pull algorithm, we first calculate in the optimal form

(2-9.a)

(2-9.b)

where

, and meet the conditions introduced above, now consider the algorithm proposed above, assuming that the algorithm generates two sequences

and

, which converge to

and

, respectively, We can get

(2-10.a)

(2-10.b)

Here we want to show that if

does not intersect the span of

, we will get

,

,Therefore,

satisfies

the optimal condition of

. From

,

is the exactly Optimal condition in

.

We now reproduce the feasibility of the push-pull algorithm, and from the above assumptions and conditions we know that it is linearly convergent

(2-11)

Therefore, in the case of relatively small step sizes, the above relationship means that

,

,

means that the entire network

only pulls the state information of the agent

, while yk means pushing back the agent

and tracking the average gradient information. This form of “push” and “pull” information gives the name of our proposed algorithm. The information that

essentially represents is that at least every agent needs to be pushed and pulled at the same time.

The algorithm in (2-7) is similar in structure to the DIGing algorithm proposed in [44], with mixed matrix distortion. The x update can be viewed as an inexact gradient step with a formula, and it can be viewed as a gradient tracking step. This asymmetric R-C structure design has been used in the literature of average consensus [45], but this algorithm has a gradient term and nonlinear dynamic characteristics, so it cannot explain linear dynamic systems.

Above we have explained the rationality of this method mathematically, now we conceptually explain it as a push-pull algorithm and its reliability. In the current calculation, we still put it in a static network, discuss and analyze it. But in fact, many networks in the real world are dynamic or even unreliable. We need to expand the scope of the discussion. The original algorithm was actually calculated from [44], and it also gave us some inspiration. In a dynamic network, if we need to disseminate or integrate information, we need to know the weight of the scatter or know how to derive its weight. When in an unreliable network, the connection between the dissemination and receiving nodes is not reliable. We need some specific strategies to specify the weight distribution or customization

In order to keep the part of the network we specified converge, a relatively effective method is to make the receiver perform the task of scaling and combining. When the network environment changes, as the underlying sender, it is difficult to know the entire network change and we can adjust the weight accordingly. We can also continue to use the push protocol to communicate and let the surrounding nodes continue to send messages to it. However, it is difficult to determine whether it is still alive (expired) in the network, because we do not know its status should not or cannot respond as death). We can “subjectively” judge whether a certain node or agent is dead. The important reason is that we cannot fully synchronize. If a node waits for a certain period of time without responding, we can consider it to be dead until he again Answer. In fact, a pull communication protocol can also be used to allow agents to pull information from neighbors or nodes for effective coordination and synchronization.

To sum up, for the general implementation of Algorithm 1, the push protocol is indispensable, and using the pull protocol on this basis can improve the network operation efficiency, but it cannot be operated only by the pull network.

2.2. Unify Different Distributed Computing Architecture Systems

We now show how the proposed algorithm unifies different types of distributed architectures to a limited extent. For a completely decentralized case, for example, there is an undirected connection graph

, we can set

, and let

, then it becomes a symmetric matrix. In this case, the algorithm can be regarded as [44] [46]. If the graph is directional and closely connected, we can also let

and set the corresponding R and C weights.

While it may not be straightforward to implement in a centralized or semi-centralized network, let us illustrate by example. Consider a four-node star network consisting of {1, 2, 3, 4}. Let node 1 be located in the center, and nodes 2, 3, and 4 be connected to node 1. In this case, we can use the matrices R and C are set to

,

As an illustration, Figure 1 shows the network topology diagram of

and

. The central node pushes information such as

to other neighbor nodes through

. And other nodes or neighbors can only passively wait for the information of the sending node.

At the same time, the node collects information about

from the feedback information through

, and other nodes can only passively comply with the request from node 1. This very intuitively shows the name of the push-pull algorithm. Although the related nodes 2, 3, and 4 update their information, these numbers do not need to participate in the optimization process. Due to the last three rows of C weights, they are geometrically fast, will disappear. In this case, we can set the local step size of 2, 3, 4 to 0 as a matter of course. In general, we can assume that

, then we can make

become a centralized algorithm. The master node uses 2, 3, 4 Calculated by distributed gradient method.

The above example is more of a semi-centralized case. Node 1 cannot be replaced by a strongly connected subnet in R and C, but 2, 3, and 4 can be replaced by different nodes, as long as the information of these subnodes can be passed to

. In the subordinate agent layer of the above, the theory is discussed in the next section. The layer in

, using the concept of the root tree, can be understood as the specific requirement of the subnet connectivity. In the network, his role is similar to the role of node 1, we call it the leader, and other nodes are called followers. One thing we want to emphasize here is that a subnet can be used to replace a node, but after the replacement, all subnet structures are decentralized, and the relationship between the leader and the subnet is subordinate. This is what we call a semi-centralized architecture.

![]()

Figure 1. Network topology of

and

.

2.3. Proof of Convergence

In this section, we will study the convergence of the algorithm. First, we define

,

. Our thinking is based on the linear constraint

,

,

for binding. Among them,

and

are defined later. He is a specific specification. On this basis, a linear system can be established, which belongs to the inequality.

Algorithm analysis According to Formula (2-7), we can get

(2-12)

(2-13)

Let’s further define,

,

(2-14)

From (2-8) and (2-10) we get

Then we can get

(2-15)

According to the above definition we can get

(2-16)

Similarly available

(2-17)

According to

,

,

lemma we build a linear system of inequality

Here the inequality is calculated by component, and the transformation matrix element

can be obtained

It’s here

,

.

According to the previous inequality linear system, we know that when the spectral radius

is satisfied,

,

,

converge to 0 at a linear rate ℴ

. The problem to be explained next is

.

Given a nonnegative irreducible matrix

,

and

, then

is

Necessary and sufficient conditions.

We now give convergence results for the proposed algorithm.

We assume that in the algorithm (1-1),

in

, we get

(2-18)

Among them

will be given later. In this way, when the spectral radius

is satisfied,

,

,

converges to 0 at a linear rate ℴ

.

We prove that according to the above lemma, we guarantee that

, and

(2-19)

The small problem now is to explain that

make the above formula hold.

First,

,

and

are guaranteed in the selected

, we can get

(2-20)

Secondly, the sufficient condition for making

is to replace

with

, and the rest is similar, so that

. We can get

.

Here we explain

(2-21)

get

(2-22)

And then

(2-23)

As discussed above

(2-24)

From this we get the final limit of

.

3. Finite-Time Convergence Algorithm

Now we introduce the optimization algorithm for finite-time convergence. Compared with most existing distributed convex optimization algorithms that can only achieve exponential convergence, this algorithm can achieve finite-time convergence. The convergence of the algorithm can be guaranteed by Lyapunov’s finite-time stability theory.

3.1. Algorithm Introduction

Consider a network with N nodes. Each node on the network has its own cost function, expressed as

, which is strictly convex. All nodes cooperate to obtain the optimal value of the target cost function. In order to better design the algorithm, we give the following assumptions:

Assumption 3.1: The upper-layer communication topology network is undirected and connected.

Assumption 3.2: For each proxy node

of the network, his cost function

is second-order continuously differentiable strongly convex, the convex parameter

, and the Hessian matrix

meets the local Lipschitz condition.

From this we get

(3-1)

where

represents the state of node i, and

is a gain constant that can be used to improve the convergence speed of Aijie;

means The set of all neighbor nodes of node i;

is an element of the adjacency matrix A;

.

And

is the optimal value of cost function

.

Note 3.1: The algorithm (3-1) is inspired by continuous time zero gradient [10] and finite time consistency protocol [20]. From the first formula,

.

From the second formula, we can get

, So it is easy to get the gradient and satisfy

where

can ensure that the algorithm achieves finite-time consistent convergence, that is, there is a convergence time T and a convergence state

. For

, both have

and

. From the hypothesis 2, we know that

Strongly convex has only one optimal value

, and satisfies

. The above analysis shows that

, which shows that at the upper level, this algorithm can solve the problem we raised. It should be noted that when

, the algorithm only achieves progressive convergence.

3.2. Convergence Analysis

Theorem 3.1: Based on assumptions 1 and 2, our proposed algorithm can solve the target problem in a finite time, and the bound of its convergence time is T. It also shows that

Where T satisfies:

(3-2)

Among them

;

;

,

is a continuous positive definite Lyapunov function,

is the algebraic connectivity related to the topological graph, and

is a constant related to

.

Proof: This part of the Lyapunov method gives a proof of Theorem 3.1. First,

(3-3)

This function is given in [10]. Based on Hypothesis 3.2,

is a second-order continuously differentiable function. It is also known that

is a locally strongly convex function.

Next, for convenience of derivation, we give the following definitions:

For

,

is a local strongly convex function. From the above formula, we know that

is a compact set. In order to take advantage of the strong convex function, we need to find another convex compact set, so we let

, where “

“ represents a convex set From hypothesis 3.2, we can know that

is a compact set, U is a convex compact set and satisfies

is based on the convex compact set U, for every

, combined with hypothesis 3.2, there will be

satisfying

(3-5)

From (3-5), we can get V

, when

. Considering the derivative of V with respect to time, then for

, the following relationship exists

(3-6)

where

, we can get

if and only if the equation

holds, so V can be used to prove Theorem 3.1.

In addition,

, combining the existing initial conditions, we can get the following properties

. We set

, there are

And can get the following inequality

(3-7)

Combining (1-4) for

, (3-7) can be written as

(3-8)

where

,

is the complete graph of graph

, then combining Cauchy's inequality and (3-6), we can get

(3-9)

Due to

, Combining (3-8) we get:

(3-10)

Combined with the finite-time stability theorem proposed earlier, we can get that our algorithm is convergent, then there is a time T,

. That is

, In addition, the bound of T

can be obtained from Theorem (3.1)

. Among them, the

convergence speed is related to parameters such as algebraic connectivity

, function curvature

, etc.

3.3. Simulation

In this section, a simulation experiment is given to demonstrate the effectiveness of the algorithm in this section. We set up a 6-node network topology diagram, as shown in Figure 2. His adjacency matrix is

and

. The cost function of each node is

(3-11)

It can be obtained that the optimal value of each node satisfies

,

. The optimal value of Equation (1-1) is calculated as

,

. Combining the convex compact set U in the proof, we can get

,

,

,

,

,

, which means

.

In the simulation, we use the parameter values

,

,

, and the simulation results are shown in Figure 3.

![]()

Figure 3.

.

4. Push-Pull Fast Convergent Distributed Cooperative Learning Algorithm

This chapter aims to combine and generalize the previously proposed algorithms to practical applications, such as common machine learning scenarios. Inspired by the previous algorithm, we will design a fast convergent distributed cooperative learning (P-DCL) algorithm based on a linear parameterized neural network based on push-pull mode. In the first step, a P-DCL algorithm based on continuous-time convergence in push-pull gradient mode is first given. In the second step, we give a convergence analysis of the algorithm based on the Lyapunov method. In the third step, for the practical effect of the algorithm, we use the fourth-order Runge-Kutta (RK4) method to discretize the algorithm. In the fourth step, the distributed ADMM algorithm and the push-pull gradient-based (P-DCL) algorithm simulation are given. Experiments show that our proposed algorithm has higher learning ability and faster convergence speed. Finally, we give the relationship between the algorithm’s own convergence speed and some parameters. Simulation results show that the convergence speed of the algorithm can be effectively improved by properly selecting some adjustable parameters.

Restatement: In order to construct the algorithm systematically, the problem formation is given first, and then the local cost function is analyzed. Then the relationship between global cost function and local cost function solution is given.

Consider a network with N nodes. Each node

in the network contains

samples, and each sample set can be expressed as

, Where

, represents the k-th sample on the i-th node, so for each node, their local cost function can be expressed as:

(4-1)

is the learning weight of the i-th node,

represents the sample of the i-th node;

and

represent the expectations of

With the output,

is a non-negative constant. In this way, the optimal learning weight of node i can be easily obtained.

(4-2)

If all the node samples satisfy

, the adjustment parameters of all nodes satisfy

. Then the W-optimal global cost function (1-7) is equivalent to the sum function of the local cost functions of each node.

(4-3)

As mentioned earlier, there are many distributed solving algorithms for this problem that can achieve progressive convergence. Next, what needs to be done is to design a fast distributed optimization algorithm, such as the following requirements:

(4-4)

This shows that all nodes can converge to the optimal learning weight

in a finite time T.

From the above analysis, the global cost function (1-7) can be written as:

(4-5)

This is often referred to as global consistency. Unlike the traditional multi-agent consistency problem, the result of consistency convergence here has no specific meaning. Consistency has a long history of research. The basic concept is that all nodes in all networks eventually reach the same state through information exchange with neighbors. From the perspective of learning, an efficient learning algorithm is very necessary. For distributed cooperative learning algorithms, their learning rate is an important measurement index of their algorithm. However, in real life, it is more necessary to reach a valid result within a certain time, which also prompts us to design a fast consensus learning cooperation algorithm.

4.1. Fast Convergent Distributed Algorithm

Here, based on the linear parameterized neural network, a distributed strategy for the target problem is given. To build a better construction algorithm, the following assumptions are given first:

Hypothesis 4.1 assumes that the network topology

is undirected and connected.

Based on the previous analysis, the distributed cooperative learning algorithm in continuous time gives:

(4-6)

where

is a constant used to adjust the convergence rate.

,

is an element in the adjacency matrix

;

, Figure 4 can show the operation of the algorithm more intuitively.

Let

,

,

, The algorithm can be written as a matrix:

(4-7)

Note 4.1: The above algorithms are inspired by [47]. Linear consistency algorithms can achieve progressive convergence, while cruise ship consistency algorithms that can achieve limited time convergence mostly use symbolic functions [20] [39].

, by setting

, there is

, so it is easy to get the gradient sum of the node cost function Satisfies

, and because

is a strong convex function, that is, it has only one optimal. The value also reflects that the algorithm we mentioned does have a solution.

Theorem 4.1: The algorithm (4-7) can achieve the goal in a finite time T, where time T satisfies:

![]()

Figure 4. Algorithm (4-6) running on i-node.

(4-8)

where

is a second-order continuous positive definite function,

is a constant in the algorithm (4-7),

;

is related to the network topology Graph-related algebraic connectivity.

is a constant related to the cost function of all nodes;

is the gain constant in the algorithm.

Proof: Based on the Lyapunov method, a rigorous proof of Theorem 4.1 is given next. Before certification, some related work needs to be prepared. First, select:

(4-9)

As a Lyapunov candidate function,

. Since

, then we can get

, change In other words,

in the Formula (4-9) is positive definite. In addition,

,

where

, then:

(4-10)

where

,

,

,

is the Laplacian matrix of

, and

is completely Figure of

.

Next, by calculating the inverse of

, we can get

(4-11)

where

represents the neighbor of node i,

, we can get

, which also means

(4-12)

In addition, it can be concluded

(4-13)

Combining Formula (4-12) and Formula (4-13) Formula (4-11) can be written as

(4-14)

This indicates that

is negative. Since

, Formula (4-14) can be obtained

(4-15)

we can get that the proposed algorithm (4-7) is stable for a finite time, so there is a time T here, with

,

, that is,

. Can be combined with theorem 4.1 from Formula (4-15) to get

(4-16)

Based on the above analysis, we can get that the algorithm proposed in this chapter can indeed find the optimal value of (1-7) in a limited time.

4.2. Fast Convergent Discrete-Time Distributed Cooperative Learning Algorithm

Based on the algorithm of (4-6), this section gives the discrete form:

(4-17)

represents the k-th estimate of the i-th node with respect to

. h represents the iteration step size;

, where

represents the cardinality of the set.

,

,

. In addition, Figure 5 can more intuitively show the iterative process of the discrete algorithm (4-17).

Note 4.2: In order to obtain good control performance or simplify the design process, usually in the design process of modern industrial control, we need to discretize a continuous-time system. In addition, effective discretization can not only reduce time and space costs, but also improve the learning accuracy of the algorithm. Methods like pulse invariance methods, pole-zero mapping methods, and triangle-equivalent equivalence are commonly used to convert continuous-time systems into equivalent discrete systems. Runkutta (RKK) algorithm with high accuracy and good stability is widely used. Therefore, we use the fourth-order RK (RK4) to process the discretization algorithm (4-6). However, for node i, we need to add

communications for each step. In other words, using the RK4 method for calculation increases the complexity of the calculation.

4.3. Two Types of Discrete Distributed Cooperative Learning Methods

In this section, we present two distributed cooperative learning algorithms to be compared with our algorithm (4-17). Specific comparison results can be found in the simulation section.

4.3.1. Distributed ADMM Algorithm

The algorithm achieves the global goal of the algorithm through each communication with the remaining nodes.

![]()

Figure 5. Algorithm (4-17) running at point i.

(4-18)

where

is a tuning function,

;

;

, among them

. For a more detailed description of the algorithm (4-18), you can refer to [42].

Note 4.3: The ADMM algorithm is actually a constrained optimization algorithm, where the constraint is

. From the above algorithm form, we can know that the ADMM algorithm is not a completely distributed algorithm, and each iteration of it requires the information of all nodes rather than the information of neighbors. So this algorithm is only suitable for fully connected undirected network topologies. It is known from [43] that the algorithm is asymptotically convergent.

4.3.2. Distributed Cooperative Learning Algorithm Based on Zero-Gradient Sum

Unlike the distributed ADMM algorithm, this algorithm only needs the information of the neighbor nodes for each iteration.

(4-19)

Lemma ( [41]): If the topology graph

is connected, the parameter

is taken from

, where

, then the

algorithm (4-19) can find the optimal value of the target cost function, and

.

Note 4.4: Like the algorithm (4-19), the algorithm mentioned in this chapter is also constrained by the zero-gradient sum, which can help us find the global best advantage faster. In particular, when the parameter

in the algorithm (4-6), it is equivalent to the algorithm (4-19). In addition, the algorithms (4-19) and (4-6) are completely distributed algorithms and can be applied in distributed connection networks. But the algorithm (4-19) can only achieve asymptotic convergence.

5. Simulation

In this section, we consider numerically verifying our conclusions on real data sets in several different network situations. First, we give the comparison results of different algorithms based on different parameters of different data sets. Four different network topologies are given and their algebraic connectivity

is calculated. Secondly, in order to simplify the calculation, each node is assigned the same training sample and the same adjustment constant

. Finally,

is calculated by lemma, and corresponding simulation parameters are set, such as the number of hidden neurons l, gain constants

,

and

.

In order to better show the comparison results of the algorithms, the general form of the mean square error (MSE) is given. The MSE of the k-th iteration of the i-th node is defined as follows:

(5-1)

In addition, the MSE of the entire network at the k-th iteration can be written as follows:

(5-2)

By using the transformation

to enlarge the error, the error curve of the iterative process can be more clearly shown.

We choose

as the objective function. The sample set

is =10,000 samples generated from the random set [−1.1]. We take

as our basis function and choose a four-node network as the network topology graph, where the adjacency matrix

, and

, each node is evenly distributed to

samples, where

starts from [0,1] In the experiment, we randomly selected the results:

,

,

,

, and

. The distributed learning weights are [0.9813, 0.0002, −0.0813, −0.0003, −0.0734, −0.0002, −0.0196, 0.0001, 0.0078, 0.0001, 0.0156, 0, 0.0130]. By making a difference, it is obvious that distributed is very close to centralized. In particular, let

, Then you can get

,

,

,

, so

, Similarly, we can get

, Combining Lemma gives

, In order to show the convergence speed of the proposed algorithm more clearly, we randomly select a component of W to display. Its convergence speed can be seen in Figure 6. From the figure, the convergence time

can be obtained.

Combined with Theorem 4.1, the relationship between the convergence speed and parameters of the algorithm will be given intuitively in this part. Figure 7 serves as our network topology. Figure 8 shows the comparison of different algorithms on the data set. We use the control variable method for research. The initialization parameters are

,

,

,

,

and

. Based on three different algebraic connectivity, Figure 9. The effect of algebraic connectivity on the convergence speed of the algorithm is shown intuitively. Figure 10 shows the effect of different parameters

:

,

and

on the convergence error. It can be seen from the figure that the larger the gain constant

, the faster the convergence speed. Figure 11 shows the effect of different values of parameter

on the convergence error. The parameters are

,

,

and

respectively. It can be seen from the figure that the smaller the β, the faster the convergence speed.

![]()

Figure 6.

convergence effect diagram.

![]()

Figure 7. Random undirected network topology.

![]()

Figure 8. Different algorithms working with data sets.

![]()

Figure 9. Effect of different

on algorithm convergence.

![]()

Figure 10. Effect of different

on algorithm convergence error.

![]()

Figure 11. The effect of different

on the convergence error of the algorithm.

6. Conclusions

In this paper, we study the distributed optimization problem on the network. We propose a new distributed method based on push-pull finite time convergence, in which each node keeps the average gradient estimation of the optimal decision variable and the principal objective function. Information about gradients is pushed to its neighbors, and information about decision variables is pulled from its neighbors. This method uses two different graphs for information exchange between agents and is applicable to different types of distributed architectures, including decentralized, centralized, and semi-centralized architectures. Along with this, we introduced a fast convergent distributed cooperative learning algorithm based on a linear parameterized neural network. Through strict theoretical proof, the algorithm can achieve finite-time convergence under continuous time conditions. In the simulation, we have investigated the influence of different parameter changes on the convergence speed, and also proved the effectiveness of the algorithm compared with some typical algorithms. In the future work, we can properly promote and apply the proposed distributed cooperative learning algorithm to large-scale distributed machine learning problems.