Continuous Iteratively Reweighted Least Squares Algorithm for Solving Linear Models by Convex Relaxation ()

1. Introduction

Low-dimensional linear models have broad applications in data analysis problems such as computer vision, pattern recognition, machine learning, and so on [1] [2] [3] . In most of these applications, the data set is noisy and contains numbers of outliers so that they are distributed in higher dimensional. Meanwhile, principal component analysis is the standard method [4] for finding a low-dimensional linear model. Mathematically, the problem can be present as

(1)

where

is the data set consisting of N points in

,

, a target dimension

,

denotes the

norm on vectors, and tr refers to the trace.

Unfortunately, if the data set contains a large number of noise in the inliers and a substantial number of outliers, these nonidealities points can interfere with the linear models. To guard the subspace estimation procedure against outliers, statistics have proposed to replace the

norm [5] [6] [7] with

norm that is less sensitive to outliers. This idea leads the following optimization problem

(2)

The optimization problem (2) is not convex, and we have no right to expect that the problem is tractable [8] [9] . Wright [10] proved that most matrices can be efficiently and exactly recovered from most error sign-and-support patterns by solving a simple convex program, for which they give a fast and provably convergent algorithm. Later, Candes [11] presented that under some suitable assumptions, it is possible to recover the subspace by solving a very convenient convex program called Principal Component Pursuit. Base on convex optimization, Lerman [12] proposed to use a relaxation of the set of orthogonal projectors to reach the convex formulation, and give the linear model as follows

(3)

where the matrix P is the relaxation of orthoprojector

, whose eigenvalues lie in the interval

form a convex set. The curly inequality

denotes the semidefinite order: for symmetric matrices A and B, we write

if and only if

is positive semidefinite, the problem (3) is called REAPER.

To obtain a d-dimensional linear model from a minimizer of REAPER, we need to consider the auxiliary problem

(4)

where

is an optimal point of REAPER,

denotes Schatten 1-norm, in other words, the orthoprojector

is closest to

in the Schatten 1-norm, and the range of

is the linear model we want. Fortunately, Lerman has given the error bound between the d-dimensional subspace with the d-dimensional orthoprojector

in Theorem 2.1 [12] .

In this paper, we improve that the algorithm calls continuous iteratively reweighted least squares algorithm for solving REAPER (3), and under a weaker assumption on the data set, we can prove the algorithm is convergent. In the experiment part, we compare the algorithm with the IRLS algorithm [12] .

The rest of the paper is organized as follows. In Section 2, we develop the CIRLS algorithm for solving problem (3). We present a detail convergence analysis for the CIRLS algorithm in Section 3. An efficient numerical is reported in Section 4. Finally, we conclude this paper in Section 5.

2. The CIRLS Algorithm

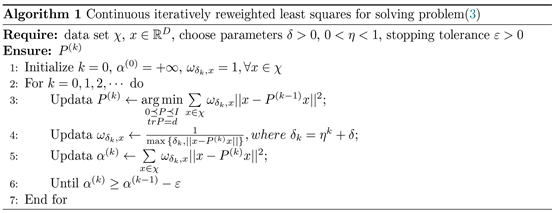

We give the CIRLS algorithm for solving optimization problem (3), and the algorithm is summarized as Algorithm 1.

In the next section, we prove the sequence

is convergent to

. Furthermore, we also present the

satisfied the bound with the optimal point

of REAPER.

3. Convergence of CIRLS Algorithm

In this section, we prove that the sequence

generated by Algorithm 1 is convergent to

and we provide the

satisfied the bound with the optimal point

of REAPER. Firstly, we start from the Lemma prepare for the proof of the following theorem.

Lemma 1 ( [12] , Theorem 4.1) Assume that the set

of observations does not lie in the union of two strict subspaces of

. Then the iterates of IRLS Algorithm with

converge to a point

that satisfies the constraints of the REAPER problem. Moreover, the objective value at

satisfies the bound

(5)

where

is an optimal point of REAPER.

In lemma 1, under the assumption that the set

of observations does not lie in the union of two strict subspaces of

, they consider the convergence of the IRLS algorithm. However, verify whether a data set satisfies this assumption requires amounts of computation in theory, so we give a assumption that is easier to verify in following theorem.

Theorem 1 Assume that the set

of observations satisfies

, if

is the limit point of the sequence

generated by

algorithm, then

is an optimal point of the following optimization problem

(6)

and satisfies

(7)

where

is an optimal point of REAPER(3).

Proof. Firstly, we consider the optimization model with Algoritnm 1,

(8)

then, we define iterative point

of the optimization problem

(9)

where

.

For convenience, let

, and the optimization model(9) convert into

(10)

where

.

Similarly, we convert the optimization model (8) into

(11)

Next we prove the convergence of

, consider the Huber-like function

(12)

since

, for any

, then

(13)

holds for any

,

. We introduce the convex function

(14)

note that F is continuously differentiable at each matrix Q, and the gradient is

(15)

in addition, we introduce the function

(16)

then

(17)

By the definition of

we know that

(18)

and

(19)

it is obvious that

is a smooth quadratic function, we may relate

through the expansion in

as follows

(20)

where

is the Hessian matrix of

.

By the definition of

we know that

, combines with (13) we have

(21)

and since the optimization model(10) is equivalent to the model

(22)

then we have the monotonicity property

(23)

We note that when

, we have

(24)

(25)

since

, on the one hand, when

,

holds, and

holds for any

, therefore the inequality

holds for

. On the other hand, when

,

, and

holds, then we have

(26)

since

, and

,

holds for all

, therefore

(27)

and according to (23), we have the following result

(28)

Since

(29)

combined with the convex optimization variational inequalities with some constraints, we have

(30)

base on (20), we have the equation

(31)

then we have

(32)

therefore

(33)

and according to(23), then we get the inequality

(34)

add to

, then we have

(35)

Let

, since

,

and

are all symmetric matrix, then the inequality

(36)

holds, in addition,

,

, thus

is positive semidefinite matrix, and

, then we have

, therefore

(37)

so we have

(38)

thus we can get the inequality as follows

(39)

Let

, then

, and we have

(40)

since

, so we have

(41)

therefore, we get the following limits

(42)

Since the set

is bounded and closed convex set, and

, then the sequence

exist convergent subsequences, we suppose

is one of the subsequences and

(43)

Since for any

, we have

(44)

and

(45)

so we have

(46)

in other words

(47)

According to the definition of

and (10), for any

, we have

(48)

Since

(49)

and

(50)

as well as

, so we have

(51)

thus

(52)

Taking the limit at both ends of the inequality (48) and using the continuity of the inner product, for any

, we have

(53)

the variational inequalities demonstrate that

(54)

it means that the limit points

of any convergent subsequence generated by

is an optimal point of the following optimization problem

(55)

Now we set

and define

with

and

. And we define

, and

with respect to the feasible set

, and

, then we have

(56)

According to the lemma 3.1, we have

(57)

where

denotes the number of elements of

.

4. Numerical Experiments

In this section, we present a numerical experiment to show the efficiency of the CIRLS algorithm for solving problem (3). We compare the performance of our algorithm with IRLS on the data generated from the following model. In the test, we randomly choose Nin inliers sampled from the d-dimensional Multivariate Normal distribution

on subspace L and add Nout outliers sampled from a uniform distribution on

, we also add a Gaussian noise

. The experiment is performed in R and all the experimental results were averaged over 10 independent trials.

The parameters were set the same as [12] that

,

and

, we choose

from

and we set

in general. All the

other parameters of the two algorithms were set to be the same, Nout means the number of outliers and Nin is the number of inliers, D is the ambient dimension and d is the subspace dimension we want. From the model above, we get the data sets with values in the table below, then we calculate the iterative number

![]()

Table 1. The iterative number of the CIRLS and IRLS for different dimension.

with the two algorithms through the data sets and the given parameters. The results are shown in Table 1.

We focus on the convergence speed of the two algorithms. Table 1 reports the numerical results of the two algorithms for different space dimension. From the result, we can see that in different dimension, the CIRLS algorithm performs better than IRLS algorithm in convergent efficiency.

5. Conclusion

In this paper, we propose an efficient continuous iteratively reweighted least squares algorithm for solving REAPER problem, and we prove the convergence of the algorithm. In addition, we present a bound between the convergent limit and the optimal point of REAPER problem. Moreover, in the experiment part, we compare the algorithm with the IRLS algorithm to show that our algorithm is convergent and performs better than IRLS algorithm in the rate of convergence.