Combining Expected Utility and Weighted Gini-Simpson Index into a Non-Expected Utility Device

1. Introduction

Expected utility theory may be considered to have been born in 1738, in connection with the general problem that choosing among alternatives implies a consistent set of preferences that can be described by attaching a numerical value to each, designated its utility; in addition, choosing among alternatives involving risk entails selecting the one for which the expected utility is highest (e.g. [1] ). As Weirich [2] points out, such a statement inherits from the theory of rationality a collection of problems concerning the evaluation of acts with respect to information. It is useful to distinguish decisions under risk, meaning circumstances or outcomes with known probabilities, from situations of uncertainty, where probabilities are not known (e.g. [3] ). Shannon entropy [4] measures the uncertainty of a random variable and, for example, an entropy-based risk measure for financial asset pricing is claimed to be more precise than other models [5] . A recent review of applications of entropy in finance, mainly focused on portfolio selection and asset pricing but also on decision theory, can be found in [6] .

This work is an analogous development of a recently published paper that discussed an expected utility and weighted entropy framework, with acronym EU-WE [7] . We shall show that the claimed analogy is well founded, as both weighted Shannon entropy and the weighted Gini-Simpson index may be considered cases of generalized useful information measures. Hence, the conceptual framework discussed and elucidated in this paper is referred to with the acronym EU-WGS and, as will be justified later, consists of another form of mean contributive value of a finite lottery in the context of decision theory.

Combining the concepts of expected utility and some measure of variability of the probability score, thereby generating utility functions that are nonlinear in the probabilities, is not an innovative method, and we can identify an example concerning meteorological forecasts dating back to 1970 [8] . Those approaches were later merged under the name of “non-expected utility” methods in the 1980s and consist of different conceptual types; the one we shall be dealing with falls into the category of decision models with decision weights or non-additive probabilities, also named capacities. A decision-making model based on expected utility and entropy (EU-E), introducing a risk tradeoff factor, was discussed by Yang and Qiu [9] , where Shannon entropy measures the objective uncertainty of the corresponding set of states, or its variability; recently, the authors reframed their model into a normalized expected utility-entropy measure of risk [10] , allowing for the comparison of acts or choices where the numbers of states are quite different.

First, we shall present a synthetic review of the theoretical background anchored in two scientific fields: expected or non-expected utility methods and generalized weighted entropies or useful information measures. Then, we shall merge the two conceptual fields into a mathematical device that combines tools from each, study it analytically and discuss the main issues that such a procedure entails. The spirit in which this paper is written is neither normative nor descriptive; instead, it is conceived as a heuristic approach to a decision procedure tool whose final judge will be the decision maker.

2. Theoretical Background

2.1. Expected and Non-Expected Utility Approaches

The concept of “expected utility” is one of the main pillars of Decision Theory and Game Theory, going back at least to 1738, when Daniel Bernoulli proposed a solution to the St. Petersburg paradox using logarithms of the values at stake, thereby making the numerical series associated with the calculation of the mean value convergent (there referred to as “moral hope”). Bernoulli [11] explicitly stated that the determination of the value of an item should not be based on its price but rather on the utility that it produces, the marginal utility of money being inversely proportional to the amount one already has. Bernoulli's approach, considered the first statement of Expected Utility Theory (EUT), presupposes the existence of a cardinal utility scale, and that remained an obstacle until the theme was revived by the remarkable work of John von Neumann and Oskar Morgenstern in 1944, showing that the expected utility hypothesis could be derived from a set of axioms on preference [12] , considering the utilities experienced by one person and a correspondence between utilities and numbers, involving complete ordering and the algebra of combining. Nevertheless, based on their theorem one is restricted to situations in which probabilities are given (e.g. [13] ).

Since that time there have been innumerable contributions on the theme. For instance, Alchian [14] outlines the issue by stating that if, in a given context, it is possible to assign numerical values to the different competing entities, then a selection process of rational choices is made to maximize utility, and one can say that the normal form of expected utility reduces to the calculation of the average value of a pattern of preferences expressed by limited numerical functions. But objections concerning the adherence of EUT to empirical evidence soon appeared, namely the Allais paradox, first published in 1953, showing that individuals' choices in many cases collided with what was predicted by the theory. One reason is that expected utility devices associated with lotteries do not take into account the dispersion of the utilities around their mean values; another is that, in general, people overweight positive outcomes that are considered certain compared to outcomes which are merely probable (e.g. [15] for a review).

Subjective expected utility, having a first cornerstone in the works of de Finetti and Ramsey [16] , both published in 1931, followed by a further substantial development with Savage in 1954, was regarded by most decision analysts as the preferred normative model for how individuals should make decisions under uncertainty, eliciting vectors of probabilities given the preferences over outcomes; but that approach was also shown to be violated in empirical situations, as illustrated for example by the Ellsberg paradox published in 1961. Other paradoxes were mentioned later, such as those referred to by Kahneman and Tversky concerning prospect theory [17] , where the carriers of value are changes in wealth or welfare rather than final states. Machina [18] highlighted that the independence axiom of EUT tends to be systematically violated in practice and concluded that the main concepts, results and tools of expected utility analysis may be derived from the much weaker assumption of smoothness of preferences over alternative probability distributions; this remarkable work outlined the scope of generalized expected utility analysis or non-expected utility frameworks. Other methods were proposed and used, such as mean-variance analysis (e.g. [19] [20] ); based on this type of method and using mean absolute deviation instead of standard deviation, Frosini [21] recently presented a discussion revisiting the Borch paradox linked to a general criterion of choice between prospects.

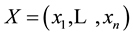

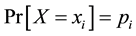

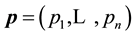

Here we will be focused in lotteries, a concept we shall retain (e.g. [22] ): a lottery is defined as a list or finite collection of simple consequences or outcomes  with associated, usually unknown, probabilities stated as

with associated, usually unknown, probabilities stated as  for

for  completed with the standard normalization conditions

completed with the standard normalization conditions  and

and  defining a

defining a  simplex

simplex  and denoting a vector

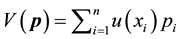

and denoting a vector . The axioms of EUT with the most usual version―ordering, continuity and independence―allow for preferences over lotteries to be represented by the maximand functional

. The axioms of EUT with the most usual version―ordering, continuity and independence―allow for preferences over lotteries to be represented by the maximand functional , where

, where  is a utility function mapping the set of consequences with image conceived as a set of real numbers

is a utility function mapping the set of consequences with image conceived as a set of real numbers  with

with  for

for . For simplicity of notation we shall write a discrete random variable representing a finite lottery denoted as

. For simplicity of notation we shall write a discrete random variable representing a finite lottery denoted as , from what follows that expected utility is therefore evaluated like

, from what follows that expected utility is therefore evaluated like .

.

The geometry of $u(\cdot)$, supposed monotonic, has a simple behavioral interpretation under the EUT axioms, whereby concavity implies risk aversion (such as the logarithm function used by Bernoulli) and convexity entails risk-prone behavior by an individual agent. The Arrow-Pratt measure (e.g. [23] ) is commonly used to assess risk-averse or risk-prone behavior. Recently, Baillon et al. [24] presented a general and simple technique for comparing the concavity of different utility functions, isolating its empirical meaning in EUT, and an example of a concave utility function used to assess the optimal expected utility of wealth in the scope of the insurance business can be found in [25] .

As Shaw and Woodward [26] say, focusing on the issue of natural resources management, the problem in classical utility theory is that the optimization of the models may have to accommodate preferences that are nonlinear in the probabilities. There are many approaches with this perspective, known at least since Edwards, in 1955 and 1962 [27] , discussed in parallel the utility theory of Kurt Lewin and the theory of subjective probability of Francis Irwin, introducing the concept of decision weights instead of probabilities; the Lewin utility theory was referred to as anchored in the concept that an outcome which has a low probability will, by virtue of its rarity, have a higher utility value than the same outcome would have if it had a high probability.

A substantial review was made by Starmer [28] under the name of non-expected utility theory, the case of subjective probabilities being framed within the conventional strategy approach focused on theories with decision weights, in particular the simple decision weight utility model, where individuals concerned with lotteries are assumed to maximize the functional $V(\mathbf{p}) = \sum_{i=1}^{n} w(p_i)\, u_i$; the probability weighting function $w(\cdot)$ transforms the individual probabilities of each consequence into weights and, in general, it is assumed that $w(\cdot)$ is a continuous non-decreasing function with $w(0) = 0$ and $w(1) = 1$ (e.g. [13] [28] [29] ); decision weights are also named capacities if additionally they satisfy monotonicity with respect to set inclusion. An example of a decision weight procedure concerning the assessment of preferences for environmental quality of water under uncertainty is outlined in [30] .
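As a minimal sketch of the simple decision weight utility model just described, the functional can be written as a sum of weighted utilities. The function name `decision_weight_utility`, the sample lottery and the square-root weighting are illustrative assumptions, not taken from the cited works; the square root is used only because it is continuous, non-decreasing and satisfies $w(0) = 0$ and $w(1) = 1$.

```python
import math
from typing import Callable, Sequence


def decision_weight_utility(
    p: Sequence[float],
    u: Sequence[float],
    w: Callable[[float], float],
) -> float:
    """Simple decision weight utility: sum of w(p_i) * u_i over the outcomes."""
    return sum(w(p_i) * u_i for p_i, u_i in zip(p, u))


# Hypothetical lottery: probabilities on the simplex and their utilities.
p = [0.5, 0.3, 0.2]
u = [10.0, 4.0, 1.0]

# The identity weighting w(q) = q recovers plain expected utility.
expected = decision_weight_utility(p, u, lambda q: q)

# Illustrative concave weighting (an assumption): w(q) = sqrt(q),
# continuous, non-decreasing, with w(0) = 0 and w(1) = 1.
weighted = decision_weight_utility(p, u, math.sqrt)

print(expected, weighted)
```

With positive utilities, any weighting that lies above the identity on the unit interval yields a value at least as large as expected utility, which is the sense in which such weights overweight less likely outcomes.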

There are many other approaches to overcoming the limitations of the independence axiom; for example, Hey and Orme [31] compared traditional expected utility and non-expected utility methods with a total of 11 types of preference functionals, evaluating the trade-off between explaining observed differences of the data relative to the models and losing predictive power. Recently, reasoning about decision-making with catastrophic risks motivated the incorporation of a new axiom named sensitivity to rare events [32] . But simple decision weight utility modeling is the conceptual type of non-expected utility methods that is relevant in this paper.

2.2. Generalized Useful Information Measures and Weighted Gini-Simpson Index

The quantitative-qualitative measure of information generalizing Shannon entropy characterized by Belis and Guiasu [33] is additive and may be associated with a utility information scheme, anchored in a finite sample space, establishing that an elementary event $E_i$ occurring with (objective) probability $p_i$ entails a positive (subjective) utility $u_i > 0$. Thus, retrieving the discrete random variable we denoted previously as $U$ and identifying the event $E_i$ with the outcome $x_i$ of utility $u_i$, it follows that the mathematical expectation or mean value of the quantity $-u_i \log p_i$ is evaluated with the standard formula $H_u(\mathbf{p}) = -\sum_{i=1}^{n} u_i p_i \log p_i$ and is usually referred to as weighted (Shannon) entropy. This mathematical device was further discussed and studied analytically by Guiasu in 1971 [34] , considered as a measure of uncertainty or information supplied by a probabilistic experiment depending both on the probabilities of events and on corresponding qualitative positive weights. The functional $H_u(\mathbf{p})$ was also named useful information (e.g. [35] ). At least since the 1980s weighted entropy has been used in economic studies concerning investment and risk [36] , and that kind of approach continues to be used in financial risk assessment and security quantification (e.g. [37] -[40] ). Also, new theoretical developments concerning the mathematical properties of weighted entropy are available (e.g. [41] ).

In 1976, Emptoz (quoted in Aggarwal and Picard [42] ) introduced the entropy of degree $\alpha$ of an experiment, outlined with the same premises of the information scheme referred to above and defined as:

$$H^{\alpha}(\mathbf{p}) = \frac{1}{1 - 2^{1-\alpha}} \sum_{i=1}^{n} u_i p_i \left(1 - p_i^{\alpha-1}\right), \quad \alpha \neq 1$$

Using l’Hôpital’s rule it is easy to prove the limit $\lim_{\alpha \to 1} H^{\alpha}(\mathbf{p}) = -\sum_{i=1}^{n} u_i p_i \log_2 p_i$, thus allowing for the extension by continuity to the case $\alpha = 1$, hence retrieving weighted Shannon entropy (with base-2 logarithms). In 1978, Sharma et al. [43] discussed a non-additive information measure generalizing the structural $\beta$-entropy previously studied by Havrda and Charvat [44] ; they named their mathematical device “generalized useful information of degree $\beta$”, relative to the utility information scheme stated above and denoted as:

$$H_{\beta}(\mathbf{p}) = \frac{1}{2^{1-\beta} - 1} \sum_{i=1}^{n} u_i p_i \left(p_i^{\beta-1} - 1\right), \quad \beta \neq 1$$

The formula above denotes exactly the same entity as the entropy of degree $\alpha$ of Emptoz, which we can check by making α = β and rearranging the terms. Hence, the result $\lim_{\beta \to 1} H_{\beta}(\mathbf{p}) = -\sum_{i=1}^{n} u_i p_i \log_2 p_i$ also holds, and when $\beta = 2$ we get $H_{2}(\mathbf{p}) = 2 \sum_{i=1}^{n} u_i p_i (1 - p_i)$, which we can recognize as the “double” weighted Gini-Simpson index, as we shall see; the authors interpret the number $\beta$ as a flexibility parameter, exemplifying its semantic content either as an environmental factor or as a value of “information consciousness” in aggregating financial accounts.
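The two special cases discussed above can be checked numerically. The sketch below assumes that the generalized useful information of degree $\beta$ carries the normalizing factor $(2^{1-\beta} - 1)^{-1}$, which is the form consistent with the “double” weighted Gini-Simpson result at degree 2; the function names and the sample lottery are hypothetical.

```python
import math


def useful_information(p, u, beta):
    """Generalized useful information of degree beta (beta != 1), assumed form."""
    norm = 2.0 ** (1.0 - beta) - 1.0
    total = sum(u_i * p_i * (p_i ** (beta - 1.0) - 1.0) for p_i, u_i in zip(p, u))
    return total / norm


def weighted_gini_simpson(p, u):
    """Weighted Gini-Simpson index: sum of u_i * p_i * (1 - p_i)."""
    return sum(u_i * p_i * (1.0 - p_i) for p_i, u_i in zip(p, u))


def weighted_shannon(p, u):
    """Weighted Shannon entropy with base-2 logarithms."""
    return -sum(u_i * p_i * math.log2(p_i) for p_i, u_i in zip(p, u) if p_i > 0)


# Hypothetical lottery used only for the check.
p = [0.5, 0.3, 0.2]
u = [10.0, 4.0, 1.0]

h2 = useful_information(p, u, 2.0)            # degree 2
near_one = useful_information(p, u, 1.0 + 1e-6)  # degree close to 1

print(h2, 2.0 * weighted_gini_simpson(p, u))
print(near_one, weighted_shannon(p, u))
```

The first printed pair should coincide, and the second pair should agree to several decimal places, illustrating the extension by continuity to degree 1.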

The weighted Gini-Simpson (WGS) index was outlined by Guiasu and Guiasu [45] in the scope of conditional and weighted measures of ecological diversity, denoted as $GS_u(\mathbf{p}) = \sum_{i=1}^{n} u_i p_i (1 - p_i)$, where the positive weights $u_i > 0$ reflect additional information concerning the importance (abundance, economic significance or other relevant quantity) of the $n$ species in an ecosystem represented by their proportions or relative frequencies $p_i$ (for $i = 1, \ldots, n$), associated with the closure condition $\sum_{i=1}^{n} p_i = 1$, hence defining the standard $(n-1)$ simplex. Whether we use the objective or physical concept of probability, practically as a synonym for the proportion of successes in trials governed by the laws of large numbers as discussed in Ramsey [16] (outlined subsequently as intersubjective probabilities by Anscombe and Aumann [46] ), or even subjective probability measuring the degree of belief of an agent, we can provide the result $GS_u(\mathbf{p}) = \tfrac{1}{2} H_{2}(\mathbf{p})$ using the formulas for generalized useful information of degree $\beta = 2$ previously referred to by Sharma et al. [43] or the entropy of degree $\alpha = 2$ of Emptoz (see [42] ). Guiasu and Guiasu proceeded with several developments of the WGS index as a relevant measure in the context of ecological diversity assessment (e.g. [47] [48] ).

The formulation and analytical study of weighted Gini-Simpson index was first introduced by Casquilho [49] within a set of indices built as mathematical devices applied to discuss compositional scenarios of landscape mosaics (or ecomosaics), using either ecological or economic weights―there referred to as positive characteristic values of the habitats―in order to assess the relevance of the actual extent of the components, as compared with the optimal solutions of the different indices.

One main feature of the WGS index we must keep in mind is that we have $GS_u(\mathbf{p}) \geq 0$, as a diversity measure should behave, because the WGS index is composed of a sum of non-negative terms, attaining the null value at each vertex of the simplex ($GS_u(\mathbf{p}) = 0$ if $p_i = 1$), meaning that just that component is present and all the others are absent. In the context of lottery theory, $p_i = 1$ entails that there exists only a single consequence, the result of a certain event; if we were dealing with prospect theory, the result $p_i = 1$ would have a semantic shift and would mean that there is a single pure strategy.

The weighted Gini-Simpson index is used in several domains besides ecological and phylogenetic assessments; focusing on economic applications, we have examples such as estimating optimal diversification in allocation problems [50] and other developments concerning the assessment of ecomosaic composition with forest habitats [51] [52] . In [49] [51] it is shown that the weighted Gini-Simpson index can be interpreted as a sum of variances of interdependent Bernoulli variables, thus becoming a measure of the variability of the system and enabling a criterion for its characterization.

3. Combining Expected Utility and Weighted Gini-Simpson Index

3.1. Definition and Range

In what follows, the simple lottery $U$ is conceived as having fixed strictly positive utilities¹ $u_i > 0$ for $i = 1, \ldots, n$ and $u_i \neq u_j$ if $i \neq j$, while the variables are the probabilities defined in the standard $(n-1)$ simplex: $\Delta^{n-1} = \{\mathbf{p} : p_i \geq 0,\ \sum_{i=1}^{n} p_i = 1\}$.

In this setting used to characterize the discrete random variable $U$, we shall denote the EU-WGS mathematical device as $V(\mathbf{p})$, in analogy with the formula of the EU-WE framework discussed in [7] , to be defined as:

$$V(\mathbf{p}) = E[U] + GS_u(\mathbf{p}) \tag{1}$$

Equation (1) therefore has the full expression $V(\mathbf{p}) = \sum_{i=1}^{n} p_i u_i + \sum_{i=1}^{n} u_i p_i (1 - p_i)$. We see that we can also interpret it as a preference function under the scope of non-expected utility theory with the characteristic formula $V(\mathbf{p}) = \sum_{i=1}^{n} w(p_i)\, u_i$, where the decision weights defined as $w(p_i) = p_i (2 - p_i)$ for $i = 1, \ldots, n$ verify the standard conditions $w(0) = 0$ and $w(1) = 1$; also, it is easily seen that the function $w(\cdot)$ is strictly increasing in the open interval $(0, 1)$ and concave, since differentiation gives $w'(p_i) = 2(1 - p_i) > 0$ and $w''(p_i) = -2 < 0$.
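A short numerical sketch may help to see that the two readings of Equation (1) coincide. It assumes the device is the plain sum of expected utility and the weighted Gini-Simpson index, so that the decision weights are $w(p_i) = p_i(2 - p_i)$; the function names and the sample lottery are hypothetical.

```python
import math


def expected_utility(p, u):
    """Expected utility: sum of p_i * u_i."""
    return sum(p_i * u_i for p_i, u_i in zip(p, u))


def weighted_gini_simpson(p, u):
    """Weighted Gini-Simpson index: sum of u_i * p_i * (1 - p_i)."""
    return sum(u_i * p_i * (1.0 - p_i) for p_i, u_i in zip(p, u))


def eu_wgs(p, u):
    """EU-WGS device as expected utility plus weighted Gini-Simpson index."""
    return expected_utility(p, u) + weighted_gini_simpson(p, u)


def decision_weight(q):
    """Decision weight w(q) = q * (2 - q); w(0) = 0 and w(1) = 1."""
    return q * (2.0 - q)


def eu_wgs_from_weights(p, u):
    """Same device written as a decision weight utility functional."""
    return sum(decision_weight(p_i) * u_i for p_i, u_i in zip(p, u))


# Hypothetical lottery used only for the check.
p = [0.5, 0.3, 0.2]
u = [10.0, 4.0, 1.0]

v_sum = eu_wgs(p, u)
v_weights = eu_wgs_from_weights(p, u)
print(v_sum, v_weights)
```

Both values agree because $p_i + p_i(1 - p_i) = p_i(2 - p_i)$ term by term.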

Still, we can proceed with the following interpretation: denoting $\tilde{U}$ a random variable with values $\tilde{u}_i = u_i (2 - p_i)$ for $i = 1, \ldots, n$, we have an expression for utilities in the sense of Lewin (referred to in [27] ), since when the event is quite rare we have $p_i \to 0$, entailing $\tilde{u}_i \to 2 u_i$, and, on the contrary, when the event is about to be certain we get $p_i \to 1$ and $\tilde{u}_i \to u_i$; hence, in this approach, the utility values $\tilde{u}_i$ are also a function of the probabilities, being enhanced by rarity as claimed in that theory, and with this interpretation we compute the mean value as $E[\tilde{U}] = \sum_{i=1}^{n} p_i \tilde{u}_i = V(\mathbf{p})$.

The function $V(\mathbf{p})$ is differentiable in the open simplex, as it is composed of a sum of quadratic elementary real functions, and is thus continuous in its domain, which is a compact set (a closed and bounded subset contained in $\mathbb{R}^n$); hence the Weierstrass theorem ensures that $V(\mathbf{p})$ attains minimum and maximum values. It is also easily seen that $V(\mathbf{p})$ is a concave function: the simplest way to show this is to recall that the weighted Gini-Simpson index was previously proved to be a concave function (e.g. [45] [49] ), and adding the bilinear function $E[U] = \sum_{i=1}^{n} p_i u_i$ (linear in $\mathbf{p}$ for fixed utilities) preserves concavity.

Concerning the evaluation of the minimum point, denoting $u_k = \min\{u_1, \ldots, u_n\}$ we have ensured that $E[U] \geq u_k$ because $\sum_{i=1}^{n} p_i u_i \geq u_k \sum_{i=1}^{n} p_i = u_k$, and we have $GS_u(\mathbf{p}) = 0$ at every vertex of the simplex as noticed before, $GS_u(\mathbf{p})$ being strictly positive otherwise; hence, $u_k$ is the minimum value, attained at the corresponding vertex of the simplex, this vertex being the minimum point. Though we do not yet know the maximum value of $V(\mathbf{p})$, we know it exists, so if we denote it as $V^{*}$ we can write the range, or image set, of the function $V(\mathbf{p})$, a closed real interval defined as:

$$V(\Delta^{n-1}) = [u_k,\ V^{*}] \tag{2}$$

3.2. Searching the Maximum Point

Searching for the maximum point corresponding to the maximum value $V^{*}$, we shall proceed in stages: first, we build the auxiliary Lagrange function $L(\mathbf{p}, \lambda) = \sum_{i=1}^{n} u_i p_i (2 - p_i) + \lambda \left(1 - \sum_{i=1}^{n} p_i\right)$ and then we compute the partial derivatives as follows: $\partial L / \partial p_i = 2 u_i (1 - p_i) - \lambda$; solving the equation $\partial L / \partial p_i = 0$ we obtain the critical values of the auxiliary Lagrange function, evaluated as $p_i = 1 - \lambda / (2 u_i)$ for $i = 1, \ldots, n$. As the equation $\partial L / \partial \lambda = 0$ entails the condition $\sum_{i=1}^{n} p_i = 1$, merging the results and simplifying we get $n - (\lambda / 2) \sum_{i=1}^{n} (1/u_i) = 1$, and solving this equation for the Lagrange multiplier $\lambda$ we obtain the result:

$$\lambda^{*} = \frac{2 (n - 1)}{\sum_{i=1}^{n} (1/u_i)} \tag{3}$$

Using Equation (3) combined with the equation $p_i = 1 - \lambda / (2 u_i)$ we can substitute, writing the formula for the candidates to optimal coordinates of the maximum point:

$$p_i^{*} = 1 - \frac{n - 1}{u_i \sum_{j=1}^{n} (1/u_j)}, \quad i = 1, \ldots, n \tag{4}$$
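The closed-form candidates can be evaluated directly. The sketch below assumes that the Lagrange multiplier is $2(n-1)/\sum_j (1/u_j)$ and that the candidate coordinates are $1 - \lambda/(2u_i)$, as obtained from the first-order conditions of the device with decision weights $p_i(2 - p_i)$; the function names and the sample utilities are hypothetical.

```python
import math


def lagrange_multiplier(u):
    """Critical multiplier: 2 * (n - 1) divided by the sum of reciprocal utilities."""
    n = len(u)
    return 2.0 * (n - 1) / sum(1.0 / u_i for u_i in u)


def candidate_probabilities(u):
    """Candidate coordinates p_i = 1 - lambda / (2 * u_i); may be infeasible."""
    lam = lagrange_multiplier(u)
    return [1.0 - lam / (2.0 * u_i) for u_i in u]


# Two outcomes: the candidates reduce to u_i / (u_1 + u_2).
p_two = candidate_probabilities([3.0, 2.0])

# Three outcomes whose utilities are close enough for all candidates to be positive.
p_three = candidate_probabilities([4.0, 3.0, 2.0])

print(p_two, p_three, sum(p_three))
```

The candidates always sum to one by construction, but nothing in the formula forces them to be non-negative, so their signs have to be checked separately.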

As is known, the critical value of the Lagrange multiplier reflects the importance of the constraint in the problem, and we can check directly from Equation (3) that we have $\lambda^{*} \geq 0$; for the trivial case of the certain consequence lottery ($n = 1$ with $p_1 = 1$) we get $\lambda^{*} = 0$ and Equation (4) also gives $p_1^{*} = 1$, as it should; when $n = 2$ we obtain $\lambda^{*} = 2 u_1 u_2 / (u_1 + u_2)$ and we can proceed to the evaluation of the critical coordinates, obtaining:

$$p_1^{*} = \frac{u_1}{u_1 + u_2}, \qquad p_2^{*} = \frac{u_2}{u_1 + u_2} \tag{5}$$

We can also check that the result $\sum_{i=1}^{n} p_i^{*} = 1$ holds, confirming that the candidates to the maximum point defined in Equations (4) are in the hyperplane of the simplex. But when $n > 2$ we have to be careful, because the auxiliary Lagrange function did not include the non-negativity constraints $p_i \geq 0$, thus allowing for non-feasible solutions. Explicitly, for $n = 3$ and the set of utilities $\{u_1, u_2, u_3\}$ with $u_1 > u_2 > u_3$, we can check directly, manipulating Equations (4), that if the inequality $u_3 < u_1 u_2 / (u_1 + u_2)$ holds, then we get $p_3^{*} < 0$.
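A small numerical case illustrates how a negative candidate arises with three outcomes. The sketch assumes the candidate formula $p_i = 1 - (n-1) / (u_i \sum_j 1/u_j)$; the function name and the utilities (10, 4, 1) are hypothetical and were chosen so that the smallest utility lies below $u_1 u_2 / (u_1 + u_2)$.

```python
import math


def candidate_probabilities(u):
    """Candidates from the Lagrange conditions, ignoring non-negativity."""
    n = len(u)
    reciprocal_sum = sum(1.0 / u_i for u_i in u)
    return [1.0 - (n - 1) / (u_i * reciprocal_sum) for u_i in u]


# Hypothetical utilities in decreasing order.
u = [10.0, 4.0, 1.0]

# Threshold below which the third candidate becomes negative.
threshold = u[0] * u[1] / (u[0] + u[1])

candidates = candidate_probabilities(u)
print(threshold, candidates, sum(candidates))
```

Here the third utility is smaller than the threshold, so the third candidate is negative even though the three candidates still sum to one; the point lies on the hyperplane but outside the simplex.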

Thus, combining $p_i^{*} > 0$ in Equation (4) and Equation (3) we obtain the condition $u_i > \lambda^{*} / 2$ to get feasible values, and we deduce the general feasibility conditions, which turn out to be closely related to the harmonic mean of the utilities; Equations (4) are directly applicable to evaluate the optimal point when all the relations defined by Inequalities (6) are verified:

$$u_i > \frac{n - 1}{\sum_{j=1}^{n} (1/u_j)}, \quad i = 1, \ldots, n \tag{6}$$

Otherwise, we have to proceed using an algorithm, as will be shown next. But we can already notice, from direct inspection of Equation (4) and Inequality (6), that the candidates to optimal coordinates would be the same if we used a positive linear transformation of the utilities such as $u_i' = a\, u_i$ with $a > 0$, meaning that the maximum point of $V(\mathbf{p})$ is insensitive to a positive linear transformation of the original values.
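The invariance can be verified in one line. The derivation below assumes that the candidate coordinates have the form $p_i^{*} = 1 - (n-1) / (u_i \sum_j 1/u_j)$ and that the transformation is a pure rescaling by a factor $a > 0$ (the symbol $a$ is introduced here for illustration only).

```latex
\[
u_i' = a\,u_i,\quad a > 0
\;\Longrightarrow\;
p_i^{*}(u')
  = 1 - \frac{n-1}{a\,u_i \sum_{j=1}^{n} \dfrac{1}{a\,u_j}}
  = 1 - \frac{n-1}{u_i \sum_{j=1}^{n} \dfrac{1}{u_j}}
  = p_i^{*}(u).
\]
```

The factor $a$ cancels between $a\,u_i$ and the sum of reciprocals, so the same cancellation applies to the feasibility conditions; an additive shift of the utilities would not cancel in this way.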

3.3. Algorithms for Obtaining the Maximum Point

It must be noted that we are certain that the maximum point exists, as implied by the Weierstrass theorem. The problem we are dealing with has an old root, as Jaynes [53] had already noticed in 1957, saying that the negative term $-\sum_{i} p_i^2$ has many of the qualitative properties of Shannon’s entropy but has the inherent difficulty that the results obtained with the Lagrange multiplier method do not, in general, satisfy the feasibility conditions $p_i \geq 0$.

Here, we shall first choose a forward selection procedure, since we know that when ![]() Equations (5) ensure proper optimal solutions. Also, direct inspection of Equations (4) shows that the value ![]() increases with the corresponding ![](), since the term ![]() is common to all critical values; in fact, for a fixed j, when ![]() the limit value in Equation (4) is ![](), and, conversely, when ![]() we get the result ![]().

So, given the set of utilities defined in ![](), we begin by ordering the set, so that we are now dealing with an act described as a lottery whose outcomes or utilities are real numbers ordered decreasingly (e.g. [54]): ![](). Since it is certain that ![]() and ![]() imply strictly positive ![]() and ![](), the problem begins with the evaluation of ![](). Does ![]() verify the condition ![]()?

If not, stop, set ![]() and evaluate the optimal solution with Equations (5), all the other components of higher order having null probability or proportion. If it does verify the feasibility condition, proceed, now with condition (6) restated as ![](), and pose the same recurrent question until there is an order ![]() for which some of the Inequalities (6) fail; then set ![](), as well as all the other proportions up to order (n), and evaluate the optimal solutions with the first k ordered utilities ![]() using Equations (4). The dimension of the problem is reset as ![](), all the other proportions being null.
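The forward selection procedure can be sketched in Python as follows. This is a minimal illustration, not the reference implementation: it assumes strictly positive utilities and assumes that the maximand is V(p) = Σ u_i p_i (2 − p_i), so that Equations (4) on the first k ordered utilities read p_i = 1 − (k − 1)/(u_i Σ_{j≤k} 1/u_j) and the feasibility test for a newly admitted utility u_k is u_k > (k − 1)/Σ_{j≤k} 1/u_j. The function name `forward_selection` and the sample input are hypothetical.

```python
def forward_selection(utilities):
    """Forward selection for the ASSUMED functional sum(u_i * p_i * (2 - p_i)).

    Returns the utilities sorted in decreasing order and the matching
    optimal proportions (zeros for the discarded components).
    Utilities are assumed to be strictly positive.
    """
    u = sorted(utilities, reverse=True)
    n = len(u)
    if n == 1:
        return u, [1.0]

    k = 2                              # two components are always feasible
    inv_sum = 1.0 / u[0] + 1.0 / u[1]  # running sum of reciprocals
    while k < n:
        candidate = u[k]               # next utility in decreasing order
        new_inv_sum = inv_sum + 1.0 / candidate
        threshold = k / new_inv_sum    # (k + 1 - 1) / sum of k + 1 reciprocals
        if candidate <= threshold:     # feasibility fails: stop here
            break
        inv_sum = new_inv_sum
        k += 1

    active = [1.0 - (k - 1) / (ui * inv_sum) for ui in u[:k]]
    return u, active + [0.0] * (n - k)


order, proportions = forward_selection([1, 2, 3, 4, 5])
# proportions is roughly [0.489, 0.362, 0.149, 0.0, 0.0]
```

Because the utilities are sorted decreasingly, only the newly admitted (smallest) utility needs to be tested at each stage; the larger ones satisfy the same inequality automatically.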

It must be noted that we could instead have chosen a backward elimination procedure, which gives a faster algorithm: begin with the lowest utility ![](), use Equation (4) to compute ![]() with ![](), and, if ![]() is observed, set ![]() and proceed to the evaluation of ![]() with ![](), repeating until we obtain ![](); then all the remaining variables will be positive and are evaluated with Equations (4), setting ![]().
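A matching Python sketch of the backward elimination procedure is given below. It is hedged in the same way: strictly positive utilities are assumed, and the closed form p_i = 1 − (k − 1)/(u_i Σ_{j≤k} 1/u_j) for Equations (4) is an assumption derived from a maximand of the form V(p) = Σ u_i p_i (2 − p_i). The function name `backward_elimination` is hypothetical.

```python
def backward_elimination(utilities):
    """Backward elimination for the ASSUMED functional sum(u_i * p_i * (2 - p_i)).

    Starts with all components active and drops the lowest utility while
    its trial proportion is not strictly positive.
    Utilities are assumed to be strictly positive.
    """
    u = sorted(utilities, reverse=True)
    n = len(u)
    k = n
    inv_sum = sum(1.0 / x for x in u)
    while k > 1:
        # Trial proportion of the lowest utility still in the active set.
        p_last = 1.0 - (k - 1) / (u[k - 1] * inv_sum)
        if p_last > 0:
            break                      # every remaining coordinate is positive
        inv_sum -= 1.0 / u[k - 1]      # discard the component, shrink the problem
        k -= 1

    active = [1.0 - (k - 1) / (x * inv_sum) for x in u[:k]]
    return u, active + [0.0] * (n - k)


order, proportions = backward_elimination([1, 2, 3, 4, 5])
# proportions is roughly [0.489, 0.362, 0.149, 0.0, 0.0]
```

With the same input, this sketch returns the same proportions as a forward pass would, which is what the text leads us to expect from two procedures aimed at the same maximum point.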

3.4. Numerical Example

Assume that we have the following lottery with n = 5 and ordered utilities: ![](), ![](), ![](), ![]() and ![](). The first question is whether it is true that ![](); computing the right-hand side of the inequality we obtain the numeric value 2.5532, so the condition 3 > 2.5532 holds and we proceed to the next stage: is the condition ![]() true? Computing the right-hand side of the inequality we get 2.3377, so the condition does not hold; thus we stop, set ![]() and ![](), and evaluate the optimal point with Equations (4) relative to the set ![](), the dimension of the problem being reset to ![](), with approximate values: ![](), ![]() and ![]().

Now, exemplifying the backward elimination procedure with the same utility values: first we evaluate ![](), so we have ![]() and set ![](); thus, we calculate ![](), so we also have ![]() and set ![](); next, we get ![](), a proper value, and the evaluation of the other optimal coordinates proceeds with ![](), applying Equations (4).
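The arithmetic of both passes can be retraced with exact fractions. The utility values (5, 4, 3, 2, 1) used below are an assumption: they are chosen only because, under the assumed feasibility test u_k > (k − 1)/Σ_{j≤k} 1/u_j, they reproduce the thresholds 2.5532 and 2.3377 and the comparison 3 > 2.5532 quoted above. The helper names `threshold` and `trial_last` are hypothetical.

```python
from fractions import Fraction

# ASSUMED utilities, consistent with the thresholds quoted in the text.
u = [Fraction(x) for x in (5, 4, 3, 2, 1)]


def threshold(k):
    """Assumed right-hand side (k - 1) / sum_{j<=k} 1/u_j of the test for u_k."""
    return (k - 1) / sum(1 / x for x in u[:k])


def trial_last(k):
    """Trial proportion of the lowest of the first k utilities."""
    return 1 - threshold(k) / u[k - 1]


# Forward pass: thresholds faced by the third and fourth utilities.
forward_thresholds = [float(threshold(k)) for k in (3, 4)]

# Backward pass: trial proportions with 5, 4 and 3 active components.
backward_trials = [trial_last(k) for k in (5, 4, 3)]

# Optimal point on the three retained components.
optimum = [1 - threshold(3) / x for x in u[:3]]
```

Under these assumptions the two thresholds are 120/47 and 180/77, the backward trials are negative twice and then positive, and the retained proportions are 23/47, 17/47 and 7/47, which sum to one.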

3.5. The Maximum Value

The maximum value of ![]() in itself does not seem to be relevant, because our search was attached to the evaluation of the maximum point as the criterion to find the best lottery according to the framework, but obviously it is possible to evaluate ![]() and clarify the range defined in Inequalities (2). Supposing that ![](), for ![](), are the proper non-null optimal coordinates previously evaluated, we have that ![](), but we can also calculate the maximum value from the utility values alone, as written in Equation (7), which follows from combining Equation (1) and Equation (4) and simplifying:

![](). (7)

In the case of the numerical example described above we get the number ![]().
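As a cross-check, the maximum value can be computed in two ways in Python. The sketch assumes the maximand V(p) = Σ u_i p_i (2 − p_i) and the optimal coordinates p_i = 1 − c/u_i with c = (k − 1)/Σ_{j≤k} 1/u_j; substituting gives u_i p_i (2 − p_i) = u_i − c²/u_i, hence the closed form Σ u_i − (k − 1)²/Σ 1/u_i over the k retained utilities. Whether this is the exact layout of Equation (7) cannot be confirmed here, and the utilities (5, 4, 3) are the assumed retained values of the numerical example.

```python
from fractions import Fraction

# ASSUMED retained utilities of the numerical example (k = 3).
retained = [Fraction(x) for x in (5, 4, 3)]
k = len(retained)
inv_sum = sum(1 / x for x in retained)

# Optimal coordinates under the assumed form of Equations (4).
p = [1 - (k - 1) / (x * inv_sum) for x in retained]

# Direct evaluation of the assumed functional at the optimal point.
direct_value = sum(x * pi * (2 - pi) for x, pi in zip(retained, p))

# Closed form depending on the utilities alone.
closed_form = sum(retained) - Fraction((k - 1) ** 2) / inv_sum
```

Both expressions give 324/47, approximately 6.8936, under the stated assumptions.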

4. Discussion

Going back to the beginning, we can state that the reasoned choice modeling we introduced was a sequential decision-making procedure that began with a lottery ![]() with given utilities and unknown probabilities (thus facing a problem of decision under uncertainty) and, following the maximum principle attached to function ![](), ended with a unique ![]() associated with a problem of decision under risk. The EU-WGS device discussed in this paper is suitably defined as a non-expected utility method with decision weights, a tool built by combining expected utility and the weighted Gini-Simpson index, as claimed in the title.

First, we shall focus the discussion on comparing the optimal proportions of function ![]() with those of index ![]() presented and discussed in [7]. The analogy stated in the introduction follows from the standard result that the first-order (linear) Taylor approximation of the real function ![]() is ![]() for ![](), the approximation being fair near ![](). So, the EU-WGS device outlined in this paper and the EU-WE framework referred to in [7] are intimately related and the analogy is a proper one.
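The quality of the linear approximation can be illustrated numerically. The sketch below assumes that the function in question is −ln p and that its first-order approximation around p = 1 is 1 − p, which is the standard expansion linking the Shannon term −p ln p to the Gini-Simpson term p(1 − p); the function names and the sample points are hypothetical.

```python
import math


def log_term(p):
    """Assumed entropy-side factor: -ln(p)."""
    return -math.log(p)


def linear_term(p):
    """Assumed first-order Taylor approximation of -ln(p) around p = 1."""
    return 1.0 - p


# Gap between the two factors at a few sample proportions.
gaps = {p: log_term(p) - linear_term(p) for p in (0.9, 0.5, 0.1)}
```

The gap is small close to p = 1 and grows as p approaches 0, which is consistent with the remark that the approximation is fair only near the expansion point.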

Nevertheless, there are differences, perhaps the most noticeable ones being qualitative, such as the fact that the optimal point of ![]() always remains in the interior of the simplex (![]() for ![]()), while in the case of index ![](), except for quite balanced sets of utilities that jointly verify Inequalities (6), we shall in general obtain ![]() for ![]() with ![](), all the other coordinates being null, meaning geometrically that the maximum point is located in another simplex ![]() which is a face of the original. In other words, the optimum point of ![]() proves to be more conservative relative to low utility values compared with the corresponding maximum point of ![](), which discards those cases from the composition of ![](). Also, the optimal value of the Lagrange multiplier of index ![]() evaluates as the weighted entropy of the coordinates of the optimal point, while in index ![]() the value ![]() defined in Equation (3) is closely related to the harmonic mean of the utilities. Another technical difference is that the evaluation of the maximum point of index ![]() entails solving an equation numerically, while in the case discussed here we shall in most cases have to use an algorithm, but the solutions are explicit.

Yet there is another point that deserves an explanation: it was claimed in [7] that index ![]() could be named “mean contributive value” because of the rationale, set out in more detail in [55], concerning valuation in Kant's moral philosophy. What about the maximand functional ![]()? The similarity is so compelling that we have no option but to claim it should be considered a formulation of “mean contributive value type II”, since the difference between ![]() and ![](), besides what was mentioned in the first paragraph of this section, can be traced to the numeric values of the parameters of generalized weighted entropies or useful information measures ([42] [43]), extended by continuity: ![]() index relative to the case ![]() and ![]() the semi-value obtained with ![]().

There is also another issue that demands an explanation or, at the least, to be posed straightforwardly: consider the lottery ![]() ordered in a decreasing way such that ![](); for what reason should ![]() be preferred to the certain event ![]() that maximizes traditional expected utility with ![]()? The reason that seems appropriate to answer such a question is that the maximum principle attached to function ![]() (or, equivalently, to index ![]()) contains an implicit hidden “utility value” linked to valuing diversity as opposed to exclusivity. Is that intrinsic valuation of diversity a proper issue in economic theory? That question will not be answered here; maybe the answer will be positive concerning some issues (for example in ecological economics, where the diversity of ecosystems is considered to be directly affected by their ecological richness in species and indirectly related to the properties of resilience and/or stability) and negative in other cases. As Shaw and Woodward [26] say, there may be no general theory of decision making under uncertainty.

Weirich [2] points out, concerning the discussion of generalized expected utility methods, that comprehensive rationality requires adopting the right option for the right reason. Whether these models are appropriate for grounding such a claim is a question that remains open, as we were not concerned with the moral issues that could be raised by the criteria embodied in the formulas.

Discussing the limitations of this modeling approach, we have to highlight that the weighting function ![]() used here, though verifying the standard conditions stated when defining Equation (1), does not satisfy other properties such as sub-certainty, discussed in [17]; also, quite rare events that could presumably have a very high utility value in the sense of Lewin’s conception are enhanced in this model by at most a two-fold factor, as pointed out in Section 3.1, which can be considered in some cases a significant limitation (one that did not occur, for instance, with index ![]()).

Gilboa [13] says that a decision is objectively rational if the decision maker can convince others that she is right in making it, whereas it is subjectively rational for her if others cannot convince her that she is wrong in making it, the ultimate judge of the choices remaining the decision maker. Hence, with this reference in mind, the decision to adopt the optimal result ![]() as a decision-making procedure is still under subjective assessment, and the problem is after all reframed as if the function ![]() and the corresponding maximum principle (mathematical tools embodying decision weights linked to information theory) were used to assess subjective probabilities or proportions, since it entails an implicit degree of belief that the device is suitable for the case under study.

5. Conclusions

In this work we outlined a reasoned decision-making tool combining traditional expected utility with a generalized useful information measure (a type of weighted entropy) referred to as the weighted Gini-Simpson index, thus becoming a conceptual framework with the acronym EU-WGS. This device is original and applies to simple lotteries defined with positive utilities and unknown probabilities, denoted as a real function with domain in the standard simplex.

It was shown that this mathematical device fits into the class of non-expected utility methods, of the type that uses decision weights verifying standard conditions; also, it was shown that the function can be interpreted as a mean value of a finite lottery with utilities conceived in the sense of Kurt Lewin, where the rarity of a component enhances the corresponding utility value by at most a two-fold factor. For each set of fixed positive utilities, the real function is differentiable in the open simplex and concave, having an identifiable range with a unique and global maximum point; we set out the procedure to identify the optimal point coordinates, highlighting the sequence of stages with a numeric example; also, we concluded that the maximum point does not change if the utilities are affected by a positive linear transformation.

Such a framework can be used to generate scenarios of optimal compositional mixtures relative to finite lotteries associated with prospect theory, financial risk assessment, security quantification or natural resources management. Nowadays, different entropy measures are proposed for forming and rebalancing portfolios according to optimality criteria, and some authors state that the portfolio values of the models incorporating entropies are higher than their corresponding benchmarks [56]. Also, we discussed the similarity between this EU-WGS device and a recently published EU-WE framework, denoted index ![]() (which is a particular case of a one-parameter family of models outlined and discussed in [57]), pointing out the main analogies and differences between the two, which can in any case be referred to as mean contributive values of compositional mixtures. Last, in this paper we did not address normative or descriptive challenges concerning the behavior of this decision modeling approach relative to paradoxes of expected or non-expected utility theories and adherence to empirical data, a field that remains open for future research.

Notes