Salient Region Detection and Analysis Based on the Weighted Band-Pass Features


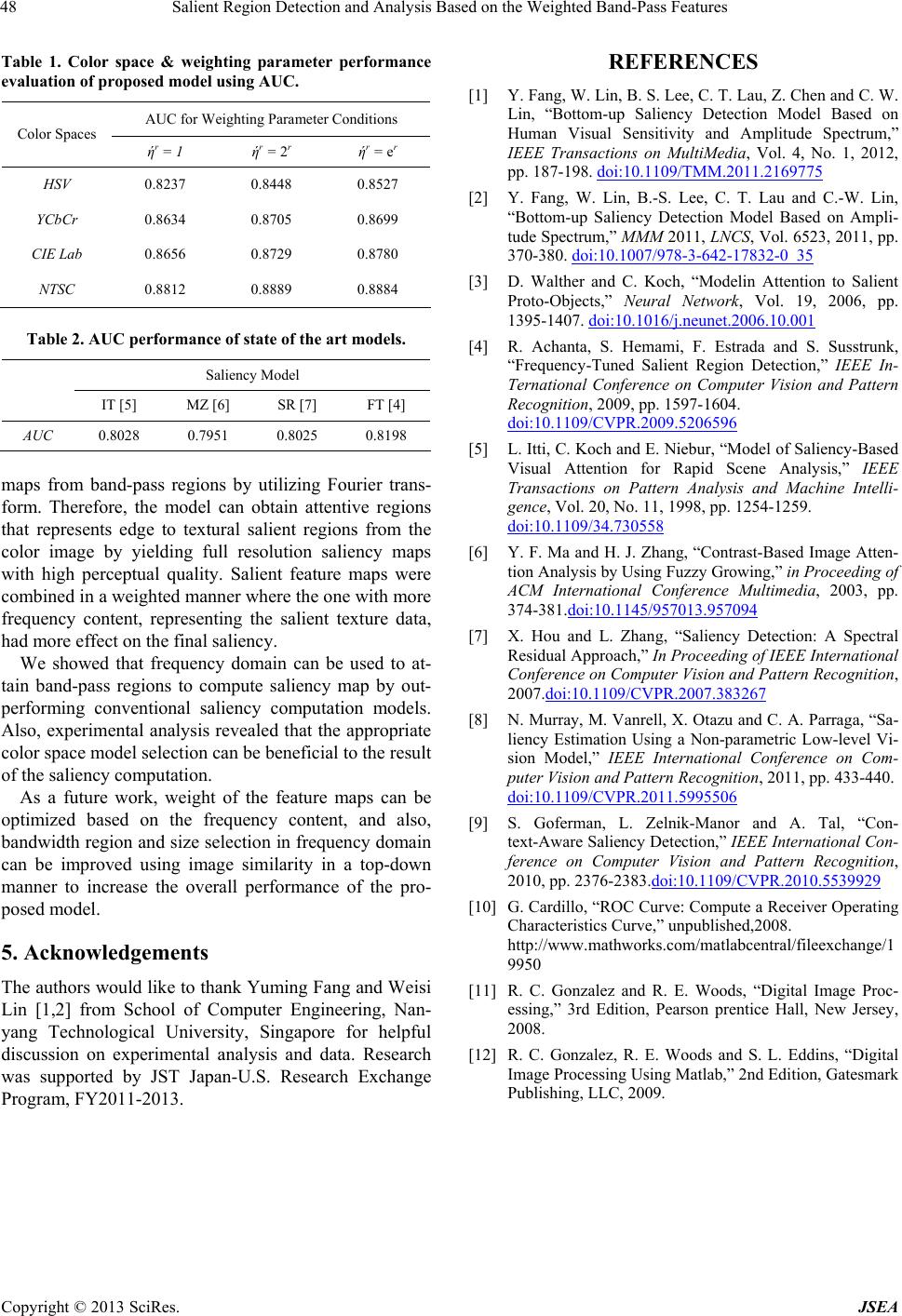

Table 1. Color space and weighting parameter performance evaluation of the proposed model using AUC.

| Color space | $\eta_r = 1$ | $\eta_r = 2^r$ | $\eta_r = e^r$ |
|---|---|---|---|
| HSV | 0.8237 | 0.8448 | 0.8527 |
| YCbCr | 0.8634 | 0.8705 | 0.8699 |
| CIE Lab | 0.8656 | 0.8729 | 0.8780 |
| NTSC | 0.8812 | 0.8889 | 0.8884 |

Table 2. AUC performance of state-of-the-art models.

| Saliency model | IT [5] | MZ [6] | SR [7] | FT [4] |
|---|---|---|---|---|
| AUC | 0.8028 | 0.7951 | 0.8025 | 0.8198 |
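Both tables rank models by the area under the ROC curve (AUC). This section does not spell out the evaluation protocol (the reference list cites a MATLAB ROC tool [10]), so the following is only a minimal sketch, assuming that every pixel of a saliency map is scored against a binary ground-truth mask of salient pixels; `saliency_auc` is a hypothetical helper name. It uses the rank-sum (Mann-Whitney) form of the AUC, which equals the area under the empirical ROC curve when tied scores are counted as one half.

```python
import numpy as np


def saliency_auc(saliency, mask):
    """ROC AUC of a saliency map scored against a binary ground-truth mask.

    Every pixel is one sample: its saliency value is the score and
    mask > 0 marks it as salient. Computed via the Mann-Whitney U
    statistic, with tied scores given their average rank.
    """
    scores = np.asarray(saliency, dtype=float).ravel()
    labels = np.asarray(mask).ravel() > 0
    n_pos = int(labels.sum())
    n_neg = labels.size - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("mask needs both salient and non-salient pixels")

    # Average 1-based rank of each distinct score value (handles ties).
    _, inverse, counts = np.unique(scores, return_inverse=True, return_counts=True)
    last = np.cumsum(counts)
    first = last - counts + 1
    ranks = ((first + last) / 2.0)[inverse]

    # U counts (salient, background) pixel pairs where the salient pixel
    # scores higher, with ties counting one half.
    u = ranks[labels].sum() - n_pos * (n_pos + 1) / 2.0
    return float(u / (n_pos * n_neg))
```

A map that ranks every salient pixel above every background pixel scores 1.0, and a constant map scores 0.5, the chance level.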

maps from band-pass regions by utilizing the Fourier transform. Therefore, the model can capture attentive regions, ranging from edges to textured salient regions, in a color image and yields full-resolution saliency maps with high perceptual quality. The salient feature maps were combined in a weighted manner, so that a map with more frequency content, representing salient texture data, had more effect on the final saliency map.
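The band-pass idea summarized above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the ring-shaped band layout, the number of bands, the exclusion of the DC term, the use of the magnitude of the inverse transform as each feature map, and the final rescaling are all assumptions made here for illustration, and the function name `band_pass_saliency` is hypothetical. The three weighting options mirror the conditions in Table 1, read here as $\eta_r = 1$, $\eta_r = 2^r$ and $\eta_r = e^r$, with the band index $r$ assumed to increase with frequency.

```python
import numpy as np


def band_pass_saliency(image, num_bands=4, weighting="exp"):
    """Hypothetical weighted band-pass saliency sketch (not the authors' code).

    image: H x W array or H x W x C array with one channel per color component.
    weighting: "uniform", "pow2" or "exp", i.e. eta_r = 1, 2**r or e**r.
    Returns an H x W saliency map rescaled to [0, 1].
    """
    img = np.asarray(image, dtype=float)
    if img.ndim == 2:
        img = img[:, :, None]
    h, w, c = img.shape

    # Radial frequency of every FFT bin in cycles/pixel (0 at DC).
    fy = np.fft.fftfreq(h)[:, None]
    fx = np.fft.fftfreq(w)[None, :]
    radius = np.sqrt(fx ** 2 + fy ** 2)
    # Assumed band layout: equal-width rings from DC to the highest radius.
    edges = np.linspace(0.0, radius.max(), num_bands + 1)

    # Band index r = 1..R grows with frequency, so eta_r favours finer detail.
    r = np.arange(1, num_bands + 1, dtype=float)
    weights = {"uniform": np.ones_like(r), "pow2": 2.0 ** r, "exp": np.exp(r)}
    eta = weights[weighting]

    saliency = np.zeros((h, w))
    for ch in range(c):
        spectrum = np.fft.fft2(img[:, :, ch])
        for k in range(num_bands):
            # Ring-shaped band-pass mask; the DC bin (radius 0) is excluded.
            band = (radius > edges[k]) & (radius <= edges[k + 1])
            feature_map = np.abs(np.fft.ifft2(spectrum * band))
            saliency += eta[k] * feature_map

    # Rescale the weighted sum to [0, 1] for display and AUC evaluation.
    lo, hi = saliency.min(), saliency.max()
    if hi > lo:
        return (saliency - lo) / (hi - lo)
    return np.zeros_like(saliency)
```

Because the weights grow with $r$ under the `"pow2"` and `"exp"` options, the finer-detail bands dominate the sum, which is one way to realize a weighting in which the feature map with more frequency content has more effect on the final saliency.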

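We showed that the frequency domain can be used to obtain band-pass regions for computing saliency maps, and that the resulting model outperforms conventional saliency computation models: every configuration in Table 1 achieves a higher AUC than the best of the compared models in Table 2 (FT, 0.8198). The experimental analysis also revealed that selecting an appropriate color space model benefits the saliency computation; the NTSC color space gave the highest AUC (0.8889).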
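For reference, the conversion from RGB to the NTSC (YIQ) color space that scored best in Table 1 is a fixed linear transform. The sketch below uses the widely used NTSC coefficients (the same ones applied by MATLAB's `rgb2ntsc`); this section does not state which conversion routine the experiments used, so treat the choice as an assumption and `rgb_to_ntsc` as a hypothetical helper name.

```python
import numpy as np

# Standard NTSC (YIQ) transform coefficients; rows give Y, I and Q.
RGB_TO_YIQ = np.array([
    [0.299, 0.587, 0.114],
    [0.596, -0.274, -0.322],
    [0.211, -0.523, 0.312],
])


def rgb_to_ntsc(rgb):
    """Convert an H x W x 3 RGB image with values in [0, 1] to YIQ."""
    rgb = np.asarray(rgb, dtype=float)
    # Apply the 3 x 3 matrix to the channel vector of every pixel.
    return rgb @ RGB_TO_YIQ.T
```

The Y channel carries luminance while I and Q carry chrominance; each of the three output channels would then be treated as a separate color component when computing feature maps.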

As future work, the weights of the feature maps could be optimized based on their frequency content, and the selection of band regions and their sizes in the frequency domain could be improved by using image similarity in a top-down manner, to increase the overall performance of the proposed model.

5. Acknowledgements

The authors would like to thank Yuming Fang and Weisi Lin [1,2] from the School of Computer Engineering, Nanyang Technological University, Singapore, for helpful discussions on the experimental analysis and data. This research was supported by the JST Japan-U.S. Research Exchange Program, FY2011-2013.

REFERENCES

[1] Y. Fang, W. Lin, B. S. Lee, C. T. Lau, Z. Chen and C. W. Lin, "Bottom-up Saliency Detection Model Based on Human Visual Sensitivity and Amplitude Spectrum," IEEE Transactions on Multimedia, Vol. 14, No. 1, 2012, pp. 187-198. doi:10.1109/TMM.2011.2169775

[2] Y. Fang, W. Lin, B.-S. Lee, C. T. Lau and C.-W. Lin, "Bottom-up Saliency Detection Model Based on Amplitude Spectrum," MMM 2011, LNCS, Vol. 6523, 2011, pp. 370-380. doi:10.1007/978-3-642-17832-0_35

[3] D. Walther and C. Koch, "Modeling Attention to Salient Proto-Objects," Neural Networks, Vol. 19, 2006, pp. 1395-1407. doi:10.1016/j.neunet.2006.10.001

[4] R. Achanta, S. Hemami, F. Estrada and S. Susstrunk, "Frequency-Tuned Salient Region Detection," IEEE International Conference on Computer Vision and Pattern Recognition, 2009, pp. 1597-1604. doi:10.1109/CVPR.2009.5206596

[5] L. Itti, C. Koch and E. Niebur, "A Model of Saliency-Based Visual Attention for Rapid Scene Analysis," IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 20, No. 11, 1998, pp. 1254-1259. doi:10.1109/34.730558

[6] Y. F. Ma and H. J. Zhang, "Contrast-Based Image Attention Analysis by Using Fuzzy Growing," Proceedings of the ACM International Conference on Multimedia, 2003, pp. 374-381. doi:10.1145/957013.957094

[7] X. Hou and L. Zhang, "Saliency Detection: A Spectral Residual Approach," Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition, 2007. doi:10.1109/CVPR.2007.383267

[8] N. Murray, M. Vanrell, X. Otazu and C. A. Parraga, "Saliency Estimation Using a Non-parametric Low-level Vision Model," IEEE International Conference on Computer Vision and Pattern Recognition, 2011, pp. 433-440. doi:10.1109/CVPR.2011.5995506

[9] S. Goferman, L. Zelnik-Manor and A. Tal, "Context-Aware Saliency Detection," IEEE International Conference on Computer Vision and Pattern Recognition, 2010, pp. 2376-2383. doi:10.1109/CVPR.2010.5539929

[10] G. Cardillo, "ROC Curve: Compute a Receiver Operating Characteristics Curve," unpublished, 2008. http://www.mathworks.com/matlabcentral/fileexchange/19950

[11] R. C. Gonzalez and R. E. Woods, "Digital Image Processing," 3rd Edition, Pearson Prentice Hall, New Jersey, 2008.

[12] R. C. Gonzalez, R. E. Woods and S. L. Eddins, "Digital Image Processing Using MATLAB," 2nd Edition, Gatesmark Publishing, LLC, 2009.

Copyright © 2013 SciRes. JSEA