A Multi-Resolution Photogrammetric Framework for Digital Geometric Recording of Large Archeological Sites: Ajloun Castle-Jordan

Received 8 February 2016; accepted 28 March 2016; published 31 March 2016

1. Introduction

Digital documentation and modeling of historical structures are important for understanding, defining and recognizing the values of cultural heritage [1] [2]. 3D modeling of heritage sites and monuments in their current state requires an effective technique that is precise, flexible and portable, because of accessibility problems and the need to acquire data in a short time without disturbing work and visitors at the site [3] - [5]. In the recent past, 3D data collection based on laser scanning has become an additional standard tool for generating high-quality 3D models of cultural heritage sites and historical buildings [6]. This technique allows quick and reliable measurement of millions of 3D points based on the run-time of reflected light pulses, which are then used to generate a dense representation of the respective surface geometry. However, these systems are still quite expensive, and the necessary measurements require experts and considerable effort. Furthermore, terrestrial laser scanning point clouds do not carry accurately registered texture information about the object, because of the large time difference between scan set-ups. This results in different radiometric properties of the collected images, especially in outdoor applications [7] [8].
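The run-time (time-of-flight) principle can be illustrated with a one-line calculation: the range is half the round-trip travel time of the pulse multiplied by the speed of light. The Python sketch below assumes an idealized pulse and ignores the refractive index of air; the function name `pulse_range` is hypothetical.

```python
# Speed of light in vacuum (m/s); the refractive index of air is ignored in this sketch.
SPEED_OF_LIGHT = 299_792_458.0


def pulse_range(round_trip_time_s: float) -> float:
    """Return the one-way range (m) for a pulse that travels to the surface and back."""
    if round_trip_time_s < 0:
        raise ValueError("travel time must be non-negative")
    return SPEED_OF_LIGHT * round_trip_time_s / 2.0


# A return received 200 ns after emission corresponds to roughly 30 m.
print(f"{pulse_range(200e-9):.3f} m")  # prints 29.979 m
```

Under the same assumptions, a timing error of 1 ns shifts the range by about 15 cm, which is why pulsed scanners need very precise timing electronics.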

A new trend has emerged in cultural heritage: the use of image-based modeling (photogrammetric modeling) to generate realistic and accurate virtual 3D models of the environment. Photogrammetry is the art and science of obtaining precise mathematical measurements and three-dimensional (3D) data from two or more overlapping stereo photographs [9] [10]. Through recent developments in digital image processing, together with the ever-increasing progress in computer vision, it has become an efficient and accurate data acquisition tool in cultural heritage applications [11] - [13]. Photogrammetric drawing is a flexible and accessible survey tool. In particular, rectified photography, the most attractive photogrammetric product, provides both the geometry and the surface texture of the objects to be depicted [14]. The geometry is created by identifying sets of common points in two or more source photos (also called stereoscopic or 3D imaging), and since standard digital cameras can be employed, the required images can be captured at low cost and relatively quickly [9]. One example is the photogrammetric reconstruction of the Great Buddha of Bamiyan [15]. Realistic 3D models for architectural applications can be created from a small number of photographs [16]. The main advantage of photogrammetry is its potential to provide simultaneously good geometric accuracy and high-resolution surface texture for the objects to be digitally depicted, using underwater, terrestrial, aerial or satellite imaging sensors. Additionally, photogrammetry is a flexible and efficient survey data collection tool that can be operated with a sufficient degree of automation [17].
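Rectified photography removes the perspective distortion from a photo of a facade that is assumed to be planar, using a 2D projective transformation (homography). The NumPy sketch below estimates that homography from four or more point correspondences with the normalized direct linear transformation (DLT). It illustrates the planar-facade assumption only and is not the software used in this study; the function names and point values are hypothetical.

```python
import numpy as np


def _normalization(pts):
    """Similarity transform that centres the points and scales their mean distance to sqrt(2)."""
    centroid = pts.mean(axis=0)
    mean_dist = np.linalg.norm(pts - centroid, axis=1).mean()
    s = np.sqrt(2.0) / mean_dist
    return np.array([[s, 0.0, -s * centroid[0]],
                     [0.0, s, -s * centroid[1]],
                     [0.0, 0.0, 1.0]])


def apply_homography(h, pts):
    """Map 2D points with a 3x3 homography, dividing by the homogeneous coordinate."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ h.T
    return homog[:, :2] / homog[:, 2:3]


def estimate_homography(src, dst):
    """DLT estimate of H such that dst ~ H @ src, from at least 4 correspondences."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    t_src, t_dst = _normalization(src), _normalization(dst)
    src_n, dst_n = apply_homography(t_src, src), apply_homography(t_dst, dst)
    rows = []
    for (x, y), (u, v) in zip(src_n, dst_n):
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    # The null vector of the system is the right singular vector of the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows))
    h_n = vt[-1].reshape(3, 3)
    # Undo the normalizations: H = T_dst^-1 @ H_n @ T_src.
    h = np.linalg.inv(t_dst) @ h_n @ t_src
    return h / h[2, 2]
```

In use, `src` would hold image measurements of at least four facade points and `dst` their known planar facade coordinates; the whole photo is then resampled through H to obtain the rectified image.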

Aerial photogrammetric systems are one of the preferred ways to obtain three-dimensional information about the Earth’s surface [18]. Because of their high performance, aerial images obtained by metric aerial cameras or high-resolution satellite sensors have been used to preserve cultural heritage and objects of significant architectural value [19] - [23]. Such imagery provides directly measured three-dimensional points, which helps improve the quality of the reconstructed 3D architectural models [24]. However, the quality of building models derived from aerial images is restricted by the ground resolution, which in general is on the order of several decimeters and is therefore inadequate for high-precision geometric positioning and fine-detail studies [25] [26].

In contrast, there have been several attempts to create 3D models from ground-based views at a high level of detail, to enable virtual exploration of archeological monuments. The most common approaches acquire data using stereo-vision close-range photogrammetry [15] [16]. Close-range digital photogrammetry techniques offer non-contact, flexible and accessible surveying tools for 2D - 3D archaeological recording [5] [27]. These 3D facade models, however, do not provide any information about roofs or terrain shape. Therefore, we describe merging the highly detailed facade models with a complementary airborne model obtained from a DSM, in order to provide both the level of detail needed for walkthroughs and the completeness needed for fly-throughs.

Recent developments in close-range photogrammetry, such as dense image matching, have revived photogrammetry as a powerful tool for data capture, and dense matching is currently used successfully in a number of airborne and terrestrial applications. The dense matching technique, also called photo-based scanning, can produce the dense 3D point clouds required to create high-resolution geometric models. These tools apply the principles of standard photogrammetry, but with a fully automated process [27] - [29]. A multi-view stereo (MVS) approach for generating dense and precise 3D point clouds has been presented in [29]. The implementation is based on the Semi-Global Matching algorithm developed by [30], followed by a fusion step that merges the redundant depth estimates across single stereo models. To reduce time and memory requirements, a hierarchical coarse-to-fine algorithm derives search ranges for each pixel individually. The resulting dense image matching algorithm was implemented in the software SURE.
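The idea of deriving per-pixel search ranges from a coarser level can be sketched as follows: a disparity map computed at half resolution is upsampled, and each full-resolution pixel receives its own search interval from the minimum and maximum disparities in a small neighbourhood, widened by a tolerance. This NumPy sketch is a simplified illustration under our own assumptions (3 × 3 neighbourhood, ±2 pixel tolerance, factor-2 pyramid); it is not SURE's actual implementation, and the function name is hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def per_pixel_search_range(coarse_disp, window=3, tolerance=2.0):
    """Per-pixel [lower, upper] disparity bounds at twice the resolution of coarse_disp."""
    coarse = np.asarray(coarse_disp, dtype=float)
    # Upsample by 2 in each direction; disparities double with the image resolution.
    fine = coarse.repeat(2, axis=0).repeat(2, axis=1) * 2.0
    pad = window // 2
    padded = np.pad(fine, pad, mode="edge")
    # One window per fine pixel: shape (rows, cols, window, window).
    windows = sliding_window_view(padded, (window, window))
    lower = windows.min(axis=(-2, -1)) - tolerance
    upper = windows.max(axis=(-2, -1)) + tolerance
    return lower, upper
```

The ranges stay narrow in homogeneous areas and widen only near depth discontinuities, which is where the savings in time and memory over a single global disparity range come from.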

In this paper, a low-cost photogrammetric framework is presented for generating a textured 3D surface mesh from overlapping multi-view stereo images, providing both high detail at ground level and complete coverage. Close-range photogrammetric facade modeling was accomplished with the SURE multi-view stereo software, which derives dense point clouds from a given set of images and their orientations. Up to one 3D point per pixel is extracted, which enables a high resolution of detail. A far-range aerial model containing the complementary roof and terrain shape is created from airborne aerial metric-camera stereo photos, then triangulated, and finally texture-mapped with aerial imagery.

2. Methods

2.1. Photogrammetry Principles

Digital photogrammetry is an efficient tool for both 2D and 3D modeling of objects. Photogrammetry refers to the practice of deriving 3D measurements from photographs. If an object point is observed in more than one image, its 3D object coordinates can be calculated, as depicted in Figure 1.

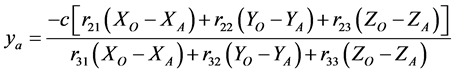

To measure 3D point coordinates, the position and orientation of the camera (exterior orientation) are required. The parameters of interior orientation must also be provided; they describe the position of the image plane relative to the center of projection of the camera. The orientation can be reconstructed if object and image coordinates are available for a number of control points (points with known coordinates). Image and object coordinates are related by the collinearity equations:

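$$x_a = -c\,\frac{r_{11}(X_A - X_o) + r_{12}(Y_A - Y_o) + r_{13}(Z_A - Z_o)}{r_{31}(X_A - X_o) + r_{32}(Y_A - Y_o) + r_{33}(Z_A - Z_o)} \qquad (1)$$

$$y_a = -c\,\frac{r_{21}(X_A - X_o) + r_{22}(Y_A - Y_o) + r_{23}(Z_A - Z_o)}{r_{31}(X_A - X_o) + r_{32}(Y_A - Y_o) + r_{33}(Z_A - Z_o)} \qquad (2)$$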

where:

xa, ya: the image coordinates of object point A;

XA, YA, ZA: the coordinates of point A in object space;

Xo, Yo, Zo: the object space coordinates of the camera position (projection center);

r11 to r33: the coefficients of the orthogonal rotation matrix between the image coordinate system and the object coordinate system, which are functions of the three rotation angles;

c: camera focal length (principal distance).

Figure 1. Principle of photogrammetry for 3D object modeling [9].
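The collinearity equations (1) and (2) can be evaluated directly: the differences between object point and projection center are rotated by the matrix of coefficients r11 to r33, and the first two components are divided by the third and scaled by -c. The NumPy sketch below does this for a single point. The rotation matrix is built from three angles (omega, phi, kappa) with one common sequential convention, which is our assumption because the text does not fix a convention; principal-point offsets and lens distortion are ignored, and the function names are hypothetical.

```python
import numpy as np


def rotation_matrix(omega, phi, kappa):
    """Coefficients r11..r33 as R_z(kappa) @ R_y(phi) @ R_x(omega); angles in radians.

    The angle convention is an assumption made for this sketch.
    """
    co, so = np.cos(omega), np.sin(omega)
    cp, sp = np.cos(phi), np.sin(phi)
    ck, sk = np.cos(kappa), np.sin(kappa)
    r_x = np.array([[1.0, 0.0, 0.0], [0.0, co, -so], [0.0, so, co]])
    r_y = np.array([[cp, 0.0, sp], [0.0, 1.0, 0.0], [-sp, 0.0, cp]])
    r_z = np.array([[ck, -sk, 0.0], [sk, ck, 0.0], [0.0, 0.0, 1.0]])
    return r_z @ r_y @ r_x


def project(point, camera_centre, r, c):
    """Image coordinates (xa, ya) of object point A from the collinearity equations."""
    d = np.asarray(point, dtype=float) - np.asarray(camera_centre, dtype=float)
    u = r @ d  # u[0] = r11*dX + r12*dY + r13*dZ, and likewise for rows 2 and 3
    if abs(u[2]) < 1e-12:
        raise ValueError("point lies in the plane through the projection centre")
    return -c * u[0] / u[2], -c * u[1] / u[2]
```

For example, a camera 100 m above flat ground looking straight down (identity rotation) with c = 18 mm images the ground point (10, 5, 0) at 1.8 mm and 0.9 mm from the principal point. In an adjustment, these same equations are linearized and solved for the unknown orientations and object coordinates.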

Recent technological developments in digital cameras, computer processors and computational techniques, such as sub-pixel image matching, have made photogrammetry a portable and powerful technique. It yields extremely dense and accurate 3D surface data from a limited number of photos, captured with standard digital photography equipment in a relatively short time. Archaeologists have therefore used photogrammetry and its tools for documentation and restoration, because it is an easy, flexible and low-cost technique. The photos can be recorded vertically from above or horizontally using a raised camera [31] [32].
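Sub-pixel image matching often refines the integer position of a correlation peak by fitting a parabola through the best score and its two neighbours; the vertex of the parabola gives the sub-pixel position. The Python sketch below shows that one refinement technique (others exist); the function name is hypothetical.

```python
def subpixel_peak(scores):
    """Return the sub-pixel position of the maximum of a 1D similarity curve."""
    i = max(range(len(scores)), key=scores.__getitem__)
    if i == 0 or i == len(scores) - 1:
        return float(i)  # Peak on the border: no neighbours to fit.
    left, centre, right = scores[i - 1], scores[i], scores[i + 1]
    denom = left - 2.0 * centre + right
    if denom == 0:
        return float(i)  # Flat neighbourhood: keep the integer position.
    # Vertex of the parabola through (i-1, left), (i, centre), (i+1, right).
    return i + 0.5 * (left - right) / denom
```

If the sampled similarity curve is itself a parabola, the refinement is exact; for real correlation curves it is an approximation whose error is typically a small fraction of a pixel.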

2.2. Site Structures and the Study Area Description

Ajloun Castle is located approximately 75 km north of Amman (Figure 2). It is a historical Ayyubid castle built at the top of Auf Mountain (1250 m above MSL) in AD 1183-1184. It was used to control the iron mines of Ajloun and to protect the trade routes between Jordan and Syria. The castle commands views of the Jordan Valley and three wadis leading into it, which made it an important strategic link in the defensive chain against invasion threats. From its hilltop position, it was one of a chain of beacons and pigeon posts that enabled messages to be transmitted from Damascus to Cairo in a single day. Its foundations measure about 100 m on each side. The entrance was originally approached by a drawbridge, now a footbridge. Other defenses included machicolations, a sloped glacis above a fosse, and arrow slits. The castle was enlarged in AD 1214 with the addition of a new gate in the southeastern corner, and once boasted seven towers as well as a surrounding 15 m deep dry moat.

In the 17th century an Ottoman garrison was stationed in the Castle, after which it was used by local villagers. Earthquakes in 1837 and 1927 badly damaged the castle, though slow and steady restoration is continuing.

2.3. Methodological Framework

The general workflow for image-based 3D reconstruction is illustrated in Figure 3. For photogrammetric object recording, multiple photos of the object are taken from different positions, so that common object parts are covered by at least three, and preferably five, photographs from different camera positions. After the images are imported into the processing software, the camera calibration parameters (interior orientation) and the image orientations (exterior orientation) are computed automatically. The subsequent generation of 3D point clouds or 3D surface models is also fully automatic. Only for the 3D transformation of the point cloud or meshed model into a superordinate coordinate system must the user measure control points interactively.

Figure 2. Study area location (Ajloun Castle photo acquired from [33]).
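The recommendation that each object part be covered by at least three, and preferably five, photographs can be checked once tie points have been matched. The Python sketch below assumes a hypothetical data layout in which each tie point identifier maps to the set of image names in which it was observed; the function name and thresholds are illustrative.

```python
from collections import Counter


def coverage_report(observations, minimum=3, preferred=5):
    """Classify tie points by how many distinct images observe them.

    observations: dict mapping a tie point id to an iterable of image names (hypothetical layout).
    Returns (counts per class, sorted ids of points seen in fewer than `minimum` images).
    """
    counts = Counter()
    weak = []
    for point_id, images in observations.items():
        n = len(set(images))
        if n < minimum:
            counts["insufficient"] += 1
            weak.append(point_id)
        elif n < preferred:
            counts["acceptable"] += 1
        else:
            counts["preferred"] += 1
    return counts, sorted(weak)
```

Points listed as insufficient indicate object parts where additional photographs should be taken before processing.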

The methodological framework adopted in this research is as follows:

1) Image acquisition: overlapping airborne stereo image pairs with complete site coverage, and multi-view terrestrial stereo images with high detail at ground level;

2) Automatic camera calibration with the VisualSFM [35] system. This software offers a step-by-step process for camera calibration and provides an interface to the PMVS software. VisualSFM uses scale-invariant feature transform (SIFT) features to find corresponding points in pairs of images, which are then used to calculate the 3D position of each camera relative to all other cameras and to the model being reconstructed;

3) Importing the intrinsic and extrinsic camera orientations calculated by VisualSFM into SURE (Surface Reconstruction), which densifies the point cloud by dense image matching;

4) Visualization and preparation of the point clouds generated by SURE using the CloudCompare software. The 3D points from SURE were displayed in CloudCompare to visualize the point cloud for each image, and the resulting 3D point cloud was checked and cleaned of irrelevant points;

5) Model meshing and smoothing with the MeshLab software, which converts the point clouds into more practical triangular meshes and was used to manipulate and smooth the mesh surfaces;

6) Texturing: after the geometry (i.e. the mesh) is constructed, the Agisoft PhotoScan software was used to generate the 3D model texture. The texture mapping mode determines the visual quality of the final model;

7) Conversion of the 3D model into KMZ format: the SketchUp software was used to import the 3D model file from Agisoft PhotoScan and convert it into KMZ format;

8) Building the 3D model geometry and reconstructing it in the virtual world using the Google Earth software.

2.4. Data Collection and Preparation

For the 3D documentation of the large archaeological site of Ajloun Castle, the following data were employed:

1) Close-range photos: high-resolution images were collected with a portable Canon EOS 1100D camera, with a resolution of 4272 × 2848 pixels and a focal length of 18 mm. The close-range images were used to document the castle walls at higher resolution and to reconstruct wall structures with high geometric detail by means of dense image matching;

2) Aerial photographs: 18 overlapping color aerial photographs covering Ajloun Castle were acquired from the Aerial Photographic Archive for Archaeology in the Middle East (APAAME) project. APAAME is a long-term research project between the University of Western Australia and Oxford University. The archive currently consists of over 91,000 images and maps, the majority of which are displayed on the archive’s Flickr site [33]. The images were acquired with a Nikon digital SLR aerial camera system with a focal length of 28.0 - 105.0 mm. The original Joint Photographic Experts Group (JPEG) photos were converted to 8-bit RGB JPEG images suitable for the VisualSFM software. Figure 4 shows a sample of the aerial photos used, taken with a 105 mm focal length.
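The resolution difference between the two data sets can be quantified with the ground sampling distance, GSD = pixel pitch × distance / focal length. The Python sketch below uses the 4272-pixel image width and the 18 mm and 105 mm focal lengths given above; the 22.2 mm sensor width, the 20 m distance to the walls, the 6 µm aerial pixel pitch and the 500 m flying height are assumptions chosen only for illustration, not values reported in this study.

```python
def ground_sampling_distance(pixel_pitch_m, distance_m, focal_length_m):
    """Footprint of one pixel on the object (m) for a camera looking straight at it."""
    return pixel_pitch_m * distance_m / focal_length_m


# Close range: Canon EOS 1100D, 4272 px across an assumed 22.2 mm sensor width.
close_pitch = 22.2e-3 / 4272
close_gsd = ground_sampling_distance(close_pitch, 20.0, 18e-3)  # assumed 20 m to the wall

# Aerial: assumed 6 micrometre pixels, 105 mm lens, assumed 500 m flying height.
aerial_gsd = ground_sampling_distance(6e-6, 500.0, 105e-3)

print(f"close range: {close_gsd * 1000:.1f} mm/pixel")
print(f"aerial:      {aerial_gsd * 1000:.1f} mm/pixel")
```

Under these assumptions one aerial pixel covers roughly five close-range pixels in each direction, which illustrates why the roof, reconstructed from aerial photos only, is less detailed than the facades.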


Figure 4. A sample Photo of APAAME used in 3D model generation [33] .

3. 3D Model Results and Analysis

3.1. Camera Position and Orientation Parameters Using VisualSFM

After both aerial and terrestrial images of Ajloun Castle were acquired, photogrammetric 3D point clouds were generated using a structure from motion (SfM) technique, which estimates 3D structure from 2D image series and automatically constructs a 3D point cloud from overlapping features [34]. The SfM system is implemented in the VisualSFM software, developed at the University of Washington [35] [36]. The software is a re-implementation of the SfM system that includes improvements by integrating the scale-invariant feature transform on the graphics processing unit (SIFT GPU) and Multicore Bundle Adjustment [35]. Dense reconstruction can also be performed through VisualSFM using PMVS/CMVS (Patch- or Cluster-based Multi-View Stereo software). The VisualSFM workflow starts by loading the images into the software [35]. The next step is to detect and match SIFT features in consecutive overlapping images. VisualSFM improves the efficiency of the SfM matching step by introducing preemptive feature matching, which provides a good balance between speed and accuracy. The subsequent step is to calculate the interior and exterior orientation parameters. The intrinsic and extrinsic camera parameters, with optimal camera positions determined by the bundle adjustment algorithm, were then used to produce georeferenced dense point clouds from the overlapping pixels of the corresponding camera images. Figure 5 shows the camera positions for Ajloun Castle. All close-range camera positions were sanity-checked in the visual interface, but we were unable to get the algorithm to recognize the top of the castle and the sides of the top of the castle as part of the same point cloud. Thus, the output of VisualSfM was two separate dense point clouds.
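Feature matching with a preemptive test can be sketched as follows: descriptors are matched by nearest neighbour with Lowe's ratio test, and an image pair is matched fully only if enough of its first few features already match. This NumPy sketch illustrates the idea under our own parameter choices (top 100 features, at least 4 trial matches, ratio 0.8) and assumes the descriptor arrays are pre-sorted so that the most distinctive features (for example, the largest-scale ones) come first; it is not VisualSFM's code, and the function names are hypothetical.

```python
import numpy as np


def ratio_test_matches(desc_a, desc_b, ratio=0.8):
    """Return index pairs (i, j) whose nearest neighbour passes Lowe's ratio test."""
    if len(desc_b) < 2:
        return []  # The ratio test needs a second-nearest neighbour.
    # Squared Euclidean distances between every descriptor pair.
    d2 = (np.sum(desc_a ** 2, axis=1)[:, None]
          + np.sum(desc_b ** 2, axis=1)[None, :]
          - 2.0 * desc_a @ desc_b.T)
    d2 = np.maximum(d2, 0.0)  # Remove tiny negative values caused by rounding.
    matches = []
    for i, row in enumerate(d2):
        j1, j2 = np.argsort(row)[:2]
        # Compare distances (not squared distances) against the ratio.
        if np.sqrt(row[j1]) < ratio * np.sqrt(row[j2]):
            matches.append((i, int(j1)))
    return matches


def preemptive_match(desc_a, desc_b, top=100, min_matches=4, ratio=0.8):
    """Match the top-ranked features first; run full matching only if that looks promising."""
    trial = ratio_test_matches(desc_a[:top], desc_b[:top], ratio)
    if len(trial) < min_matches:
        return []  # Pair rejected early: the images probably do not overlap.
    return ratio_test_matches(desc_a, desc_b, ratio)
```

The saving comes from rejecting most non-overlapping image pairs after a cheap trial on a small subset of features, instead of matching all features of every pair.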

The sparse point cloud obtained from VisualSFM was insufficient for 3D modeling. To overcome this problem, dense image matching algorithms, in particular a hierarchical multi-view stereo approach based on Semi-Global Matching, were applied.


Figure 5. The positions of cameras used to generate a sparse model of Ajloun Castle.

3.2. Densifying the Point Cloud Using Surface Reconstruction (SURE)

We imported the result from VisualSFM, including the point clouds and camera positions, into a software package called Surface Reconstruction (SURE), developed at the Institute for Photogrammetry of the University of Stuttgart (IFP). SURE derives dense point clouds from a given set of images and their orientations (camera parameters, camera rotation and translation). It combines dense image matching, in particular a hierarchical multi-view stereo approach based on Semi-Global Matching (SGM), with multi-view stereo triangulation [29] [37]. Single stereo models (pairs of overlapping images) can therefore be processed separately and subsequently fused. The input of SURE is a set of images and the corresponding interior and exterior orientations, which can be derived either automatically or by classical image orientation approaches. In this case, the result was imported from VisualSFM as an .nvm file containing the point clouds and camera positions. Up to one 3D point per pixel can be extracted, which enables a high resolution of detail. SURE densifies the point cloud using the oriented images produced by the bundle adjustment. First, the images are undistorted and rectified pair-wise into epipolar images, and suitable image pairs are selected. Dense disparities are then computed across the stereo pairs with the SGM algorithm, which estimates disparities by minimizing the joint entropy between successive overlapping images [29]. Next, in a triangulation step, the disparity information from multiple images is fused and 3D points or depth images are computed. Finally, redundant depth measurements are used to remove outliers and increase the accuracy of the depths. The output of the software is point clouds or depth images.
Figure 6 shows the georeferenced dense image matching results reconstructed with SURE.
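The cost aggregation at the heart of Semi-Global Matching can be sketched for a single path direction r. For pixel p and disparity d, SGM computes L_r(p, d) = C(p, d) + min(L_r(p - r, d), L_r(p - r, d ± 1) + P1, min_i L_r(p - r, i) + P2) - min_k L_r(p - r, k); in full SGM these path costs are summed over several directions (typically 8 or 16) before the minimum-cost disparity is chosen. The NumPy sketch below runs the recursion along one left-to-right scanline only. The penalties P1 = 1 and P2 = 8 are illustrative assumptions, and the code is not SURE's implementation, which additionally uses hierarchical per-pixel search ranges.

```python
import numpy as np


def aggregate_path(cost, p1=1.0, p2=8.0):
    """Aggregate a (width, ndisp) matching-cost slice along one left-to-right path."""
    width, ndisp = cost.shape
    agg = np.empty_like(cost, dtype=float)
    agg[0] = cost[0]
    for x in range(1, width):
        prev = agg[x - 1]
        prev_min = prev.min()
        # Neighbouring disparities d-1 and d+1 pay the small penalty P1.
        minus = np.concatenate(([np.inf], prev[:-1])) + p1
        plus = np.concatenate((prev[1:], [np.inf])) + p1
        # Any larger jump pays the larger penalty P2.
        jump = np.full(ndisp, prev_min + p2)
        best = np.minimum.reduce([prev, minus, plus, jump])
        # Subtracting prev_min keeps values bounded without changing the argmin.
        agg[x] = cost[x] + best - prev_min
    return agg
```

If one pixel's raw cost slightly favours a different disparity than all of its neighbours, the aggregated cost pulls it back to the neighbours' disparity; this is how SGM suppresses isolated mismatches, while the bounded penalty P2 still allows genuine depth jumps at object edges.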

3.3. Point Cloud Visualization and Preparation Using CloudCompare

The resulting 3D point cloud was checked in the CloudCompare software, a 3D point cloud and triangular mesh processing program with generic 3D data editing and processing tools. Its main data structure is an octree, which allows large point clouds (millions of 3D points) to be stored in memory and displayed, and allows the differences between two large data sets to be computed within a few seconds. The 3D points from SURE were displayed in CloudCompare to visualize the point cloud for each image (Figure 7).
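Octree indexing can be illustrated by computing, for each point, the cell it falls into at a given subdivision level and interleaving the cell's x, y and z index bits into a single integer (a Morton code). Points in the same cell share a code, so cells and their neighbours can be found by sorting instead of by comparing every pair of points. This NumPy sketch is a simplified illustration assuming a cubic bounding box; it is not CloudCompare's internal code, and the function name is hypothetical.

```python
import numpy as np


def morton_codes(points, level):
    """Return the octree cell code of each point at the given subdivision level."""
    pts = np.asarray(points, dtype=float)
    origin = pts.min(axis=0)
    # Use a cube so that all octree cells have the same size along each axis.
    size = (pts.max(axis=0) - origin).max()
    if size == 0:
        size = 1.0  # All points coincide: any positive cube size works.
    n_cells = 1 << level
    cell = np.floor((pts - origin) / size * n_cells).astype(np.int64)
    cell = np.clip(cell, 0, n_cells - 1)  # Points on the max face go into the last cell.
    codes = np.zeros(len(pts), dtype=np.int64)
    for bit in range(level):
        for axis in range(3):
            codes |= ((cell[:, axis] >> bit) & 1) << (3 * bit + axis)
    return codes
```

`np.unique(codes, return_counts=True)` then gives the occupied cells and the number of points in each; increasing `level` by one splits every cell into eight.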

It proved very difficult to obtain a good, clean point cloud from the optical imagery, even though the imagery was of high quality. The point cloud may contain imperfections and noise, or simply irrelevant points that are not part of the final model. Cleaning the data, i.e. removing objects not relevant to the documentation, is therefore an important step in 3D modeling. Cleaning before modeling results in smaller models, especially at sites full of irrelevant objects, as in the case of Ajloun Castle, where many points modeled the trees surrounding the castle (Figure 8).

Figure 6. Dense image point clouds generated by the SURE software from the SfM outputs, about 33 million points.

Figure 7. The resulting 3D points of the site building facades generated by the SURE software (as viewed in CloudCompare).
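Isolated noise points can be removed with a statistical outlier filter (CloudCompare provides one as its SOR filter): compute each point's mean distance to its k nearest neighbours, and discard points whose mean distance exceeds the global average by more than a chosen number of standard deviations. The brute-force NumPy sketch below builds an N × N distance matrix and is therefore suitable only for small clouds; k = 6 and the 1.0 sigma threshold are illustrative assumptions. A filter of this kind removes sparse outliers but not dense clusters such as tree canopies, which still have to be removed as irrelevant objects.

```python
import numpy as np


def statistical_outlier_mask(points, k=6, n_sigma=1.0):
    """Return a boolean mask that is True for points kept by the filter."""
    pts = np.asarray(points, dtype=float)
    diff = pts[:, None, :] - pts[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    # Column 0 after sorting is each point's zero distance to itself.
    knn = np.sort(dist, axis=1)[:, 1:k + 1]
    mean_knn = knn.mean(axis=1)
    threshold = mean_knn.mean() + n_sigma * mean_knn.std()
    return mean_knn <= threshold
```

For large clouds the same statistic would be computed with a spatial index (such as an octree or k-d tree) instead of the full distance matrix.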

3.4. Mesh Generation

3D modeling is the process of obtaining a digital mathematical representation of an object in three dimensions. Surfaces can be formed either by directly connecting points, which then become part of the surface [38] [39], or by creating a best-fit surface through the points [40]. The first method allows a model to be created from a complete, unstructured point cloud, but it is very susceptible to instrument noise and scan alignment errors. The best-fit surface algorithms, on the other hand, reduce noise automatically by approximating the surface. However, the risk of all smoothing functions is the possible loss of relevant detailed features, which can be problematic when studying the condition of surfaces. In this research, surface mesh processing was performed with freely available open-source software. MeshLab is a portable and extensible system for processing and editing unstructured 3D triangular meshes, developed at the Visual Computing Lab of an institute of the National Research Council of Italy in Pisa [41]. The software offers a range of sophisticated mesh processing tools with a graphical user interface. The .ply files were imported into MeshLab, where they were processed and re-sampled using the so-called iso-parameterization algorithm. This algorithm computes an almost-isometric surface triangulation while preserving the main shape of the volume [42]. The derived model was not satisfactory, even though it has a huge number of faces. As shown in Figure 9, some detailed information is lost when only photos are used to reconstruct a large study area such as Ajloun Castle, and some regions of the model are blurred. For a large study area, even when detailed photos are provided for reconstructing small regions, the whole model is still built at a large scale. Using photos that describe a large area together with detailed photos therefore drags down the level of detail.
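The trade-off between noise reduction and loss of detail is easy to see with simple Laplacian smoothing, in which every vertex moves towards the average of its neighbours. The NumPy sketch below is a generic illustration (the step size and iteration count are arbitrary), not the specific MeshLab filters used in this work; applied to a small flat patch with one raised vertex, it flattens the spike whether that spike is noise or a genuine carved detail.

```python
import numpy as np


def laplacian_smooth(vertices, faces, iterations=5, step=0.5):
    """Move each vertex a fraction `step` towards the centroid of its edge neighbours."""
    v = np.asarray(vertices, dtype=float).copy()
    neighbours = [set() for _ in range(len(v))]
    for a, b, c in faces:
        neighbours[a].update((b, c))
        neighbours[b].update((a, c))
        neighbours[c].update((a, b))
    nbr_lists = [sorted(n) for n in neighbours]
    for _ in range(iterations):
        # All centroids are computed from the previous positions (simultaneous update).
        centroids = np.array([v[n].mean(axis=0) if n else v[i]
                              for i, n in enumerate(nbr_lists)])
        v += step * (centroids - v)
    return v
```

More iterations remove more noise but also erode edges and shrink the mesh, which is why smoothing parameters should be chosen with the required level of detail in mind.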

Therefore, the 3D model of Ajloun Castle was simplified and cleaned of artefacts with MeshLab’s smoothing filters and its tools for curvature analysis and visualization. MeshLab also registers multiple range maps with the Iterative Closest Point (ICP) algorithm, as CloudCompare does. The final 3D virtual model produced with MeshLab is shown in Figure 10.

Figure 8. The final 3D point cloud of Ajloun Castle (after removing irrelevant points).
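ICP registration alternates between pairing each point with its closest point in the reference cloud and solving for the rigid transformation that best aligns the pairs, here with the SVD-based Kabsch solution. The compact NumPy sketch below implements point-to-point ICP with brute-force nearest neighbours and a fixed iteration count as simplifying assumptions; production implementations typically add point sampling, rejection of bad pairs and convergence tests. The function names are hypothetical.

```python
import numpy as np


def best_rigid_transform(src, dst):
    """Least-squares rotation R and translation t with dst ~ src @ R.T + t (Kabsch)."""
    cs, cd = src.mean(axis=0), dst.mean(axis=0)
    h = (src - cs).T @ (dst - cd)
    u, _, vt = np.linalg.svd(h)
    d = np.sign(np.linalg.det(vt.T @ u.T))
    # The diagonal correction prevents a reflection from being returned.
    r = vt.T @ np.diag([1.0, 1.0, d]) @ u.T
    return r, cd - r @ cs


def icp(source, target, iterations=30):
    """Align `source` to `target`; returns the moved source points, R and t."""
    src = np.asarray(source, dtype=float)
    tgt = np.asarray(target, dtype=float)
    r_total, t_total = np.eye(3), np.zeros(3)
    moved = src.copy()
    for _ in range(iterations):
        # Pair every moved source point with its closest target point.
        d2 = ((moved[:, None, :] - tgt[None, :, :]) ** 2).sum(axis=-1)
        closest = tgt[d2.argmin(axis=1)]
        r, t = best_rigid_transform(moved, closest)
        moved = moved @ r.T + t
        # Compose with the transformation accumulated so far.
        r_total, t_total = r @ r_total, r @ t_total + t
    return moved, r_total, t_total
```

ICP converges only when the clouds are already roughly aligned, which is why range maps are usually pre-aligned (for example, with a few picked point pairs) before it is run.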

3.5. Building Model Texture

Models generated with PhotoScan generally have smooth surfaces and good structure, but detailed information is missing in some areas [43]. Therefore, the fine 3D mesh generated with MeshLab was exported to PhotoScan to build the model texture. Both programs are used in this workflow, so that the resulting 3D model combines the fine geometric detail achieved with MeshLab and the smooth surface obtained with PhotoScan. Figure 11 illustrates the generated model, with fine geometry and satisfactory surface texture, as shown in PhotoScan.

However, detailed information is still unclear on some parts of the roof, where some regions are blurred and distorted. This is due to the low resolution of the available aerial photos used for the roof reconstruction, whereas detailed photos were available only for reconstructing small regions. When photos describing a large area are used together with detailed ones, the level of detail is dragged down.

Figure 10. Generated model with fine geometry as viewed in MeshLab: (a) wireframe; (b) hidden lines.

3.6. Model Registration and Accuracy Assessment

Model registration georeferences the recovered 3D digital model to real-world coordinates. The registration procedure started with a 3D survey of several Ground Control Points (GCPs). A Sokkia GRX2 fully integrated dual-constellation receiver was used to measure the coordinates of marked points. To achieve a practical accuracy of a few centimeters, the measurements were repeated twice under different satellite constellations, based on a Real Time Kinematic (RTK) network solution. These GCPs were used to define the tacheometric network, which was measured with a reflectorless Nikon NIVO total station with one-second (1") accuracy to survey the control points that are measurable in both the terrestrial and the aerial images and detectable in the close-range images. Placing survey marks on the facades is strictly prohibited at the cultural heritage site. As an alternative, a set of GCPs was chosen from highly distinguishable features on the walls around the outer façades, such as edges of stones and windows or details of ornaments. The GNSS-based GCPs allow an absolute orientation to be performed in post-processing, so that the resulting 3D model is georeferenced in the WGS 1984 coordinate system. Then, 9 of the 31 selected control points were used to compute a 3D similarity transformation (7 parameters), which was applied to the remaining 22 points.

Figure 11. Generated 3D model with smooth surface as viewed in PhotoScan; blue flags are control points.

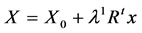

The 3D conformal transformation is a rigid Euclidean transform with scaling between the primary (X, Y, Z) and the secondary (x, y, z) coordinate systems:

$$\mathbf{X} = s\,\mathbf{R}\,\mathbf{x} + \mathbf{T},$$

or in extended form:

$$\begin{bmatrix} X \\ Y \\ Z \end{bmatrix} = s \begin{bmatrix} r_{11} & r_{12} & r_{13} \\ r_{21} & r_{22} & r_{23} \\ r_{31} & r_{32} & r_{33} \end{bmatrix} \begin{bmatrix} x \\ y \\ z \end{bmatrix} + \begin{bmatrix} T_X \\ T_Y \\ T_Z \end{bmatrix}.$$

The inverse transform is:

$$\mathbf{x} = \frac{1}{s}\,\mathbf{R}^{\mathsf{T}}\left(\mathbf{X} - \mathbf{T}\right).$$

Seven parameters describe the transform, $(T_X, T_Y, T_Z, \omega, \varphi, \kappa, s)$, i.e., three translations along the orthogonal axes, three rotation angles and one scale factor. The transform is called conformal because it does not change the shape of an object, only its position, orientation and size. In addition, the recovered 3D model coordinates were analyzed against check points measured with the total station: the transformed coordinates of 22 check points, well distributed over the castle, were compared with the corresponding total-station coordinates, yielding residuals along the X, Y and Z axes whose RMS values are listed in Table 1.

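The seven parameters can be estimated in closed form from the control points by a least-squares similarity fit; the sketch below uses Umeyama's SVD-based method, which we substitute for illustration because the text does not state which solver was used. The per-axis RMS of the check-point residuals then summarizes the accuracy. The NumPy code is self-contained and works on synthetic coordinates only, not the survey data of Table 1; the function names are hypothetical.

```python
import numpy as np


def fit_similarity(local, world):
    """Estimate s, R, T with world ~ s * R @ local + T from >= 3 non-collinear points."""
    x = np.asarray(local, dtype=float)
    y = np.asarray(world, dtype=float)
    mx, my = x.mean(axis=0), y.mean(axis=0)
    xc, yc = x - mx, y - my
    cov = yc.T @ xc / len(x)
    u, sing, vt = np.linalg.svd(cov)
    d = np.ones(3)
    if np.linalg.det(u) * np.linalg.det(vt) < 0:
        d[2] = -1.0  # Avoid returning a reflection.
    r = u @ np.diag(d) @ vt
    s = (sing * d).sum() / (xc ** 2).sum(axis=1).mean()
    t = my - s * r @ mx
    return s, r, t


def checkpoint_rms(s, r, t, local, world):
    """Per-axis RMS of residuals between transformed and measured check points."""
    pred = s * np.asarray(local, dtype=float) @ r.T + t
    residuals = pred - np.asarray(world, dtype=float)
    return np.sqrt((residuals ** 2).mean(axis=0))
```

Mirroring the procedure described above, the parameters would be fitted on 9 control points and `checkpoint_rms` evaluated on the 22 independent check points. With exact synthetic data the RMS is essentially zero; with real measurements it reflects both survey and model errors.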

3.7. Model Reconstruction in Virtual 3D World

To reconstruct the object geometry in a virtual 3D world, the model was exported to KMZ from SketchUp and added to Google Earth. Figure 12(a) and Figure 12(b) depict the 3D meshed textured model of Ajloun Castle as shown in SketchUp and Google Earth.
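A KMZ file is a ZIP archive whose main KML document (conventionally named doc.kml and stored first) can place a COLLADA (.dae) model on the globe through a KML `<Model>` element. The Python sketch below builds such an archive in memory with the standard library only. It does not reproduce SketchUp's exporter; the archive layout, the function name and the coordinates passed to it are hypothetical placeholders that would be replaced by the georeferenced values of the registered model.

```python
import io
import zipfile
from xml.sax.saxutils import escape

KML_TEMPLATE = """<?xml version="1.0" encoding="UTF-8"?>
<kml xmlns="http://www.opengis.net/kml/2.2">
  <Placemark>
    <name>{name}</name>
    <Model>
      <altitudeMode>relativeToGround</altitudeMode>
      <Location>
        <longitude>{lon}</longitude>
        <latitude>{lat}</latitude>
        <altitude>0</altitude>
      </Location>
      <Orientation><heading>{heading}</heading><tilt>0</tilt><roll>0</roll></Orientation>
      <Scale><x>1</x><y>1</y><z>1</z></Scale>
      <Link><href>{model_path}</href></Link>
    </Model>
  </Placemark>
</kml>
"""


def build_kmz(dae_bytes, name, lon, lat, heading=0.0):
    """Return the bytes of a KMZ archive holding doc.kml and a COLLADA model."""
    model_path = "models/model.dae"
    kml = KML_TEMPLATE.format(name=escape(name), lon=lon, lat=lat,
                              heading=heading, model_path=model_path)
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as kmz:
        kmz.writestr("doc.kml", kml)  # First entry: the KML document.
        kmz.writestr(model_path, dae_bytes)
    return buffer.getvalue()
```

The returned bytes can be written to a file with a .kmz extension and opened in Google Earth.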

4. Conclusion and Recommendations

The final 3D model of Ajloun Castle integrates, or fuses, multi-view aerial and terrestrial imagery, combining accurate geometric position with fine detail. The combination aims to exploit the advantages of the two techniques to construct high-quality 3D models and to support the visual quality of the generated 3D digital model. Given the different advantages offered by new 3D metric survey techniques in cultural heritage documentation, the acquisition of high-quality 3D data is no longer dominated by laser scanning. A growing number of software tools are available for detailed, reliable and accurate image-based 3D digital model generation from airborne and close-range imagery. Efficient stereo image matching based on multiple overlapping images can provide 3D models with accuracies close to the sub-pixel level, and this quality can be improved further by methods currently under research. Although the objectives of this paper have been accomplished, further research should address improvements in accuracy and efficiency.

Table 1. Summary of control and check point analysis for the recovered object points.

Figure 12. (a) Generated 3D model viewed in KMZ format in SketchUp; (b) Ajloun Castle’s historic buildings added as a 3D building layer in Google Earth.

NOTES


*Corresponding author.