Effects of Bayesian Model Selection on Frequentist Performances: An Alternative Approach

Received 15 April 2016; accepted 19 June 2016; published 22 June 2016

1. Introduction

Statistical modeling usually deals with situations in which some quantity of interest is to be estimated from a sample of observations that can be regarded as realizations of some unknown probability distribution. In order to do so, it is necessary to specify a model for the distribution. There are usually many alternative plausible models available and, in general, they all lead to different estimates. Model uncertainty refers to the fact that it is not known which model correctly describes the probability distribution under consideration. A discussion of the issue of model uncertainty can be found, e.g., in Clyde and George [1]. In the Bayesian context, Bayesian model averaging (BMA) has been successfully used to deal with model uncertainty (Hoeting et al. [2]). The idea is to use a weighted average of the estimates obtained using each alternative model, rather than the estimate obtained using a single model. BMA and its applications can be found in Marty et al. [3], Simmons et al. [4], Fan and Wang [5], Corani and Mignatti [6], Tsiotas [7], Lenkoski et al. [8], Fan et al. [9], Madadgar [10], Nguefack-Tsague [11], and Koop et al. [12]. Clyde and Iversen [13] developed a variant of BMA in which it is not assumed that the true model belongs to the set of competing ones (M-open framework).

Bayesian model selection involves selecting the “best” model according to some selection criterion. Most often the Bayesian information criterion (BIC), also known as the Schwarz criterion [24], is used; it is an asymptotic approximation of the log posterior odds when the prior odds are all equal. More information on Bayesian model selection and its applications can be found in Guan and Stephens [25], Clyde et al. [26], Clyde [27], Nguefack-Tsague [28], Carvalho and Scott [29], Fridley [30], Robert [31], Liang et al. [32], and Bernardo and Smith [33]. Other variants of model selection include Nguefack-Tsague and Ingo [34], who used BMA machinery to derive a focused Bayesian information criterion (FoBMA) that selects different models for different purposes, i.e. their method depends on the parameter singled out for inference. Nguefack-Tsague and Zucchini [35] proposed a mixture-based Bayesian model averaging method.

When the analysis is conditioned on the data at hand (as is usually the case), Bayesian model selection is free of model selection uncertainty. Because Bayesian inference is mostly concerned with conditional inference, model selection uncertainty is often overlooked when one is instead concerned with unconditional inference. The motivation of this paper is thus to raise awareness of the fact that model selection uncertainty is present in Bayesian modeling when interest is focused on the frequentist performance of the Bayesian post-model-selection estimator (BPMSE).

The paper is organized as follows. Section 2 presents the problem, while Section 3 highlights the difficulties of assessing the frequentist properties of BPMSEs. The new method for taking model selection uncertainty into account is presented in Section 4, and an application to Bernoulli trials is given in Section 5. The paper ends with concluding remarks.

2. Typical Bayesian Model Selection and the Problem

Bayesian model selection (formal or informal) can be summarized by the following main steps:

1. Quantity of interest $\theta$.

2. Data $x$.

3. Use $x$ for exploratory data analysis.

4. From (3), specify $M_1, \dots, M_K$, alternative plausible (parametric, with parameter $\eta$) models.

5. Use any model selection criterion and the data $x$ to select a model $M_k$ (model uncertainty).

6. Specify a prior distribution for the parameter of the selected model.

7. Compute the posterior distribution for the parameter of the selected model.

8. Define a loss function.

9. Find the optimal decision rule, e.g. for squared error loss the posterior mean, or any other quantity, e.g. posterior properties of the quantity of interest.

More on Bayesian theory can be found in Gelman et al. [36]. When the analysis is conditioned on the observed data (conditional inference), there is no model selection uncertainty, only model uncertainty, since the data $x$ (viewed as fixed) are used for all steps (including steps 3 and 4). However, if one needs the frequentist properties, the data should be viewed as random, because steps 3 and 4 then introduce model selection uncertainty. The difficulties are now similar to those of frequentist model selection. The remaining uncertainty includes the choice of the statistical model, the prior, and the loss function.

3. Bayesian Post-Model-Selection Estimator

Bayesian post-model-selection estimator (BPMSE) is referred to the Bayes estimator obtained after a model selection procedure has been applied. Here, a squared error loss is considered, but the main idea remains unchanged for any other loss function. Given the selection procedure, BPMSE can been written as

(1)

(1)

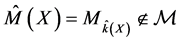

where ![]() if model

if model ![]() is selected and 0 otherwise. In the rest of the paper, for simplicity,

is selected and 0 otherwise. In the rest of the paper, for simplicity, ![]() each model

each model ![]() will be replaced only by

will be replaced only by ![]() in the integrals.

in the integrals.
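As a minimal sketch of how a BPMSE is formed, assume (purely for illustration) binomial data and two point-mass models, $\theta = 0.3$ and $\theta = 0.7$, with a selection procedure that chooses the model with the larger posterior probability. The function names and numerical values below are assumptions and are not taken from the paper.

```python
from math import comb

def binom_pmf(x, n, theta):
    """Binomial probability of x successes in n trials."""
    return comb(n, x) * theta**x * (1 - theta) ** (n - x)

def posterior_model_probs(x, n, thetas, priors):
    """Posterior probability of each point-mass model theta = thetas[k]."""
    weights = [p * binom_pmf(x, n, t) for t, p in zip(thetas, priors)]
    total = sum(weights)
    return [w / total for w in weights]

def bpmse(x, n, thetas, priors):
    """Post-model-selection estimate: indicator-weighted sum of per-model estimates."""
    probs = posterior_model_probs(x, n, thetas, priors)
    selected = max(range(len(thetas)), key=lambda k: probs[k])
    # Indicator is 1 for the selected model and 0 for all others.
    indicators = [1 if k == selected else 0 for k in range(len(thetas))]
    # Under a point-mass model the Bayes estimate is simply thetas[k].
    return sum(i * t for i, t in zip(indicators, thetas))
```

With these assumed values, `bpmse(2, 10, [0.3, 0.7], [0.5, 0.5])` returns 0.3, because 2 successes in 10 trials favour the first model.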

Long-run performance of Bayes estimators: Usually, the goal of the analysis is to select a model for inference using any selection procedure. One is interested in evaluating the long-run performance (frequentist performance) of the selected model. In general, Bayes estimators have good frequentist properties (e.g. Carlin and Louis [37]; Bayarri and Berger [38]). The Bayesian approach can also produce interval estimates with good performance, for example good coverage probabilities. It is also known that if a Bayes estimator associated with a prior is unique, then it is admissible (Robert [31]). There are also conditions under which Bayes estimators are minimax. The point is to see whether these frequentist properties still hold for Bayes estimators after model selection.

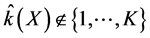

![]()

Interest is focused on studying the frequentist properties of the BPMSE $\hat{\theta}(x)$. The difficulties here are similar to those encountered with frequentist post-model-selection estimators (PMSEs). This is due to the partition of the sample space $\mathcal{X}$ by the selection procedure, which makes it difficult to derive the coverage probability of confidence intervals.

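The frequentist risk: The frequentist risk of BPMSEs is defined as

$$R(\theta, \hat{\theta}) = E_{\theta}\left[ L\left(\theta, \hat{\theta}(X)\right) \right] = \int_{\mathcal{X}} L\left(\theta, \hat{\theta}(x)\right) f(x \mid \theta)\, dx \tag{2}$$

where $L$ is a loss function and $f(x \mid \theta)$ is the sampling distribution of the data. This risk is difficult to compute, and it is hard to prove admissibility and minimaxity properties of BPMSEs, since their associated priors are not known.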
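For a discrete sample space the frequentist risk can be computed exactly by summing the loss over all possible samples. The sketch below does this for squared error loss and binomial data; the post-selection estimator, the two point-mass models and all numerical values are illustrative assumptions rather than the paper's settings.

```python
from math import comb

def binom_pmf(x, n, theta):
    """Binomial probability of x successes in n trials."""
    return comb(n, x) * theta**x * (1 - theta) ** (n - x)

def frequentist_risk(estimator, n, theta):
    """Squared-error risk: expected loss over all possible samples x."""
    return sum((estimator(x) - theta) ** 2 * binom_pmf(x, n, theta)
               for x in range(n + 1))

def post_selection_estimator(x, n=11, theta1=0.3, theta2=0.7):
    """Pick the point-mass model with the larger likelihood (equal model priors)."""
    if binom_pmf(x, n, theta1) >= binom_pmf(x, n, theta2):
        return theta1
    return theta2

# Risk of the post-selection estimator when the first model is true.
risk_at_theta1 = frequentist_risk(post_selection_estimator, 11, 0.3)
```

Because the estimator only takes the values 0.3 and 0.7, its risk at $\theta = 0.3$ equals $0.16$ times the probability of selecting the wrong model, which shows directly how the selection step enters the risk.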

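Coverage probabilities: When the data have been observed, one can construct a confidence region. Suppose that after observing the data, model $M_k$ is selected. For large samples, Berger [39] considers the normal approximation

$$\theta \mid x \;\overset{\text{approx.}}{\sim}\; N\left( \hat{\theta}_k(x),\, V_k(x) \right) \tag{3}$$

and then derives an approximate region at the $100(1-\alpha)\%$ level given by

$$C(x) = \left( \hat{\theta}_k(x) + z_{\alpha/2} \sqrt{V_k(x)},\; \hat{\theta}_k(x) - z_{\alpha/2} \sqrt{V_k(x)} \right)$$

where $\hat{\theta}_k(x)$ and $V_k(x)$ are the posterior mean and variance under $M_k$, and $z_{\alpha}$ is the $\alpha$-quantile of $N(0, 1)$.

A stochastic version (assuming normality) is given by

$$C(X) = \left( \hat{\theta}(X) + z_{\alpha/2} \sqrt{V(X)},\; \hat{\theta}(X) - z_{\alpha/2} \sqrt{V(X)} \right)$$

where $\hat{\theta}(X)$ is the BPMSE and $V(X)$ is the posterior variance under the selected model. The coverage probability of the stochastic form is given by

$$P_{\theta}\left( \theta \in C(X) \right)$$

which is now difficult to obtain, as it involves computing the variance and expectation of the BPMSE.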
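Although the coverage probability is hard to derive analytically, for binomial data it can be evaluated by enumerating all samples. The following sketch assumes two candidate Beta priors, selection by the larger marginal likelihood (equal model priors), and a normal-approximation interval with $z = 1.96$; these choices and all names are illustrative assumptions.

```python
from math import comb, exp, lgamma, sqrt

def binom_pmf(x, n, theta):
    """Binomial probability of x successes in n trials."""
    return comb(n, x) * theta**x * (1 - theta) ** (n - x)

def log_beta(a, b):
    """Logarithm of the beta function B(a, b)."""
    return lgamma(a) + lgamma(b) - lgamma(a + b)

def marginal_likelihood(x, n, a, b):
    """Beta-binomial marginal likelihood under a Beta(a, b) prior."""
    return comb(n, x) * exp(log_beta(a + x, b + n - x) - log_beta(a, b))

def interval_after_selection(x, n, beta_priors, z=1.96):
    """Normal-approximation interval from the model with the largest marginal likelihood."""
    a, b = max(beta_priors, key=lambda ab: marginal_likelihood(x, n, *ab))
    a_post, b_post = a + x, b + n - x
    mean = a_post / (a_post + b_post)
    var = a_post * b_post / ((a_post + b_post) ** 2 * (a_post + b_post + 1))
    half_width = z * sqrt(var)
    return mean - half_width, mean + half_width

def coverage(theta, n, beta_priors, z=1.96):
    """Probability, under theta, that the post-selection interval contains theta."""
    total = 0.0
    for x in range(n + 1):
        lower, upper = interval_after_selection(x, n, beta_priors, z)
        if lower <= theta <= upper:
            total += binom_pmf(x, n, theta)
    return total

# Hypothetical example: a flat prior versus a prior concentrated near 0.5.
example_coverage = coverage(0.4, 30, [(1, 1), (20, 20)])
```

Evaluating `coverage` over a grid of $\theta$ values gives a picture of how far the actual coverage drifts from the nominal level once selection is taken into account.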

Consistency: Another frequentist property of Bayes estimators is consistency. It is shown that, under appropriate regularity conditions, Bayes estimators are consistent (Bayarri and Berger [38] ). A question is whether BPMSEs are consistent, but it is hard to prove because one does not know the priors associated with BPMSEs.

4. Adjusted Bayesian Model Averaging

In this framework, interest is focused on the long-run performance of BPMSEs, not on posterior evaluation, since in posterior evaluation the model selection uncertainty problem does not exist. Under model selection uncertainty, from Equation (1), a fundamental ingredient is the selection procedure $S$. This selection procedure should depend on the objective of the analyst and should be taken into account in modeling uncertainty at two levels: prior and posterior to the data analysis. In the following, we define the posterior quantity and derive the Bayesian post-model-selection estimator in a coherent way. The new method is referred to as adjusted Bayesian model averaging (ABMA).

4.1. Prior Model Selection Uncertainty

The initial representation of model uncertainty is captured by the parameter prior and the model space prior; the selection procedure is then used to update the model prior. Formally, consider the possible models $M_1, \dots, M_K$; assign a prior probability $\pi(\theta_k \mid M_k)$ to the parameter of each model and a prior probability $p(M_k)$ to each model, with the data $X$ viewed as random. Let $M_k^S$ be the event that model $M_k$ is selected, while $M_k$ is considered to be the event that model $M_k$ is true. The probability of the event $M_k^S$ is referred to as the prior model selection probability of model $M_k$ and is denoted by $p(M_k^S)$. It updates the prior model probability $p(M_k)$ using the selection procedure $S$. $p(M_k)$ may be informative or not, but $p(M_k^S)$ is an informative prior. Making use of the fact that one of the models is true, $p(M_k^S)$ can be computed as

$$p(M_k^S) = \sum_{j=1}^{K} p(M_k^S \mid M_j)\, p(M_j) \tag{4}$$

where $p(M_k^S \mid M_j)$ is the prior model selection probability of model $M_k$ given that $M_j$ is the true model. In particular, $p(M_k^S \mid M_k)$ is the probability that $M_k$ is actually selected given that it is really the true model.

The true state of nature is that a given model is true; the decision here is to select a model. Given that model $M_j$ is true, $\sum_{k=1}^{K} p(M_k^S \mid M_j) = 1$, where $p(M_k^S \mid M_j)$ is the probability that $M_k$ is selected when $M_j$ is true. These probabilities can be computed as

$$p(M_k^S \mid M_j) = E_{M_j}\left[ 1_k(X) \right] \tag{5}$$

where $1_k(X)$ indicates that the selection procedure $S$ selects $M_k$. The expectation is taken with respect to the true model $M_j$, provided that these expectations exist. Note that these probabilities no longer depend on the observed data.
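The probability of selecting one model when another is true, and the resulting prior model selection probabilities, can be computed exactly for binomial data. The sketch below assumes point-mass models and selection by the highest posterior model probability; the names `selection_matrix` and `prior_selection_probs` and the numerical settings are hypothetical.

```python
from math import comb

def binom_pmf(x, n, theta):
    """Binomial probability of x successes in n trials."""
    return comb(n, x) * theta**x * (1 - theta) ** (n - x)

def selected_model(x, n, thetas, priors):
    """Index of the model with the highest posterior probability given x."""
    weights = [p * binom_pmf(x, n, t) for t, p in zip(thetas, priors)]
    return max(range(len(thetas)), key=lambda k: weights[k])

def selection_matrix(n, thetas, priors):
    """matrix[j][k] = probability that model k is selected when model j is true."""
    K = len(thetas)
    matrix = [[0.0] * K for _ in range(K)]
    for j, theta_true in enumerate(thetas):
        for x in range(n + 1):
            k = selected_model(x, n, thetas, priors)
            # Expectation of the selection indicator under the true model j.
            matrix[j][k] += binom_pmf(x, n, theta_true)
    return matrix

def prior_selection_probs(matrix, priors):
    """Average the conditional selection probabilities over the model prior."""
    K = len(priors)
    return [sum(matrix[j][k] * priors[j] for j in range(K)) for k in range(K)]

# Illustrative values only: two point-mass models and 11 trials.
matrix = selection_matrix(11, [0.3, 0.7], [0.5, 0.5])
probs = prior_selection_probs(matrix, [0.5, 0.5])
```

Each row of `matrix` sums to one, and the diagonal entries give the probability of a correct selection, so the matrix plays the role of the true-state versus decision table for this toy setting.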

Table 1 shows the true state of the world (nature) and the decision (the selected model). The entries are $p(M_k^S \mid M_j)$, the probability that $M_k$ is selected, given that $M_j$ is the true model. Suppose that $M_k$ is the true model; one would like $p(M_k^S \mid M_k)$ to be high, ideally 1 (the correct decision). If model $M_k$ is not selected with probability one, $1 - p(M_k^S \mid M_k)$ is called the probability of Type I error for model $M_k$. That is, if $M_k$ is the true model and the selection procedure $S$ incorrectly does not select it, then the selection procedure has made a Type I error.

On the other hand, if $M_j$ is the true model, but the selection procedure selects $M_k$, then this selection procedure has made a Type II error, with probability $p(M_k^S \mid M_j)$, $k \neq j$. The reliability of the selection criterion is given by the closeness of $p(M_k^S \mid M_k)$ to 1.

4.2. Posterior Model Selection Uncertainty

When the data have been observed, the posterior model selection probability for each model $M_k$ is given by

$$p(M_k^S \mid x) = \frac{p(M_k^S)\, f(x \mid M_k)}{\sum_{j=1}^{K} p(M_j^S)\, f(x \mid M_j)} \tag{6}$$

where $p(M_k^S)$ is the prior model selection probability of $M_k$ and

$$f(x \mid M_k) = \int f(x \mid \theta_k, M_k)\, \pi(\theta_k \mid M_k)\, d\theta_k \tag{7}$$

is the marginal likelihood of $M_k$. For $\theta_k$ discrete, (7) is a summation. $p(M_k^S \mid x)$ is the conditional probability that $M_k$ was the selected model. Computations are conditioned on each model, since one will never know the selection for random data. This is similar to the fact that the true model is not known, and each of the models can be viewed as a possible true model.

![]()

Table 1. True state ($M$) and selected models ($M^S$).
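A hedged sketch of the weight computation follows. It assumes that the posterior model selection probability is proportional to the prior model selection probability times the marginal likelihood, which is consistent with the remark later in this section that the weights reduce to BMA weights when the selection probabilities equal the model priors. The function name and inputs are illustrative.

```python
def posterior_selection_probs(selection_probs, marginal_likelihoods):
    """Normalise (prior selection probability) x (marginal likelihood) over models."""
    weights = [s * m for s, m in zip(selection_probs, marginal_likelihoods)]
    total = sum(weights)
    return [w / total for w in weights]

# Hypothetical inputs: two models with equal selection probabilities.
weights = posterior_selection_probs([0.5, 0.5], [0.2, 0.6])
```

Passing the model priors in place of the selection probabilities gives the usual BMA weights, so the same function covers both cases.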

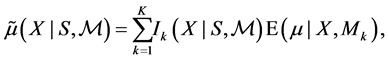

Posterior distribution: After the data $x$ are observed, and given the selection procedure $S$, from the law of total probability, the posterior distribution of $\theta$ is then given by

$$\pi(\theta \mid x, S) = \sum_{k=1}^{K} p(M_k^S \mid x)\, \pi(\theta \mid x, M_k) \tag{8}$$

$\pi(\theta \mid x, S)$ is an average of the posteriors of each model, $\pi(\theta \mid x, M_k)$, $k = 1, \dots, K$, weighted by the posterior model selection probabilities $p(M_k^S \mid x)$.

Posterior mean and variance:

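Proposition 1 Under Equation (8), the posterior mean and variance are given by

$$\hat{\theta}_{\mathrm{ABMA}}(x) = \sum_{k=1}^{K} p(M_k^S \mid x)\, \hat{\theta}_k(x), \qquad V_{\mathrm{ABMA}}(x) = \sum_{k=1}^{K} p(M_k^S \mid x) \left[ V_k(x) + \left( \hat{\theta}_k(x) - \hat{\theta}_{\mathrm{ABMA}}(x) \right)^2 \right] \tag{9}$$

where $p(M_k^S \mid x)$ are the posterior model selection probabilities, and $\hat{\theta}_k(x)$ and $V_k(x)$ are respectively the posterior mean and the posterior variance of $\theta$ for model $M_k$ if $M_k$ was the selected model.

Proof. Under Equation (8), the posterior mean is

$$\hat{\theta}_{\mathrm{ABMA}}(x) = \int \theta \sum_{k=1}^{K} p(M_k^S \mid x)\, \pi(\theta \mid x, M_k)\, d\theta = \sum_{k=1}^{K} p(M_k^S \mid x)\, \hat{\theta}_k(x).$$

The posterior variance under Equation (8) is

$$V_{\mathrm{ABMA}}(x) = \sum_{k=1}^{K} p(M_k^S \mid x) \int \left( \theta - \hat{\theta}_{\mathrm{ABMA}}(x) \right)^2 \pi(\theta \mid x, M_k)\, d\theta$$

$$= \sum_{k=1}^{K} p(M_k^S \mid x) \left[ V_k(x) + \left( \hat{\theta}_k(x) - \hat{\theta}_{\mathrm{ABMA}}(x) \right)^2 \right].$$

$V_k(x) + \left( \hat{\theta}_k(x) - \hat{\theta}_{\mathrm{ABMA}}(x) \right)^2$ is the posterior expected loss for model $M_k$ for taking the decision rule $\hat{\theta}_{\mathrm{ABMA}}(x)$ rather than $\hat{\theta}_k(x)$.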
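The mean and variance of a posterior that is a weighted average of per-model posteriors follow the standard mixture formulas: the mean is the weighted average of the per-model means, and the variance adds the spread of the per-model means around the overall mean to the weighted per-model variances. A small sketch, with a hypothetical function name and made-up inputs:

```python
def mixture_mean_var(weights, means, variances):
    """Mean and variance of a mixture with the given component weights."""
    mean = sum(w * m for w, m in zip(weights, means))
    # Within-model variance plus squared distance of each model mean from the overall mean.
    var = sum(w * (v + (m - mean) ** 2)
              for w, m, v in zip(weights, means, variances))
    return mean, var

# Two equally weighted components with means 0 and 2 and unit variances.
mean, var = mixture_mean_var([0.5, 0.5], [0.0, 2.0], [1.0, 1.0])
```

In this example the overall mean is 1 and the variance is 2: one unit from the within-model variances and one unit from the disagreement between the two model means.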

The method can then be summarised as follows:

1. the model prior $p(M_k)$ represents the prior model uncertainty,

2. the prior model selection probability $p(M_k^S)$ updates the prior model uncertainty by taking into account the selection procedure,

3. the posterior model selection probability $p(M_k^S \mid x)$ is the overall posterior representation of the model selection uncertainty.

Note that if the unconditional model selection probability is equal to the model prior, then the proposed weights are the same as the BMA weights, namely the probability that each model is true given the data, $p(M_k \mid x)$. For the proposed weights, one needs to compute the marginal likelihood and these model selection probabilities. Methods exist in the literature for doing such computations. These include Markov chain Monte Carlo methods, non-iterative Monte Carlo methods, and asymptotic methods. Other Bayesian methods based on mixtures include Ley and Steel [40], Liang et al. [32], Schäfer et al. [41], Rodríguez and Walker [42], and Abd and Al-Zaydi [43]. Some frequentist mixtures include Abd and Al-Zaydi [44], and AL-Hussaini and Hussein [45].

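A basic property: From the non-negativity of the Kullback-Leibler information divergence, it follows that, for all $k = 1, \dots, K$:

$$-E\left[ \log \sum_{j=1}^{K} p(M_j^S \mid x)\, \pi(\theta \mid x, M_j) \right] \le -E\left[ \log \pi(\theta \mid x, M_k) \right] \tag{10}$$

where $p(M_j^S \mid x)$ is the posterior model selection probability of $M_j$, $\pi(\theta \mid x, M_j)$ is the posterior under $M_j$, and the expectation is taken with respect to the posterior distribution in Equation (8). This logarithmic scoring rule was suggested by Good [46]. This means that under the use of a selection criterion and the posterior distribution given in Equation (8), ABMA provides better predictive ability (under the logarithmic scoring rule) than any single selected model.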

For computational purposes, the posterior model selection probability $p(M_k^S \mid x)$ can be written as

$$p(M_k^S \mid x) = \left[ \sum_{j=1}^{K} \frac{p(M_j^S)}{p(M_k^S)}\, B_{jk} \right]^{-1} \tag{11}$$

where $B_{jk} = f(x \mid M_j) / f(x \mid M_k)$ is the Bayes factor, summarising the relative support for model $M_j$ versus model $M_k$ using posterior model selection probabilities. Using the Laplace approximation of the marginal likelihood, the weights in Equation (11) become

$$p(M_k^S \mid x) \approx \frac{p(M_k^S) \exp\left( -\tfrac{1}{2} \mathrm{BIC}_k \right)}{\sum_{j=1}^{K} p(M_j^S) \exp\left( -\tfrac{1}{2} \mathrm{BIC}_j \right)} \tag{12}$$

where $\mathrm{BIC}_k$ is the Bayesian information criterion for model $M_k$.
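A sketch of BIC-based weights is given below. It assumes the common convention in which the marginal likelihood is approximated by $\exp(-\mathrm{BIC}/2)$ and multiplies each term by the prior model selection probability. Subtracting the smallest BIC before exponentiating is a numerical-stability choice added here and does not change the weights; the function name and inputs are hypothetical.

```python
from math import exp

def bic_weights(bics, selection_probs):
    """Approximate model weights from BIC values and prior selection probabilities."""
    best = min(bics)
    # Shifting by the smallest BIC avoids underflow and cancels in the ratio.
    raw = [s * exp(-0.5 * (b - best)) for b, s in zip(bics, selection_probs)]
    total = sum(raw)
    return [r / total for r in raw]

# Equal BIC values: the weights reduce to the selection probabilities.
equal_bic = bic_weights([100.0, 100.0], [0.3, 0.7])
# Equal selection probabilities: the model with the smaller BIC gets more weight.
unequal_bic = bic_weights([100.0, 104.0], [0.5, 0.5])
```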

5. Applications

Let $\theta$ be a quantity of interest with prior $\pi(\theta)$ and posterior $\pi(\theta \mid x)$ (given data $x$); $\mathcal{X}$ a sample space for any decision rule $\delta$; $f(x \mid \theta)$ a statistical model distribution of $x$. The frequentist risk of $\delta$ is

$$R(\theta, \delta) = E_{\theta}\left[ L\left(\theta, \delta(X)\right) \right] = \int_{\mathcal{X}} L\left(\theta, \delta(x)\right) f(x \mid \theta)\, dx$$

The Bayes risk of $\delta$ is $r(\pi, \delta) = \int R(\theta, \delta)\, \pi(\theta)\, d\theta$ and is a constant.

For some models, a beta prior will be used for $\theta$, e.g. as follows: $X \mid \theta \sim \mathrm{Bin}(n, \theta)$, $\theta \sim \mathrm{Beta}(a, b)$, then $\theta \mid x \sim \mathrm{Beta}(a + x, b + n - x)$, therefore

$$\hat{\theta}(x) = \frac{a + x}{a + b + n}$$

is the Bayes estimate of $\theta$. The marginal distribution of $X$ is the beta-binomial $\mathrm{BetaBin}(n, a, b)$, whose probability mass function (Casella and Berger [47]) is given by

$$f(x) = \binom{n}{x} \frac{B(a + x,\, b + n - x)}{B(a, b)}, \qquad x = 0, 1, \dots, n$$

where $B(\cdot, \cdot)$ is the beta function.

The results obtained in this section are not sensitive to variations of the different parameters. R software [48] was used for computing.
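The beta-binomial quantities used in this section are straightforward to compute. The sketch below is written in Python rather than R purely for illustration; it uses the symbols `a` and `b` for the Beta prior parameters, which are assumed names, and works on the log scale to avoid overflow in the beta function.

```python
from math import comb, exp, lgamma

def log_beta(a, b):
    """Logarithm of the beta function B(a, b)."""
    return lgamma(a) + lgamma(b) - lgamma(a + b)

def beta_binomial_pmf(x, n, a, b):
    """Marginal probability of x successes in n trials under a Beta(a, b) prior."""
    return comb(n, x) * exp(log_beta(a + x, b + n - x) - log_beta(a, b))

def bayes_estimate(x, n, a, b):
    """Posterior mean of the success probability (Bayes estimate under squared error loss)."""
    return (a + x) / (a + b + n)

# Sanity check with assumed values: the marginal probabilities sum to one.
total_probability = sum(beta_binomial_pmf(x, 10, 2.0, 3.0) for x in range(11))
```

With a uniform prior (`a = b = 1`) the marginal distribution is uniform on $0, \dots, n$, which gives a quick way to check an implementation.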

5.1. Long Run Evaluation

5.1.1. Two-Model Choice

(a) ![]() and ![](); with degenerate priors ![](). Within the framework of hypothesis testing, Bernardo and Smith [33] refer to (a) as a “simple versus simple test”.

![]()

The posterior model probabilities ![]() are given by

![]().

Model 1 is selected if ![](),

![]()

![]()

![]()

![]()

![]()

![]()

BMA corresponds to weighting the models with their posterior probabilities; the corresponding estimator is ![]().

The BPMSE ![]() if ![]() is selected and ![]() otherwise.

For illustration of the case ![](), we take ![](), ![](), ![](), ![](), ![]().

Figure 1 illustrates the performances of BPMSE, BMA and ABMA. BMA and ABMA have similar performances. Only points ![]() and ![]() are relevant since the true model is one of the two. However, for some regions of the parameter space, BMA does not perform better than BPMSE. Figure 1 shows that ABMA outperforms BPMSE and BMA.

Figure 2 shows these estimators all together, with ABMA having the smallest risk for all regions of the parameter space; again ABMA outperforms BMA and BPMSE.
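The kind of comparison shown in these figures can be sketched for two point-mass models as follows. The code assumes binomial data, selection by the highest posterior model probability, BMA weights proportional to prior times likelihood, and ABMA weights proportional to prior model selection probability times likelihood. The parameter values are hypothetical and are not those used for the figures, and no particular ordering of the risks is implied by the sketch itself.

```python
from math import comb

def binom_pmf(x, n, theta):
    """Binomial probability of x successes in n trials."""
    return comb(n, x) * theta**x * (1 - theta) ** (n - x)

def normalise(weights):
    total = sum(weights)
    return [w / total for w in weights]

def selection_probs(n, thetas, priors):
    """Prior probability that each model is selected, averaged over the true model."""
    K = len(thetas)
    probs = [0.0] * K
    for j in range(K):
        for x in range(n + 1):
            post = [p * binom_pmf(x, n, t) for t, p in zip(thetas, priors)]
            k = max(range(K), key=lambda i: post[i])
            probs[k] += priors[j] * binom_pmf(x, n, thetas[j])
    return probs

def estimators(x, n, thetas, priors, sel):
    """BPMSE, BMA and ABMA estimates for one sample x."""
    like = [binom_pmf(x, n, t) for t in thetas]
    bma_w = normalise([p * l for p, l in zip(priors, like)])
    abma_w = normalise([s * l for s, l in zip(sel, like)])
    k = max(range(len(thetas)), key=lambda i: bma_w[i])
    return {
        "BPMSE": thetas[k],
        "BMA": sum(w * t for w, t in zip(bma_w, thetas)),
        "ABMA": sum(w * t for w, t in zip(abma_w, thetas)),
    }

def risks(theta, n, thetas, priors):
    """Exact squared-error risk of each estimator at the true value theta."""
    sel = selection_probs(n, thetas, priors)
    out = {"BPMSE": 0.0, "BMA": 0.0, "ABMA": 0.0}
    for x in range(n + 1):
        for name, value in estimators(x, n, thetas, priors, sel).items():
            out[name] += (value - theta) ** 2 * binom_pmf(x, n, theta)
    return out

# Hypothetical setting: models theta = 0.2 and theta = 0.6, 15 trials, equal priors.
sel = selection_probs(15, [0.2, 0.6], [0.5, 0.5])
example_risks = risks(0.2, 15, [0.2, 0.6], [0.5, 0.5])
```

Calling `risks` over a grid of `theta` values produces risk curves of the three estimators that can be plotted against each other.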

(b) Consider the following two models: ![](), ![](), noninformative prior and ![]().

Let the selection procedure consist of choosing the model with the higher posterior probability.

![]() and ![](),

![]()

Figure 1. Risk of two proportions comparing BPMSE, BMA and ABMA estimators as a function of m.

![]()

Figure 2. Risk of two proportions comparing BPMSE, BMA and ABMA estimators as a function of m.

![]()

![]() is chosen if ![]().

![]()

![]().

![]().

![]() and ![]().

The parameters for simulating Figure 3 are ![](), ![](), that is ![](), ![](). Again, Figure 3 shows that ABMA performs better than BPMSE and BMA.

(c) Consider the following two models: ![](), ![]() (degenerate prior) and ![](). Similar degenerate priors for model 1 can be seen in Robert [31] and Berger [39].

Estimators for ![]():

![]().

![]().

Figure 4 shows the MSE of BPMSE, BMA and ABMA. As can be seen, BMA does not dominate BPMSE, but ABMA does. Figure 5 shows the MSE of BPMSE, BMA and ABMA. As can be seen, BMA does not dominate BPMSE, but ABMA does.

5.1.2. Multi-Model Choice

(a) Consider also a choice between the following models: ![]() for arbitrary K models, with degenerate ![](). Simulations shown in Figure 5 are performed with ![]() and ![]()

(b) Consider also a choice between the following models: ![]() for arbitrary K models, ![](), ![](), ![](), ![]() and ![]().

Figure 6 shows the MSE of BPMSE, BMA and ABMA. As can be seen, BMA does not dominate BPMSE, but ABMA does.

5.2. Evaluation with Integrated Risk

A good feature of integrated risk is that it allows a direct comparison of estimators (since it is a number). Consider a choice between the following models: ![]() for arbitrary K models, ![]() ![](), ![](), ![](), ![]().

For each number of models (between 10 and 200), the integrated risk is computed, and a comparison of the estimators is given in Figure 7. ABMA dominates BPMSE; BMA does not. Figures 1-7 all show that the new method, ABMA, outperforms BMA and BPMSE in the sense of having the smallest risk throughout the parameter space.

![]()

Figure 3. Risk of two proportions comparing BPMSE, BMA and ABMA as a function of m.

![]()

Figure 4. Risk of two proportions comparing BPMSE, BMA and ABMA as a function of m.

![]()

Figure 5. Risk of 30 simple models comparing BPMSE, BMA and ABMA as a function of m.

6. Concluding Remarks

This paper has proposed a new method of assigning weights for model averaging in a Bayesian approach when the frequentist properties of the estimator obtained after model selection are of interest. It was shown via Bernoulli trials that the new method performs better than Bayesian post-model-selection and Bayesian model averaging estimators in terms of the risk function and the integrated risk. The method needs to be applied in a wider variety of more realistic situations before it can be validated. In addition, further investigations are necessary to derive its theoretical properties, including large sample theory.

![]()

Figure 6. Risk of 30 full models comparing BPMSE, BMA and ABMA as a function of m.

![]()

Figure 7. Integrated risks comparing BPMSE, BMA and ABMA as a function of the number of models.

Acknowledgements

The authors thank the Editor and the referee for their comments on earlier versions of this paper.