Emotional Awareness: An Enhanced Computer Mediated Communication Using Facial Expressions

Received 8 December 2015; accepted 25 January 2016; published 28 January 2016

1. Introduction

Despite rapid advances in information and communication technology (ICT), textual messages are still a main communication mode, used through email, weblogs, instant messaging and many other communication systems. When sending a written message, there is an inevitable lack of non-verbal cues which may reduce the ability to understand unspoken emotions [1] . According to the media richness theory, when conversing face-to-face, we may notice various non-verbal social cues such as facial expressions, tone of voice, body language and posture, gestures and so on. These enriching non-verbal cues, which may reduce misunderstandings, usually don’t exist in written communication [2] . Rich media with high channel capacity is more appropriate for affective communication, primarily because of the complexity of feelings and the importance of non-verbal messages [3] . Since text based communication is relatively lacking in cues, its emotional tone is often ambiguous. Emotions are likely to be inaccurately perceived in email communication [4] . In situations of irregular communication, and when the interpersonal context of the sender-receiver communication is less established, the uncertainty of the receiver’s reactions to the communication is high [5] .

Perspective-taking, the act of taking into account the attitude of the other, which includes the ability of a person to empathize with the situation of another person, is fundamental to interpersonal communication [6] . A lack of emotional perspective taking or emotional awareness is more noticeable in adolescents and children, whose emotional intelligence is less developed in comparison to adults [7] . In addition, the act of perspective taking is less likely to occur in computer mediated communication (hereafter CMC). This is because people tend to be parsimonious in their messages, since information transfer by typing, which is particularly heavy in CMC, requires cognitive effort [8] . Therefore, relying on relatively lean media might increase the affective distance between communicators, causing communicational situations ranging from small misunderstandings to alienation and even hurting the feelings of others.

Unfortunately, a worldwide phenomenon that has increased with the advances in communication technology is cyber bullying. Cyber bullies are those who use the web in order to bully and harass others. While the phenomenon itself is not new, the way it is carried out has changed radically with today’s technology [9] . Nowadays, we see more and more people involved in negative technology activities such as cyber bullying, since various communication channels allow people to send anonymous messages. Communication with another person via a computer screen is a situation that is characterized by weak social context cues, causing behavior to be relatively self-centered and less regulated, and therefore acts can be extreme and impulsive [10] . Under the guise of anonymity, people feel free to express offensive attitudes and opinions [11] , often causing the person they are addressing great distress, to the point that he or she may feel abused.

There are many goals for communicating (such as influencing, managing relations, reaching a mutual outcome and requesting information), and many contexts (e.g. cooperative work, social relationships, etc.). While our approach is not limited to a certain goal or context, our main concern is to ensure that the intention of the sender is interpreted correctly at the receiver's side, with a particular emphasis on reducing affective misunderstandings. We are especially interested in the idea of designing an emotion-oriented CMC that would be able to prevent negative emotions, and consequently would form positive relationships between human beings. While previous works on emotion prediction in CMC concentrated on business communications [4] [12] , our approach is more suited for less formal contexts, such as chatting and writing in forums in social network systems. In addition, since emotional intelligence is less developed in adolescents and children [7] , an emotion-oriented CMC may be implemented in educational systems (such as school portals) as a means to teach sensitivity, empathy and social skills. There are previous works on emotion-related textual indicators, such as detecting deception in online dating profiles [13] , sentiment detection in the context of informal communication [14] , emotion detection in emails [15] and investigating the relationship between user-generated textual content shared on Facebook and emotional well-being [16] . There are earlier works that used emoticons to express emotion evoked by text in emails (such as [12] [17] ), but our focus is on CMC aimed at raising the awareness of a message sender to the emotional consequences for the message receiver in informal synchronous communication.

We offer an idea of an emotionally enhanced CMC system, which visually presents to the message sender an image of a facial expression depicting the assumed emotion a receiver would have upon reading the message, prior to sending the message. Among various communication strategies [5] , our approach combines three: affectivity, perspective taking and control. A facial expression of the receiver’s assumed emotion provides an affective component (affectivity) that takes the emotional view of the receiver into account (perspective taking), and allows the sender to revise the outgoing message (controlling by planning the communication ahead).

We are aware of the widespread use of emoticons (also known as “emoji”) in CMC, as a means for incorporating non-verbal cues in textual communication exchanges [18] , and therefore as an effective way to overcome potential misunderstanding. It should be clear that our approach is certainly not designed to replace the use of emoticons. Instead, it serves as an additional means to prevent possible emotional misinterpretation, by ensuring an awareness of the sender to the assumed emotion evoked at the receiver’s side, and allowing the sender to revise his message if necessary.

The rest of this paper is organized as follows. Section 2 describes the phase of designing a prototype of the emotionally enhanced CMC. Section 3 describes a user test conducted for demonstrating the emotionally enhanced CMC idea using the designed prototype, and shows the user test results. Section 4 presents our conclusions, discusses the research’s limitations and finally suggests future research directions and implications of the idea in several contexts.

2. Designing a Prototype System

2.1. Emotion Database

In order to create a homogeneous range of weights, the collected responses to the survey were normalized between 0 and 1. We tried out three different methods to calculate “emotion weights” indicating the extent that a keyword or phrase is charged with an emotion. The 3 methods are as follows:

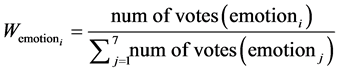

Method 1. Percent of total votes: the number of votes a specific emotion received for a keyword, divided by the total number of votes that all emotions received for the same keyword. The weight for each emotion was calculated by using the following:

Table 1 shows an example of the emotion weights calculation for the keyword “Idiot” using method 1.

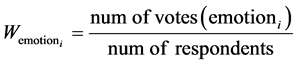

Method 2. Percent of total respondents: the number of respondents who chose the specific emotion for the keyword, divided by the total number of respondents. The weight for each emotion was calculated by using the following:


Table 1. Emotion weights calculation for the keyword “Idiot” using method 1.

Table 2 shows an example of the emotion weights calculation for the keyword “Idiot” using method 2.

Method 3. Relative to “top” emotion: for each keyword, the emotion with the highest number of votes gets a weight of 1. The weight of each other emotion for the same keyword is its number of votes relative to that of the highest ranked emotion. The weight for each emotion was calculated by using the following:

Table 3 shows an example of the emotion weights calculation for the keyword “Idiot” using method 3.

Whereas the keyword “love” evoked the emotion of happiness in almost all participants (60), the keyword “embarrassing” evoked a wider range of emotions as presented in Table 4.

We chose method 2, which takes into account the fact that respondents were able to select multiple emotions for each keyword by calculating the weight for each emotion based on the number of respondents (as opposed to the total number of votes for all emotions per keyword, as in method 1).
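The three weighting methods described in this section can be sketched as follows. This is a minimal illustration based only on the verbal definitions above, not the authors' implementation; the function name `emotion_weights` and the sample survey selections are hypothetical, and the sketch assumes that each respondent selects at least one emotion per keyword and votes for a given emotion at most once.

```python
from collections import Counter

# Hypothetical survey selections for a single keyword (invented for
# illustration): each set holds the emotions one respondent chose.
responses = [
    {"anger", "disgust"},
    {"anger"},
    {"anger", "sadness"},
    {"sadness"},
]


def emotion_weights(responses):
    """Return (method1, method2, method3) weight dictionaries for one keyword."""
    # One vote per (respondent, emotion) pair.
    votes = Counter(emotion for selected in responses for emotion in selected)
    total_votes = sum(votes.values())      # denominator for method 1
    total_respondents = len(responses)     # denominator for method 2
    top_votes = max(votes.values())        # denominator for method 3

    method1 = {e: n / total_votes for e, n in votes.items()}
    method2 = {e: n / total_respondents for e, n in votes.items()}
    method3 = {e: n / top_votes for e, n in votes.items()}
    return method1, method2, method3


m1, m2, m3 = emotion_weights(responses)
for name, weights in (("method 1", m1), ("method 2", m2), ("method 3", m3)):
    print(name, {e: round(w, 3) for e, w in sorted(weights.items())})
```

Because respondents may select several emotions, the method 1 weights always sum to 1 across emotions, whereas the method 2 weights can sum to more than 1; all three methods yield values between 0 and 1.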

2.2. Assuming a Message’s Emotion

2.3. Designing the User Interface

Figure 1. Comparison of emotion weight calculation methods (in Figure 1(a), method 3 is hidden behind method 2). (a) Keyword with high agreement, (b) keyword with low agreement.

Table 2. Emotion weights calculation for the keyword “Idiot” using method 2.

Table 3. Emotion weights calculation for the keyword “Idiot” using method 3.

The CMC system prototype (Figure 2) was designed to give the sense of contemporary instant messaging applications (such as WhatsApp and other synchronous messaging systems). Each message is displayed inside a speech bubble coming from the “speaker’s” side. The system is geared toward Hebrew speakers, thus the text input and conversation display area are on the right side. On the left side of the screen is an image with a facial expression depicting the assumed (calculated) emotion.

Layout. The prototype system uses 7 images of facial expressions representing the aforementioned 7 emotions (as shown in Figure 3). We chose images from an existing facial expression estimation technology [20] of a young adult Caucasian male. In a real working system, the image should correspond to the user’s age group (child, teenager, young adult, adult or senior), gender (male or female) and race, in order to create a higher level of empathy.

The interface includes a textual label (“tooltip”) for each image as an aid to ensure that the user understands the emotion displayed correctly.

Since our focus is on emotional feedback for the message sender and the user’s role as a message receiver is currently irrelevant, we did not want to insert unwanted noise in terms of incoming messages, thus the prototype only simulated a chat. In order to give the participants feedback after sending a message and a feeling of a “conversation” with back and forth messages, each message sent was answered by an automated response (a reply of “…”).

3. Demonstration and Evaluation

This section includes a description of a user test conducted for demonstrating the idea of an emotionally enhanced CMC, followed by the results we received in our evaluation of the acceptance and attitudes toward the idea.

3.1. Demonstration

The system prototype was used to demonstrate an emotionally enhanced CMC system. In total there were 30 participants, 4 of whom did not follow all the stages of the testing; their responses were therefore excluded from the analysis. Among the remaining 26 participants, there were 13 male and 13 female participants, with an average age of 32.4 (ranging between 15 and 61). We used a convenience sample of participants, comprised of friends and family members, all of whom are familiar with social network systems, and specifically with chatting systems.

Test Phases. The test system guided the participants through a number of phases including interacting with the system, a questionnaire regarding the perceived usability of the system, feedback regarding their perceived correctness of the system’s assumed emotions and their overall opinion regarding such a system.

First Phase. At the beginning the participants were presented with a short written explanation of the prototype and of the system’s purpose.

Figure 3. Facial expressions used in the system’s prototype (retrieved from [20] ).

were to send a message, they were shown a dropdown list with a set of 5 random messages from the pool of 25 pre-composed messages. After selecting a message from the list and before sending it, the participants were instructed to press a “check” button in order to run the algorithm for calculating the message’s assumed emotion evoked and to display the image of the corresponding facial expression. To ensure that each participant would send 10 messages, an “end conversation” button which allowed participants to move forward to the next phase was hidden, and appeared only after sending the tenth message.

Accuracy of the System Phase. After finishing the “chat”, the participants were presented with a “teach me” screen, where they were asked to “teach” the system which emotion they thought would be evoked by each message sent. A list of the 10 messages sent was presented, alongside the emotion that was displayed by the system in the previous chat phase. For each message, participants were able to mark a checkbox if the emotion displayed by the system was incorrect in their opinion, and then select the correct emotion from a dropdown list of 7 emotions. This phase had two purposes. The first was to evaluate the accuracy of the system in judging emotional reactions to messages. The second was to allow participants to experience the act of teaching the system the correct emotion (which is intended to be a feature of a future emotional CMC system with “learning” abilities).

System Perceived Usability Phase. In this phase, the participants were presented with an adjusted system usability scale (SUS), which is a standard 10 item 5-point Likert scale questionnaire, resulting in a SUS score which allows a comparison of different systems [21] . SUS is considered as a tool that is highly robust and versatile for usability professionals, providing participants’ estimates of the overall usability of an interface, regardless of the type of interface [22] .
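For readers unfamiliar with how a SUS score is derived from the 10 responses, the following is a minimal sketch of the standard SUS scoring rule. It is an assumption that this rule applies unchanged here, since the adjustments made to the questionnaire in this study are not specified; the function name `sus_score` is hypothetical.

```python
def sus_score(answers):
    """Standard SUS scoring for ten responses on a 1-5 Likert scale.

    Odd-numbered items (positively worded) contribute (answer - 1);
    even-numbered items (negatively worded) contribute (5 - answer).
    The sum of contributions (0-40) is scaled by 2.5 to a 0-100 score.
    """
    if len(answers) != 10:
        raise ValueError("SUS requires exactly 10 answers")
    if any(not 1 <= a <= 5 for a in answers):
        raise ValueError("each answer must be between 1 and 5")

    total = 0
    for item_number, answer in enumerate(answers, start=1):
        if item_number % 2 == 1:
            total += answer - 1   # positively worded item
        else:
            total += 5 - answer   # negatively worded item
    return total * 2.5


# Hypothetical participant who answers 3 ("neutral") to every item.
print(sus_score([3] * 10))
```

The score for the interface is then the mean of the individual participants' scores.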

Open Questions Phase. Upon completing the use of the system and answering the SUS questionnaire, the participants were presented with 13 open questions regarding the system. The questions were about the perceived usability of the system (ease of use), their acceptance of and willingness to use such a system, where they think such a system would be useful, and so on.

3.2. Evaluation

Results. We now present the results, divided into three parts: 1. System Usability Scale; 2. System Accuracy, examining the correctness of the system’s algorithm in assuming the emotion; 3. Open Questions, analyzing the responses to the open questions in order to learn about the attitudes of the participants regarding the idea of an emotionally enhanced CMC.

System Usability Scale. Results for the SUS questionnaire appear in Table 5. The average of all the individual SUS scores is the SUS score for the interface.

An independent-samples t-test was conducted to compare the SUS scores between male and female respondents. A significant difference was found between the scores of men (M = 83.846; SD = 8.14) and women (M = 90; SD = 6.038); t(24) = 2.1892, p = 0.0385, meaning that the female participants perceived the system as significantly more usable than the male participants. The numerical SUS score needs to be translated into a textual description to determine whether or not it indicates that the system is usable. We followed a previous study conducted in order to determine the meaning of SUS scores, and concluded that the total SUS score for both genders can be translated as “Excellent”, meaning the system has a very high level of usability [23] .
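The reported t statistic can be checked from the summary statistics alone. The sketch below assumes the pooled-variance (Student) form of the independent-samples t-test, which is consistent with the reported 24 degrees of freedom for two groups of 13 but is not stated explicitly in the text; the function name `pooled_t` is hypothetical.

```python
import math


def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Student's t statistic and degrees of freedom from summary statistics.

    Assumes equal population variances (pooled-variance form).
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    standard_error = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean2 - mean1) / standard_error, df


# Means and standard deviations as reported above: men first, women second.
t_value, df = pooled_t(83.846, 8.14, 13, 90.0, 6.038, 13)
print(f"t({df}) = {t_value:.3f}")
```

With these inputs the statistic comes out at approximately 2.19 with 24 degrees of freedom, in line with the reported value.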

System Accuracy. The measurement of the system’s accuracy was based on the results of the “teach me” screen. Table 6 presents the percent of the cases in which the system’s calculated emotion was correct, according to the participants. The accuracy of the system is in terms of the percent of emotions displayed that were considered correct by the users out of the overall emotions displayed.
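As an illustration of this accuracy measure, the following sketch computes the percent of displayed emotions that were left unmarked (that is, accepted as correct) on a “teach me” screen. The `Feedback` record, its field names and the sample messages are hypothetical and do not come from the study data.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Feedback:
    """One row of a hypothetical "teach me" screen."""
    message: str
    displayed: str                   # emotion shown by the system
    corrected: Optional[str] = None  # None means the participant accepted it


def accuracy_percent(feedback):
    """Percent of displayed emotions that participants considered correct."""
    if not feedback:
        raise ValueError("no feedback to evaluate")
    correct = sum(1 for row in feedback if row.corrected is None)
    return 100.0 * correct / len(feedback)


# Invented example rows, for illustration only.
rows = [
    Feedback("message A", "happiness"),
    Feedback("message B", "anger"),
    Feedback("message C", "sadness", corrected="anger"),
    Feedback("message D", "surprise"),
]
print(accuracy_percent(rows))
```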


Table 5. SUS scores for all users and by gender.


Table 6. Accuracy in terms of the percent of correct emotions.

Open Questions. The open questions were about the usability of the system, the acceptance of such a system and the willingness to actively teach such a system.

System Usability. 80% of respondents (21) indicated that the emotion displayed by the system was understandable, though most users did not notice the tooltip with the textual label of the emotion displayed. From this we can conclude that a facial expression alone is usually enough to convey the emotion that would be evoked at the side of the message receiver. A recurring comment was that there were not enough emotions included in the system, including suggestions for specific emotions (such as “concern” and “disappointment”). This was noticed when the respondents were asked to say whether or not the emotion displayed by the system was correct. Another suggestion from the respondents, for improving the system, was to add an explanation of what part of the message is causing the emotion displayed by the system, such as a popup message or the use of colors to highlight the relevant part, without having to search for it or to make assumptions.

System Acceptance. 73% of the respondents (19), when asked if they would use such a system for communicating, stated that they would. The respondents who stated that they would not make use of such a system indicated that they have a high level of emotional intelligence and do not feel the need for such a system. When asked to elaborate on the situations in which such a system would be used, the prevalent answer was when there is uncertainty about whether the message being sent evokes the desired emotion in the receiver of the message. Another reason indicated for using such a system was during conversations with unfamiliar people or with people in a professional capacity (co-workers, managers, customer relations).

Another question regarding the acceptance of such a system was whether or not the respondent would recommend such a system and if so, to whom. All but one of the respondents indicated that they would recommend such a system, to family members and to friends. A number of respondents indicated that such a system should be used among co-workers and in business related conversations. Another recommendation was that such a system would be effective for people with a low level of emotional intelligence (such as people diagnosed with Asperger syndrome) and for children and teenagers.

Willingness to Teach the System. We asked about the users’ willingness to actively teach the system the correct emotional reaction for a message. We asked about three levels of interaction with the system, where each level includes the previous interaction and an increased level of user involvement: (1) noting the correctness of the emotion displayed (correct/incorrect); (2) selecting a correct emotion; (3) marking which specific keyword or phrase evoked the emotion. Overall, the responses indicate a high level of willingness to teach such a system, with remarks such as “if it would help someone” and “if it would cause the system to be accurate”. The few reservations raised about teaching the system concerned the amount of effort a user needs to invest in order to teach the system. Some of the respondents considered the third level of interaction (marking a specific keyword or phrase) too complicated and asked whether there was some sort of gain or incentive from such an interaction with the system.

4. Conclusions and Discussion of Limitations and Future Directions

4.1. Limitations and Future Directions

Another shortcoming is that we conducted our evaluation with a small group of users, comprised mostly of friends and family members, meaning that the results and conclusions should be verified in more extensive future studies.

To evoke emotional responses of empathy, we used photographic expressions of a “real” person, instead of using Chernoff style faces or some other type of lifelike cartoon faces. However, our realistic approach may have disadvantages, such as difficulty empathizing with dissimilar persons (e.g. opposite gender, different age, race, etc.). People experience more empathic emotions when interacting with people with whom they have a communal relationship, or proximity [28] . Future work that deals with designing systems that express emotions by faces should take into account a wider range of photographic images, which correspond to the user’s group, in order to ensure a higher level of empathy.

To create more realistic conversations, subsequent emotional CMC system prototypes should deal with AI issues. In real CMC, several distinct emotions may come up during a single conversation, and the reaction to a specific message may be affected by many contextual factors that were not addressed in the current system prototype. Such factors include previous utterances exchanged in the same conversation or in past conversations, the communicators’ current context (e.g. mood, goal, beliefs), the nature of the emotional relationships between communicators prior to the conversation (e.g. pleasant versus tense relationships), or the types of relationships between communicators (e.g. group types such as professional, familial, and friendship, with additional subgroup types such as siblings, parent-child, co-workers, employee-employer and so on). Also, if a comment in a message is about a third party, the emotion evoked in the receiver might be different from the emotion evoked if the comment were directed at the receiver. A real CMC system that would implement our idea should deal with the complexity of human communication.

Another aspect that makes written utterances hard for a computerized system to interpret is identical words: many words can be used as either an adjective or a noun in different contexts and tools that can overcome this issue are needed. For example, PCFG (Probabilistic Context-Free Grammars) [29] is a method that allows determining the role of the word in the sentence statistically. These tools are indeed relevant and should be dealt with in future emotional CMC interactive systems.

A real emotionally enhanced CMC system needs to understand the meaning of a keyword or phrase, based on the conversational context. Of course, this requires AI components. Language is full of figurative speech: expressions that are used in certain situations and contexts, such as idioms and metaphors. Idioms such as “break a leg” and “piece of cake” have two different meanings: one is literal (taking the words in their most basic sense) and the other is figurative (meaning “good luck” and “it’s very easy”, respectively, in the examples given above). Another example is the metaphorical phrase “out of this world”, which has two different meanings: while one may refer to something that is extraterrestrial, the other may describe something as remarkable or excellent. Intelligent systems should “know” if an utterance is intended to be taken literally or figuratively.

An additional route to follow in emotional CMC designs is to expand the basic emotions to a richer variety, since the human emotional range expands beyond the 6 universally recognized emotions. Also, CMC systems should support the notion of “mixed” emotions such as “happily surprised” and “sadly angry” [32] .

4.2. Implications

Emotionally enhanced CMC aimed at increasing the message sender's awareness of the receiver's emotional reaction can be applied to social network systems, education contexts, and also to work-related communication and computer-supported cooperative work (CSCW).

Schools have an important role in fostering not only children's cognitive development but also their social and emotional development [33] . In the twenty-first century, when elementary and high school children are increasingly using ICT, it is more important than ever that educators be aware of the emotional distance that can be intensified by these communication media. This is why we consider educational contexts, especially school portals with online chatting and forums, as most suitable and we even consider them as an opportunity to teach youngsters the values and skills of consideration for others, compassion and empathy.

Since the practice of medicine is fraught with emotion [34] , healthcare systems that involve communication between medical staff and patients will surely benefit from an emotionally sensitive CMC. Health support technologies with emotionally enhanced CMC may help medical staff move from detached concern to empathic guidance.

Nowadays, elderly people (age 65 and older) are deepening their adoption of technology, and their usage of social media has tripled since 2010 [35] . Emotionally enhanced CMC systems have a high potential for bridging gaps that are due to language differences between generations. A great deal of work in the area of designing for the elderly is already invested in making “age-friendly” interfaces with a focus on human computer interactions [36] , but additional emphasis needs to be put on interpersonal interactions via computers. We are convinced that CMC systems that raise emotional awareness would have a positive effect on intergenerational relationships, especially between grandparents and their grandchildren. Future CMC systems using facial expressions to convey the assumed emotion evoked in the receiver can also highlight specific parts of the message that evoked the emotion, so the message sender would be encouraged to decide if an additional explanation is needed to clarify an expression that is likely to be misunderstood.

Our current demonstration and evaluation show that the idea of an emotionally enhanced CMC system is acceptable and perceived as useful for interpersonal engagement in CMC systems. We are certain that any human communication can benefit from systems that reduce emotional misinterpretation, and that encourage users to control their communicative actions and be more thoughtful.