Monocular Vision Based Boundary Avoidance for Non-Invasive Stray Control System for Cattle: A Conceptual Approach

1. Introduction

Intensive monitoring of domestic cattle has many economic and welfare benefits, but realizing them requires timely notification of changes in animal condition. Environmental and physiological events can develop at a rate that makes constant monitoring of the cattle necessary. Free-ranging cattle are controlled by herders. Holding, monitoring or gathering free-ranging cattle requires flexible husbandry practices for efficient, effective and low-stress animal management. Behavioral theory and practical experience indicate that cattle can be taught to respond to auditory cues [1]. Virtual fencing techniques control cattle by applying an aversive stimulus (sound or vibration) to an animal when it approaches a predefined boundary. The technique is implemented with a small smart collar that integrates a Global Positioning System (GPS) sensor, flash memory, a wireless transceiver, and a small CPU; essentially, each node is a small wireless computing device.

The field of wireless sensor networks focuses on applications involving the autonomous use of computing, sensing, and wireless communication devices, which makes it applicable to animal tracking and monitoring. The work of [2] developed a moving virtual fence algorithm to control a herd of cows. Each cow in the herd wore a smart collar consisting of a GPS receiver, a PDA, WiFi, a flash card for WLAN, and an audio amplifier with a speaker. The animal is given the boundary of a virtual fence in the form of a polygon specified by its coordinates. The location of the animal is tracked against this polygon using the GPS sensor mounted on the collar. When the animal is in the neighborhood of a fence, it is given a sound stimulus whose volume is proportional to the distance from the boundary, designed to keep the animal within the boundaries. Figure 1 shows a typical collar attached around the neck of a cow; the GPS sensor and other sensor nodes are fixed on the collar.
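The polygon test described above can be sketched as follows. This is a minimal illustration, not the algorithm of [2]: it assumes the GPS fixes have already been projected to planar coordinates in metres, and the function names and the `margin` parameter (the width of the "neighborhood" of the fence) are hypothetical.

```python
import math

def point_in_polygon(point, polygon):
    """Ray-casting test: True if point (x, y) lies inside the polygon."""
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through the point count.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside

def distance_to_segment(point, a, b):
    """Shortest distance from point to the line segment a-b."""
    px, py = point
    ax, ay = a
    bx, by = b
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        return math.hypot(px - ax, py - ay)
    # Project the point onto the segment and clamp to its end points.
    t = ((px - ax) * dx + (py - ay) * dy) / seg_len_sq
    t = max(0.0, min(1.0, t))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))

def distance_to_fence(point, polygon):
    """Distance from the animal to the nearest fence edge."""
    n = len(polygon)
    return min(distance_to_segment(point, polygon[i], polygon[(i + 1) % n])
               for i in range(n))

def in_fence_neighborhood(point, polygon, margin):
    """True when the animal is within `margin` of the fence (assumed trigger)."""
    return distance_to_fence(point, polygon) <= margin

fence = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0), (0.0, 10.0)]
print(point_in_polygon((5.0, 5.0), fence), distance_to_fence((5.0, 1.0), fence))
```

A collar would evaluate `in_fence_neighborhood` on each new GPS fix and start the sound stimulus when it returns true; how the volume is scaled from the distance is left to the scheme being implemented.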

Cattle domain experts have suggested using a library of naturally occurring sounds that are scary to the animals, such as a roaring tiger, a barking dog, or a hissing snake, and randomly rotating between the sounds [3]. In most existing work on virtual fencing, the fence boundaries are static. Real-world herding and control of cattle require dynamic and extensible virtual fences. This work will use a monocular vision system for the identification of boundaries, with a target recognition subsystem that identifies the predefined boundaries to be avoided by cattle. Depth will be estimated from image structure using the global Fourier transform and the local wavelet transform. The sound stimulus generated will correspond to the depth estimate.

The monocular vision boundary avoidance scheme for non-invasive stray control of cattle will require no motion planning algorithm to shift the boundaries of virtual fences. It will also require no path planning protocol. This is intended to address the inefficiencies in the existing virtual fencing schemes. The rest of this paper is arranged as follows. Section 2 reviews related work. Section 3 gives an overview of a monocular vision based control system for non-invasive stray control of cattle. Section 4 presents the proposed model formulation, and Section 5 offers conclusions and future work.

2. Related Works

Preliminary research has demonstrated that cows can be controlled and gathered autonomously using recorded audio cues associated with manual gathering [1]. Much work has been done, and is ongoing, on animal tracking and monitoring, mostly implemented with wireless sensor networks (WSN) and smart collars.

LynxNet: Wild Animal Monitoring using Sensor Networks, presented by [4], was specifically designed to improve animal monitoring sensor systems with low-level software for node control and communication. The methodology involved the hardware design and prototype of a highly mobile, energy-efficient monitoring system that gathers accurate GPS position and multi-nodal sensor data and disseminates it to the consumer through a system of delay-tolerant networked nodes. The mobile nodes initiate all communication by periodically polling for nearby base stations. The data collected at each node is transferred via radio frequency communication to an end user, as shown in Figure 2. The forwarded data is analyzed to determine the behavioral patterns exhibited by the animals being monitored.

The work of [3], Wireless Sensor Devices for Animal Tracking and Control, improved wireless hardware devices in terms of radio range, solar capabilities, and mechanical and electrical robustness, and provided a multi-sensor unit for animal tracking. The authors carried out experiments with mote hardware using the Atmel ATmega128 processor and the Nordic nRF903 radio chip to prototype and debug the electronics. The solutions offered include a new family (the Flock family) of wireless sensor devices for animal tracking, developed with different engineering tradeoffs from existing devices and to meet the needs of particular pastoral environmental applications.

![]()

Figure 1. An animal carrying a sensor node [3] .

![]()

Figure 2. Lynx net system architecture [4] .

The work of [1] presented the gathering of cows using virtual fencing methodologies. The main objective of the work was to gather animals with minimal human interaction using audio cues administered from Directional Virtual Fencing (DVF) equipment. DVF combines Global Positioning System (GPS) technology with animal conditioning using sound and mild electrical stimulation, and moves the animal in a chosen direction using bilateral cues. The drawbacks are that the cow training protocol required to optimize autonomous gathering has yet to be fully developed, and that the wireless radio link between the base station (in the WSN) and the cows was unpredictable and relatively short-ranged. Hence there was a high level of equipment failure.

Herds of animals such as cattle are complex systems. They interact with the environment, for example by looking for water sources and searching for green pasture, which necessitates frequent migration from one location to another. With existing virtual fence techniques based on polygon coordinates, only limited control can be exerted to move the animals around effectively.

In this work we combine Artificial Intelligence, Networking (WSN) and Control Systems to develop a non-invasive system that controls the straying of animals (cattle) into unwanted environments.

3. Overview of Monocular Based Model for Non-Invasive Stray Control System of Cattle

Based on the existing work, cattle movement control is achievable by adapting wireless sensor network (WSN) technology and Global Positioning System (GPS) animal tracking schemes. This paper proposes a model that enhances the existing work on cattle movement control by combining wireless sensor networks (WSNs), the Global Positioning System (GPS), Artificial Intelligence (image processing), a monocular vision system, and a target recognition sub-system.

3.1. Cattle Monitoring with Wireless Sensor Network

In cattle monitoring schemes, and specifically in movement control, a wireless sensor network is usually implemented with sensor-mounted collars placed on the animals to track each animal's activities and its movement. One notable example is “ZebraNet” [5]. Devices mounted on the zebras routinely exchange data such as GPS position with other devices that fall within their transmission range. If sufficient memory space is available, a user can download historical position data of multiple animals by approaching a single sensor node. The scheme in “ZebraNet” is based on a store-and-forward approach; the approach may however suffer considerable delay in packet delivery, or packet loss, if the stored data is not frequently updated with newly measured data.

3.2. Global Positioning System in Cattle Tracking

The signals that GPS satellites transmit to earth indicate the location of the satellites. A GPS sensor receives the signals and determines the location of the animal carrying it by measuring the distance between itself and each satellite. There are at least 24 active GPS satellites orbiting the earth at all times. To get a reliable location reading, a typical GPS sensor combines the signals from a number of satellites. The cattle position data and migration patterns acquired are transmitted node-to-node in the WSN. The data can then be downloaded from the nearest base station for analysis. Over the last decade, many species of terrestrial wildlife, such as moose [6] [7], have been fitted with GPS collars. GPS tracking collars have been incorporated in research on the ecology and management of grazing systems using sheep [8] and cattle [9]. By using GPS units in conjunction with Geographic Information Systems (GIS), animal distribution and movement can be evaluated and determined.

3.3. Monocular Vision System for Boundary Avoidance

Generally, obstacles can be avoided effectively with several kinds of sensors, including infrared, sonar and laser [10]. A direct application is found in robotics, an aspect of Artificial Intelligence. A monocular vision system estimates the distance of an obstacle (boundary) from a camera based on the image structures obtained by the camera; this is known as depth estimation. Depth estimation, or depth extraction, is the set of techniques and algorithms that aim to obtain a representation of the spatial structure of a scene or boundary; in other words, to obtain a measure of the distance of, ideally, each point of the scene or image structure.

Depth estimation from monocular cues consists of three basic steps. First, a set of images and their corresponding depth maps is gathered (Arial Depth). Then suitable features are extracted from the images. Finally, based on the features and the ground-truth depth maps, a model is trained using a supervised learning algorithm. The depths of new images are predicted with this learned model. In related work, [11] used supervised learning to estimate 1-D distances to obstacles for the application of autonomously driving a remote-controlled car.

3.4. Target Recognition Sub-System

Target recognition processes use various methods and algorithms; the sine wave transfer method is used for real-time target recognition, and this signal is used as the process signal in digital signal processing and in analog/digital conversion [12] . Neural network model based and fuzzy logic based algorithms are also being used. The realized target recognition system makes a pixel comparison between the target images pre-recorded in the computer (pre-defined boundaries) and the target image received from the camera.

3.5. Animal Motion Prediction and Control

In the work of [13], Data-Driven Identification of Group Dynamics for Motion Prediction and Control, a distributed model structure for representing groups of coupled dynamic agents (physical bodies) was proposed. The model combines effects from an agent’s inertia, interactions between agents, and interactions between each agent and its environment. Global Positioning System tracking data were collected in field experiments from a group of three cows and a group of ten cows. The least-squares method was used to fit the model parameters to the measured position data. Based on analysis of the model with the collected data, hypotheses about cow stray behavioral patterns were proposed.

4. Flowchart of Proposed Model

In Figure 3 the collar-mounted camera captures the scene (boundary); the captured image is transmitted node-to-node in the WSN. The captured image can then be downloaded from the nearest base station for recognition by the target recognition sub-system. If the scene is a recognized boundary, the monocular vision based depth estimator estimates the distance of the camera (animal) from the boundary (captured scene). An audio cue of an intensity corresponding to the magnitude of the estimated distance is generated and amplified. The amplified audio cue is applied to the animal's left or right ear. The animal's responses, in terms of its new or current location, motion direction and speed (velocity), are then tracked by the GPS sensor. The tracked responses determine the next state of the camera.

![]()

Figure 3. General flow chart diagram of the model.

The general flow chart diagram in Figure 3 shows the steps involved in the entire monocular vision based non-invasive stray control system. At the start of its operation the camera takes a snapshot of a boundary scene. The target recognition subsystem determines whether the boundary is a “known boundary” or “not a known boundary”. The boundary is a “known boundary” if the captured image of the boundary scene matches a pre-recorded image with a similarity of 75% or higher; the depth of the image (its distance from the camera) is then estimated using monocular vision. If the captured image does not match any pre-recorded image, then the boundary is “not a known boundary” and the image is simply discarded.

The sound cue generated is directly proportional to the estimated distance (D) of the image. The sound cue is applied to the animal's (cow's) ears as a stimulus to give directional and dynamic control of the cow, and hence of the herd. It is suggested that pre-recorded scary sounds, such as a hissing snake or a roaring lion, be used to steer cows away from unwanted environments [14].
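The decision path of the flow chart can be sketched as below. This is only an illustration under stated assumptions: the names `process_snapshot` and `AudioCue` and the proportionality constant `gain` are hypothetical, and the depth estimator is passed in as a callable because its implementation is described separately.

```python
from dataclasses import dataclass
from typing import Callable, Optional

SIMILARITY_THRESHOLD = 75.0  # percent, the matching threshold given in the text

@dataclass
class AudioCue:
    distance: float   # estimated distance D to the boundary
    intensity: float  # cue intensity, assumed to be gain * D

def process_snapshot(similarity_percent: float,
                     estimate_depth: Callable[[], float],
                     gain: float = 1.0) -> Optional[AudioCue]:
    """Return the cue for a known boundary, or None if the image is discarded."""
    if similarity_percent < SIMILARITY_THRESHOLD:
        return None  # "not a known boundary": the image is simply discarded
    distance = estimate_depth()
    # The text states the cue is directly proportional to the estimated distance.
    return AudioCue(distance=distance, intensity=gain * distance)

print(process_snapshot(80.0, lambda: 12.0, gain=0.5))
```

Passing the estimator as a callable means the depth computation is only run for images that pass the recognition step, which mirrors the order of operations in Figure 3.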

4.1. Target Recognition Sub-System

Pre-defined Boundaries: PB (Images pre-recorded on the Computer system).

Images of Boundaries Received from Camera: PC

Target Recognition Sub-system compares PC to PB

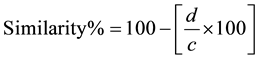

$$\text{Similarity (\%)} = \frac{d - c}{d} \times 100 \qquad (1)$$

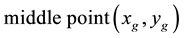

“d” indicates the total number of pixels scanned, while “c” indicates the total number of differing pixels. If the similarity is 75% or higher, the scene or boundary is a “known boundary”. If the similarity is below 75%, the scene or boundary is “not a known boundary”. “Known boundaries” are to be avoided by animals (cattle); a boundary that is “not a known boundary” may or may not be avoided. The middle point of the object (image) is calculated from its pixels. The formulas are as follows [15]:

(2)

(3)

The coordinates of the middle point are found as an ordered series obtained by calculating the Euclidean distance (E) between the mid point and each border pixel.

(4)

where N is the number of corner pixels.
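The comparison and mid-point steps of this sub-section can be illustrated with a short sketch. Several things here are assumptions rather than the formulas of [15]: similarity is taken as the percentage of matching pixels, 100·(d − c)/d; the mid point is taken as the mean of the border pixel coordinates; images are represented as equally sized 2-D lists of pixel values; and all function names are hypothetical.

```python
import math

def similarity_percent(reference, captured):
    """Percentage of matching pixels between two equally sized images."""
    d = 0  # total number of pixels scanned
    c = 0  # number of differing pixels
    for ref_row, cap_row in zip(reference, captured):
        for ref_px, cap_px in zip(ref_row, cap_row):
            d += 1
            if ref_px != cap_px:
                c += 1
    if d == 0:
        raise ValueError("images must contain at least one pixel")
    return 100.0 * (d - c) / d

def is_known_boundary(reference, captured, threshold=75.0):
    """Apply the 75% rule from the text."""
    return similarity_percent(reference, captured) >= threshold

def midpoint(border_pixels):
    """Assumed mid point: the mean of the border pixel coordinates."""
    n = len(border_pixels)
    return (sum(x for x, _ in border_pixels) / n,
            sum(y for _, y in border_pixels) / n)

def distances_from_midpoint(border_pixels):
    """Ordered series of Euclidean distances E from the mid point."""
    xm, ym = midpoint(border_pixels)
    return [math.hypot(x - xm, y - ym) for x, y in border_pixels]

pb = [[0, 1], [1, 0]]  # pre-recorded boundary image (PB)
pc = [[0, 1], [1, 1]]  # image received from the camera (PC)
print(similarity_percent(pb, pc), is_known_boundary(pb, pc))
```

In the example, one of four pixels differs, so the similarity is exactly at the 75% threshold and the scene counts as a known boundary. An exact pixel-equality test is the simplest reading of "pixel comparison"; a practical system would probably need a tolerance on pixel values.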

4.2. Monocular Depth Estimation Model

The following is a description of image structure based on the textural patterns present in the image and their spatial arrangement [16] [17].

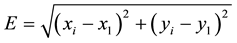

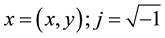

First level description: magnitude of the global Fourier transform of the image. The discrete Fourier transform (FT) of an image is defined as [10]:

$$I(\mathbf{f}) = \sum_{\mathbf{x}} i(\mathbf{x})\, h(\mathbf{x})\, e^{-j 2\pi \langle \mathbf{f}, \mathbf{x} \rangle} \qquad (5)$$

where $i(\mathbf{x})$ is the intensity distribution of the image along the spatial variables $\mathbf{x} = (x, y)$; the spatial frequency variables are defined by $\mathbf{f} = (f_x, f_y)$ (units are in cycles per pixel); and $h(\mathbf{x})$ is a circular window that reduces boundary effects.

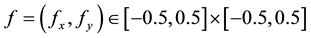

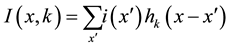

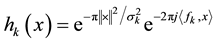

Second level description: the wavelet transform, defined in [10] as:

$$I(\mathbf{x}, k) = \sum_{\mathbf{x}'} i(\mathbf{x}')\, h_k(\mathbf{x} - \mathbf{x}') \qquad (6)$$

Different choices of the functions hk provide different representations, for instance as in [15]. One of the most common choices is the complex Gabor wavelet:

(7)

In such a representation, I(x, k) is the output at the location x of a complex Gabor filter tuned to the spatial frequency defined by fx. Our objective is to estimate the depth D of the scene (boundary) by means of the image features, v [10].

![]() (8)

where

![]() (9)

and

![]() (10)
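As a minimal sketch of the first-level description, the following pure-Python code computes the magnitude of a windowed discrete Fourier transform of a small grayscale image. The function names, the binary circular window, and the use of index-based frequencies u/width and v/height (in cycles per pixel) are assumptions made for illustration and are not taken from [10]. The wavelet (Gabor) level and the estimation of depth from the resulting features are not shown.

```python
import cmath
import math

def circular_window(height, width):
    """Hypothetical h(x): 1 inside the inscribed circle, 0 outside."""
    cy, cx = (height - 1) / 2.0, (width - 1) / 2.0
    radius = min(height, width) / 2.0
    return [[1.0 if math.hypot(y - cy, x - cx) <= radius else 0.0
             for x in range(width)]
            for y in range(height)]

def fourier_magnitude(image, window=None):
    """Magnitude of the DFT of image * window, by direct summation.

    image and window are 2-D lists indexed as [y][x]. The direct sum is
    slow and only meant for small images used to illustrate the idea.
    """
    height, width = len(image), len(image[0])
    if window is None:
        window = [[1.0] * width for _ in range(height)]
    magnitude = []
    for v in range(height):          # vertical frequency index
        row = []
        for u in range(width):       # horizontal frequency index
            total = 0j
            for y in range(height):
                for x in range(width):
                    phase = -2j * math.pi * (u * x / width + v * y / height)
                    total += image[y][x] * window[y][x] * cmath.exp(phase)
            row.append(abs(total))
        magnitude.append(row)
    return magnitude

flat = [[1.0, 1.0], [1.0, 1.0]]
print(fourier_magnitude(flat)[0][0])
```

For a uniform image all the energy falls in the zero-frequency term, which is a convenient sanity check; textured scenes spread energy to higher frequencies, and it is this distribution that the image features v summarize.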

4.3. Animal Motion Prediction Model

Given a group of m agents, the model structure for an individual agent $i \in \{1, \ldots, m\}$ can be written in state-space, difference equation form as in [13]:

$$x_i^{\tau+1} = \begin{bmatrix} 1 & 0 & \Delta t & 0 \\ 0 & 1 & 0 & \Delta t \\ 0 & 0 & a_i & 0 \\ 0 & 0 & 0 & a_i \end{bmatrix} x_i^{\tau} + \begin{bmatrix} 0 & 0 \\ 0 & 0 \\ 1 & 0 \\ 0 & 1 \end{bmatrix} \left( \sum_{j=1,\, j \neq i}^{m} f_{ij}(p_i^{\tau}, p_j^{\tau}) + g_i(p_i^{\tau}) + w_i^{\tau} \right) \qquad (11)$$

Agent i’s state $x_i^{\tau} = [e_i^{\tau}\; n_i^{\tau}\; u_i^{\tau}\; v_i^{\tau}]^{\top}$ consists of its east position, north position, eastern component of velocity, and northern component of velocity after the $\tau$-th iteration, and its position is given by $p_i^{\tau} = [e_i^{\tau}\; n_i^{\tau}]^{\top}$. The time step ∆t is given by $t^{\tau+1} - t^{\tau}$, constant for all τ. The term $a_i$ represents damping, with $a_i = 1$ for zero damping and $|a_i| < 1$ for stable systems. The function $f_{ij}(p_i, p_j)$ determines the coupling force applied by agent j to agent i. The function $g_i(p_i)$ represents the force applied by the environment to the agent at point $p_i$. Finally, $w_i^{\tau}$ is a zero-mean, stationary, Gaussian white noise process, uncorrelated with $p_j$ for all j, used to model the unpredictable decision-motive processes of agent i.
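One iteration of such a model can be sketched as follows. The sketch assumes a state-space form in which positions advance by ∆t times the velocity and velocities are scaled by the damping term and then incremented by the summed forces; the function name `step_agent`, the callables `coupling` and `environment`, and the noise parameter `noise_std` are hypothetical and stand in for the functions fitted from data in [13].

```python
import random

def step_agent(state, neighbors, a_i, dt, coupling, environment,
               noise_std=0.0, rng=None):
    """Advance one agent by one time step.

    state     -- (east, north, v_east, v_north) of agent i
    neighbors -- list of (east, north) positions of the other agents
    a_i       -- damping term (1 for zero damping, |a_i| < 1 for stability)
    coupling(p_i, p_j)  -- force (east, north) applied by agent j to agent i
    environment(p_i)    -- force (east, north) applied by the environment
    """
    east, north, v_east, v_north = state
    position = (east, north)
    force_e, force_n = environment(position)
    for other in neighbors:
        fe, fn = coupling(position, other)
        force_e += fe
        force_n += fn
    if noise_std > 0.0:
        # Zero-mean Gaussian noise models unpredictable decisions.
        rng = rng or random.Random()
        force_e += rng.gauss(0.0, noise_std)
        force_n += rng.gauss(0.0, noise_std)
    return (east + dt * v_east,
            north + dt * v_north,
            a_i * v_east + force_e,
            a_i * v_north + force_n)

no_coupling = lambda p_i, p_j: (0.0, 0.0)
no_environment = lambda p_i: (0.0, 0.0)
print(step_agent((0.0, 0.0, 1.0, 2.0), [], 0.5, 1.0, no_coupling, no_environment))
```

With no forces and no noise the agent simply coasts while its velocity decays by the factor a_i, which makes the role of the damping term easy to check by hand.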

4.4. Algorithm for the Camera Sequence or Mode of Operations

Step 1: Start (Camera)

Step 2: Snapshot (Camera)

Step 3: Sleep (Camera) For time t = t1 (min)

Step 4: Compute F(v) = 1/V

Step 5: Set time t2 = F(v)

Step 6: If time t > t2 (min)

Step 7: Then Sleep (Camera) for time t = t2

Step 8: else Sleep (Camera) for time t = t1

Step 9: wake (Camera)

Step 10: End (Camera)

The camera sleeps, or delays its operation, for time t (min) before “waking up” again to capture images. The variable t can be assigned one of two values, t1 and t2. Time t1 (min) is a predefined camera delay time determined by the user. The value of t1 can be RESET, after which it is assigned to t. The value of t1 can be SET to 5 minutes or 10 minutes, as predetermined by the user. Time t2 is modeled as a function of animal (cow) velocity V, given as F(v) = 1/V. Time t2 (min) is assigned the value of F(v) each time it is computed. The camera delay time t is then assigned the value of t2.
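The choice of delay in Steps 4 to 8 can be sketched as a single function. The sketch reads the algorithm as follows, which is an interpretation rather than a definitive implementation: t starts at the user-defined t1, t2 is computed as 1/V, and the camera sleeps for t2 when t exceeds t2 and for t1 otherwise. The function name and the fallback to t1 for a stationary animal (where 1/V is undefined) are assumptions.

```python
def camera_delay(t1_min, velocity):
    """Return the camera sleep time in minutes for the next cycle.

    t1_min   -- user-defined default delay t1 (min)
    velocity -- animal velocity V (m/s)
    """
    if velocity <= 0:
        # Assumption: F(v) = 1/V is undefined here, so keep the default delay.
        return t1_min
    t2_min = 1.0 / velocity          # Steps 4 and 5: t2 = F(v) = 1/V
    # Steps 6 to 8, with t initialised to t1.
    return t2_min if t1_min > t2_min else t1_min

for v in (0.1, 0.5, 2.0):
    print(v, camera_delay(5.0, v))
```

Under this reading the delay is the smaller of t1 and t2, so a fast-moving animal is photographed more often while a slow one is never checked less often than every t1 minutes.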

4.5. Simulation of Camera Sequence or Mode of Operations

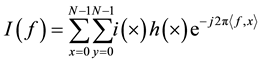

Figure 4 shows the simulation of the relationship (inverse proportionality) between camera delay time t2 (min) and animal velocity V (m/s). This indicates that when animal velocity towards a boundary is high, the camera delay time t2 is small. Time t2 (min) reduces as animal velocity V (m/s) increases.

![]()

Figure 4. Graph of camera delay time t2 (min) against animal velocity V (m/s).

5. Conclusion

In this paper, we proposed a model for an improved virtual fencing stray control system for cattle. The proposed model, expressed as an algorithm, uses a target recognition sub-system to determine the boundary to be avoided, monocular vision based estimation of boundary distances, and sound cues as aversive stimuli to steer animals away from protected environments. This is a substantial improvement on existing animal control techniques.

Further Works

Future work will evaluate the depth perception error and the stability of the non-invasive stray control system. This could be done using an appropriate simulation tool and the performance metrics of interest. The results of the simulation could be benchmarked against the existing schemes in order to determine its efficiency.
