Comparison of Fixed Point Methods and Krylov Subspace Methods Solving Convection-Diffusion Equations ()

1. Introduction

In the case of a linear system , the two main classes of iterative methods are the stationary iterative methods (fixed point methods) [1] - [3] , and the more general Krylov subspace methods [4] - [13] . When these two classical iterative methods suffer from slow convergence for problems which arise from typical applications such as fluid dynamics or electronic device simulation, preconditioning [14] - [16] is a key ingredient for the success of the convergent process.

, the two main classes of iterative methods are the stationary iterative methods (fixed point methods) [1] - [3] , and the more general Krylov subspace methods [4] - [13] . When these two classical iterative methods suffer from slow convergence for problems which arise from typical applications such as fluid dynamics or electronic device simulation, preconditioning [14] - [16] is a key ingredient for the success of the convergent process.

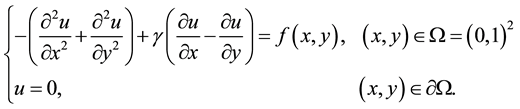

The goal of this paper is to find an efficient iterative method combined with preconditioning for the solution of the linear system  which is related to the following two-dimensional boundary value problem (BVP) [17] :

which is related to the following two-dimensional boundary value problem (BVP) [17] :

(1)

(1)

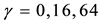

For parameter , we use finite difference method to discretize the Equation (1). Take

, we use finite difference method to discretize the Equation (1). Take  points in both the x- and y-direction and number the related degrees of freedom first left-right and next bottom-

points in both the x- and y-direction and number the related degrees of freedom first left-right and next bottom-

top. Now, we take , thus the grid size

, thus the grid size . For

. For , the convection term

, the convection term

equals to 0, using central difference method to the diffusion term, we get the discretization matrix of the Equation (1)

(2)

(2)

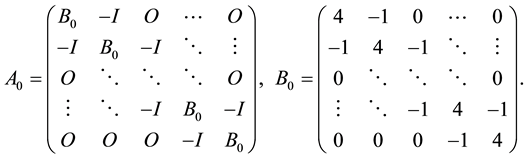

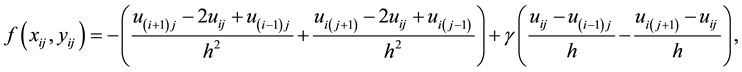

For , the diffusion term use a central difference and for the convection term use central differences scheme as the following:

, the diffusion term use a central difference and for the convection term use central differences scheme as the following:

(3)

(3)

we obtain the discretization matrix of the Equation (1)

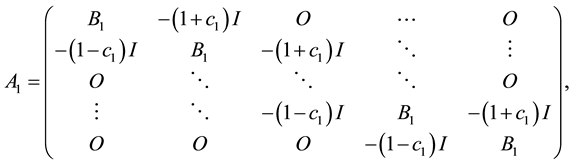

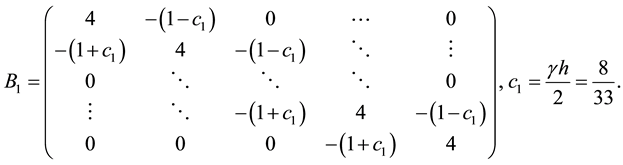

(4)

(4)

where

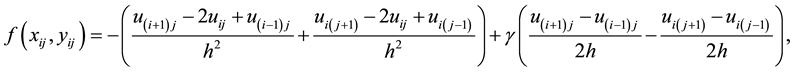

For , the diffusion term use a central difference and for the convection term use upwind differences scheme as the following:

, the diffusion term use a central difference and for the convection term use upwind differences scheme as the following:

(5)

(5)

we obtain the discretization matrix of the Equation (1)

(6)

(6)

where

![]()

2. Properties of the Discretization Matrix

In this section, we would first compute the eigenvalues of the discretization matrices![]() ,

, ![]() and

and ![]() of Equation (1), later analyze positive definite, diagonally dominant and symmetric properties of these matrices.

of Equation (1), later analyze positive definite, diagonally dominant and symmetric properties of these matrices.

2.1. Eigenvalues

Using MATLAB, the eigenvalues of the discretization matrices![]() ,

, ![]() and

and ![]() of Equation (1) are the following:

of Equation (1) are the following:

![]() (7)

(7)

2.2. Definite Positiveness

Matrix A is positive definite if and only if the symmetric part of A i.e. ![]() is positive definite. Since

is positive definite. Since

![]()

we have all the eigenvalues of ![]() (i = 0, 1, 2) are the following:

(i = 0, 1, 2) are the following:

![]() (8)

(8)

Therefore ![]() (i = 0, 1, 2) is positive definite, which means the discretization matrices

(i = 0, 1, 2) is positive definite, which means the discretization matrices![]() ,

, ![]() and

and ![]() of Equation (1) are positive definite.

of Equation (1) are positive definite.

2.3. Diagonal Dominance

For all the discretization matrices![]() ,

, ![]() and

and ![]() of Equation (1),

of Equation (1),

![]()

Therefore, the discretization matrices![]() ,

, ![]() and

and ![]() of Equation (1) are diagonally dominant.

of Equation (1) are diagonally dominant.

2.4. Symmetriness

It is easy to see that only ![]() is symmetric.

is symmetric.

3. Stationary Iteration Methods and Krylov Subspace Methods

The goal of this section is to find an efficient iterative method for the solution of the linear system![]() . Since

. Since ![]() are positive definite and diagonally dominant, we would use fixed point methods and Krylov subspace methods. In this section, first find the suitable convergence tolerance, later use numerical experiments to compare the convergence speed of various iteration methods.

are positive definite and diagonally dominant, we would use fixed point methods and Krylov subspace methods. In this section, first find the suitable convergence tolerance, later use numerical experiments to compare the convergence speed of various iteration methods.

3.1. Convergence Tolerance

Without loss of generality, take![]() , convergence tolerance

, convergence tolerance ![]() and assume that

and assume that![]() , then

, then

![]()

In order to achieve ![]() in the numerical experiments, we would set convergence tolerance

in the numerical experiments, we would set convergence tolerance![]() .

.

3.2. Numerical Experiments

Computational results using fixed point methods such as Jacobi, Gauss-Seidel, SOR etc. and projection methods such as PCG, BICG, BICGSTAB, CGS, GMRES and QMR are listed out in figures (Figures 1-21), for all three different ![]() values

values![]() . The projection methods are performed with different preconditioning methods such as Jacobi preconditioning, luinc and cholinc preconditioning.

. The projection methods are performed with different preconditioning methods such as Jacobi preconditioning, luinc and cholinc preconditioning.

The tables (Table 1 and Table 2) containing relevant computational details are also given below.

4. Conclusions

From the figures (Figures 1-21) and tables (Table 1 and Table 2), we obtain the following conclusions:

• The convergence speeds of SOR, Backward SOR and SSOR are faster than that of GS and Backward GS; while GS and Backward GS are faster than Jacobi;

• If matrix A is symmetric![]() , the convergence speeds of SOR, Backward SOR and SSOR are the same; otherwise

, the convergence speeds of SOR, Backward SOR and SSOR are the same; otherwise![]() , SSOR is faster than Backward SOR and SOR;

, SSOR is faster than Backward SOR and SOR;

• The convergence speeds of SOR and Backward SOR are the same, also for GS and Backward GS;

• From Table 2, the iteration steps and the time for all fixed point methods of the case in which ![]() are less than the case in which

are less than the case in which![]() , and also the case in which

, and also the case in which ![]() are less than the case in which

are less than the case in which![]() ;

;

• The upwind difference method is more suitable to be applied to convection dominant problem than the cen-

![]()

Figure 1. Comparison of convergence speed using fixed point methods when![]() .

.

![]()

Figure 2. Comparison of convergence speed using fixed point methods when![]() .

.

![]()

Figure 3. Comparison of convergence speed using fixed point methods when![]() .

.

![]()

Figure 4. PCG with different preconditionings when![]() .

.

![]()

Figure 5. BICG with different preconditionings when![]() .

.

![]()

Figure 6. BICGSTAB with different preconditionings when![]() .

.

![]()

Figure 7. CGS with different preconditionings when![]() .

.

![]()

Figure 8. GMRES with different preconditionings when![]() .

.

![]()

Figure 9. QMR with different preconditionings when![]() .

.

![]()

Figure 10. PCG with different preconditionings when![]() .

.

![]()

Figure 11. BICG with different preconditionings when![]() .

.

![]()

Figure 12. BICGSTAB with different preconditionings when![]() .

.

![]()

Figure 13. CGS with different preconditionings when![]() .

.

![]()

Figure 14. GMRES with different preconditionings when![]() .

.

![]()

Figure 15. QMR with different preconditionings when![]() .

.

![]()

Figure 16. PCG with different preconditionings when![]() .

.

![]()

Figure 17. Bicg with different preconditionings when![]() .

.

![]()

Figure 18. BICGSTAB with different preconditionings when![]() .

.

![]()

Figure 19. CGS with different preconditionings when![]() .

.

![]()

Figure 20. GMRES with different preconditionings when![]() .

.

![]()

Figure 21. QMR with different preconditionings when![]() .

.

![]()

![]()

Table 1. Computational details of different projection methods with different preconditioning.

![]()

Table 2. Computational details of different fixed point methods.

tral difference method;

• The convergence speed of the six projection methods including PCG, BICG, BICGSTAB, CGS, GMRES and QMR under luinc preconditioning are faster than under cholinc preconditioning, while under cholinc preconditioning are faster than Jacobi preconditioning;

• The six projection methods under Jacobi, luinc and cholinc are convergent when![]() , however, for

, however, for![]() , the PCG method are not convergent and also the CGS method under Jacobi preconditioning are not convergent when

, the PCG method are not convergent and also the CGS method under Jacobi preconditioning are not convergent when![]() .

.

Acknowledgements

I thank the editor and the referee for their comments. I would like to express deep gratitude to my supervisor Prof. Dr. Mark A. Peletier whose guidance and support were crucial for the successful completion of this paper. This work was completed with the financial support of Foundation of Guangdong Educational Committee (2014KQNCX161, 2014KQNCX162).