Neural Network Based on SET Inverter Structures: Neuro-Inspired Memory

1. Introduction

Single-Electron Devices (SEDs) have attracted much attention since the 1980s, when it was shown that they could be used to fabricate memory devices, low-power logic devices and high-performance sensors. Among SEDs, Single-Electron Transistors (SETs) have been the most studied devices, because they were seen as potential successors to the present metal-oxide-semiconductor (MOS) transistor [1].

This device is made of an island connected through two tunneling junctions to a drain and a source electrode. The distance between the two conductors of a junction is on the order of a few nanometers, and an electron can tunnel through the insulator [2] when the absolute voltage difference across the junction is decreased by the tunneling event [3].

The idea of combining Single-Electron Transistors (SETs) with neural network architectures has raised considerable interest in recent years because of its potentially unique functionalities. The rationale for combining neural networks and SET devices is the possibility of taking advantage of both technologies [3] [4]: the high gain, speed and density of SETs on the one hand, and the learning capacity and problem-solving abilities of Artificial Neural Networks (ANNs) on the other. The goal is Single-Electron Memories (SEMs) with low power consumption, high density and brain-like behavior.

In this paper, we present simulations of the two main parts of an elementary SET-based neural network (the perceptron) using the SIMON simulator [5] and MATLAB [6]. The purpose of our work is to present the advantages of simulating such an elementary neural network with a Monte Carlo simulator [5], which features a graphical circuit editor embedded in a graphical user interface as well as the simulation of co-tunneling events and a single-step interactive analysis mode. The idea is then to present this neural network as a model of a “smart” SET/SEM device.

2. Single-Electron Devices

2.1. Single-Electron Transistor

Since the 1980s, developments in both semiconductor technology and theory have led to a completely new field of research focusing on devices whose operation is based on the discrete nature of electrons tunneling through thin potential barriers. These devices, which exhibit charging effects including Coulomb blockade, are the single-electron devices [7].

Single-electron tunneling is among the most promising technologies for future generations that must meet the required increase in density and performance and the decrease in power dissipation [8]. The main component of the SET is the tunnel junction, which can be implemented using silicon structures, metal-insulator-metal structures or GaAs quantum dots.

The tunnel junction can be thought of as a leaky capacitor. At low bias, electrons are blockaded; the blockade is overcome and a current flows when the junction voltage V exceeds the threshold given by the charging energy. The junction then behaves like a resistor.
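As a rough numerical illustration of this threshold, the sketch below evaluates the charging energy e²/(2C) and the corresponding blockade threshold voltage e/(2C) of a single junction, which are the standard expressions of the orthodox theory. The junction capacitance of 1 aF, the temperature of 4.2 K and the function names are assumptions chosen for illustration; they are not parameters taken from this paper.

```python
# Minimal sketch: Coulomb blockade threshold of a single tunnel junction.
# The capacitance and temperature below are assumed values, not from the paper.

E_CHARGE = 1.602176634e-19   # elementary charge in coulombs (SI definition)
K_BOLTZMANN = 1.380649e-23   # Boltzmann constant in J/K (SI definition)


def charging_energy(capacitance_f):
    """Energy needed to add one electron to a capacitance C: e^2 / (2C)."""
    return E_CHARGE ** 2 / (2.0 * capacitance_f)


def threshold_voltage(capacitance_f):
    """Junction voltage above which the blockade is lifted: e / (2C)."""
    return E_CHARGE / (2.0 * capacitance_f)


c_junction = 1e-18    # assumed junction capacitance: 1 aF
temperature = 4.2     # assumed operating temperature in kelvin

e_c = charging_energy(c_junction)
v_th = threshold_voltage(c_junction)
thermal_energy = K_BOLTZMANN * temperature

print(f"charging energy  : {e_c:.3e} J")
print(f"threshold voltage: {v_th * 1e3:.2f} mV")
print(f"E_C / (k_B T)    : {e_c / thermal_energy:.1f}")
```

The last ratio indicates how far the charging energy exceeds the thermal energy, which is the usual condition for observing the blockade.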

Recent research on SETs has produced new ideas that could substantially change random access memory and digital data storage technologies.

2.2. Single-Electron Memories

During the last few years, the development of new memory devices has attracted much attention. Single-electron memories offer great potential for SETs to be used in the design of nonvolatile Random Access Memories (RAMs), for example for mobile computer and communication applications [9].

Several single-electron memory cells have been proposed in the literature, such as the single-electron flip-flop, the Multiple Tunnel Junction (MTJ) proposed by Nakazato and Ahmed [10], the Q0-independent memory and others.

Each memory differs from the others in properties such as the complexity of the architecture, the dependence on background charges and the operating temperature.

Neural networks are suitable architectures for SET devices, and the combination of the two technologies has many advantages. Because they are so small, SET devices can yield a powerful neural network with extremely low power dissipation.

3. Neural Network

3.1. The Neural Biological Model

The human brain contains more than a billion neurons, each of which works like a simple processor. Only the massive interaction between all cells and their parallel processing makes the brain’s abilities possible [11].

By definition, “neurons are basic signaling units of the nervous being in which each neuron is a discrete cell whose several processes are from its cell body” [12] .

Figure 1 shows the biological model of the neuron, whose structure has four main regions, including the cell body, the dendrites and the axon. The cell body is the heart of the cell: it contains the nucleus and maintains protein synthesis.

The dendrites collect synaptic potentials from other neurons and transmit them to the soma. The soma performs a non-linear processing step (called fire model): if the total synaptic potential exceeds a certain threshold then a spike is generated [13] . A spike is transmitted to other neurons via synapses, the points of connection between two neurons.

Synapses dominate the architecture of the brain and are responsible for its structural plasticity [14] and robustness; electrochemical communication takes place at these junctions.

3.2. Artificial Neural Model

3.2.1. Perceptron

The best-known neural processing element for artificial analog neural networks is one of the perceptron models of Rosenblatt (1962) [15]. This model is an extension of the older neural model of McCulloch and Pitts (1943) [16].

The perceptron forms the basic neural processing element of artificial analog neural networks: a single-layer neural network with weights and biases trained to produce a correct target vector [17]. It represents the combination of a neuron and n synapses. Figure 2 shows the complete perceptron.

![]()

Figure 2. The complete perceptron, consisting of multiple synapses and a binary neuron.

Many types of neural network hardware based on single-electron transistors can be found in the literature. Our work is based on a complete two-input perceptron implemented in SET technology by Van de Haar and Hoekstra [18], which is composed of two important stages: one SET inverter performs the function of the synapse, while another performs the activation function of the neuron.

SIMON simulations are shown using an input test pattern that visits all possible states of the neural network. Note that the various coupling capacitances between device elements, such as the tunnel junction capacitance, were extracted from the SIMON simulator after several trials using stationary simulation. In fact, for every such time step “even number tunnel events are simulated and averaged”.

3.2.2. The Synapse

The synapse is the point of connection between neurons. It stores the analog weight value Wi and multiplies it by the digital input signal Xi.

The basic principle of a synapse is to perform the following two functions.

・ Modify connection strength.

・ Store weight.

In the SET inverter, the analog values of Wi and Xi are represented by the voltages VWi and VXi. Figure 3 shows the synapse based on the SET inverter structure, where VW0 is the weight value.

The digital input signals VX0 are set by the supervisor or by the output of another perceptron. The analog weights Wi and the digital inputs signals Xi are normalized between 0 and 1. In order to translate these digital values to voltages in the SET inverter structure, we simulate the transfer function using SIMON simulator with the parameters set as chosen in Figure 3.

The transfer function of the SET inverter based synapse is shown in Figure 4. VX0 is set to 0 and 6 mV in order to obtain this simulation result.

The transfer function is approximately linear when the input voltage VW0 lies in the region 3.9 mV < VW0 < 5.8 mV. In this region the structure performs an inversion; as a consequence, the analog weight value Wi = 1 is mapped to 3.9 mV and Wi = 0 is mapped to 5.8 mV.

Table 1 shows the translation of parameter Wi into voltage VWi. Note that parameter Xi can only take the value 0 or 1; in other words, VXi can be 0 or 6 mV.
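The mapping between normalized parameters and voltages can be sketched as follows. Only the endpoints (Wi = 1 at 3.9 mV, Wi = 0 at 5.8 mV, Xi = 1 at 6 mV) come from the text; the linear interpolation between the weight endpoints is an assumption motivated by the approximately linear transfer region, and the function names are hypothetical.

```python
# Minimal sketch: translate normalized synapse parameters into voltages (mV).
# Endpoints are taken from the text; linear interpolation is an assumption.

V_W_AT_ONE_MV = 3.9    # weight voltage for Wi = 1
V_W_AT_ZERO_MV = 5.8   # weight voltage for Wi = 0
V_X_HIGH_MV = 6.0      # input voltage for Xi = 1 (Xi = 0 maps to 0 mV)


def weight_to_voltage_mv(w):
    """Map a normalized weight in [0, 1] to VWi, with inverted polarity."""
    if not 0.0 <= w <= 1.0:
        raise ValueError("weight must be normalized between 0 and 1")
    return V_W_AT_ZERO_MV - (V_W_AT_ZERO_MV - V_W_AT_ONE_MV) * w


def input_to_voltage_mv(x):
    """Map a digital input (0 or 1) to VXi."""
    if x not in (0, 1):
        raise ValueError("input must be 0 or 1")
    return V_X_HIGH_MV * x


for w in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(f"Wi = {w:.2f} -> VWi = {weight_to_voltage_mv(w):.3f} mV")
```

Because the inverter inverts, a larger weight corresponds to a lower weight voltage in this sketch.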

3.2.3. The Neuron

The task of the neuron is to sum all weighted input signals and to classify the summed signal by means of an activation function. The activation function is a limiting function; different activation functions exist, the most commonly used being the hard-limiter and the sigmoid function [17].

The neuron should perform the following two functions:

・ Gather input signals.

・ Classify the results.

![]()

Figure 3. Synapse based on the SET inverter structure.

![]()

Figure 4. Transfer function of the SET inverter based synapse.

![]()

Table 1. Translation of Wi into voltages VWi.

In Figure 5, a two-input neuron based on a SET inverter structure is shown. The coupling capacitors CC0 and CC1 perform the summation function and the activation function is carried out by the SET inverter structure [17] .

The parameter set for the neuron is scaled by a factor of 0.8 with respect to the parameter set of the synapse, in order to obtain output signals in the same voltage range.

Two important parameters can be distinguished. The first is the SET junction capacitance CT, which describes the behavior of a SET circuit operating in the single-electron transport regime. The second is the tunnel resistance RT, which is needed to describe the behavior of a SET circuit in the high-current regime.

The neuron’s activation function is a hard-limiter function, as shown in Figure 6. The threshold voltage, which is the input voltage Vg at which the output voltage changes, is approximately 3.3 mV in this configuration, which corresponds to the threshold parameter S0 = 0.55.
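The stated correspondence between the 3.3 mV threshold voltage and S0 = 0.55 is consistent with normalizing the threshold by the 6 mV logic-high input level. This normalization is an assumption inferred from the numbers in the text, not a formula given explicitly, and the symbols $V_{g,\mathrm{th}}$ and $V_{X,\mathrm{max}}$ are introduced here only for illustration:

```latex
S_0 \approx \frac{V_{g,\mathrm{th}}}{V_{X,\mathrm{max}}}
    = \frac{3.3\ \mathrm{mV}}{6\ \mathrm{mV}} = 0.55
```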

3.2.4. Simulation of the Perceptron

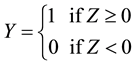

The perceptron developed by Rosenblatt (1957) is an artificial neuron [15]-[17] whose activation function is a step function. It consists of multiple synapses and a neuron, designed here using the SET inverter structure. If the adder output is equal to or greater than the threshold Z, the neuron fires. If the adder output is smaller than the threshold Z, there is no neuron output.

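In Equation (1), the output signal Y is expressed in terms of the input variables Xi and Wi for the general case of n synapses, with Z the threshold:

$$Y = \begin{cases} 1, & \sum_{i=0}^{n-1} W_i X_i \ge Z \\ 0, & \sum_{i=0}^{n-1} W_i X_i < Z \end{cases} \qquad (1)$$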

Figure 7 shows a complete two-input perceptron, which is based on SET inverter structures. This perceptron was first published at ICES2003 [17] [18] and simulated using P-Spice. Therefore, in our work special measures had to be taken in order to simulate this circuit correctly in SIMON.

![]()

Figure 5. Two-input SET inverter based neuron.

![]()

Figure 6. Transfer function of the SET inverter structure based neuron.

![]()

Figure 7. Two-input perceptron, based on SET inverter structures.

Before this perceptron can be simulated, the desired output has to be defined. In order to check if the output signal is the desired signal, a good test-set of input signals has to be created using MATLAB taking into account Equation (1). In Table 2 a test-set of input signals and the desired output of a two-input perceptron is shown.

The threshold level S0 is assumed to be 0.35 (an arbitrary choice). The operation of the perceptron is then fully tested using the SIMON simulator, and the results are presented in Table 3. As with the results obtained with P-Spice in [17], the output signal VY agrees with the desired output signals obtained from the test patterns of Table 2. However, these outputs are inverted with respect to the outputs obtained using MATLAB. In order to obtain a non-inverted output signal VY, an extra inversion stage (identical to the neuron stage of Figure 5) can be added to the perceptron of Figure 7.
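A test-set of this kind can be sketched as follows. The sketch is written in Python rather than MATLAB, applies the threshold rule described in the text (the neuron fires when the weighted sum reaches the threshold) with S0 = 0.35, and lists the inverted value that the SET circuit would produce without an extra inversion stage. The weight values and all function names are hypothetical, since the contents of Table 2 are not reproduced here.

```python
# Minimal sketch: desired outputs of a two-input perceptron with S0 = 0.35.
# The weights below are hypothetical; they are not the values of Table 2.
from itertools import product

S0 = 0.35  # threshold level stated in the text


def perceptron(inputs, weights, threshold=S0):
    """Return 1 if the weighted sum reaches the threshold, else 0."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    return 1 if weighted_sum >= threshold else 0


def invert(y):
    """Logical inversion, as performed by a SET inverter stage."""
    return 1 - y


def build_test_set(weights):
    """List (X0, X1, desired Y, inverted Y) for all binary input pairs."""
    rows = []
    for x0, x1 in product((0, 1), repeat=2):
        y = perceptron((x0, x1), weights)
        rows.append((x0, x1, y, invert(y)))
    return rows


HYPOTHETICAL_WEIGHTS = (0.2, 0.5)  # assumed W0, W1

print("X0 X1  Y  inverted Y")
for x0, x1, y, y_inv in build_test_set(HYPOTHETICAL_WEIGHTS):
    print(f" {x0}  {x1}  {y}  {y_inv}")
```

The inverted column corresponds to the circuit output before the optional extra inversion stage is added.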

A very interesting idea that brings multiple benefits to nanotechnology is a multi-level single-electron memory [19]. In this context, we have tried to highlight the discreteness of the electron charge at the memory node N1.

The shapes of the control signals (the weight VWi and the input VXi) are very important for observing the evolution of the charge Q versus time. In this work, we consider that the architecture works in the same way as a memory.

Figure 8 shows the shape of the charge Q(t) in the island, which allows us to count the number of electrons stored in the memory node (node N1) and to identify the instant t of writing or erasing.
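Counting electrons from a charge trace amounts to dividing the node charge by the elementary charge and watching for changes in the result. The sketch below illustrates this under stated assumptions: the sample trace is invented for illustration (it is not data from Figure 8), the sign convention of the simulator output is unknown and is therefore ignored by taking the absolute value, and the function names are hypothetical.

```python
# Minimal sketch: count stored electrons and locate write/erase instants
# from a sampled charge trace Q(t). The trace below is invented, not Figure 8.

E_CHARGE = 1.602176634e-19  # elementary charge in coulombs


def electrons_stored(charge_c):
    """Number of electrons corresponding to a node charge (sign ignored)."""
    return round(abs(charge_c) / E_CHARGE)


def count_changes(times, charges):
    """Return (t, n) pairs at each sample where the electron count changes."""
    if not charges:
        return []
    events = []
    previous = electrons_stored(charges[0])
    for t, q in zip(times[1:], charges[1:]):
        n = electrons_stored(q)
        if n != previous:
            events.append((t, n))
            previous = n
    return events


# Hypothetical trace: the node charges up to five electrons, then holds.
sample_times = [0.0, 1.0, 2.0, 3.0, 4.0]  # arbitrary time units
sample_charges = [0.0, -1 * E_CHARGE, -3 * E_CHARGE,
                  -5 * E_CHARGE, -5 * E_CHARGE]

for t, n in count_changes(sample_times, sample_charges):
    print(f"t = {t}: {n} electron(s) stored")
```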

At first, the input voltage VX1 is zero and there is no electron in the quantum dot N1. When a negative input voltage VX1 = −6 mV is applied, a certain number of electrons are stored inside the quantum dot, and no more electrons are transported out of it. Because of the Coulomb blockade effect and the potential barrier, the electrons cannot flow back to ground.

This result shows that the number of electrons in the memory node after charging (the red curve, for VW1 = 9 mV) increases as the input voltage increases, until it reaches 5 electrons.

After VX1 returns to zero, the electrons are not lost; this property gives the network great potential for use in the design of nonvolatile RAMs.

The weight VW1 plays the role of a parameter that is adjusted during the training process. As we can see in Figure 8, the number of charges stored in node N1 depends on the value of VW1. When VW1 is equal to 9 mV, five electrons are stored in node N1, but when the value of the weight is decreased, the number of written electrons increases until it reaches ≈19 electrons for VW1 = 1 mV. It can be seen that this memory can be considered a smart memory, because the number of charges stored in the memory can be manipulated by a learning algorithm.

![]()

Table 2. Test-set of input signals and the desired output.

![]()

Table 3. A test-set of input signals.

![]()

Figure 8. Simulation results of the charge and influence of the value of the weight VW1 and the input VX1.

This learning algorithm is used to train the network for a particular purpose, in our case to achieve very high storage densities at extremely low power consumption.

4. Conclusions

In this paper, a complete two-input perceptron based on SET inverter structures was presented, discussed and simulated using the SIMON simulator and MATLAB. It was also shown that this architecture works as a random access memory. Two features are important in this memory: the first is its low power consumption and the second is the dependence of the stored charge on the value of the weight.

The advantage of a Monte Carlo simulator such as SIMON, which is designed to solve capacitance systems that contain tunnel junctions, is the possibility of investigating the evolution of charge versus time by means of a simulation process based on the orthodox theory.

This work opens the prospect of a smart memory in which the number of bits stored can be manipulated by means of a learning algorithm.

NOTES

*Corresponding author.