Theoretical Economics Letters

Vol. 3 No. 1 (2013), Article ID: 28160, 4 pages. DOI: 10.4236/tel.2013.31008

An Information Theoretic Approach to Understanding the Micro Foundations of Macro Processes

1Department of Agricultural and Resource Economics, University of California, Berkeley, USA 2Department of Agricultural and Resource Economics and Graduate School, University of California, Berkeley, USA

Email: sberto@berkeley.edu, gjudge@berkeley.edu

Received December 13, 2012; revised January 14, 2013; accepted February 15, 2013

Keywords: Empirical Exponential Likelihood; Information Theory

ABSTRACT

In the context of a simple equilibrium macro process we suggest a probability basis for recovering information regarding the unknown and unobservable micro process, and solving the resulting inverse problem.

1. Introduction

Policy makers often use macro indicators without knowledge of their allocative and distributive micro impacts. Although observations from economic behavior systems are micro and statistical in nature, the world we perceive is a macro one and the data we work with are often single valued in content. In addition, the dynamic transition process between the two worlds-economic systems often remains hidden. Given single valued-point equilibrium outcome data, in this paper we seek a probabilistic way to unlock the corresponding hidden micro content. In the context of an invisible hand economy, we consider a single market process and the aggregate price data provided by a point equilibrium in the price and quantity space. Visualizing an economic-market process that involves agents in the form of buyers and sellers who are driven by an optimization principle, our objective is to use these data to recover information at the micro level and to provide a measure of the stability-order of the process. A traditional economic equilibrium approach leads to imaginary individuals coming to an imaginary market with ready-made bid and offer prices. This in turn leads to conceptual demand and supply functions involving unknown and unobservable parameters and a solution that satisfies a single valued equilibrium concept. This framework becomes the basis for a range of logical conclusions involving such concepts as excess demand and supply, movements along a demand or supply function, and shifts in the demand and supply functions that are not observable.

Realistically, what evolves data wise from a market equilibrium is a point in the price-quantity space that summarizes the aggregate data-information regarding buyers and sellers. Assuming a general price-quantity relationship, this macro-aggregate data point is useful in a summary way, but what would be more useful from a market performance standpoint is information on the price probability-frequency distributions underlying the unknown and unobservable possible micro prices that produced the single macro state equilibrium result. Capturing the unknown underlying discrete micro state space price frequencies from the information gained by observing the aggregate-average price outcome data requires the solution of an inverse problem. This type of information recovery provides a way of interpreting the outcome of micro market experiments as a probability measure on a defined discrete state space. The resulting discrete price-quantity state space probabilities provide 1) a bridge between the macro and the micro properties of the market process while avoiding the underlying micro details and 2) a basis for understanding agent behavior and the efficiency of market processes-outcomes. The recovered probabilities may be used to indicate how changes in agents' behavior may change the probabilities, and thereby affect the stability, competitive nature, and informational content of the market process, and the resulting macro indicator/outcome.

The paper proceeds as follows: given an aggregate market equilibrium price and quantity, in Section 2 we develop a model for the price state space and Section 3 outlines the conceptual and methodological basis for recovering, in an informational-probability context, the underlying micro distributions of the unobserved possible state space prices. Section 4 presents an illustrative example and in Section 5 we summarize the implications of the micro-macro bridge.

2. Modeling the Price State Space

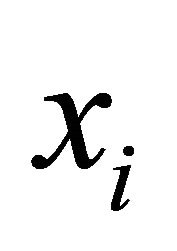

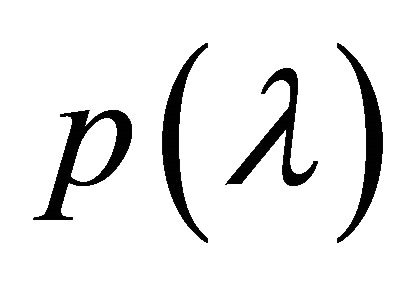

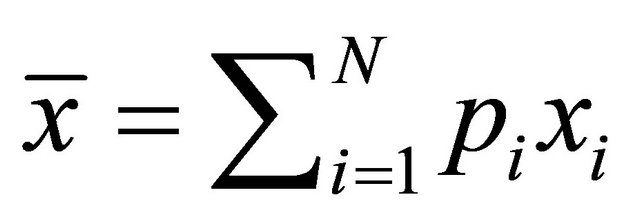

As a basis for defining the information recovery problem, we model a single market process where the aggregate-average outcome price![]() , is a result of market experiments carried out a large number of times. Each experiment reveals one of n potentially equally likely price outcomes

, is a result of market experiments carried out a large number of times. Each experiment reveals one of n potentially equally likely price outcomes . Assume as the market operates, the experiment is repeated a large number of times, but the only thing we know is the average price resulting from these trials. Given this sample of price information, and nothing else, what probability-price frequency pi should we assign to each of the possible

. Assume as the market operates, the experiment is repeated a large number of times, but the only thing we know is the average price resulting from these trials. Given this sample of price information, and nothing else, what probability-price frequency pi should we assign to each of the possible  price outcomes? With an average price of

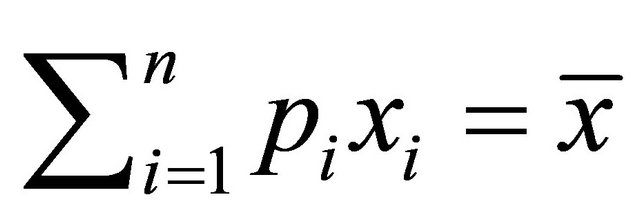

price outcomes? With an average price of![]() , the resulting moment constraint

, the resulting moment constraint

, (1)

, (1)

can be satisfied by an infinite number of probability mass functions(pmfs)-price frequencies. In other words, there are a large number of price frequencies that can lead to a mean market price ![]() . The price information recovery problem is how to choose one of the possible pmfs-price frequencies, to represent the market price behavior. The sample

. The price information recovery problem is how to choose one of the possible pmfs-price frequencies, to represent the market price behavior. The sample  frequencies are not given to us. However, the probability space defines, in exponential form, a unique family of pmfs-price frequencies,

frequencies are not given to us. However, the probability space defines, in exponential form, a unique family of pmfs-price frequencies,  , where fi

, where fi

represents the frequency of the price outcomes equal to the prescribed mean. Consequently, the problem may be solved in a statistical information theoretic context by Lagrange multiplier-extremum methods.
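To make the non-uniqueness concrete, the following minimal sketch lists several different pmfs that all satisfy the same mean constraint. The six-point price support and the candidate pmfs are illustrative assumptions, not data from the paper.

```python
# Several distinct pmfs on one price support that share the same mean.
prices = [1, 2, 3, 4, 5, 6]  # hypothetical price support, chosen for illustration


def pmf_mean(pmf, support):
    """Mean of a discrete pmf over the given support."""
    return sum(p * x for p, x in zip(pmf, support))


candidates = {
    "central": [0.0, 0.0, 0.5, 0.5, 0.0, 0.0],  # mass on the two middle prices
    "extreme": [0.5, 0.0, 0.0, 0.0, 0.0, 0.5],  # mass on the two end prices
    "uniform": [1 / 6] * 6,
}

# Any convex combination of feasible pmfs is feasible as well,
# so the feasible set contains infinitely many pmfs.
w = 0.3
candidates["mixture"] = [
    w * a + (1 - w) * b
    for a, b in zip(candidates["central"], candidates["extreme"])
]

for name, pmf in candidates.items():
    print(f"{name:8s} sum={sum(pmf):.3f} mean={pmf_mean(pmf, prices):.3f}")
```

Because the mean constraint alone cannot discriminate among these candidates, an additional selection criterion is needed.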

3. An Information Theoretic Approach to Information Recovery

One way to solve this ill-posed inverse problem for the unknown probabilities $p_i$, without making a large number of assumptions, imposing artificial information to make the problem tractable, or introducing additional information, is to formulate it as an extremum problem. This type of extremum problem is in many ways analogous to allocating probabilities in a contingency table where $p_i$ and $q_i$ are, respectively, the observed and reference distributions of a given event. This is a well developed information theoretic method, and the solution is achieved by minimizing the divergence between the two sets of probabilities, as far as the data will permit. That is, we optimize a goodness-of-fit (pseudo-distance measure) criterion, subject to the data-moment space constraint. One attractive set of divergence measures (see [1,2]) involves the following Cressie-Read (CR) power divergence distance measure ([3,4]):

$$I(p, q, \gamma) = \frac{1}{\gamma(\gamma + 1)} \sum_{i=1}^{n} p_i \left[ \left( \frac{p_i}{q_i} \right)^{\gamma} - 1 \right], \tag{2}$$

where $\gamma$ is an arbitrary and unspecified parameter.
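As a rough illustration of the CR family, the sketch below evaluates the power divergence in its standard form, $I(p, q, \gamma) = \frac{1}{\gamma(\gamma+1)} \sum_i p_i [(p_i/q_i)^{\gamma} - 1]$, and handles the two limiting cases separately. The function name `cr_divergence` and the restriction to strictly positive probabilities are assumptions made here for simplicity.

```python
import math


def cr_divergence(p, q, gamma):
    """Cressie-Read power divergence between discrete pmfs p and q.

    Assumes all entries of p and q are strictly positive.
    """
    if any(v <= 0 for v in list(p) + list(q)):
        raise ValueError("this sketch assumes strictly positive probabilities")
    if abs(gamma) < 1e-12:
        # Limit gamma -> 0: sum_i p_i * ln(p_i / q_i)
        return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))
    if abs(gamma + 1.0) < 1e-12:
        # Limit gamma -> -1: sum_i q_i * ln(q_i / p_i)
        return sum(qi * math.log(qi / pi) for pi, qi in zip(p, q))
    total = sum(pi * ((pi / qi) ** gamma - 1.0) for pi, qi in zip(p, q))
    return total / (gamma * (gamma + 1.0))


p = [0.1, 0.2, 0.3, 0.4]
q = [0.25] * 4  # uniform reference distribution
for g in (-1.0, -0.5, 0.0, 0.5, 1.0):
    print(f"gamma={g:+.1f}  I(p, q, gamma)={cr_divergence(p, q, g):.6f}")
```

At $\gamma = 1$ the measure equals one half of Pearson's chi-square distance, which gives a quick sanity check on the general formula.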

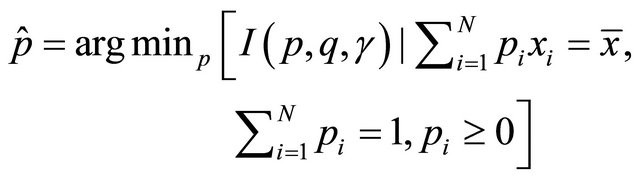

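In the context of recovering the unknown price probability distribution, if we use the CR criterion (2), this suggests we seek the following extremum solution:

$$\hat{p} = \arg\min_{p} \left[ I(p, q, \gamma) \;\Big|\; \sum_{i=1}^{n} p_i x_i = \bar{x}, \; \sum_{i=1}^{n} p_i = 1, \; p_i \ge 0 \right], \tag{3}$$

where the reference distribution $q$ is specified as a uniform distribution.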

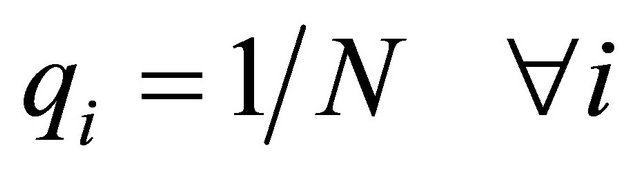

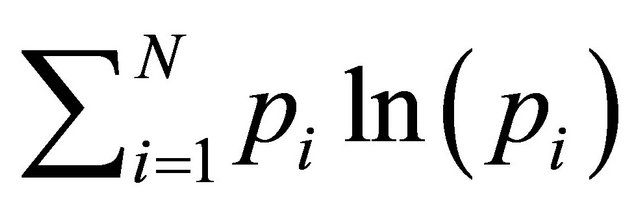

As $\gamma$ ranges from −1 to 1, a family of CR functionals emerges. Three variants of $I(p, q, \gamma)$ have received explicit attention in the literature [5]. If we assume for expository purposes that the reference distribution is discrete uniform, $q_i = 1/n$, and let the CR parameter $\gamma \to 0$, then $I(p, q, \gamma)$ converges to an estimation criterion equivalent to the maximum empirical exponential likelihood (MEEL) criterion $H(p) = -\sum_{i=1}^{n} p_i \ln p_i$. This criterion is also known in the literature as the maximum entropy solution of [5].¹ What emerges with the MEEL solution is a descriptive measure of the corresponding unknown price probability distribution(s), which in our case is consistent with the mean of prices. Solutions for these distance measures cannot be written in closed form and must be numerically determined.
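The limiting case can be checked with a first-order expansion. The following is a sketch under the assumptions that all $p_i > 0$, that the CR measure has its standard form $I(p, q, \gamma) = \frac{1}{\gamma(\gamma+1)} \sum_i p_i [(p_i/q_i)^{\gamma} - 1]$, and that the reference distribution is uniform, $q_i = 1/n$:

$$\left(\frac{p_i}{q_i}\right)^{\gamma} = \exp\!\left(\gamma \ln \frac{p_i}{q_i}\right) = 1 + \gamma \ln \frac{p_i}{q_i} + O(\gamma^2),$$

$$\lim_{\gamma \to 0} I(p, q, \gamma) = \lim_{\gamma \to 0} \frac{1}{\gamma + 1} \sum_{i=1}^{n} p_i \left[ \ln \frac{p_i}{q_i} + O(\gamma) \right] = \sum_{i=1}^{n} p_i \ln \frac{p_i}{q_i},$$

$$\sum_{i=1}^{n} p_i \ln (n p_i) = \ln n + \sum_{i=1}^{n} p_i \ln p_i = \ln n - H(p), \qquad H(p) = -\sum_{i=1}^{n} p_i \ln p_i.$$

Since $\ln n$ does not depend on $p$, minimizing the limiting divergence is the same as maximizing $H(p)$.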

Information Theoretic Formulation

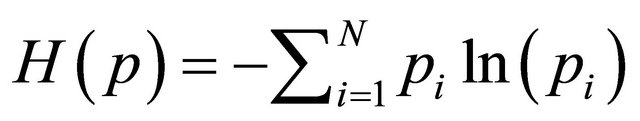

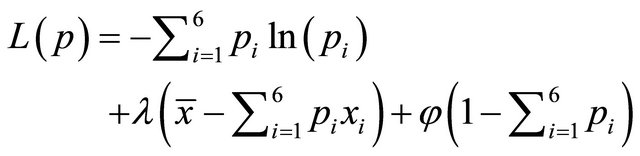

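If, consistent with our multinomial formulation, we use the CR criterion with $\gamma \to 0$, we select the probabilities that maximize

$$H(p) = -\sum_{i=1}^{n} p_i \ln p_i \tag{4}$$

subject to

$$\sum_{i=1}^{n} p_i x_i = \bar{x} \tag{5}$$

and the condition that the probabilities must sum to one

$$\sum_{i=1}^{n} p_i = 1. \tag{6}$$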

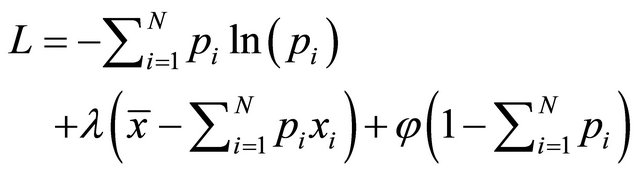

The corresponding Lagrangian for the extremum problem is

(7)

(7)

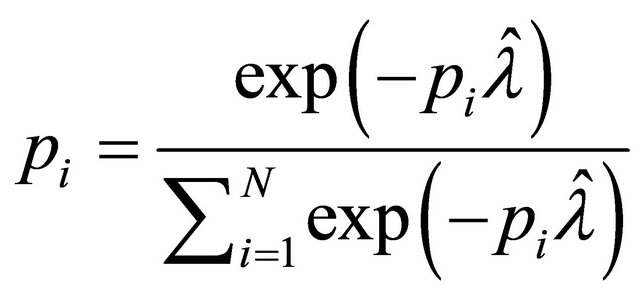

Since H is strictly concave, there is a unique interior solution and solving the first-order conditions yields the exponential result

(8)

(8)

for the ith outcome. In this context, the chosen distribution is, out of the possible solutions, the one that is numerically identical with the frequency distribution that can be realized in the greatest number of ways. In other words the frequency distribution can be realized experimentally in more ways than any other distribution that satisfies the mean constraint. We note again that  is a member of a canonical exponential family with mean

is a member of a canonical exponential family with mean

.

.
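The step from the Lagrangian to the exponential solution can be sketched as follows. The notation is assumed here for illustration: $x_i$ denotes the possible prices, $\bar{x}$ the observed mean price, and $\lambda$ and $\eta$ the multipliers on the mean and adding-up constraints, with the Lagrangian written as $L = -\sum_i p_i \ln p_i + \lambda(\bar{x} - \sum_i p_i x_i) + \eta(1 - \sum_i p_i)$:

$$\frac{\partial L}{\partial p_i} = -\ln p_i - 1 - \lambda x_i - \eta = 0 \quad \Longrightarrow \quad p_i = \exp(-1 - \eta) \exp(-\lambda x_i),$$

$$\sum_{i=1}^{n} p_i = 1 \quad \Longrightarrow \quad \exp(1 + \eta) = \sum_{j=1}^{n} \exp(-\lambda x_j),$$

$$p_i(\lambda) = \frac{\exp(-\lambda x_i)}{\sum_{j=1}^{n} \exp(-\lambda x_j)}, \qquad \sum_{i=1}^{n} p_i(\hat{\lambda}) \, x_i = \bar{x}.$$

The last equation determines $\hat{\lambda}$. It has no closed-form solution in general, but the left-hand side is strictly decreasing in $\lambda$ (its derivative is minus the variance of the prices under $p(\lambda)$), so a one-dimensional root search suffices.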

This price formulation provides one way to express the probabilistic-statistical nature of micro process-data. It recognizes that demand and supply transactions-outcomes may occur, with a different probability, at any place in the price state space ensemble. The monotonic probability distributions are different from quantum economic theory formulations that suggest symmetric mean based distributions (see [7]).

4. An Illustrative Example

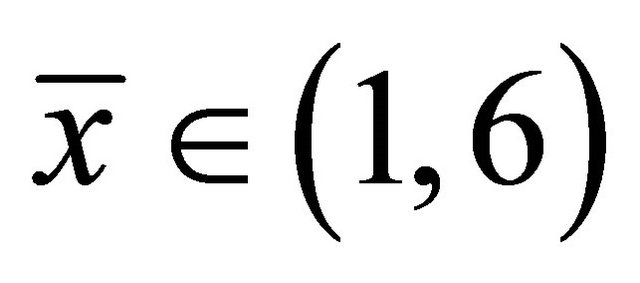

As an illustration of an ill-posed economic inverse problem, assume, in the context of the previous sections, that the possible price space may be defined by the first six integers, $x_i \in \{1, 2, \ldots, 6\}$. The only information given is that the average price outcome for a large number of independent trials is $\bar{x}$. There are an infinite number of probability distributions supported on $\{1, 2, \ldots, 6\}$ that have a mean of $\bar{x}$.² In a situation like this, if we follow the MEEL formulation (4) we would select the probabilities that maximize

$$H(p) = -\sum_{i=1}^{6} p_i \ln p_i \tag{9}$$

with corresponding Lagrangian

$$L(p, \lambda, \eta) = -\sum_{i=1}^{6} p_i \ln p_i + \lambda \left( \bar{x} - \sum_{i=1}^{6} p_i \, i \right) + \eta \left( 1 - \sum_{i=1}^{6} p_i \right). \tag{10}$$

If $1 < \bar{x} < 6$, the constraint set is non-empty and compact. Given that $H(p)$ is strictly concave, there is a unique interior solution to the problem. By solving the first order conditions, the MEEL probability exponential distribution places the weight

$$\hat{p}_i = \frac{\exp(-\hat{\lambda} \, i)}{\sum_{j=1}^{6} \exp(-\hat{\lambda} \, j)} \tag{11}$$

on the $i$th price outcome.
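Since the solution must be found numerically, the following sketch shows one way to compute it for the six-price example. It assumes the exponential form $p_i \propto \exp(-\lambda x_i)$ and uses plain bisection on $\lambda$; the function names, the search bracket, and the list of target means are illustrative choices, and the printed values are simply what this code produces rather than a reproduction of Table 1.

```python
import math

PRICES = [1, 2, 3, 4, 5, 6]  # price state space of the example


def exp_pmf(lam, prices=PRICES):
    """Weights proportional to exp(-lam * x_i), normalized to sum to one."""
    exps = [-lam * x for x in prices]
    shift = max(exps)  # subtract the largest exponent to avoid overflow
    weights = [math.exp(e - shift) for e in exps]
    total = sum(weights)
    return [w / total for w in weights]


def pmf_mean(p, prices=PRICES):
    return sum(pi * x for pi, x in zip(p, prices))


def entropy(p):
    """H(p) = -sum p_i ln p_i, with 0 ln 0 treated as 0."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)


def solve_meel(target_mean, prices=PRICES, tol=1e-12):
    """Bisection on lam so that the exponential pmf matches the target mean."""
    if not min(prices) < target_mean < max(prices):
        raise ValueError("mean must lie strictly inside the price range")
    lo, hi = -50.0, 50.0  # assumed bracket; the mean is decreasing in lam
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if pmf_mean(exp_pmf(mid, prices), prices) > target_mean:
            lo = mid  # mean too high, so lam must increase
        else:
            hi = mid
    lam = 0.5 * (lo + hi)
    return lam, exp_pmf(lam, prices)


for target in (2.0, 3.0, 3.5, 4.0, 5.0):
    lam, p = solve_meel(target)
    probs = " ".join(f"{pi:.4f}" for pi in p)
    print(f"mean={target:.1f} lambda={lam:+.4f} p=[{probs}] H={entropy(p):.4f}")
```

A mean of 3.5 gives $\lambda = 0$ and the uniform distribution, which is the entropy maximum $\ln 6$; means below or above 3.5 give monotonically decreasing or increasing weights.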

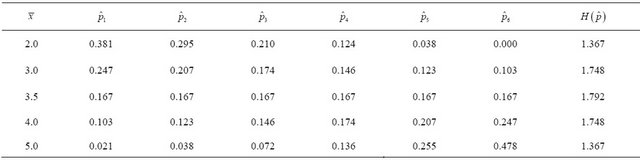

The resulting MEEL price frequency distribution estimates for various values of $\bar{x}$ are presented in Table 1.

The maximum of the CR functional $H(p)$ and minimum micro price information occurs with a mean of 3.5 and the corresponding uniform price distribution. Other monotonic distributions of the state space price probabilities are, using a MEEL distance measure, in the form of exponential distributions.

Table 1. Estimated MEEL distributions for various $\bar{x}$. $\bar{x}$ is the mean value of the distribution, $p_i$ the frequencies, and $H(p)$ a measure of uncertainty.

5. Summary

There are well developed economic general equilibrium price and allocation models over space and time. In microeconomics, decision-action events take place instantaneously or at a moment in time. In this paper, using information theoretic methods, we have attempted, in a probability context, to capture the price implications of this type of action-outcome process. It is important to recognize a world where micro price transactions may reflect non-uniform distributions and to evaluate the resulting allocation and distribution implications. Economic data reflecting average outcomes abound. Creative use of the information theoretic methods introduced in this paper should permit the recovery, in a probabilistic form, of information from the aggregate data, and help identify unusual price variability and evaluate micro behavior outcomes.

While the focus in this paper has, for expository purposes, been on a particular problem in economics, the implications are general for all of the behavioral sciences. For example, consider the hidden dynamics of voting systems in political science, as we move from individuals voting to the general results of an election. Or, in a general game-decision context, consider the hidden dynamics and the unobservable stochastic-probabilistic micro components.

6. Acknowledgements

We thank the editor and an anonymous referee for their comments, and Mike George, Wendy Tam Cho, Alexander Gorban, Leo Simon, and David Wolpert for their helpful comments and suggestions.

REFERENCES

[1] A. Gorban and G. Judge, “Entropy: The Markov Ordering Approach,” Entropy, Vol. 12, No. 5, 2010, pp. 1145-1193.

[2] G. Judge and R. Mittelhammer, “An Information Theoretic Approach to Econometrics,” Cambridge University Press, Cambridge, 2012.

[3] N. Cressie and T. Read, “Multinomial Goodness of Fit Tests,” Journal of the Royal Statistical Society, Vol. 46, No. 3, 1984, pp. 448-464.

[4] T. Read and N. Cressie, “Goodness of Fit Statistics for Discrete Multivariate Data,” Springer Verlag, New York, 1988. doi:10.1007/978-1-4612-4578-0

[5] E. Jaynes, “Information Theory and Statistical Mechanics,” In: K. W. Ford, Ed., Statistical Physics, W. A. Benjamin, New York, 1963, pp. 181-218.

[6] A. Owen, “Empirical Likelihood,” Chapman and Hall, New York, 2001. doi:10.1201/9781420036152

[7] E. Smith and D. Foley, “Classical Thermodynamics and Economic General Equilibrium Theory,” Journal of Economic Dynamics and Control, Vol. 32, No. 1, 2008, pp. 7-65.

[8] A. Golan, G. Judge and D. Miller, “Maximum Entropy Econometrics,” John Wiley and Sons, Chichester, 1996.

NOTES

¹ When $\gamma \to -1$, then $I(p, q, \gamma)$ leads to the estimation criterion that is equivalent to the [6] maximum empirical likelihood (MEL) criterion $n^{-1} \sum_{i=1}^{n} \ln p_i$. The MEL criterion is consistent with a result that assigns discrete mass across the possible outcomes, and in the sense of objective function analogies, it is closest to the classical maximum-likelihood approach.

² Those familiar with a theoretical basis for information recovery will recognize that this could also be a variant of the [5] six-sided die problem, where the average is given for a large number of rolls of the die (see [8], p. 12).