Journal of Signal and Information Processing

Vol.07 No.01(2016), Article ID:64018,11 pages

10.4236/jsip.2016.71007

Identity Verification of Individuals Based on Retinal Features Using Gabor Filters and SVM

Mohamed A. El-Sayed1,2, M. Hassaballah3, Mohammed A. Abdel-Latif3

1Department of Mathematics, Faculty of Science, Fayoum University, Fayoum, Egypt

2CS Department, College of Computers and Information Technology, Taif University, Taif, KSA

3Department of Mathematics, Faculty of Science, South Valley University, Qena, Egypt

Copyright © 2016 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 23 October 2015; accepted 26 February 2016; published 29 February 2016

ABSTRACT

Reliable authentication of individuals is a service in growing demand in many areas, not only in military and police settings but also in civilian applications such as financial transactions. In this paper, we propose a human verification method that depends on extracting a set of retinal feature points. Each set of feature points represents landmarks in the retinal vessel tree. Extraction and matching of the pattern based on Gabor filters and SVM are described. The validity of the proposed method is verified with experimental results obtained on three commonly available databases, namely STARE, DRIVE and VARIA. The proposed retinal verification method gives recognition rates of 92.6%, 100% and 98.2% on these databases, respectively. Furthermore, for the authentication task, the proposed method gives moderate accuracy on retinal vessel images from these databases.

Keywords:

Image Preprocessing, Gabor Filter, SVM, Authentication, Identification, Verification, Retinal Features, Feature Extraction, Query Image

1. Introduction

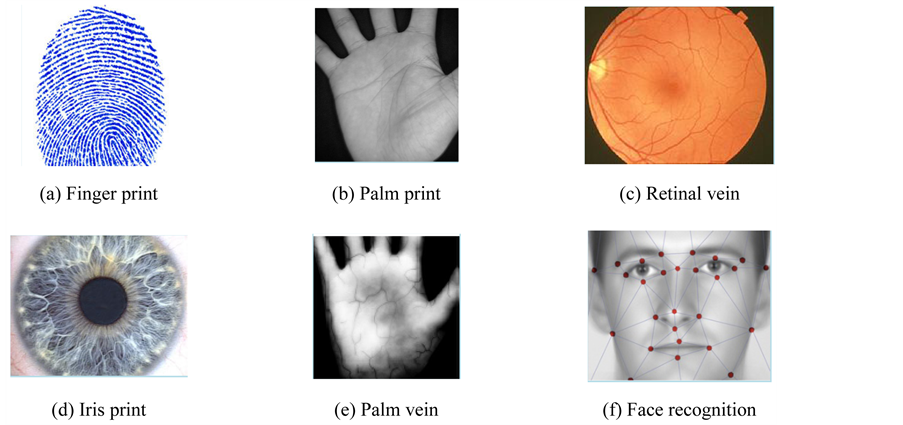

Accurate and reliable identification and verification play a significant role in security systems and in the development of reliable authorization systems. Biometric patterns used for recognition and identification include hand geometry, palm print, handwriting, gait, face, voice, palm vein, iris, keystroke dynamics and retina, as shown in Figure 1. Although highly accurate systems based on the existing biometrics are available, these traits can easily be faked. Moreover, each biometric has its strengths and weaknesses, and the choice depends on the application [1] [2]. Among these, the digital retinal image offers high accuracy for identification and verification. Retinal recognition is robust against fraud and has low false acceptance and false rejection rates. The human retina contains a blood-vessel pattern that is unique to each person and can be used in a biometric system [3] [4].

Biometric recognition often makes use of a comparator unit that can operate in two distinct modes, namely user verification (also called authentication) and user identification [1] [5]. The former answers the question “are you who you claim to be?”. It involves a straightforward one-to-one comparison whose outcome is a binary “accept” or “reject” decision. Commonly, the claimed identity is supplied by a smart card or a personal ID. The latter performs an exhaustive one-to-many search over the entire user database to answer the question “who are you?”. In this context, the main objective of person identification is to find the closest matching identity, if one exists. Identification is often required by surveillance and forensics applications [6] [7].
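The difference between the two modes can be illustrated with a minimal Python sketch. The function names, the toy two-dimensional templates and the use of cosine similarity are assumptions made for illustration only; the matcher proposed in this paper is based on SVM, not on cosine similarity.

```python
import math

def cosine_similarity(a, b):
    """Similarity score between two feature vectors (illustrative stand-in matcher)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

def verify(probe, claimed_template, threshold):
    """Verification: one-to-one comparison with a binary accept/reject outcome."""
    return cosine_similarity(probe, claimed_template) > threshold

def identify(probe, database, threshold):
    """Identification: one-to-many search; returns the closest identity, if any."""
    best_id, best_score = None, threshold
    for user_id, template in database.items():
        score = cosine_similarity(probe, template)
        if score > best_score:
            best_id, best_score = user_id, score
    return best_id

# Hypothetical enrolled templates (toy values, not retinal data).
database = {"alice": [1.0, 0.0], "bob": [0.0, 1.0]}
accepted = verify([0.9, 0.1], database["alice"], threshold=0.8)
closest = identify([0.1, 0.9], database, threshold=0.8)
```

Verification touches a single template, whereas identification scans the whole database and may return no identity when no score exceeds the threshold.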

The vertebrate retina is a light-sensitive tissue that lines the inner surface of the eye. The optics of the eye form an image of the visual scene on the retina, which serves much the same function as the film in a camera. When light reaches the retina, it triggers a series of chemical and electrical events that produce nerve impulses, which are sent through the optic nerve to the various visual centers of the brain. The retina has a distinctive blood-vessel pattern that is not easily repeated between persons and that rarely changes. Even the patterns of the right eye and the left eye of the same person are different. The retinal patterns of identical twins are also distinct, and the pattern remains unchanged over a lifetime (except when the person suffers from an eye condition such as retinal detachment, glaucoma, pterygium, retinitis, cataracts, eyestrain, etc.) [8].

The uniqueness of the blood-vessel pattern in the human retina was revealed by two eye specialists, Simon and Goldstein, during research on eye diseases [9]. They found that each eye of an individual has a unique vascular pattern that can be used to authenticate persons. Paul Tower [10] found that even identical twins have unique and distinctive patterns. It is almost impossible to forge a human retina because it lies at the back of the eye and is not exposed to the outer environment [3]. This uniqueness and stability make retinal recognition a notable solution for security and protection in the near future. Woodward et al. [11] present many technical solutions for capturing retinal images and their application in biometrics.

The use of retinal images for biometric identification and verification was initially examined using fundus cameras commonly used in ophthalmological applications [12]. To scan the retina, the person must position his/her eye very close to the camera lens. During the scanning process, the person must remain still and must remove glasses to avoid signal interference. Once the scanner is activated, a green light moves in a complete circle and the retinal vascular pattern is captured [3].

Figure 1. Some biometric patterns for identification and verification.

The EyeDentify company introduced the first identification system based on a commercial retina scanner, called EyeDentification 7.5 [13]. Zana and Klein [14] used curvature evaluation to locate retinal vessel patterns in noisy regions. Morphological opening operations are used to improve the segmentation results for subsequent processing of the resulting binary images [15].

A cross-correlation coefficient was defined in [16] for retinal identification, where the captured image is registered and matching is performed by correlating the vascular patterns. In the retinal authentication system proposed by Bevilacqua et al. [17], an accumulation matrix is used to detect vascular bifurcation and crossover points. Akram et al. [18] presented a retina recognition method in which feature points are represented by vessel bifurcations and endings. To generate a feature vector, the relative angles and distances between a candidate feature point and its four closest feature points are computed. In fact, most existing approaches in this direction focus either on the extraction of feature points or on the reliable extraction of the vascular pattern.

The optic disc region in the digital retinal image was located using a fuzzy circular Hough transform by Ortega et al. [19]. In [19], a pattern was defined using the optic disc as a reference structure, and multi-scale analysis was used to compute a feature vector around it. The dataset used consists of 60 images, each rotated 5 times. Fatima et al. [20] presented a windowing technique for filtering out false feature points that arise from false vascular structures. This technique detects and eliminates the false points that are present.

The support vector machine (SVM) has been a promising method for data classification and verification [21]. Its success in practice stems from its solid mathematical foundation, which conveys the following two salient properties: 1) Margin maximization: the classification boundary function of the SVM maximizes the margin, which, in machine learning theory, corresponds to maximizing the generalization performance given a set of training data; 2) Nonlinear transformation of the feature space using the kernel trick: the SVM handles nonlinear classification efficiently using the kernel trick, which implicitly transforms the input space into a high-dimensional feature space.

SVMs are a group of related supervised learning methods applied to verification and classification. A classification problem commonly involves training and testing data, each consisting of a number of data instances. In the training set, every instance comprises one target value and several attributes. The main goal of the SVM is to produce a model that predicts the target values of the data instances in the testing set, for which only the attributes are given [22]-[25]. Classification with an SVM is an example of supervised learning. Known labels help indicate whether the system is performing correctly. This information points to a desired response, validating the accuracy of the system, or can be used to help the system learn to perform correctly. One step in SVM classification involves identifying the features that are closely connected to the known classes. This is called feature selection or feature extraction. Feature selection and SVM classification together are useful even when prediction of unknown samples is not needed. They can be used to identify the key sets that are involved in whatever processes distinguish the classes [25].
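The training and testing workflow described above can be sketched in a few lines of Python. This is a minimal illustration, assuming a linear SVM trained by sub-gradient descent on the regularized hinge loss; the function names, the learning rate, the regularization constant and the toy two-dimensional data are all hypothetical, and a practical system would normally rely on an established SVM solver.

```python
def train_linear_svm(samples, labels, lr=0.1, lam=0.01, epochs=100):
    """Fit w and b by sub-gradient descent on lam/2*||w||^2 + hinge loss."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            # The regularization term shrinks w on every step.
            w = [wi * (1.0 - lr * lam) for wi in w]
            if margin < 1.0:
                # The sample violates the margin: move the boundary away from it.
                w = [wi + lr * y * xi for wi, xi in zip(w, x)]
                b += lr * y
    return w, b

def predict(w, b, x):
    """Predict the target value (+1 or -1) from the attributes only."""
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 if score >= 0 else -1

# Toy training set: attributes with known target values (labels).
train_x = [(2, 2), (3, 3), (2, 3), (-2, -2), (-3, -3), (-2, -3)]
train_y = [1, 1, 1, -1, -1, -1]
w, b = train_linear_svm(train_x, train_y)

# Testing instances: only the attributes are given.
test_predictions = [predict(w, b, x) for x in [(4, 1), (-1, -4)]]
```

The known labels are used only during training; at test time the model predicts the target value from the attributes alone.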

The rest of this paper is organized as follows. In Section 2, the proposed authentication system is presented, in particular the preprocessing, feature point selection and matching stages. Section 3 describes the preprocessing technique. In Section 4, the feature extraction stage using the Gabor filter is presented. Section 5 describes feature selection and classification using SVM, while Section 6 discusses the experimental results. Finally, conclusions are presented in Section 7.

2. The Proposed System of Authentication Based on Retinal Images

Retinal recognition technology captures and analyzes the pattern of thin retinal vessels at the back of the eyeball. The system processes light that enters through the pupil of the eye. Input retinal images are acquired in real time because the retinal pattern is visible only when the individual is alive; it disappears after death. Deception is therefore not feasible in vessel pattern recognition. A robust retinal recognition system must be stable under changes in the size, location and orientation of the retinal vessel patterns. The proposed system for individual authentication contains four main phases: image enhancement, feature extraction using the Gabor filter, construction of the feature vector database and SVM matching, as illustrated in Figure 2.

Figure 2. The flowchart of the proposed authentication method.

3. Preprocessing Technique

In automatic verification, it is not necessary to process the surrounding background and noisy regions of the digital retinal image, since doing so adds processing time at every phase. Cropping out the area that contains the features of the retinal image reduces the number of operations on the image [26]. The preprocessing step is therefore an important operation for accurately extracting the region of interest (ROI). The ROI of a retinal image may be the optic disc region, macula region, fovea region or blood vessels. The proposed method can be applied to any of them. The following steps summarize the preprocessing stage in the proposed method:

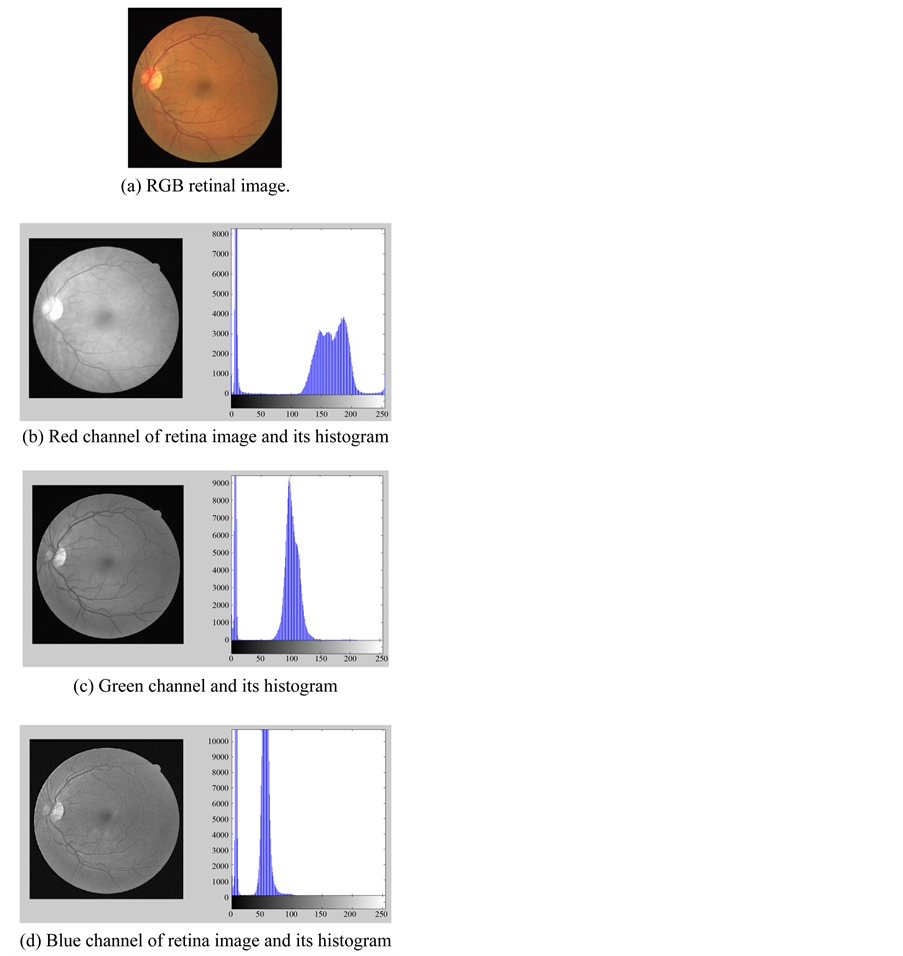

1) Read the input image I. (See Figure 3). In this steps the green channel is selected where this channel produces the superior contrast among vessels in retina image and different features, and the retina itself.

2) Create morphological a flat, disk-shaped structuring element  with a radius of 3 pixels.

with a radius of 3 pixels.

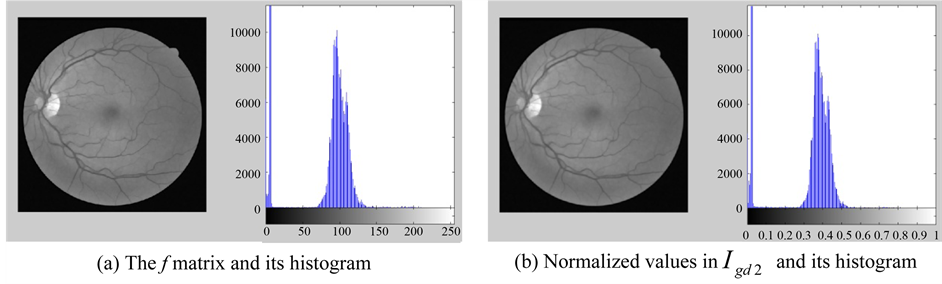

3) Create the matrix f, by remove snowflakes having a radius less than 3 pixels from  by opening it with the disk-shaped structuring element

by opening it with the disk-shaped structuring element  created in step 2 (See Figure 4(a)).

created in step 2 (See Figure 4(a)).

4) Convert the matrix f to double precision to  matrix.

matrix.

5) Creates a two-dimensional average filter  of [3 3] vector. Then, filters the 2D array

of [3 3] vector. Then, filters the 2D array  with the 2D filter

with the 2D filter . The result

. The result  has the same size and class as

has the same size and class as . The output is

. The output is  as shown in Figure 4(b).

as shown in Figure 4(b).

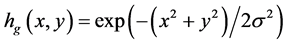

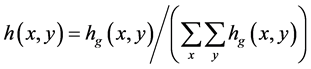

6) Creates a two-dimensional Gaussian filter  using:

using:

(1)

(1)

and

(2)

(2)

returns a rotationally symmetric Gaussian low-pass filter of vector [9 9] with standard deviation

7) Creates a two-dimensional average filter

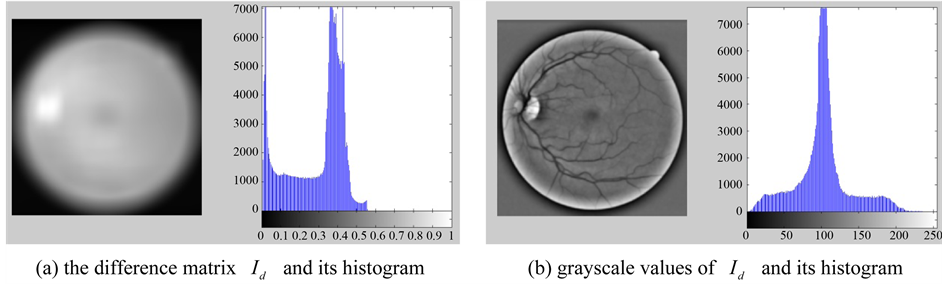

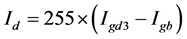

8) Calculate the difference matrix, it returns a matrix

9) Convert the matrix

Figure 3. Original retina image, RGB channels and their histograms.
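Steps 1) to 5) of the preprocessing stage in Section 3 can be sketched in plain Python as follows. This is only an illustrative sketch under stated assumptions: images are nested lists, the disk is a Euclidean disk (toolbox implementations may use a slightly different approximation), pixels outside the image are ignored during opening and treated as zero during averaging, and all function names are hypothetical. The later steps are not included.

```python
def green_channel(rgb):
    """Step 1: keep the green channel of an image given as rows of (r, g, b) tuples."""
    return [[pixel[1] for pixel in row] for row in rgb]

def disk_offsets(radius):
    """Step 2: offsets (dy, dx) covered by a flat disk-shaped structuring element."""
    r = range(-radius, radius + 1)
    return [(dy, dx) for dy in r for dx in r if dy * dy + dx * dx <= radius * radius]

def _morph(img, offsets, pick):
    """Apply min (erosion) or max (dilation) over the structuring element."""
    h, w = len(img), len(img[0])
    return [[pick(img[y + dy][x + dx] for dy, dx in offsets
                  if 0 <= y + dy < h and 0 <= x + dx < w)
             for x in range(w)] for y in range(h)]

def opening(img, offsets):
    """Step 3: erosion followed by dilation removes small bright specks."""
    return _morph(_morph(img, offsets, min), offsets, max)

def mean_filter3(img):
    """Step 5: 3 x 3 average filter with zero padding at the border."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            total = sum(img[y + dy][x + dx]
                        for dy in (-1, 0, 1) for dx in (-1, 0, 1)
                        if 0 <= y + dy < h and 0 <= x + dx < w)
            row.append(total / 9.0)
        out.append(row)
    return out

def preprocess(rgb):
    """Run steps 1) to 5) and return the opened and the smoothed images."""
    green = green_channel(rgb)
    opened = opening(green, disk_offsets(3))
    as_double = [[float(v) for v in row] for row in opened]  # step 4
    return opened, mean_filter3(as_double)

# Toy 9 x 9 image: dark green background with one bright speck in the center.
rgb = [[(0, 10, 0)] * 9 for _ in range(9)]
rgb[4][4] = (0, 200, 0)
opened, smoothed = preprocess(rgb)
```

In this toy image the single bright pixel is smaller than the structuring element, so the opening removes it and the background value is restored at that position.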

4. Feature Selection Based on Gabor Filter

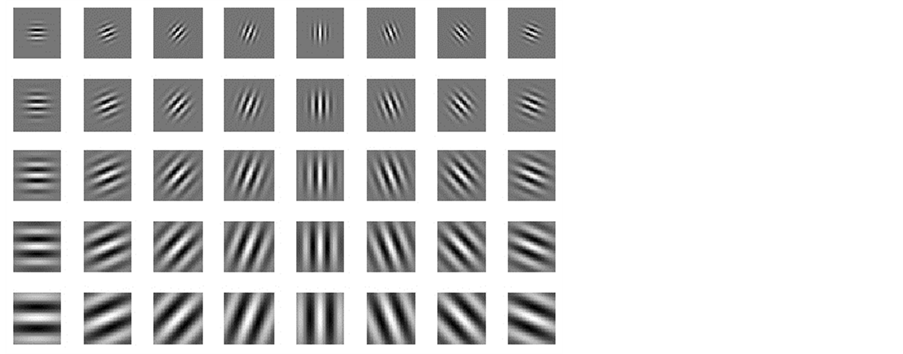

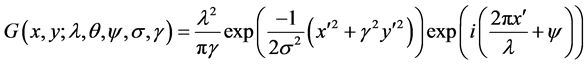

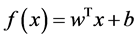

The frequency and orientation representations of Gabor wavelets (kernels/filters) are similar to those of the human visual system, and they have been found to be particularly suitable for texture representation and discrimination [27]. Gabor filters have been widely used in pattern analysis applications [27]-[29]. The most important advantage of Gabor filters is their invariance to illumination, rotation, translation and scale. Moreover, they can withstand photometric disturbances such as image noise and illumination changes. In the spatial domain, a 2-D Gabor filter is a Gaussian kernel function modulated by a complex sinusoidal plane wave, given by

Figure 4. Snowflake removal, and average filter.

Figure 5. The difference matrix, and its grayscale values.

and

where

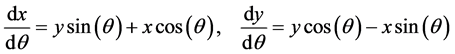

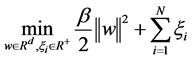

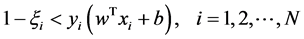
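A bank of Gabor filters with five scales and eight orientations, as used in Section 4, can be sketched in Python as follows. The sketch assumes one commonly used parameterization, G(x, y) = f²/(πγη) · exp(−(f²/γ²)x′² − (f²/η²)y′²) · exp(j2πfx′), with x′ = x cos θ + y sin θ and y′ = −x sin θ + y cos θ; the exact form and the parameter values used in this paper may differ. The kernel size, f_max = 0.25, γ = η = √2 and the function names are assumptions chosen for illustration.

```python
import cmath
import math

def gabor_kernel(size, f, theta, gamma=math.sqrt(2), eta=math.sqrt(2)):
    """Complex 2-D Gabor kernel: Gaussian envelope times a complex plane wave.

    size must be odd so that the kernel has a central sample.
    """
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            # Rotate the coordinates by the filter orientation theta.
            xr = x * math.cos(theta) + y * math.sin(theta)
            yr = -x * math.sin(theta) + y * math.cos(theta)
            envelope = math.exp(-((f / gamma) ** 2 * xr ** 2 + (f / eta) ** 2 * yr ** 2))
            carrier = cmath.exp(2j * math.pi * f * xr)
            row.append((f ** 2 / (math.pi * gamma * eta)) * envelope * carrier)
        kernel.append(row)
    return kernel

def gabor_bank(size=9, scales=5, orientations=8, f_max=0.25):
    """Build scales x orientations kernels; the frequency drops by sqrt(2) per scale."""
    bank = []
    for u in range(scales):
        f = f_max / (math.sqrt(2) ** u)
        for v in range(orientations):
            theta = v * math.pi / orientations
            bank.append(gabor_kernel(size, f, theta))
    return bank

bank = gabor_bank()
```

Each retinal image would then be convolved with every kernel in the bank, and the magnitudes of the responses would serve as features.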

5. Feature Selection and Classification Using SVM

The proposed supervised method for feature evaluation is a linear SVM [30]. Let S be a set of N training samples

subject to the linear constraints

Figure 6. Eight orientations and five scales using Gabor wavelets.

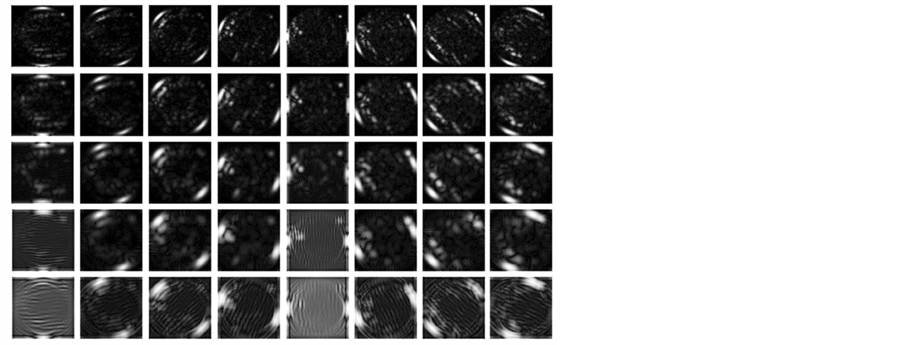

Figure 7. Results of feature extraction after applying filters.

where w is the weight vector, b is a bias term which is also learned from training data, each

The variations at different frequencies and orientations in the retinal image are extracted by Gabor filters. For an image of size 120 × 120, the size of the resulting feature vector is the image size multiplied by the number of orientations and scales (8 × 5) and divided by the row and column downsampling factors (4 × 4), i.e. the vector size in this case is (120 × 120) × (8 × 5)/(4 × 4) = 36,000. The size of the feature vector can be reduced using dimensionality reduction methods to increase the robustness of the extracted features. Principal component analysis (PCA), general discriminant analysis (GDA) or linear discriminant analysis (LDA) can be applied to reduce the dimensionality of the feature vector. The details of these methods are presented in [27]. In LDA-based methods, the number of features depends on the number of classes in the classification problem; it can be nearly equal to the number of classes. In this work, a significant reduction in dimensionality is obtained using the LDA method, from 36,000 features down to 199.
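The feature vector size quoted above can be checked with a short Python sketch. The paper does not state how the downsampling is performed, so the sketch assumes that every fourth row and every fourth column of each filter response is kept; the function names and the all-zero dummy responses are hypothetical.

```python
def gabor_feature_length(rows, cols, scales, orientations, d_row, d_col):
    """Feature vector length after downsampling every filter response."""
    return (rows * cols * scales * orientations) // (d_row * d_col)

def build_feature_vector(responses, d_row, d_col):
    """Concatenate the downsampled responses of all filters into one vector."""
    vector = []
    for response in responses:
        for row in response[::d_row]:      # keep every d_row-th row
            vector.extend(row[::d_col])    # keep every d_col-th column
    return vector

# Dummy responses: 5 scales x 8 orientations, each of size 120 x 120.
dummy_responses = [[[0.0] * 120 for _ in range(120)] for _ in range(5 * 8)]
feature_vector = build_feature_vector(dummy_responses, 4, 4)
expected_length = gabor_feature_length(120, 120, 5, 8, 4, 4)
```

Each 120 × 120 response shrinks to 30 × 30 = 900 values, and 40 responses give 40 × 900 = 36,000 features, in agreement with the figure stated in the text.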

6. Experimental Results and Discussion

6.1. Dataset

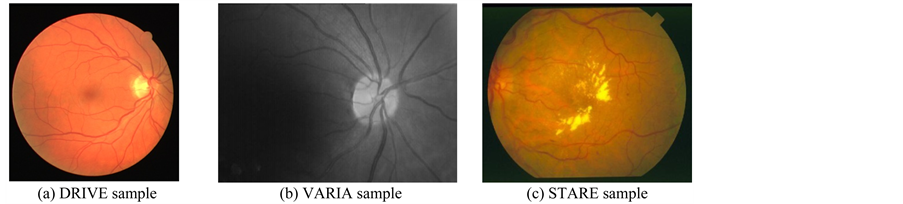

The first database, DRIVE (“Digital Retinal Images for Vessel Extraction”), is publicly available. It contains 40 color photographs of the eye fundus. The photographs were obtained from a diabetic retinopathy screening program in the Netherlands whose population consisted of 453 subjects between 31 and 86 years of age. Each image has been JPEG compressed, which is common practice. The second database of retinal images used is the VARIA database. It is a collection of retinal images used for authentication purposes. The VARIA database contains 233 images taken from 139 different persons. The images were captured with a TopCon NW-100 non-mydriatic camera and are optic-disc centered with a resolution of 768 × 584 [31].

The third database, originally collected by Hoover et al. [32], is the Structured Analysis of the Retina (STARE). It consists of twenty color eye-fundus images, ten of which contain pathology. The images were captured using a TopCon TRV-50 fundus camera with a field of view (FOV) of thirty-five degrees. Each image has a resolution of 700 × 605 pixels with eight bits per color channel and is available in PPM format. The set of twenty images is not divided into separate training and testing sets. Figure 8 shows examples of retinal images from the DRIVE, VARIA and STARE databases. The STARE database presents the same difficulties as the DRIVE data set, but it contains more abnormal images.

6.2. Results

The proposed method was implemented in MATLAB and evaluated on the above-mentioned retinal datasets. To determine the accuracy of the proposed method, we used the following performance measures: Equal Error Rate (EER), False Acceptance Rate (FAR), Genuine Acceptance Rate (GAR) and False Rejection Rate (FRR). FAR is the rate of accepting impostors; it measures the number of impostors falsely accepted by the system as “genuine” users. FRR is the rate of rejecting genuine users; it measures the number of genuine users falsely rejected by the system as “impostors”. GAR is the rate of accepting genuine users; it is obtained by comparing the extracted features of every user with other image patterns of the same user. After the comparisons are complete, if the similarity score is greater than a fixed threshold, the user is accepted as genuine. Otherwise the user is rejected, which means that a genuine user has been falsely rejected; this determines the FRR. These error rates can be measured by plotting the impostor scores and genuine scores on a chart according to their score and frequency. To obtain the FAR, we compare the feature vector of every user with the feature vectors of all other users in the database.

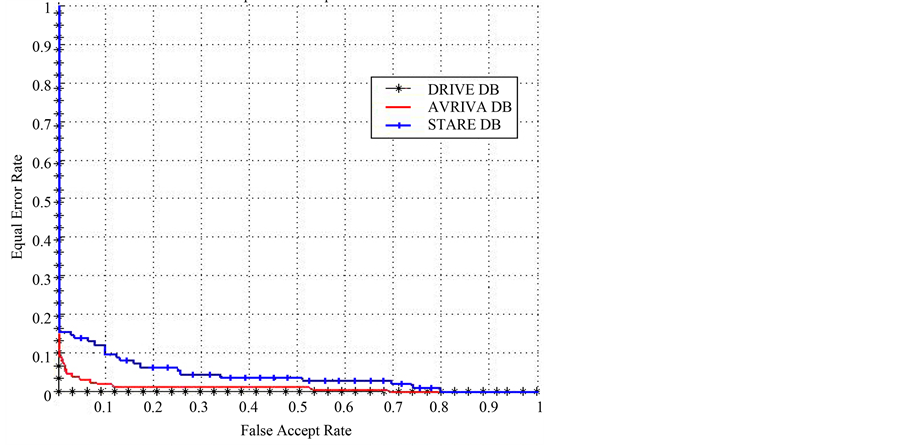

During the comparison process, if the matching score is greater than the fixed threshold, an impostor is accepted. At each fixed threshold, all comparisons are performed on the vector database to calculate FRR, FAR and GAR. The experiment is repeated for each new value of the threshold. The input retina images in the DRIVE database are of good quality, so the proposed retinal authentication method achieved a 100% recognition rate and 0% EER, with good inter-class separation between genuine users and impostors. The system obtained a 98.2% recognition rate and 0.013% EER on the VARIA database. Since most of the input retina images in the STARE database are diseased, the system obtained a 92.6% recognition rate and an EER of 0.12%. Figure 9 and Figure 10 show that the separation between the two classes is greater for the DRIVE database than for the VARIA and STARE databases. Table 1 lists the values obtained for the verification rate and EER for the above standard databases.
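The threshold sweep described above can be sketched in Python as follows. The score lists are toy values rather than results from the experiments, the function names are hypothetical, and the EER is approximated as the mean of FAR and FRR at the threshold where the two rates are closest.

```python
def rates_at_threshold(genuine, impostor, threshold):
    """Return (FAR, FRR, GAR); a comparison is accepted when score > threshold."""
    far = sum(s > threshold for s in impostor) / len(impostor)
    gar = sum(s > threshold for s in genuine) / len(genuine)
    return far, 1.0 - gar, gar

def equal_error_rate(genuine, impostor, thresholds):
    """Sweep the thresholds and return (threshold, EER) where FAR and FRR are closest."""
    best = None
    for t in thresholds:
        far, frr, _ = rates_at_threshold(genuine, impostor, t)
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, t, (far + frr) / 2.0)
    return best[1], best[2]

# Toy similarity scores: same-user comparisons and different-user comparisons.
genuine_scores = [0.9, 0.8, 0.75, 0.6]
impostor_scores = [0.1, 0.3, 0.4, 0.65]
thresholds = [i / 20 for i in range(21)]

far, frr, gar = rates_at_threshold(genuine_scores, impostor_scores, 0.5)
eer_threshold, eer = equal_error_rate(genuine_scores, impostor_scores, thresholds)
```

Plotting GAR against FAR over all thresholds gives a ROC curve, and the threshold at which FAR and FRR coincide gives the EER.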

The performance and accuracy of the proposed retinal authentication method are presented through ROC curves. The ROC curves were created by varying the threshold on the classified image. The optimal threshold value is chosen to maximize the accuracy. The relation between FAR and GAR for the above databases is shown in Figure 9. The EER, the point at which FRR and FAR are equal, is obtained from the ROC curve drawn between FRR and FAR; see Figure 10.

Figure 8. Samples of three databases used in the experiments.

Figure 9. ROC drawn between verification rate (GAR), and false acceptance rate (FAR).

Figure 10. ROC drawn between equal error rate (EER), and false acceptance rate (FAR).

Table 1. Performance analysis of the proposed method for different databases.

7. Conclusion

A simple and efficient authentication method based on the identification of retinal features is introduced in this paper. The method includes blood-vessel segmentation, creation of the feature pattern and matching of these features. Three commonly used databases, DRIVE, VARIA and STARE, are used in the experiments to analyze performance. The method is robust to shifts and rotations of digital retina images. It achieves recognition rates of 100%, 98.2% and 92.6% for the DRIVE, VARIA and STARE datasets, respectively. It maintains a high recognition rate even for large datasets containing noisy images. Moreover, the proposed method can be applied to other images in which it is useful to find vascular structures.

Cite this paper

Mohamed A. El-Sayed, M. Hassaballah, Mohammed A. Abdel-Latif (2016) Identity Verification of Individuals Based on Retinal Features Using Gabor Filters and SVM. Journal of Signal and Information Processing, 07, 49-59. doi: 10.4236/jsip.2016.71007

References

- 1. Luo, H., Xin, F., Pan, J.-S., Chu, S.-C. and Tsai, P.-W. (2010) A Survey of Vein Recognition Techniques. Information Technology Journal, 9, 1142-1149. http://dx.doi.org/10.3923/itj.2010.1142.1149

- 2. Prabhakar, S., Pankanti, S. and Jain, A.K. (2003) Biometric Recognition: Security and Privacy Concerns. IEEE Security and Privacy, 1, 33-42. http://dx.doi.org/10.1109/MSECP.2003.1193209

- 3. Das, R. (2007) Retinal Recognition Biometric Technology in Practice. Keesing Journal of Documents & Identity, 22, 11-14.

- 4. El-Sayed, M.A., Bahgat, S.F. and Abdel-Khalek, S. (2013) New Approach for Identity Verification System Using the Vital Features Based on Entropy. International Journal of Computer Science Issues (IJCSI), 10, 11-17.

- 5. Jain, A.K., Flynn, P.J. and Ross, A.A., Eds. (2007) Handbook of Biometrics. Springer-Verlag, Heidelberg.

- 6. Jain, A.K., Ross, A. and Prabhakar, S. (2004) An Introduction to Biometric Recognition. IEEE Transactions on Circuits and Systems for Video Technology, 14, 4-20. http://dx.doi.org/10.1109/TCSVT.2003.818349

- 7. Rhodes, K.A. (2003) Information Security: Challenges in Using Biometrics. United States General Accounting Office, Technical Report # GAO-03-1137T.

- 8. Haykin, S. (2003) Neural Networks: A Comprehensive Foundation. 2nd Edition, Pearson Education, New Delhi.

- 9. Simon, C. and Goldstein, I. (1935) A New Scientific Method of Identification. New York State Journal of Medicine, 35, 901-906.

- 10. Tower, P. (1955) The Fundus Oculi in Monozygotic Twins: Report of Six Pairs of Identical Twins. Archives of Ophthalmology, 54, 225-239. http://dx.doi.org/10.1001/archopht.1955.00930020231010

- 11. Woodward, J.D., Orlans, N.M. and Higgins, P.T. (2003) Biometrics: Identity Assurance in the Information Age. Osborne McGraw-Hill, New York.

- 12. Jain, A.K., Bolle, R.M. and Pankanti, S. (2005) Biometrics: Personal Identification in Networked Society. Kluwer Academic Publishers, Dordrecht.

- 13. Farzin, H., Abrishami-Moghaddam, H. and Moin, M.-S. (2008) A Novel Retinal Identification System. EURASIP Journal on Advances in Signal Processing, 2008, Article ID: 280635. http://dx.doi.org/10.1155/2008/280635

- 14. Zana, F. and Klein, J.C. (2001) Segmentation of Vessel-Like Patterns Using Mathematical Morphology and Curvature Evaluation. IEEE Transactions on Image Processing, 10, 1010-1019. http://dx.doi.org/10.1109/83.931095

- 15. Zhang, Y., Hsu, W. and Lee, M.L. (2009) Detection of Retinal Blood Vessels Based on Nonlinear Projections. Journal of Signal Processing Systems, 55, 103-112. http://dx.doi.org/10.1007/s11265-008-0179-5

- 16. Fukuta, K., Nakagawa, T., Hayashi, Y., Hatanaka, Y., Hara, T. and Fujita, H. (2011) Personal Identification Based on Blood Vessels of Retinal Fundus Images. Proceedings of SPIE, 6914.

- 17. Bevilacqua, V., Cariello, L., Columbo, D., Daleno, D., Fabiano, M.D., Giannini, M., Mastronardi, G. and Castellano, M. (2008) Retinal Fundus Biometric Analysis for Personal Identifications. In: Huang, D.-S., Wunsch II, D.C., Levine, D.S. and Jo, K.-H., Eds., Advanced Intelligent Computing Theories and Applications. With Aspects of Artificial Intelligence, Lecture Notes in Computer Science, Vol. 5227, Springer, Berlin, 1229-1237. http://dx.doi.org/10.1007/978-3-540-85984-0_147

- 18. Akram, M.U., Tariq, A. and Khan, S.A. (2011) Retinal Recognition: Personal Identification Using Blood Vessels. 6th International Conference on Internet Technology and Secured Transactions, Abu Dhabi, 11-14 December 2011, 180-184.

- 19. Ortega, M., Penedo, M.G., Rouco, J., Barreira, N. and Carreira, M.J. (2009) Retinal Verification Using a Feature Points-Based Biometric Pattern. EURASIP Journal on Advances in Signal Processing, 2009, Article ID: 235746. http://dx.doi.org/10.1155/2009/235746

- 20. Fatima, J., Syed, A.M. and Usman Akram, M. (2013) Feature Point Validation for Improved Retina Recognition. IEEE Workshop on Biometric Measurements and Systems for Security and Medical Applications (BIOMS), Napoli, 9 September 2013, 13-16. http://dx.doi.org/10.1109/BIOMS.2013.6656142

- 21. Yu, H., Han, J. and Chang, K.C. (2002) PEBL: Positive-Example Based Learning for Web Page Classification Using SVM. Proceedings of the 8th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD’02), Edmonton, 23-25 July 2002, 239-248. http://dx.doi.org/10.1145/775047.775083

- 22. Cristianini, N. and Shawe-Taylor, J. (2000) An Introduction to Support Vector Machines and Other Kernel-Based Learning Methods. Cambridge University Press, Cambridge. http://dx.doi.org/10.1017/CBO9780511801389

- 23. Jonsson, K., Kittler, J., Li, Y.P. and Matas, J. (2002) Support Vector Machines for Face Authentication. Image and Vision Computing, 20, 369-375. http://dx.doi.org/10.1016/S0262-8856(02)00009-4

- 24. Statnikov, A., Aliferis, C.F., Hardin, D.P. and Guyon, I. (2011) A Gentle Introduction to Support Vector Machines in Biomedicine: Theory and Methods. World Scientific Publishing Co Pte Ltd, Hackensack. http://dx.doi.org/10.1142/7922

- 25. Tomar, D. and Agarwal, S. (2015) Twin Support Vector Machine: A Review from 2007 to 2014. Egyptian Informatics Journal, 16, 55-69. http://dx.doi.org/10.1016/j.eij.2014.12.003

- 26. Akram, M.U., Nasir, S., Anjum, M.A. and Javed, M.Y. (2009) Background and Noise Extraction from Colored Retinal Images. IEEE World Congress on Computer Science and Information Engineering, Los Angeles, 31 March-2 April 2009, 573-577. http://dx.doi.org/10.1109/csie.2009.753

- 27. Shen, L.L., Bai, L. and Fairhurst, M. (2007) Gabor Wavelets and General Discriminant Analysis for Face Identification and Verification. Image and Vision Computing, 25, 553-563. http://dx.doi.org/10.1016/j.imavis.2006.05.002

- 28. Liu, C. and Wechsler, H. (2002) Gabor Feature Based Classification Using the Enhanced Fisher Linear Discriminant Model for Face Recognition. IEEE Transactions on Image Processing, 11, 467-476. http://dx.doi.org/10.1109/TIP.2002.999679

- 29. Meshgini, S., Zadeh, A.A. and Arabi, H.S. (2013) Face Recognition Using Gabor Based Direct Linear Discriminant Analysis and Support Vector Machine. Computers & Electrical Engineering, 39, 727-745. http://dx.doi.org/10.1016/j.compeleceng.2012.12.011

- 30. Haghighat, M.B.A. and Namjoo, E. (2011) Evaluating the Informativity of Features in Dimensionality Reduction Methods. 5th International Conference on Application of Information and Communication Technologies, AICT, Baku, 12-14 October 2011, 1-5. http://dx.doi.org/10.1109/icaict.2011.6110938

- 31. Ortega, M., Penedo, M.G., Rouco, J., Barreira, N. and Carreira, M.J. (2009) Personal Verification Based on Extraction and Characterization of Retinal Feature Points. Journal of Visual Languages and Computing, 20, 80-90. http://dx.doi.org/10.1016/j.jvlc.2009.01.006

- 32. Yan Lam, B.S. and Yan, H. (2008) A Novel Vessel Segmentation Algorithm for Pathological Retina Images Based on the Divergence of Vector Fields. IEEE Transactions on Medical Imaging, 27, 237-246.