Journal of Software Engineering and Applications

Vol. 5 No. 5 (2012) , Article ID: 19434 , 11 pages DOI:10.4236/jsea.2012.55040

A New Maturity Model for Requirements Engineering Process: An Overview


1Department of Software Engineering, College of IT, Universiti Tenaga Nasional, Kajang, Malaysia; 2Advanced Informatics School, Universiti Teknologi Malaysia, Kuala Lumpur, Malaysia; 3Faculty of Computer Science and Information Technology, Universiti Putra Malaysia, Serdang, Malaysia.

Email: badariah@uniten.edu.my, shamsul@utm.my, azim@fsktm.upm.edu.my

Received January 2nd, 2012; revised February 7th, 2012; accepted March 29th, 2012

Keywords: Requirements Engineering (RE); Process Improvement; Maturity Model

ABSTRACT

It is widely acknowledged that Requirements Engineering (RE) has important implications for the overall success of software or system development projects. As more and more organizations consider RE to be the principal problem area in their projects, improving the RE process appears critical for future business success. Moreover, there is now evidence that improving RE process maturity can contribute to improved business performance. There exist generic Software Process Improvement (SPI) standards, specialised RE process improvement models, as well as guidance and advice on RE. However, they suffer from various issues that limit their adoption by organizations that are interested in assessing and improving their RE process capability. Therefore, the research presented in this paper proposes a new RE process improvement model. The model is built by adapting and expanding the structure of the continuous representation of the formal maturity framework Capability Maturity Model Integration for Development (CMMI-DEV), developed by the Software Engineering Institute (SEI). It was built through three rounds of development and validation stages, which involved a panel of RE and CMMI experts from the software industry. This paper aims to provide an overview of what, why and how we built the maturity model for RE. The intention is to provide a foundation for future development in the area of RE process improvement.

1. Introduction

System and software development projects have been plagued with problems since the 1960s [1]. Since then, Requirements Engineering (RE) has become one of the central research topics in the field of software engineering. Although progress in RE has been painfully slow, with software development projects continuing to experience problems associated with RE [2], research effort in the area continues. This research is mainly motivated by the potential benefits expected from the successful implementation of an improved RE process. It is widely acknowledged that the RE process has important implications for the overall success of projects [3,4]. Moreover, there is now empirical evidence, such as that demonstrated in [5,6], that supports the claimed benefits of RE in improving a software project by improving productivity [7,8], assuring quality [7,9], and reducing project risk [10].

A RE process is a structured set of activities which are followed to gather, evaluate, document, and manage requirements for a software or software-containing product throughout its development lifecycle. There exist RE standards that set out general principles and give detailed guidance for performing the RE process. Examples of RE standards include the IEEE Guide for Developing System Requirements Specifications [11], and the IEEE Recommended Practice for Software Requirements Specifications [12]. However, these standards offer no aid for selecting appropriate methods or for designing a RE process optimized for a particular organization [13]. In a survey reported in [14], an expert panel consisting of both practitioners and academics agreed that the RE process remains the most problematic of all software engineering activities. Moreover, the results of three surveys involving software development companies in the UK [15,16], Australia [17], and Malaysia [18] confirmed that these companies still considered RE problems very significant.

Another survey [19] clearly demonstrates that RE process improvement is an important issue. Consequently, many organizations seek to improve their RE process by adopting generic Software Process Improvement (SPI) standards and frameworks [20] such as the Software Engineering Institute’s (SEI’s) Capability Maturity Model (CMM) and Capability Maturity Model Integration (CMMI) [21], ISO/IEC 15504, and Six Sigma [22]. However, a European survey of organizations engaged in SPI programs during the 1980s confirmed that the SPI models then available offered no cure for RE problems [13]. These enthusiastic adopters of SPI programs found that while SPI brought them significant benefits, their problems in handling requirements remained hard to solve.

This and several other problems related to the process have motivated the development of several specialised RE process improvement models. They include the Requirements Engineering Good Practice Guide (REGPG) [1]; the Requirements Capability Maturity Model (R-CMM) [14,23]; the Requirements Engineering Process Maturity Model (REPM) [24]; and the Market-Driven Requirements Engineering Process Model (MDREPM) [25]. However, these models also suffer from problems and issues that could hinder organizations from adopting them. Not only are the models tied to the CMM or SW-CMM, which has been obsolete and unsupported since the release of the newer CMMI, but they are also either too complex, applicable to only limited types of RE process and application domain, or still in draft form and yet to be completely developed and validated.

Inspired by the strengths of the existing generic SPI and RE process improvement models, we started a study to build a new, complete model that can be used to assist organizations in assessing and improving their RE process maturity levels. The model is known as REPAIM, which stands for Requirements Engineering Process Assessment and Improvement Model. To our knowledge, and based on prior work, the new RE maturity model component of the REPAIM has been completely and consistently provided with detailed, explicit guidance and advice on RE practices, and it centres improvement on the RE best practices presented within the CMMI-DEV standard. Therefore, the maturity model can be used to interpret the implementation of the RD and REQM process areas of CMMI-DEV, perhaps without depending heavily on consultants. Also, our model can provide insights into the effects of SPI for software organisations, mainly those in the country that are yet to be certified, in particular with the CMMI-DEV certification.

In this paper we explain the stages involved in developing the RE maturity model, the motivations for building the REPAIM, and the rationales for building the model based on existing frameworks and assessment methods. In section three, we present an overview of the model components, and section four summarizes and concludes this paper.

2. Methodology

The RE process improvement model was developed through multiple rounds of development and validation stages, which involved an expert panel from the industry. Three rounds of model development were performed, with the results of the first two rounds of validation fed back to the development stage to improve the model. The multiple rounds of validation were designed based on the Delphi method, originally developed by Norman Dalkey in the 1950s [26], which was modified to fit the purpose of the model validation. Besides being acknowledged by some as a popular and well-known research method [27], the Delphi method is also considered by others to be a technique that can be ‘effectively modified’ to meet the needs and nature of a study [28]. Reviews of how the Delphi method has been implemented by others show that the number of Delphi rounds ranges from one to five, with three rounds being typical [29,30]. Although Cantrill, Sibbald, and Buetow [31] stated that expert panel sizes in Delphi studies vary from 4 to 3000, the panel size can be as small as 3 experts. In this research, the validation stage involved three rounds and 32 experts [32]. The panel size in this research was determined in line with the recommendation by Cantrill and colleagues that panel size “should be governed by the purpose of the investigation”.

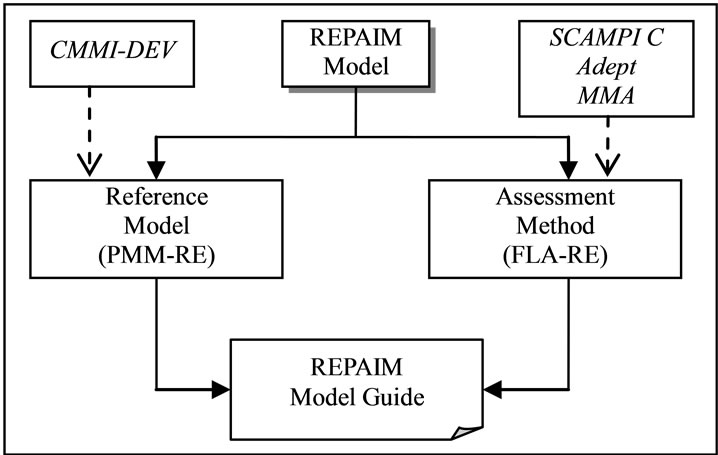

The REPAIM has two main components: the RE process maturity model and the RE process assessment method. The RE process maturity model is known as the PMM-RE, which stands for Process Maturity Model for RE, while the RE process assessment method is known as FLA-RE, which stands for Flexible Lightweight Assessment method for RE. Hence, the two main steps of the REPAIM development stage comprise building the PMM-RE maturity model and building the FLA-RE process assessment method. Each of these two steps has its own activities. The activities in creating the RE process maturity model were emulated from the ways the existing generic and specific RE process improvement models were built. To develop PMM-RE, the maturity model framework was first created. This was followed by the identification of the structure and components of the maturity model. Finally, the PMM-RE was completed once each component in the model had been defined with detailed information. The development of the model framework, structure, and detailed components was guided by five identified success criteria: completeness, consistency, practicality, usefulness, and verifiability, as explained in our other paper [33]. To develop the FLA-RE assessment method, the RE assessment stages and steps were first identified based on observations of existing assessment methods, including SCAMPI Class C [34], Adept [35], and Modular Mini-assessment (MMA) [36]. Then, the components of the assessment method were defined with detailed information. Finally, the tools to support the assessment method were prepared.

2.1. Motivations for Developing the Model

There are various motivators for this work. We embarked on this study for the same reason that Linscomb [37] stressed in his article: an organization should define and measure its RE process maturity because doing so is economic (it helps to get more business and retain existing business) and it is the right thing to do. This research is also motivated by the potential benefits that successful implementation of an improved RE process is expected to bring to the overall success of projects [3,4], as demonstrated by the empirical research in Chisan [5] and Damian et al. [6]. Most importantly, a review of other research in recent years, such as Beecham et al. [38], Beecham, Hall, and Rainer [14], Gomes and Pettersson [39], Gorschek and Tejle [24], Sommerville and Ransom [40], Wiegers [41], and Young [2], has shown that there remains a need for an alternative RE process improvement model. This is mainly because existing RE textbooks, generic SPI models and standards (in particular CMMI-DEV), and even the specialised RE process improvement models such as REGPG, REPM, R-CMM and MDREPM seem unable to help software organisations in assessing and improving their RE process capability and maturity.

Inspired to offer a solution to organizations interested in improving their RE processes, we performed an empirical study, detailed in [18], to justify our then-future research work, which was to develop this model. To our surprise, the results of the study, which involved software development companies appraised at various maturity levels of CMMI-DEV, indicate that high-maturity ratings do not correlate with better performance and do not indicate effective, high-maturity practices. Possible reasons for this include what Humphrey stressed in [42]: “… with increasing marketplace pressure, organization often focus on maturity levels rather than process capability… we now see cases where high-maturity ratings do not indicate effective, high-maturity practices. It is not that their appraisal process is faulty or that organizations are dishonest, merely that the maturity framework does not look deeply enough into all organizational practices”. On further investigation, we found that many “good” practices were omitted from the CMMI because they could not be generalized to the broad audience of the model, as stated by Moore [43]. In the case of the RE process, omissions can be seen in the practices of the Requirements Development Process Area (RD PA) when compared with the RE process practices or activities commonly found in the literature.

Apart from the earlier mentioned motivations, we are also interested to offer a way out of the following issues that surround the CMMI-DEV:

1) The CMMI-DEV does not define RE maturity the way it should be defined based on industry standards and practices, as elaborated in [37]. In the staged representation, CMMI-DEV splits the entire RE domain into two PAs at two separate maturity levels, with the Requirements Management Process Area (REQM PA) first, followed by the Requirements Development Process Area (RD PA). This order of RE implementation and institutionalization is not always logical and can create issues [37]. For example, if an organization does not have an institutionalized way of eliciting requirements (at maturity level 3), then strictly speaking it would not have any requirements to manage at the lower maturity level 2. In the continuous representation, as another example, an organization might have a high capability level for the REQM PA (for example, 5) but a low capability level for the RD PA (for example, 0), which is not always logical.

2) Like ISO 9000, CMMI-DEV does not tell specifically how to actually do the REQM and RD work, as stated by Leffingwell and Widrig [44] and Humphrey [42]. Thus, to create a comprehensive software, or RE, process improvement approach that would satisfy the demanding ISO 9000 or CMMI assessors, organizations are forced to depend heavily on paid consultants, CMMI training, the experience of their team members, or reference books, causing the cost associated with the model to be very high.

3) The CMMI-DEV process maturity profile, published in September 2011 and accessible from the SEI website, shows that the number of organizations adopting the model in most countries is still low, with 58% of countries having only 10 or fewer appraisals [45]. The primary reason for not adopting CMMI is the high cost associated with the model; other reasons include that organizations were too small, had no time, were unsure of the SPI benefits, had other priorities, or were using another SPI approach [46,47].

Despite those issues that surround the CMMI-DEV, we chose to build our model based on this known maturity framework for several reasons as detailed in the next sub-section.

2.2. Rationales for Developing the RE Maturity Model Based on CMMI-DEV

Even though software engineering has witnessed the development of several other generic SPI standards and models, we limit our model’s compatibility to CMMI-DEV version 1.3, which was released in November 2010 [48], mainly because of the easy accessibility of this model compared to other models. Basing our model on the latest version of a known formal software process improvement framework offers practitioners several advantages. The rationales for basing our model on this maturity framework are as follows:

1) The CMMI framework is being adopted worldwide and is one of the few process models that attempt to define maturity levels of IT-related processes [37]. The CMMI also remains a de facto standard for “software-intensive system development” [21].

2) The CMMI framework is based on best practices derived from many years of empirical study and contains guidelines for RE practices. Even though these RE practices are treated differently from the standard RE components found in the literature and lack implementation details, the RE processes are integrated with software development.

3) The CMMI-DEV is designed to be tailored and adapted to focus on specific needs, as it is a normative model [49]. As suggested by Philips [50], a community that is interested in focusing on a specific area of process improvement may need to “provide interpretive guidance or expanded coverage of specific practices and goals” for the area. This is basically what we aimed to achieve in our model.

4) While our model can be used independently to assess RE process maturity (or capability), basing it on CMMI-DEV will enable practitioners to use it in conjunction with an on-going CMMI-DEV programme.

Our model, the REPAIM, taps into the strengths of the CMMI-DEV and reflects the updates made to the framework to form a specialised best-practice RE model. Like the other RE process improvement models, our model takes practitioners from a high-level view of the RE practices, through detailed descriptions, to a process assessment method, guiding companies towards satisfying their specific RE process improvement and general company goals.

2.3. Rationales for Developing the RE Assessment Method Based on Existing Methods

The RE process maturity model is built on the CMMI-DEV, and initially SCAMPI Class C seemed appropriate for the reference model because, according to Hayes et al. [34], the SCAMPI is “applicable to a wide range of appraisal usage modes, including both internal process improvement and external capability determinations”. However, the SCAMPI Class C method (just like the CMMI group of standards) was initially written for large organizations and is consequently difficult to apply in small company settings because of its complex requirements and the need to commit significant resources to achieve CMMI certification [51]. On the other hand, the MMA and Adept methods each have a set of guidelines for conducting CMM- or CMMI-conformant software process assessment, respectively, and focus on small companies.

However, both the SCAMPI Class C and MMA methods focus only on reviewing an organization’s processes, whereas Adept provides additional steps for establishing process improvement initiatives and for seeing the progress that has been accomplished by implementing them. Hence, the approach in this research is to combine several features and functions of these three assessment methods in a single process assessment method. The aim is to optimize the applicability and usefulness of the developed assessment method. Moreover, the new RE process maturity model is a specialised reference model. Hence, a specialised process assessment method that can help organizations, particularly small companies, examine their RE process against the model should be developed too. This approach is very similar to the way MA-MPS was developed for the MR-MPS Process Reference Model [52]. The term assessment as used here implies that an organization can perform informal assessments on and for itself. These assessments are intended to motivate organizations to initiate or continue RE process improvement programs.

3. Overview of the RE Maturity Model

The newly proposed model, REPAIM, is a specialized RE process improvement and assessment model. The model aims to be recognised as applicable to organizations that develop system and software products. The REPAIM also defines rules for its own implementation and assessment; hence it supports and assures coherent use according to its definitions. As mentioned earlier, REPAIM has two components: the PMM-RE reference model and the FLA-RE assessment method. Both model components are currently described in a single document called the REPAIM model guide, which was given to the expert panel during the validation stage. The components are shown in Figure 1.

Figure 1. Components of the RE maturity model.

The PMM-RE reference model contains the requirements that organizations must implement to be compliant with the REPAIM model. As mentioned earlier, the theoretical base used to create the PMM-RE is the CMMI-DEV. PMM-RE therefore does not present a ‘new’ framework. We place it within the formal maturity framework to guide practitioners towards improving their RE processes using a proven and familiar methodology. PMM-RE contains definitions of the RE process maturity levels. It also provides interpretive guidance and expanded coverage of practices and goals for the RE process. The FLA-RE assessment method describes assessment requirements, assessment stages and steps, assessment indicators and assessors’ requirements. While FLA-RE is applicable to organizations developing software or software-containing products, it is mainly oriented to small and medium-size enterprises (SMEs). The FLA-RE assessment stages and steps are based on characteristics of several process assessment methods. However, details of this assessment method are not covered in this paper.

3.1. PMM-RE Model Descriptions

The PMM-RE is a direct adaptation of the CMMI-DEV continuous representation, in which an organization that progresses in capability for a RE process is becoming more mature in that process. The PMM-RE initially had six maturity levels numbered 0 through 5, which were adapted from the six capability levels of the CMMI-DEV version 1.2 [21], as explained in our other paper [33] and thesis [53]. However, to ensure that the proposed model complements the latest version 1.3, released in November 2010 [48], PMM-RE has been tuned to contain only four RE maturity levels: 0 (Incomplete), 1 (Performed), 2 (Managed), and 3 (Defined).

Like the CMMI-DEV capability levels, each PMM-RE maturity level consists of a generic goal and its related generic practices, which can improve organizations’ RE processes. At each RE maturity level, organizations need to implement several RE practices to achieve the RE goal for the specific level. Unlike CMMI-DEV, which separates goals and practices into generic and specific ones to be satisfied by several process areas for a specific capability level, this model treats all practices as RE practices, since it focuses on a single process area only.

A RE practice is an essential task that must be performed as part of the RE process. Practices may be performed formally or informally. However, this does not mean that a practice is performed frequently or that most practitioners will necessarily perform it. Each RE practice in the PMM-RE has its practice guideline, which consists of components similar to the example shown in Figure 2. Each RE practice has a set of consistent components as follows:

1) Purpose: describes the aim that the practice is intended to achieve.

2) Descriptions: statements that explain what the practice is, and why the practice is performed.

3) Sub-practices: statements that provide guidance for interpreting and implementing the practice. They are meant only to provide ideas that may be useful for RE process improvement.

4) Typical Input/Output: lists examples of the input necessary for a practice to begin and the output produced by the practice. Input should not be an optional component, and output should generally be produced by one and only one practice. The examples are called typical output because there are often other outputs that may not be listed.

5) Techniques: lists the techniques for performing the practice or the different forms the output of the practice may take. A practice may have none, one, or more related techniques. A technique must be related to at least one practice. The techniques listed in this document are intended to cover those in most common and widespread use in the community.

6) Elaborations: statements that provide more details or information about the practice and its components. Explanations of techniques may be provided in this component.

Figure 2. Components of the PMM-RE.
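The six guideline components listed above can be pictured as a simple record. The following Python sketch is only an illustration under our own assumptions: the class name `PracticeGuideline`, its field names, and the `missing_components` check are hypothetical and are not part of the PMM-RE guide. The check encodes the two rules stated in the list: input is not optional, while a practice may have no techniques.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PracticeGuideline:
    """Hypothetical record mirroring the six guideline components."""
    practice_id: str                  # e.g. "RP 1.13" (numbering from the paper)
    name: str
    purpose: str
    description: str
    sub_practices: List[str] = field(default_factory=list)
    typical_inputs: List[str] = field(default_factory=list)
    typical_outputs: List[str] = field(default_factory=list)
    techniques: List[str] = field(default_factory=list)    # may be empty
    elaborations: List[str] = field(default_factory=list)

    def missing_components(self) -> List[str]:
        """Return the names of components that are empty but should not be."""
        missing = []
        if not self.purpose.strip():
            missing.append("purpose")
        if not self.description.strip():
            missing.append("description")
        if not self.typical_inputs:   # input is not an optional component
            missing.append("typical_inputs")
        return missing


# Illustrative content only; the wording below is a placeholder, not the guide's text.
example = PracticeGuideline(
    practice_id="RP 1.13",
    name="Manage Requirements Traceability",
    purpose="Placeholder purpose statement.",
    description="Placeholder description.",
)
print(example.missing_components())
```

A record like this makes it easy to check that every practice guideline carries the same set of components, which is one way to read the consistency criterion mentioned earlier.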

3.2. RE Maturity Levels

Each RE maturity level of REPAIM consists of a RE goal and its related RE practices, which can help improve the organization’s RE processes. The four RE maturity levels of the model provide a way to measure how well organizations can and do improve their RE processes. These RE maturity levels describe an evolutionary path for an organization that wants to improve its RE process. RE maturity levels may be determined by performing an assessment of the RE practices. Like the CMMI-DEV, the assessment can be performed for organizations that comprise entire companies (usually for small companies) or groups of projects within a company. For an organization to reach a particular RE maturity level, it must satisfy all of the RE practices that are targeted for improvement.
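The rule that a level is reached only when all of its targeted RE practices are satisfied can be sketched in a few lines of Python. This is a minimal illustration, not the FLA-RE assessment method: the function name `re_maturity_level` is hypothetical, and the sketch assumes that levels are cumulative (level 2 requires level 1 to be satisfied as well), in the spirit of the CMMI-DEV continuous representation. The practice identifiers follow the RP numbering used for levels 1 and 2 in this section; level 3 practices are not enumerated in this overview and are therefore omitted.

```python
from typing import Dict, Set


def re_maturity_level(required: Dict[int, Set[str]], satisfied: Set[str]) -> int:
    """Return the highest level L such that every practice required at
    levels up to L is satisfied; 0 denotes an incomplete RE process.

    Assumption: levels are cumulative, so a gap at a lower level
    blocks all higher levels.
    """
    level = 0
    for lvl in sorted(required):
        if required[lvl] <= satisfied:   # all practices of this level are met
            level = lvl
        else:
            break
    return level


# Practice identifiers as numbered in the paper (16 at level 1, 10 at level 2).
LEVEL_PRACTICES = {
    1: {f"RP 1.{i}" for i in range(1, 17)},
    2: {f"RP 2.{i}" for i in range(1, 11)},
}

# Hypothetical assessment result: all of level 1 plus one level 2 practice.
assessed = LEVEL_PRACTICES[1] | {"RP 2.1"}
print(re_maturity_level(LEVEL_PRACTICES, assessed))
```

Under these assumptions, an organization that misses even one level 1 practice stays at level 0, regardless of how many level 2 practices it performs.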

In general, various sources were consulted in defining the components of the PMM-RE model, as follows:

1) The generic practices (GPs) and specific practices (SPs) of the CMMI for Development [21,48].

2) The tasks of the Guide to the Business Analysis Body of Knowledge (BABOK Guide) version 2.0 [54].

3) The Education Units (EUs) of the IREB Certified Professional for Requirements Engineering Syllabus, Foundation Level, version 2.0 [55].

4) IEEE Guide for Developing System Requirements Specifications, IEEE Standard 1233, 1998 Edition [11].

5) IEEE Guide to the Software Engineering Body of Knowledge (SWEBOK), version 2004 [56].

6) Other sources such as books, conference and journal articles.

A short description of each RE maturity level follows.

3.2.1. Level 0: Incomplete RE Process

A RE maturity level 0 process is characterized as an “incomplete RE process”, which is similar to the characteristics of the capability level 0 of the CMMI-DEV. An incomplete RE process is a process that is either not performed or only partially performed. This means one or more of the RE practices are not implemented. No RE goal exists for this level since there is no reason to institutionalize a partially performed RE process.

3.2.2. Level 1: Performed RE Process

A RE maturity level 1 process is characterized as a “performed RE process”. At maturity level 1, the RE goal of level 1 is satisfied and all of the RE practices are implemented. At this maturity level, requirements are elicited, analysed, prioritised, documented, verified and validated; requirements changes are managed; requirements traceability is maintained; and requirements status tracking is established to the extent that it can demonstrate that all requirements have been implemented. In addition, requirements are allocated among product releases and components, and inconsistencies between requirements and the project work products related to the RE process are identified and resolved.

RE maturity level 1 often results in important improvements. However, those improvements cannot be maintained if they are not institutionalized, which can only be achieved through the implementation of RE practices at levels 2 and 3. Also, a performed RE process usually does not have the basic infrastructure in place to support the RE process. Therefore, organizations at RE maturity level 1 often produce products that work, but they frequently exceed their budgets and do not meet their schedules [57]. According to [57], the common problems found in such organizations relate to vague requirements, lack of traceability, an undefined RE process, insufficient RE resources, lack of training, and poor levels of skills.

Altogether, sixteen RE practices are implemented at this RE maturity level. The RE practices implemented at level 1 include:

RP 1.1 Conduct Requirements Elicitation;

RP 1.2 Establish a Standard Requirements Document Structure;

RP 1.3 Obtain an Understanding of Requirements;

RP 1.4 Obtain Commitment to Requirements;

RP 1.5 Analyze Requirements;

RP 1.6 Analyze Requirements to Achieve Balance;

RP 1.7 Prioritize Requirements;

RP 1.8 Model Requirements;

RP 1.9 Develop the Customer Requirements;

RP 1.10 Develop the Product Specifications;

RP 1.11 Verify Requirements;

RP 1.12 Validate Requirements;

RP 1.13 Manage Requirements Traceability;

RP 1.14 Allocate Requirements;

RP 1.15 Manage Requirements Changes;

RP 1.16 Identify Inconsistencies between Project Work and Requirements.

In general, the selection of the RE practices of the PMM-RE model was initially guided mostly by the generic goals and practices, and the specific goals and practices of the REQM and RD process areas of the CMMI-DEV. Subsequently, the other sources were used to expand the detailed guidelines of the RE practices to help organizations approach these practices. At the same time, the other sources helped to add, rephrase, or exclude certain practices from the CMMI-DEV. Thirteen of the sixteen RE practices of level 1 were initially adapted from the specific practices of the REQM and RD process areas of the CMMI-DEV. Some of these CMMI-DEV specific practices have been renamed to ease understanding and to give better visibility of what the practice is about, while others simply inherit their names from the maturity framework. For example, the adapted RD SP 3.2 Establish a Definition of Required Functionality has been renamed to RP 1.8 Model Requirements to give better visibility of the practice.

In another case, managing requirements traceability is one of the main tasks in the requirements management activity [53]. In the PMM-RE, the practice REQM SP 1.4 Maintain Bidirectional Traceability of Requirements, though adapted, has been renamed to RP 1.13 Manage Requirements Traceability. Bidirectional traceability is “the ability to trace both forward and backward (i.e., from requirements to end products and from end product back to requirements)” [58], and adoption of CASE tools can ease the implementation of this practice [59]. However, it appears that CASE tool adoption is still one of the challenges experienced by practitioners and reported in other related studies [60-62]. Therefore, it is reasonable to say that if REQM SP 1.4 were retained as it is, it might make the PMM-RE model appear less practical to organizations. Renaming the practice means that any form of traceability implementation should be sufficient, which implies that bidirectional traceability is now an optional practice, and so is the adoption of a CASE tool. This decision is mainly motivated by the practicality success criterion of the model development.
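To make the quoted definition of bidirectional traceability concrete, the following Python sketch keeps links in both directions so that a requirement can be traced forward to its work products and a work product back to its requirements. It is an illustration only: the class `TraceabilityMatrix`, its method names, and the identifiers such as `REQ-1` are hypothetical, and the sketch is neither a CASE tool nor a component prescribed by the PMM-RE, which treats bidirectional traceability as optional.

```python
from collections import defaultdict
from typing import Dict, List, Set


class TraceabilityMatrix:
    """Hypothetical in-memory store of requirement/work-product links."""

    def __init__(self) -> None:
        self._forward: Dict[str, Set[str]] = defaultdict(set)
        self._backward: Dict[str, Set[str]] = defaultdict(set)

    def link(self, requirement: str, work_product: str) -> None:
        """Record one link in both directions."""
        self._forward[requirement].add(work_product)
        self._backward[work_product].add(requirement)

    def trace_forward(self, requirement: str) -> List[str]:
        """Work products that realize the given requirement."""
        return sorted(self._forward.get(requirement, set()))

    def trace_backward(self, work_product: str) -> List[str]:
        """Requirements that the given work product traces back to."""
        return sorted(self._backward.get(work_product, set()))

    def untraced(self, requirements: List[str]) -> List[str]:
        """Requirements with no forward link to any work product."""
        return [r for r in requirements if not self._forward.get(r)]


# Hypothetical identifiers, for illustration only.
matrix = TraceabilityMatrix()
matrix.link("REQ-1", "design/login.md")
matrix.link("REQ-1", "test_login")
print(matrix.trace_forward("REQ-1"))
print(matrix.trace_backward("test_login"))
print(matrix.untraced(["REQ-1", "REQ-2"]))
```

A one-directional implementation would keep only the forward map; the renamed practice RP 1.13 would accept either form.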

The remaining three practices, RP 1.11 Verify Requirements, RP 1.2 Establish a Standard Requirements Document Structure, and RP 1.7 Prioritize Requirements, have been added to the model because they are considered common RE practices [41,54-55,63-64]. For example, although requirements verification is known as another critical RE practice alongside requirements validation, only the RD SP 3.5 Validate Requirements practice is present in CMMI-DEV. Therefore, this practice has been added as RP 1.11 Verify Requirements. It has been added to the model by adapting the specific goal of the Verification (VER) process area of the CMMI-DEV [21].

3.2.3. Level 2: Managed RE Process

A RE maturity level 2 process is characterized as a “managed RE process”. At maturity level 2, the RE process is planned, institutionalised for consistent performance, and executed in accordance with policy; it involves relevant stakeholders; it is allocated adequate resources; people are trained with the appropriate skills; the RE process is monitored, controlled and reviewed; RE process work products are placed under appropriate levels of control; RE process adherence is evaluated; and RE status is reviewed by higher management. The process discipline reflected by this RE maturity level helps to ensure that existing RE practices are retained even during times of stress. For a managed RE process, the process descriptions, standards, and procedures are applicable to a particular project or organization.

Ten RE practices are implemented at this RE maturity level:

RP 2.1 Establish an Organizational Requirements Engineering Policy;

RP 2.2 Plan the Requirements Engineering Process;

RP 2.3 Provide Adequate Resources;

RP 2.4 Identify and Involve Relevant Stakeholders;

RP 2.5 Assign Responsibility;

RP 2.6 Train People;

RP 2.7 Manage Configurations;

RP 2.8 Monitor and Control the RE Process;

RP 2.9 Objectively Evaluate Adherence;

RP 2.10 Review Status with Higher Level Management.

These ten RE practices are mostly adapted from generic goal (GG) 2 of the CMMI-DEV and its generic practices. However, detailed guidelines for several of these practices are also constructed by referring to three other CMMI process areas, namely Project Planning (PP), Project Monitoring and Control (PMC), and Process and Product Quality Assurance (PPQA). In addition, books and articles on software project management are consulted to create the detailed descriptions of the ten practices, such as the Project Management Body of Knowledge (PMBOK) [65], Schwalbe [66], Hughes and Cotterell [67], Olson [68], and Wysocki, Beck, and Crane [69]. The BABOK version 2.0 and the IREB CPRE Foundation Level Syllabus version 2.0 are also consulted.

3.2.4. Level 3: Defined RE Process

An RE maturity level 3 process is characterized as a “defined RE process”. At this maturity level, the RE process is typically described in more detail and is performed more rigorously than at level 2. A defined RE process clearly states the process purpose, assumptions, related standards, policy, what activities are carried out, the structuring or schedule of these activities, who is responsible for each activity, the inputs to and outputs from each activity, what resources are allocated, and the tools used to support the RE process. Similar to capability level 3 of CMMI-DEV, at this RE maturity level the standards, process descriptions, and procedures for a project are tailored from the organizational standard RE process. This is to ensure that the defined RE processes of two or more projects in the same organization are consistent. Hence, the organizational standard RE process should be established and improved over time. A standard RE process is one that describes the fundamental RE process elements that are expected in the defined RE process.

This maturity level also involves collecting information such as work products, process and product measures, measurement data and other improvement information. Commonly used RE process and product measures include the number of requirements changes and estimates of work product size, effort, and cost. These items are then stored in the organization’s measurement repository and the organization’s process assets library. The improvement proposals for the organizational RE process assets should also be collected. These activities are required to support the future use and improvement of the organization’s RE processes and process assets. Only two RE practices are implemented at this RE maturity level:

RP 3.1 Establish a Defined RE Process;

RP 3.2 Collect Improvement Information.

The two RE practices implemented at this maturity level are adapted from two generic practices of the CMMI-DEV (GP 3.1 Establish a Defined Process and GP 3.2 Collect Improvement Information) [21]. The detailed guidelines of these practices, however, are constructed by referring to one specific practice of the CMMI-DEV’s Integrated Project Management (IPM) process area (IPM SP 1.1 Establish the Project’s Defined Process) and to four specific practices of the Organizational Process Definition (OPD) process area (OPD SP 1.1, 1.3, 1.4, 1.5) [21].

All RE practices in the model are given unique numbers and listed in a sequence. However, the way the practices are listed in the sequence does not dictate any specific order of implementation or requirements engineering lifecycle. Iterative or agile methodology may require that the practices be performed in parallel, whereas phased methodology (e.g. waterfall model) may require multiple practices to be performed in every phase. Organizations may implement the practices in any order, as long as the necessary input to the practice is present.
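The level structure described in this section can be illustrated with a short sketch. It assumes a rating rule that this section does not itself state, namely that a level is reached only when every practice at that level and at all lower levels is implemented. The level 2 and level 3 identifiers follow the lists above; the level 1 entry contains only the practices named in this section and is therefore an incomplete placeholder. The names `PRACTICES_BY_LEVEL`, `achieved_level` and `missing_for` are hypothetical.

```python
# Practice identifiers per level. Levels 2 and 3 follow the lists in the text.
# Level 1 is an incomplete placeholder holding only the practices named here.
PRACTICES_BY_LEVEL = {
    1: ["RP 1.2", "RP 1.7", "RP 1.11", "RP 1.13"],
    2: [f"RP 2.{i}" for i in range(1, 11)],
    3: ["RP 3.1", "RP 3.2"],
}


def achieved_level(implemented, practices_by_level=PRACTICES_BY_LEVEL):
    """Highest level whose practices, and those of all lower levels, are implemented.

    Assumed rating rule (not taken from the model). Returns 0 when the
    level 1 practices are not all implemented.
    """
    implemented = set(implemented)
    level = 0
    for candidate in sorted(practices_by_level):
        if not set(practices_by_level[candidate]) <= implemented:
            break
        level = candidate
    return level


def missing_for(target_level, implemented, practices_by_level=PRACTICES_BY_LEVEL):
    """Practices still to be implemented before target_level is reached."""
    implemented = set(implemented)
    return [
        practice
        for level in sorted(practices_by_level)
        if level <= target_level
        for practice in practices_by_level[level]
        if practice not in implemented
    ]


# Example: everything at levels 1 and 2 except RP 2.6 (Train People).
done = [p for lvl in (1, 2) for p in PRACTICES_BY_LEVEL[lvl] if p != "RP 2.6"]
```

The order in which practices appear in `implemented` has no effect on the result, which mirrors the point that the numbering of the practices does not prescribe an order of implementation.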

4. Conclusion and Future Work

In this paper, we describe what, why and how we construct a specialised RE process improvement and assessment model, called the REPAIM, with the aim of assisting organizations in assessing and improving their RE process based on a proven maturity framework. The REPAIM has four RE maturity levels, which are adopted from the latest version 1.3 of the CMMI-DEV, and has been constructed with the following characteristics:

• The entire RE domain is considered as a single process area which consists of both requirements development (elicitation, analysis, specification, verification and validation) and requirements management. This is to eliminate any possibility of an organization (or project) encountering issues or an illogical order of RE implementation and institutionalisation.

• The model looks deeply into all its RE practices, as it comprises adequate components (i.e. purpose, descriptions, sub-practices, typical input/output, techniques, and elaborations) to provide detailed guidance for practitioners in improving and assessing their RE processes.

• The model is oriented mainly toward SMEs to allow a higher adoption rate among practitioners.

• The model is constructed by referring to various sources of reference to ensure that it reflects industry standards and practices.

The REPAIM has gone through three rounds of development and validation stages involving RE and CMMI experts in the industry. Results of the validation, which involved 33 experts [53], indicate that 80% of the experts agree that the REPAIM is complete, consistent, practical, useful and verifiable. This high level of support suggests that the REPAIM generally meets its development success criteria. The experts agreed that the REPAIM:

• is able to help assess RE processes and prioritise their improvement;

• adapts and complements existing maturity standards and assessment methods;

• is adaptable to the needs of organizations.

Despite the strengths mentioned above, the REPAIM is not without weaknesses. One weakness is that practitioners still appear to need training in order to interpret and understand the model, despite the detailed information provided in it. Moreover, even though the experts recognize the model as an adaptation of existing models, standards and assessment methods, it is not fully accepted as a model that is simple to understand, since it appears to require further examples, templates, and elaborations before it can be used effectively. One probable explanation for these findings is that “developers are rather sceptical at using written routines” [70]. To rectify these two drawbacks of the proposed REPAIM, future research could work on building self-training packages on RE activities that are compatible with the model. The self-training package concept is similar to the Action Package [71] and the Self-Training Package [72]. Providing training packages alone, however, may not be sufficient to eliminate the scepticism that practitioners might have about the detailed guidelines of the REPAIM. Therefore, future research should also explore an Experience Management (EM)-like [73] software tool to support the self-training packages, in order to help software developers train themselves in the proposed REPAIM or any other model-based process improvement approach.

5. Acknowledgements

We thank all the experts for their participation in the validation rounds of the model. The validation stage is part of a project funded by Universiti Tenaga Nasional through grant number J5100-50-184.

REFERENCES

- I. Sommerville and P. Sawyer, “Requirements Engineering: A Good Practice Guide,” John Wiley & Sons, Chichester, 1997.

- R. R. Young, “Effective Requirements Practices,” Addison-Wesley Longman Publishing Co., Inc., Boston, 2001.

- H. F. Hofmann and F. Lehner, “Requirements Engineering as a Success Factor in Software Projects,” Software, IEEE, Vol. 18, No. 4, 2001, pp. 58-66. doi:10.1109/MS.2001.936219

- S. Martin, A. Aurum, R. Jeffery and B. Paech, “Requirements Engineering Process Models in Practice,” Proceedings of the Seventh Australian Workshop in Requirements Engineering (AWRE’2002), Deakin University, Melbourne, 2-3 December 2002, pp. 141-155.

- J. Chisan, “Theory in Practice: A Case Study of Requirements Engineering Process Improvement,” Master Thesis, the University of Victoria, Victoria, 2005.

- D. Damian, D. Zowghi, L. Vaidyanathasamy and Y. Pal, “An Industrial Case Study of Immediate Benefits of Requirements Engineering Process Improvement at the Australian Center for Unisys Software,” Empirical Software Engineering, Vol. 9, No. 1, 2004, pp. 45-75. doi:10.1007/s10664-005-1288-4

- H. Wohlwend and S. Rosenbaum, “Software Improvements in an International Company,” Proceedings of the 15th International Conference on Software Engineering (ICSE 93), Baltimore, Maryland, 17-21 May 1993, pp. 212-220.

- S. Lauesen and O. Vinter, “Preventing Requirement Defects: An Experiment in Process Improvement,” Requirements Engineering, Vol. 6, No. 1, 2001, pp. 37-50. doi:10.1007/PL00010355

- J. D. Herbsleb and D. R. Goldenson, “A Systematic Survey of CMM Experience and Results,” Proceedings of the 18th International Conference on Software Engineering—ICSE, Berlin, 25-29 March 1996, pp. 323-330.

- J. G. Brodman and D. L. Johnson, “Return on Investment (ROI) from Software Process Improvement as Measured by US Industry,” Software Process: Improvement and Practice, Vol. 1, No. 1, 1995, pp. 35-47.

- IEEE, “IEEE Guide for Developing System Requirements Specifications. IEEE Std 1233, 1998 Edition,” Institute of Electrical and Electronics Engineers, Inc., New York, 1998.

- IEEE, “IEEE Recommended Practice for Software Requirements Specifications. IEEE Std 830-1998,” Institute of Electrical and Electronics Engineers, Inc., New York, 1998.

- P. Sawyer, “Maturing Requirements Engineering Process Maturity Models,” In: J. L. Mate and A. Silva, Eds., Requirements Engineering for Sociotechnical Systems, Idea Group Inc., Hershey, 2004, pp. 84-99.

- S. Beecham, T. Hall and A. Rainer, “Defining a Requirements Process Improvement Model,” Software Quality Journal, Vol. 13, No. 3, 2005, pp. 247-279. doi:10.1007/s11219-005-1752-9

- S. Beecham, T. Hall and A. Rainer, “Software Process Improvement Problems in Twelve Software Companies: An Empirical Analysis,” Empirical Software Engineering, Vol. 8, No. 1, 2003, pp. 7-42. doi:10.1023/A:1021764731148

- T. Hall, S. Beecham and A. Rainer, “Requirements Problems in Twelve Software Companies: An Empirical Analysis,” IEEE Proceedings of Software, Vol. 149, No. 5, 2002, pp. 153-160. doi:10.1049/ip-sen:20020694

- M. Niazi and S. Shastry, “Role of Requirements Engineering in Software Development Process: An Empirical Study,” Proceedings of the Multi Topic Conference (INMIC 2003), Islamabad, 8-9 December 2003, pp. 402-407.

- B. Solemon, S. Sahibuddin and A. A. A. Ghani, “Requirements Engineering Problems and Practices in Software Companies: An Industrial Survey,” Advances in Software Engineering, Vol. 59, 2009, pp. 70-77. doi:10.1007/978-3-642-10619-4_9

- M. Ibanez and H. Rempp, “European Survey Analysis,” European Software Institute, Technical Report, 1996.

- N. P. Napier, J. Kim and L. Mathiassen, “Software Process Re-Engineering: A Model and Its Application to an Industrial Case Study,” Software Process: Improvement and Practice, Vol. 13, No. 5, 2008, pp. 451-471. doi:10.1002/spip.390

- M. B. Chrissis, M. Konrad and S. Shrum, “CMMI: Guidelines for Process Integration and Product Improvement,” 2nd Edition, Upper Saddle River, Addison-Wesley, Boston, 2007.

- J. R. Persse, “Process Improvement Essentials: CMMI, Six SIGMA, and ISO 9001,” O’Reilly Media, Inc., Sebastopol, 2006.

- S. Beecham, T. Hall and A. Rainer, “Building a Requirements Process Improvement Model,” University of Hertfordshire, Technical Report, Hatfield, 2003.

- T. Gorschek and K. Tejle, “A Method for Assessing Requirements Engineering Process Maturity in Software Projects,” Master Thesis, Blekinge Institute of Technology, Ronneby, 2002.

- F. Pettersson, M. Ivarsson, T. Gorschek and P. Ohman, “A Practitioner’s Guide to Light Weight Software Process Assessment and Improvement Planning,” Journal of Systems and Software, Vol. 81, No. 6, 2007, pp. 972-995. doi:10.1016/j.jss.2007.08.032

- N. Dalkey and O. Helmer, “An Experimental Application of the Delphi Method to the Use of Experts,” Management Science, Vol. 9, No. 3, 1963, pp. 458-467. doi:10.1287/mnsc.9.3.458

- B. F. Beech, “Changes: The Delphi Technique Adapted for Classroom Evaluation of Clinical Placements,” Nurse Education Today, Vol. 11, No. 3, 1991, pp. 207-212. doi:10.1016/0260-6917(91)90061-E

- F. T. Hartman and A. Baldwin, “Using Technology to Improve Delphi Method,” Journal of Computing in Civil Engineering, Vol. 9, No. 4, 1995, pp. 244-249. doi:10.1061/(ASCE)0887-3801(1995)9:4(244)

- P. M. Mullen, “Delphi: Myths and Reality,” Journal of Health Organization and Management, Vol. 17, No. 1, 2003, pp. 37-52. doi:10.1108/14777260310469319

- G. J. Skulmoski, F. T. Hartman and J. Krahn, “The Delphi Method for Graduate Research,” Journal of Information Technology Education, Vol. 6, 2007, pp. 1-21.

- J. A. Cantrill, B. Sibbald and S. Buetow, “The Delphi and Nominal Group Techniques in Health Services Research,” International Journal of Pharmacy Practice, Vol. 4, No. 2, 1996, pp. 67-74. doi:10.1111/j.2042-7174.1996.tb00844.x

- S. S. Y. Lam, K. L. Petri and A. E. Smith, “Prediction and Optimization of a Ceramic Casting Process Using a Hierarchical Hybrid System of Neural Networks and Fuzzy Logic,” IIE Transactions, Vol. 32, No. 1, 2000, pp. 83-91. doi:10.1023/A:1007659531734

- B. Solemon, S. Sahibuddin and A. A. A. Ghani, “Redefining the Requirements Engineering Process Improvement Model,” Proceedings of the Asia-Pacific Software Engineering Conference, APSEC 2009, Penang, 1-3 December 2009, pp. 87-92.

- W. Hayes, E. E. Miluk, L. Ming and M. Glover, “Handbook for Conducting Standard CMMI Appraisal Method for Process Improvement (SCAMPI) B and C Appraisals, Version 1.1,” Software Engineering Institute (SEI), Pittsburgh, 2005.

- F. McCaffery, D. McFall and F. G. Wilkie, “Improving the Express Process Appraisal Method,” In: F. Bomarius and S. KomiSirvio, Eds., Product Focused Software Process Improvement, Springer, 2005, pp. 286-298.

- K. E. Wiegers and D. C. Sturzenberger, “A Modular Software Process Mini-Assessment Method,” IEEE Software, Vol. 17, No. 1, 2000, pp. 62-69.

- D. Linscomb, “Requirements Engineering Maturity in the CMMI,” 5 June 2008. http://www.stsc.hill.af.mil

- S. Beecham, T. Hall, C. Britton, M. Cottee and A. Rainer, “Using an Expert Panel to Validate a Requirements Process Improvement Model,” Journal of Systems and Software, Vol. 76, No. 3, 2005, pp. 251-275. doi:10.1016/j.jss.2004.06.004

- A. Gomes and A. Pettersson, “Market-Driven Requirements Engineering Process Model-MDREPM,” Master Thesis, Blekinge Institute of Technology, Ronneby, 2007.

- I. Sommerville and J. Ransom, “An Empirical Study of Industrial Requirements Engineering Process Assessment and Improvement,” ACM Transactions on Software Engineering and Methodology (TOSEM), Vol. 14, No. 1, 2005, pp. 85-117. doi:10.1145/1044834.1044837

- K. E. Wiegers, “Software Requirements,” 2nd Edition, Microsoft Press, Redmond, 2003.

- W. S. Humphrey, “CMMI: History and Direction,” In: M. B. Chrissis, et al., Eds., CMMI: Guidelines for Process Integration and Product Improvement, 2nd Edition, Upper Saddle River, Addison-Wesley, Boston, 2007, pp. 5-8.

- J. W. Moore, “The Role of Process Standards in Process Definition,” In: M. B. Chrissis, et al., Eds., CMMI: Guidelines for Process Integration and Product Improvement, Upper Saddle River, Addison-Wesley, Boston, 2007, pp. 93-97.

- D. Leffingwell and D. Widrig, “Managing Software Requirements: A Use Case Approach,” 2nd Edition, Addison-Wesley Professional, Boston, 2003.

- SEI, “Process Maturity Profile, September 2011,” 7 October 2011. http://www.sei.cmu.edu/cmmi/casestudies/profiles/pdfs/upload/2011SeptCMMI-2.pdf

- N. Khurshid, P. L. Bannerman and M. Staples, “Overcoming the First Hurdle: Why Organizations Do not Adopt CMMI,” Proceedings of the ICSP 2009-International Conference on Software Process (Co-Located with ICSE 2009), Vancouver, 16-17 May 2009, pp. 38-49.

- M. Staples, et al., “An Exploratory Study of Why Organizations Do not Adopt CMMI,” The Journal of System and Software, Vol. 80, No. 6, 2007, pp. 883-895. doi:10.1016/j.jss.2006.09.008

- SEI, “CMMI® for Development, Version 1.3,” Software Engineering Institute, Pittsburgh, Technical Report, 2010.

- M. C. Paulk, C. Weber, B. Curtis and M. B. Chrissis, “The Capability Maturity Model: Guidelines for Improving the Software Process,” Addison-Wesley, Reading, 1995.

- M. Philips, “CMMI: From the Past and Into the Future,” In: M. B. Chrissis, et al., Eds., CMMI: Guidelines for Process Integration and Product Improvement, 2nd Edition, Upper Saddle River, Addison-Wesley, Boston, 2007, pp. 11-15.

- S. P. Sun, “MSC Malaysia APEC Workshop on Software Standards for SMEs and VSEs—A New Global Standard for Today’s International Economy,” 2010. http://newscentre.msc.com.my/articles/1312/1/MSC-Malasia-APEC-Workshop-on-Software-Standards-for-SMEs-and-VSEs--A-New-Global-Standard-for-Todays-International-Economy/Page1.html

- K. Weber, et al., “Brazilian Software Process Reference Model and Assessment Method,” Computer and Information Sciences-ISCIS 2005, Vol. 3733, 2005, pp. 402-411. doi:10.1007/11569596_43

- B. Solemon, “Requirements Engineering Process Assessment and Improvement Approach for Malaysian Software Industry,” PhD Thesis, Department of Computer Science and Information System, Universiti Teknologi Malaysia, Skudai, Johor, 2012.

- IIBA, “A Guide to the Business Analysis Body of Knowledge (BABOK Guide) Version 2.0,” International Institute of Business Analysis, Ontario, 2009.

- IREB, “Syllabus IREB Certified Professional for Requirements Engineering—Foundation Level-Version 2.0. English,” 2010. http://www.certified-re.de/fileadmin/IREB/Lehrplaene/Lehrplan_CPRE_Foundation_Level_english_2.1.pdf

- IEEE, “IEEE Guide for Software Engineering Body of Knowledge (SWEBOK) version 2004,” Institute of Electrical and Electronics Engineers, Inc., New York, 2004.

- S. Beecham, T. Hall, and A. Rainer, “Defining a Requirements Process Improvement Model,” University of Hertfordshire, Hatfield, 2003.

- SEI, “CMMI Models,” 2009. http://www.sei.cmu.edu/cmmi/start/faq/models-faq.cfm

- L. K. Meisenbacher, “The Challenges of Tool Integration for Requirements Engineering,” Proceedings of the Situational Requirements Engineering Processes (SREP’05) In Conjunction with 13th IEEE International Requirements Engineering Conference, Paris, 29-30 August 2005, pp. 188-195.

- [60] I. Aaen, A. Siltanen, C. Sørensen and V. P. Tahvanainen, “A Tale of Two Countries: CASE Experiences and Expectations,” In: K. E. Kendall, et al., Eds., The Impact of Computer Supported Technologies on Information Systems Development, IFIP Transactions A-8, North-Holland Publishing Co., Amsterdam, 1992, pp. 61-93.

- [61] R. J. Kusters and G. M. Wijers, “On the Practical Use of CASE-tools: Results of a Survey,” Proceedings of the 6th International Workshop on CASE, Singapore, 19-23 July 1993, pp. 2-10.

- [62] A. Kannenberg and D. H. Saiedian, “Why Software Requirements Traceability Remains a Challenge,” CrossTalk: The Journal of Defense Software Engineering, 2009, pp. 14-19.

- [63] I. Sommerville, “Software Engineering,” 8th Edition, Addison-Wesley, Boston, 2007.

- [64] S. Robertson and J. Robertson, “Mastering the Requirements Process,” Addison-Wesley, Harlow, 1999.

- [65] PMI, “A Guide to the Project Management Body of Knowledge (PMBOK Guide),” PMI Project Management Institute, Newtown Square, 2004.

- [66] K. Schwalbe, “Information Technology Project Management,” 2nd Edition, Course Technology, Thomson Learning, Massachusetts, 2002.

- [67] B. Hughes and M. Cotterell, “Software Project Management,” 3rd Edition, McGraw-Hill Companies, London, 2002.

- [68] D. L. Olson, “Introduction to Information System Project Management,” McGraw-Hill, Boston, 2001.

- [69] R. K. Wysocki, J. R. Beck and D. B. Crane, “Effective Project Management,” 2nd Edition, Wiley Computer Publishing, New York, 2000.

- [70] R. Conradi and T. Dyba, “An Empirical Study on the Utility of Formal Routines to Transfer Knowledge and Experience,” ACM SIGSOFT Software Engineering Notes, Vol. 26, No. 5, 2001, pp. 268-276.

- [71] J. A. Calvo-Manzano Villalón, et al., “Experiences in the Application of Software Process Improvement in SMES,” Software Quality Journal, Vol. 10, No. 3, 2002, pp. 261-273.

- [72] P. Saliou and V. Ribaud, “ISO-Standardized Requirements Activities for Very Small Entities,” Proceedings of the 16th International Working Conference on Requirements Engineering: Foundation for Software Quality, Essen, 30 June-2 July 2010, pp. 145-157.

- [73] V. Ribaud, P. Saliou and C. Y. Laporte, “Towards Experience Management for Very Small Entities,” International Journal on Advances in Software, Vol. 4, No. 1-2, 2011, pp. 218-230.