American Journal of Operations Research

Vol. 2 No. 1 (2012), Article ID: 17828, 8 pages. DOI:10.4236/ajor.2012.21005

Statistical Analysis of Process Monitoring Data for Software Process Improvement and Its Application

1Faculty of Science and Engineering, Hosei University, Tokyo, Japan

2Graduate School of Engineering, Tottori University, Tottori, Japan

Email: kees959@hotmail.com, m10t7004b@edu.tottori-u.ac.jp, yamada@sse.tottori-u.ac.jp

Received November 28, 2011; revised December 29, 2011; accepted January 14, 2012

Keywords: Software Process Improvement; Process Monitoring; Design Quality Assessment; Multiple Regression Analysis; Principal Component Analysis; Factor Analysis; Quantitative Project Assessment

ABSTRACT

Software projects are influenced by many human factors and therefore generate various risks. To develop high-quality software, it is important to respond to these risks reasonably and promptly; however, it is not easy for project managers to deal with all of them. It is therefore essential to manage process quality by promoting process monitoring and design quality assessment activities. In this paper, we discuss statistical data analysis for actual project management activities in process monitoring and design quality assessment, and quantitatively analyze their effects on software process improvement by applying methods of multivariate analysis. First, we show how process factors affect the management measures of QCD (Quality, Cost, Delivery) by applying multiple regression analysis to observed process monitoring data. Further, we quantitatively evaluate the effect of design quality assessment based on principal component analysis and factor analysis. The analysis shows that design quality assessment activities are effective for software process improvement. Finally, based on the result of quantitative project assessment, we discuss the usefulness of process monitoring progress assessment using a software reliability growth model. This result may provide a useful quantitative measure for product release determination.

1. Introduction

In recent years, with growing dependence on computerized systems, software development has become larger in scale, more complicated, and more diversified. At the same time, customers' demands for high quality and shortened delivery have increased. Therefore, we have to manage projects efficiently in order to develop high-quality software products. We also need to statistically analyze the process data observed in software development projects. Based on the process data, we can establish the PDCA (Plan-Do-Check-Act) management cycle in order to improve the software development process with respect to the software management measures of QCD (Quality, Cost, Delivery) [1,2].

There are many risks latent in software projects. These risks often lead to QCD-related problems, such as system failures, budget overruns, and delivery delays, which may cause the project to fail. To lead a software project to success, project managers need to apply adequate project management techniques in the software development process, drawing on both technological and management skills. However, it is not easy for them to respond to all the risks. Therefore, we discuss the following two improvement activities:

• Process monitoring activities;

• Assessment activities of design quality.

In process monitoring activities, a third party from the quality assurance unit reviews the process from the early stage of the project in order to find latent project risks and QCD-related problems, as shown in Figure 1 [3]. Process monitoring may help project managers to manage the project efficiently and to improve the management process so as to lead a project to success. In assessment activities of design quality, a third party likewise evaluates the completeness of the required specifications and design specifications. These assessment activities may improve the software development process by eliminating software faults.

The rest of this paper is organized as follows. Based on the results of Fukushima and Kasuga [4,5], Section 2 analyzes actual process monitoring data by using multivariate linear analyses, such as multiple regression analysis, principal component analysis, and factor analysis. We also use collaborative filtering to estimate missing values. Based on the derived quality prediction model, we clarify the software process factors that affect the quality of software products. Section 3 quantitatively evaluates the effect of introducing design quality assessment activities into process monitoring, based on principal component analysis and factor analysis. Section 4 discusses a method of quantitative project assessment with the process monitoring data based on a software reliability growth model. Finally, Section 5 summarizes the results obtained in this paper.

Figure 1. Overview of the process monitoring activities.

2. Factorial Analysis Affecting the Software Quality

2.1. Process Monitoring Data

We analyze the factors affecting the quality of software products by using the process monitoring data shown in Table 1. Ten variables measured by process monitoring are used as explanatory variables, and three variables, the software management measures of QCD, are used as objective variables. These variables are defined as follows:

X1: The number of problems detected in the contract review.

X2: The number of days taken to solve the problems detected in the contract review.

X3: The number of problems detected in the development planning review.

X4: The number of days taken to solve the problems detected in the development planning review.

X5: The number of problems detected in the design completion review.

X6: The number of days taken to solve the problems detected in the design completion review.

X7: The number of problems detected in the test planning review.

X8: The number of days taken to solve the problems detected in the test planning review.

X9: The number of problems detected in the test completion review.

X10: The number of days taken to solve the problems detected in the test completion review.

Yq: The number of detected faults, given by the following expression:

(The number of faults) = (The number of faults detected during acceptance testing) + (The number of faults detected during production).

Yc: The cost excess rate, given by the following expression:

(Cost excess rate) = (Actual cost value)/(Scheduled software development cost).

If the cost excess rate is over 1.0, the expenses exceed the software development budget.

Yd: The number of days by which delivery is delayed relative to the shipping time planned at project initiation.

There are some missing values in Table 1. Therefore, we apply collaborative filtering [6] to estimate these missing values. Project Nos. 17-21 are those in which design quality assessment was carried out, whereas Project Nos. 1-16 are those in which it was not.
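As an illustration of the imputation step, the following sketch fills a missing entry with a similarity-weighted average over the other projects. The cosine similarity, the helper name `impute_missing`, and the toy matrix are assumptions made for this example only; the procedure in [6] may differ in its normalization and in how neighbouring projects are selected.

```python
import numpy as np

def impute_missing(data):
    """Fill NaN entries with a similarity-weighted mean over the other rows (projects)."""
    data = np.asarray(data, dtype=float)
    filled = data.copy()
    n_rows = data.shape[0]
    for i, j in zip(*np.where(np.isnan(data))):
        weights, values = [], []
        for other in range(n_rows):
            if other == i or np.isnan(data[other, j]):
                continue
            # Compare the two projects only on variables observed in both.
            shared = ~np.isnan(data[i]) & ~np.isnan(data[other])
            if not shared.any():
                continue
            a, b = data[i, shared], data[other, shared]
            norm = np.linalg.norm(a) * np.linalg.norm(b)
            if norm == 0.0:
                continue
            similarity = float(a @ b) / norm  # cosine similarity (assumed choice)
            if similarity > 0.0:
                weights.append(similarity)
                values.append(data[other, j])
        if weights:
            filled[i, j] = np.average(values, weights=weights)
        else:
            # No usable neighbour: fall back to the column mean.
            filled[i, j] = np.nanmean(data[:, j])
    return filled

# Hypothetical toy data: rows are projects, columns are monitored variables.
toy = np.array([[2.0, 4.0, 6.0],
                [1.0, 2.0, np.nan],
                [4.0, 8.0, 12.0]])
filled = impute_missing(toy)
```

In this toy case both neighbouring projects are equally similar to the incomplete one, so the missing entry becomes the plain average of their values.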

2.2. Multiple Regression Analysis

By using the process monitoring data in Table 1, we perform correlation analysis among the explanatory and objective variables, with the following results:

• Contract review, design completion review, and test completion review show strong correlations with the measures of QCD.

• Yq shows strong correlation with Yc and Yd.

Based on the correlation analysis, we find that it is important to reduce the number of faults and ensure software quality in order to prevent cost excess and delivery delay. Therefore, X5, X7, and X10 are selected as important factors for estimating a software quality prediction model [7,8].
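The screening of explanatory variables by correlation can be sketched as follows. The helper `rank_by_correlation` and the numbers are hypothetical and are not taken from Table 1; the sketch only shows how Pearson correlations with an objective variable might be ranked.

```python
import numpy as np

def rank_by_correlation(X, y, names):
    """Return (name, r) pairs sorted by |Pearson r| with y, strongest first."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    r = np.array([np.corrcoef(X[:, j], y)[0, 1] for j in range(X.shape[1])])
    order = np.argsort(-np.abs(r))
    return [(names[j], float(r[j])) for j in order]

# Made-up data: six projects, three candidate explanatory variables.
X = np.array([[1, 5, 2],
              [2, 3, 7],
              [3, 6, 1],
              [4, 2, 5],
              [5, 4, 3],
              [6, 1, 6]], dtype=float)
# The objective variable is driven almost entirely by the first column.
y = 2.0 * X[:, 0] + np.array([0.1, -0.2, 0.0, 0.2, -0.1, 0.0])

ranking = rank_by_correlation(X, y, ["X5", "X7", "X10"])
for name, r in ranking:
    print(f"{name}: r = {r:+.3f}")
```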

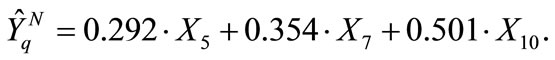

Then, a multiple regression analysis is applied to the process monitoring data shown in Table 1. Using X5, X7, and X10, we have the estimated multiple regression equation for predicting the number of software faults, $\hat{Y}_q$, given by Equation (1), as well as the normalized multiple regression expression, $\hat{Y}_q^N$, given by Equation (2), with the estimated coefficients summarized in Table 3:

$$\hat{Y}_q = b_0 + b_5 X_5 + b_7 X_7 + b_{10} X_{10}, \qquad (1)$$

$$\hat{Y}_q^N = b_5^N X_5^N + b_7^N X_7^N + b_{10}^N X_{10}^N. \qquad (2)$$

Table 1. Observed process monitoring data.

To check the goodness of fit of our model, the coefficient of multiple determination $R^2$ is calculated as 0.735. Furthermore, the coefficient of determination adjusted for degrees of freedom (adjusted $R^2$), called the contribution ratio, is 0.669. The results of the multiple regression analysis are summarized in Tables 2 and 3.
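The two goodness-of-fit values can be related through the usual adjustment for degrees of freedom, adjusted $R^2 = 1 - (1 - R^2)(n - 1)/(n - p - 1)$. The short check below assumes p = 3 explanatory variables and a sample size of n = 16 projects; the sample size is an assumption, since it is not stated in this section.

```python
def adjusted_r2(r2, n_samples, n_predictors):
    """Coefficient of determination adjusted for degrees of freedom."""
    return 1.0 - (1.0 - r2) * (n_samples - 1) / (n_samples - n_predictors - 1)

# n_samples=16 is an assumed value, not taken from the paper.
value = adjusted_r2(0.735, n_samples=16, n_predictors=3)
print(value)
```

Under these assumptions the result is approximately 0.669, in line with the reported contribution ratio.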

Table 2 indicates that the precision of these multiple regression equations is high, so we can predict the number of faults detected in the final products by using Equation (1). From Equation (2), the degree of influence on the objective variable Yq increases in the order X5 < X7 < X10. Therefore, we conclude that the design completion review, the test planning review, and the test completion review have an important impact on product quality.
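A minimal sketch of how such a fit and its normalized (standardized) coefficients might be computed is given below. The data are synthetic, the function name `fit_regression` is hypothetical, and ordinary least squares via NumPy is assumed; none of the numbers correspond to Table 1 or Table 3.

```python
import numpy as np

def fit_regression(X, y):
    """Ordinary least squares with intercept; returns coefficients,
    standardized slopes, and the coefficient of determination."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    design = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    residual = y - design @ coef
    r2 = 1.0 - (residual @ residual) / np.sum((y - y.mean()) ** 2)
    # Standardized slope = raw slope * sd(x) / sd(y); comparable across variables.
    beta_std = coef[1:] * X.std(axis=0, ddof=1) / y.std(ddof=1)
    return coef, beta_std, r2

# Synthetic stand-in for three explanatory variables over 16 projects.
rng = np.random.default_rng(1)
X = rng.poisson(4.0, size=(16, 3)).astype(float)
y = 1.0 + 0.5 * X[:, 0] + 1.0 * X[:, 1] + 2.0 * X[:, 2] + rng.normal(0.0, 0.1, size=16)

coef, beta_std, r2 = fit_regression(X, y)
print("intercept and slopes:", np.round(coef, 3))
print("standardized slopes:", np.round(beta_std, 3))
print("R^2:", round(float(r2), 4))
```

Because the standardized slopes are free of units, their magnitudes can be compared directly, which is the kind of comparison made when ordering the influence of X5, X7, and X10.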

3. Analysis of the Effect of Design Quality Assessment

3.1. Analysis Data

We analyze the effect of design quality assessment by using the process monitoring data in Table 1. We assume a model in which the risks at the start of the project negatively affect the management measures of QCD, while QCD can be improved by process monitoring activities and design quality assessment. Based on this hypothetical model, we use the initial project risk data (shown in Table 4) in addition to the process monitoring data. The new variables are defined as follows:

X11: The risk ratio at project initiation, given by the following expression:

$$\text{Risk ratio} = \sum_i \{\text{risk item}(i) \times \text{weight}(i)\}, \qquad (3)$$

where the risk estimation checklist assigns weight(i) to each risk item(i), and the risk score ranges between 0 and 100 points. Project risks are identified by interviews using the risk estimation checklist. From the identified risks, the risk score of a project is calculated by Equation (3).

Table 2. Table of analysis of variance.

Table 3. Estimated parameters.

Table 4. Initial project risks data.

X12: The development size, given by the following expression: Development size (kilo ($10^3$) steps) = (Number of newly developed steps) + 1.5 × (Number of modified steps) + (Influential rate) × (Number of reused steps). The influential rate ranges between 0.01 and 0.1.

X13: The number of days in the development period.
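The development size expression can be written as a small function. The function name and the input figures below are hypothetical, and all step counts are assumed to be given in kilo steps.

```python
def development_size(new_steps, modified_steps, reused_steps, influential_rate):
    """Development size: modified code weighted by 1.5, reused code by the influential rate."""
    if not 0.01 <= influential_rate <= 0.1:
        raise ValueError("influential rate is expected to lie between 0.01 and 0.1")
    return new_steps + 1.5 * modified_steps + influential_rate * reused_steps

# Hypothetical project: 10 k new, 4 k modified, and 100 k reused steps.
size = development_size(new_steps=10.0, modified_steps=4.0,
                        reused_steps=100.0, influential_rate=0.05)
print(size)
```

With these made-up inputs the size is 10 + 6 + 5 = 21 kilo steps, so a large reused code base contributes only modestly to the estimate.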

X14: The estimated man-hours (the development budgets divided by the development cost per hour).

3.2. Principal Component Analysis

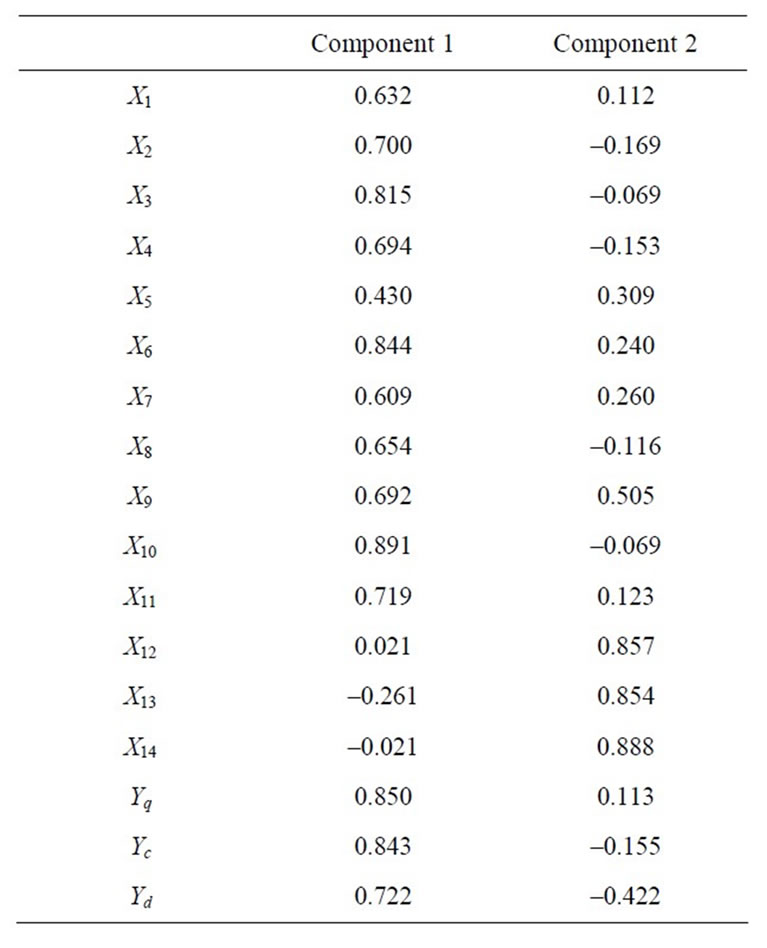

To clarify the relationships among the variables and to analyze the effect of design quality assessment activities on the management measures of QCD, principal component analysis [7,8] is performed by using the process monitoring data and the initial project risk data in Tables 1 and 4. Table 5 indicates that the precision of the analysis is high. The factor loading values are shown in Table 6, and the principal component scores in Table 7. From Table 6, we define the first and second principal components as follows:

• The first principal component is defined as the measure for QCD attainment levels.

• The second principal component is defined as the measure for software project estimation (development size, period, effort).

Figure 2 shows a scatter plot of the factor loading values. From Figure 2, the factors of process monitoring show positive correlation with the management measures of QCD. Therefore, we consider that the process monitoring activities have an important impact on the management measures of QCD.
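The quantities reported for a principal component analysis (eigenvalues, contribution ratios, factor loadings, and component scores) can be obtained as sketched below. The sketch assumes the analysis is carried out on the correlation matrix of standardized variables; the function name `principal_components` and the synthetic data are illustrative and do not reproduce Tables 5 to 7.

```python
import numpy as np

def principal_components(data):
    """PCA on the correlation matrix: eigenvalues, loadings, scores, contribution ratios."""
    data = np.asarray(data, dtype=float)
    z = (data - data.mean(axis=0)) / data.std(axis=0, ddof=1)
    corr = np.corrcoef(z, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(corr)
    order = np.argsort(eigvals)[::-1]            # largest eigenvalue first
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    # Loading = correlation between an original variable and a component.
    loadings = eigvecs * np.sqrt(np.clip(eigvals, 0.0, None))
    scores = z @ eigvecs                         # one row of scores per project
    contribution = eigvals / eigvals.sum()
    return eigvals, loadings, scores, contribution

# Synthetic data: 21 projects, four variables forming two correlated pairs.
rng = np.random.default_rng(0)
base = rng.normal(size=(21, 2))
data = np.column_stack([
    base[:, 0] + 0.1 * rng.normal(size=21),
    base[:, 0] + 0.1 * rng.normal(size=21),
    base[:, 1] + 0.1 * rng.normal(size=21),
    base[:, 1] + 0.1 * rng.normal(size=21),
])
eigvals, loadings, scores, contribution = principal_components(data)
print("cumulative contribution:", np.round(np.cumsum(contribution), 3))
```

Plotting the first two columns of `loadings` and of `scores` gives scatter plots of the kind described for Figures 2 and 3.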

Further, Figure 3 shows a scatter plot of the principal component scores, in which the projects with design quality assessment are plotted with a different mark from the projects without it. From Figure 3, the first principal component scores of the projects in which design quality assessment was carried out are small. This result shows that the projects in which the design quality assessment activities were carried out can reduce the number of faults, the cost excess, and the delivery delay.

Table 5. Summary of eigenvalues and principal components.

Table 6. Factor loading values.

Table 7. Principal component scores.

Figure 2. Scatter plot of the factor loading values.

Figure 3. Scatter plot of the principal component scores.

3.3. Factor Analysis

Factor analysis is performed on the same process monitoring data and initial project risk data in Tables 1 and 4 as used in the principal component analysis, and varimax rotation is applied to the factor axes. Table 8 indicates that the precision of the analysis is high. The factor loading values are shown in Table 9, and the factor scores in Table 10.

From Table 9, X5, X6, X9, and X10 belong to the same factor group, interpreted as “the value of project attainment”; X1, X2, X3, X4, and X8 as “the value of project planning”; X7, X11, Yq, and Yc as “the value of quality and cost”; and X12, X13, X14, and Yd as “the value of estimation and delivery”.

From Table 10, the third-factor scores of all the projects in which the design quality assessment activities were carried out are small. This result shows that the projects in which the design quality assessment activities were carried out can reduce the number of faults, the cost excess, and the delivery delay.
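The varimax step can be illustrated with a commonly used SVD-based iteration. The unrotated loading matrix below is made up for the example and is not taken from Table 9, and the function name `varimax` is a local helper rather than a library routine.

```python
import numpy as np

def varimax(loadings, max_iter=100, tol=1e-8):
    """Orthogonal varimax rotation; returns rotated loadings and the rotation matrix."""
    L = np.asarray(loadings, dtype=float)
    p, k = L.shape
    R = np.eye(k)
    criterion = 0.0
    for _ in range(max_iter):
        rotated = L @ R
        # Gradient of the varimax criterion with respect to the rotation.
        grad = L.T @ (rotated ** 3 - rotated * (np.sum(rotated ** 2, axis=0) / p))
        u, s, vt = np.linalg.svd(grad)
        R = u @ vt                      # nearest orthogonal matrix to the gradient
        new_criterion = s.sum()
        if new_criterion <= criterion * (1.0 + tol):
            break
        criterion = new_criterion
    return L @ R, R

# Hypothetical unrotated loadings: six variables on two factors.
L0 = np.array([[0.7, 0.5], [0.8, 0.4], [0.6, 0.6],
               [0.6, -0.5], [0.7, -0.4], [0.5, -0.6]])
rotated, R = varimax(L0)
print(np.round(rotated, 3))
```

After rotation each variable tends to load mainly on one factor, which is what makes it possible to group variables and name the factors; the communalities (row sums of squared loadings) are unchanged because the rotation is orthogonal.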

4. Quantitative Project Assessment

Next, we discuss quantitative project assessment based on the process monitoring data. A project progress growth curve in the process monitoring activities is defined as the relationship between the number of process monitoring progress phases and the cumulative number of QCD problems detected during process monitoring. We apply the Moranda geometric Poisson model [9], which is a software reliability growth model (SRGM), to the process monitoring data on X1, X3, X5, X7, and X9 shown in Table 1.

We base the project progress modeling on the Moranda geometric Poisson model because its analytic treatment is relatively easy. We use the number of process monitoring progress phases as the alternative unit of testing time, assuming that the observed testing-time data are discrete in an SRGM.

In order to describe a fault-detection phenomenon during process monitoring progress phase $i$ ($i = 1, 2, \ldots$), let $N_i$ denote a random variable representing the number of problems detected during the $i$th process monitoring progress interval $(T_{i-1}, T_i]$ ($T_0 = 0$; $i = 1, 2, \ldots$). Then, the problem-detection phenomenon can be described as follows:

$$\Pr\{N_i = x\} = \frac{(\lambda k^{i-1})^x}{x!} \exp[-\lambda k^{i-1}] \quad (\lambda > 0,\ 0 < k < 1;\ x = 0, 1, 2, \ldots), \qquad (4)$$

where $\Pr\{A\}$ means the probability of event $A$, and

$\lambda$ = the average number of problems detected in the first interval $(0, T_1]$,

$k$ = the decrease ratio of the number of problems detected by process monitoring activities.

From Equation (4), setting $T_i = i$ ($i = 1, 2, \ldots$), we obtain the following quantitative project assessment measures: the expected cumulative number of problems detected up to the $n$th process monitoring progress phase, $E(n)$, and the expected total number of problems latent in the software project, $E(\infty)$, given by Equations (5) and (6), respectively:

$$E(n) = \sum_{i=1}^{n} \lambda k^{i-1} = \frac{\lambda (1 - k^n)}{1 - k}, \qquad (5)$$

$$E(\infty) = \frac{\lambda}{1 - k}. \qquad (6)$$

Project assessment measures play an important role in the quantitative assessment of process monitoring progress. The expected number of remaining problems, $r(n)$, represents the number of problems latent in the software project at the end of the $n$th process monitoring progress phase, and is formulated as

$$r(n) = E(\infty) - E(n) = \frac{\lambda k^n}{1 - k}, \qquad (7)$$

Table 8. Analysis precision.

Table 9. Factor loading values.

Table 10. Factor scores.

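and the instantaneous MTBP, that is, the mean time between problem occurrences, is formulated as

$$MTBP(n) = \frac{1}{E(n+1) - E(n)} = \frac{1}{\lambda k^n}, \qquad (8)$$

with $\lambda$ and $k$ the parameters of the geometric Poisson model.

Further, the project reliability represents the probability that a problem does not occur in the time interval $(n, n + 1]$ ($n \geq 0$), given that process monitoring has progressed up to phase $n$. Then, the project reliability function is derived as

$$R(n) = \Pr\{N_{n+1} = 0\} = \exp[-\lambda k^n]. \qquad (9)$$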

We present numerical examples of the Moranda geometric Poisson model for Project No. 5. Figure 4 shows the estimated cumulative number of problems detected, E(n), together with the actual measured values during the process monitoring progress interval (0, n], where the parameters are estimated by the method of maximum likelihood as $\hat{\lambda} = 4.39$ (the average number of problems detected in the first interval) and $\hat{k} = 0.773$ (the decrease ratio). Figure 5 shows the estimated expected number of remaining problems, r(n). From Figure 5, about 5 problems remain at the end of the test completion review phase (n = 5).
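The numerical example can be retraced with a short sketch. It assumes the geometric form in which the expected number of problems in phase i is λk^(i−1), so that E(n) = λ(1 − k^n)/(1 − k) and E(∞) = λ/(1 − k). The function names, the bisection-based maximum-likelihood routine, and the `example_counts` values are illustrative assumptions; the counts are not the Project No. 5 data.

```python
def expected_cumulative(n, lam, k):
    """Expected cumulative number of problems detected up to phase n."""
    return lam * (1.0 - k ** n) / (1.0 - k)

def expected_total(lam, k):
    """Expected total number of problems latent in the project."""
    return lam / (1.0 - k)

def expected_remaining(n, lam, k):
    """Expected number of problems remaining after phase n."""
    return expected_total(lam, k) - expected_cumulative(n, lam, k)

def fit_geometric_poisson(counts, tol=1e-12):
    """Maximum-likelihood estimates (lam, k) for Poisson counts with means lam * k**(i-1)."""
    n = len(counts)
    total = float(sum(counts))
    # Likelihood equation for k: the count-weighted mean of (i-1) must equal
    # the mean of (i-1) under weights k**(i-1); the latter increases with k.
    target = sum(i * x for i, x in enumerate(counts)) / total

    def weighted_mean(k):
        w = [k ** i for i in range(n)]
        return sum(i * wi for i, wi in enumerate(w)) / sum(w)

    if not 0.0 < target < weighted_mean(1.0):
        raise ValueError("counts do not admit an estimate with 0 < k < 1")
    lo, hi = 0.0, 1.0
    while hi - lo > tol:                # bisection on the monotone equation
        mid = 0.5 * (lo + hi)
        if weighted_mean(mid) < target:
            lo = mid
        else:
            hi = mid
    k = 0.5 * (lo + hi)
    lam = total / sum(k ** i for i in range(n))
    return lam, k

# Reported estimates for Project No. 5.
r5 = expected_remaining(5, lam=4.39, k=0.773)
print(f"r(5) = {r5:.2f}")   # about 5.3, consistent with roughly 5 remaining problems

# Hypothetical per-phase problem counts, used only to exercise the estimator.
example_counts = [5, 3, 3, 2, 1]
lam_hat, k_hat = fit_geometric_poisson(example_counts)
```

The estimator reduces the two likelihood equations to a single monotone equation in k, which is why a simple bisection is sufficient here.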

Further, the estimated instantaneous MTBP is shown in Figure 6. Figure 6 indicates that the process monitoring activities are going well because the MTBP is growing. As for project reliability, the process monitoring activities need to be continued because the project reliability after the test completion review is only 39 percent.

5. Concluding Remarks

In this paper, we have discussed statistical data analysis for actual activities in process monitoring and design quality assessment, and have quantitatively analyzed their effects on software process improvement by applying methods of multivariate analysis. We have shown how process factors affect the management measures of QCD by applying multiple regression analysis to observed process monitoring data. Further, we have quantitatively evaluated the effect of design quality assessment based on principal component analysis and factor analysis.

From the multiple regression analysis, we have found that the design completion review, the test planning review, and the test completion review have an impact on final product quality. We can then consider that the problems in the test planning review and the test completion review were influenced by those in the design completion review. That is, it is very important to manage design quality in software development. At the same time, we have quantitatively confirmed that the design quality assessment activities are effective for software process improvement.

Figure 4. The estimated cumulative number of detected problems, E(n).

Figure 5. The estimated expected number of remaining problems, r(n).

Figure 6. The estimated instantaneous MTBP, MTBP(n).

Further, as a result of quantitative project assessment, we have confirmed the usefulness of process monitoring progress assessment based on the Moranda geometric Poisson model. These results enable us to give a useful quantitative measure of product release determination.

These results indicate that, in order to lead a software project to success, it is important to continuously improve the software development process by applying adequate project management techniques such as process monitoring and design quality assessment activities.

In the future, we need to derive a more accurate quality prediction model and to identify the factors that influence the management measures of QCD in order to lead software projects to success.

6. Acknowledgements

We are grateful to Dr. Kimio Kasuga and Dr. Toshihiko Fukushima for providing the actual process monitoring data. The authors would also like to thank Mr. Akihiro Kawahara and Mr. Tomoki Yamashita of the Graduate School of Engineering, Tottori University. This work was supported in part by the Grant-in-Aid for Scientific Research (C) (Grant No. 22510150) from the Ministry of Education, Culture, Sports, Science, and Technology of Japan.

REFERENCES

[1] S. Yamada and M. Takahashi, “Introduction to Software Management Model,” Kyoritu-Shuppan, Tokyo, 1993.

[2] S. Yamada and T. Fukushima, “Quality-Oriented Software Management,” Morikita-Shuppan, Tokyo, 2007.

[3] T. Fukushima and S. Yamada, “Improvement in Software Projects by Process Monitoring and Quality Evaluation Activities,” Proceedings of the 15th ISSAT International Conference on Reliability and Quality in Design, 2009, pp. 265-269.

[4] T. Fukushima, K. Kasuga and S. Yamada, “Quantitative Analysis of the Relationships among Initial Risk and QCD Attainment Levels for Function-Upgraded Projects of Embedded Software,” Journal of the Society of Project Management, Vol. 9, No. 4, 2007, pp. 29-34.

[5] K. Kasuga, T. Fukushima and S. Yamada, “A Practical Approach Software Process Monitoring Activities,” Proceedings of the 25th JUSE Software Quality Symposium, 2006, pp. 319-326.

[6] M. Tsunoda, N. Ohsugi, A. Monden and K. Matsumoto, “An Application of Collaborative Filtering for Software Reliability Prediction,” IEICE Technical Report, SS2003-27, 2003, pp. 19-24.

[7] S. Yamada and A. Kawahara, “Statistical Analysis of Process Monitoring Data for Software Process Improvement,” International Journal of Reliability, Quality and Safety Engineering, Vol. 16, No. 5, 2009, pp. 435-451. doi:10.1142/S0218539309003484

[8] S. Yamada, T. Yamashita and A. Fukuta, “Product Quality Prediction Based on Software Process Data with Development-Period Estimation,” International Journal of Systems Assurance Engineering and Management, Vol. 1, No. 1, 2010, pp. 72-76. doi:10.1007/s13198-010-0004-y

[9] P. B. Moranda, “Event-Altered Rate Models for General Reliability Analysis,” IEEE Transactions on Reliability, Vol. R-28, No. 5, 1979, pp. 376-381. doi:10.1109/TR.1979.5220648