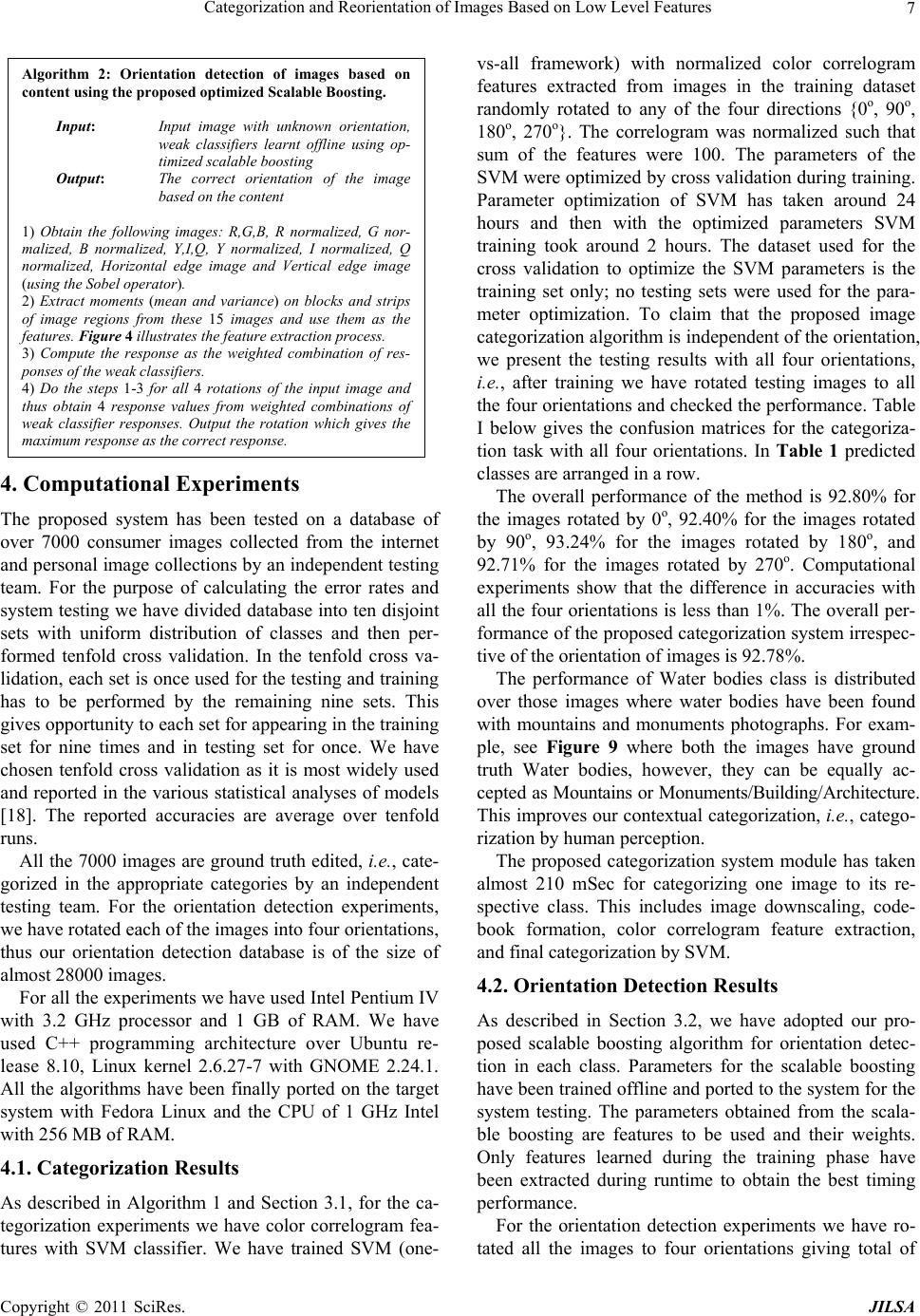

Journal of Intelligent Learning Systems and Applications, 2011, 3, *-**
doi:10.4236/jilsa.2011.31001 Published Online February 2011 (http://www.SciRP.org/journal/jilsa)
Copyright © 2011 SciRes. JILSA

Categorization and Reorientation of Images Based on Low Level Features

Rajen Bhatt1, Gaurav Sharma1, Abhinav Dhall1, Naresh Kumar1, Santanu Chaudhury2

1Samsung India Software Center, Noida, India; 2Electrical Engineering Department, Indian Institution of Technology Delhi, Delhi, India.
Email: rajen.bhatt@gmail.com, schaudhury@gmail.com

Received June 22nd, 2010; revised July 29th, 2010; accepted September 23rd, 2010

ABSTRACT

A hierarchical system to perform automatic categorization and reorientation of images using content analysis is presented. The proposed system first categorizes images into some a priori defined categories using rotation invariant features. At the second stage, it detects their correct orientation out of {0°, 90°, 180°, and 270°} using a category specific model. The system has been specially designed for embedded device applications using only low level color and edge features. Machine learning algorithms optimized to suit the embedded implementation, like support vector machines (SVMs) and scalable boosting, have been used to develop classifiers for categorization and orientation detection. Results are presented on a collection of about 7000 consumer images collected from open resources. The proposed system finds its applications in various digital media products and brings pattern recognition solutions to the consumer electronics domain.

Keywords: Categorization, Digital Content Management, Feature Selection, Orientation Detection, Scalable Boosting, Support Vector Machines

1. Introduction

Digital Content Management (DCM) has become an area of vital importance with growing multimedia information available to consumers through various mediums such as the internet, digital cameras, camcorders, mobile phones, and home recorders. Digital still images are the most important form of memories captured by various electronic means. With the advancements in digital cameras and in-camera imaging technologies, high resolution and fine quality images are increasing at the consumer end. Various occasions like family parties and gatherings, outbound holiday tours, adventure, and the still photography hobby generate large numbers of still images. In this paper, we present a DCM solution for still digital images which can automatically categorize images into four broad categories and detect their orientations from {0°, 90°, 180°, and 270°} based on the content analysis.

Categorization of images is an important step for enabling many tasks, e.g., indexing, searching, and retrieving images from large collections. Orientation detection is an important problem because digital camera users often rotate the camera before image capture, and when such images are fetched to the computer they are rotated and not synchronized with the view geometry and the human visual system. Automatic orientation detection adds convenience for users as it eliminates the need to manually correct the orientation of many rotated images.

The solution proposed by us is hierarchical in nature, i.e., first categorization and then orientation detection. For the categorization of images we have considered four broad categories, namely Mountains, Monuments/Building/Architecture, Water Bodies, and Portraits. As the rotation of the input image is unknown to the system, we extract rotation invariant features for the categorization task. Then within each category, we detect the correct orientation of images by the method tuned to the content-features of that particular category.

To make the proposed approach suitable for embedded devices, we make use of simple and computationally inexpensive features based on color and edge information of images. To further speed up the later stages of classification and orientation detection, we use support vector machines (SVM) for categorization and highly optimized scalable boosting for the orientation detection.

The present paper is organized as follows. In Section 2 some of the related work is described. We describe the proposed approach for categorization and orientation detection in Section 3. Computational experiments are reported in Section 4. Section 5 concludes the paper with target applications and directions for further research.

2. Related Work

Image categorization is an area of much recent research. However, most of the work uses scale invariant feature transform (SIFT) point detector features [1], which have high time complexity.
SIFT features are expensive in both computation and memory. A more recent image representation, bag of words [2], derived from text document analysis, has been shown to work very well for categorization tasks [2-4]. However, hardware limitations rule out such complex features here, since point detection and description for potentially many points on a full image is not feasible on the target devices.

Orientation detection is also a well-researched field. A Bayesian learning approach was proposed in [5]. In [6,7], the authors used SVMs with content-based features for orientation detection. They used spatial color moments (CM) and edge direction histograms (EDH) and trained eight parallel SVMs, two for each class, one per feature type. The classifiers were combined by averaging and by another SVM on top of the eight SVM outputs. The Adaboost-based approach proposed in [8] also used CM and EDH features. Its authors trained an indoor versus outdoor classifier using a similar Adaboost algorithm and then applied category specific orientation detection. However, we feel that an indoor/outdoor split is very broad and does not correspond to the conceptual classes that consumers use to sort their images. Novel features based on Quadrature Mirror Filters (QMF) were proposed in [9]. These features were used with a two-stage hierarchy of nonlinear SVMs. The first stage classified the image as portrait or landscape, and the second stage further classified landscape images as 0° or 180° and portrait images as 90° or 270°. A scalable boosting-based approach was proposed by a Google researcher in [10]. In [11], a large-scale performance evaluation of the image reorientation problem with different features and color spaces was carried out to identify the most useful ones. SVMs were trained on different subsets of the features and their performance was compared.
In [12], pre-classification into known categories was performed before orientation detection. The categories considered were close-ups and wide views of natural and artificial (man-made) things, giving a total of 4 categories. However, these categories carry little contextual meaning, and a more specific categorization should be considered for consumer applications.

3. The Proposed DCM Framework

The proposed DCM framework performs categorization first and then category specific orientation detection, as illustrated in Figure 1 with some example images. In the following sections we describe the features and machine learning approaches used for the stated problem.

Figure 1. Categorization and orientation correction.

3.1. Image Categorization

We categorize images into four classes, namely Mountains, Monuments/Building/Architecture, Water Bodies, and Portraits. Figure 2 shows examples of the images from the database. Portrait images are those in which human faces are prominent.

Figure 2. Example images from the four categories.

3.1.1. Low Level Color Correlogram Features

We solve the categorization problem with color correlogram features and SVM. For any pixel, the color correlogram gives the probability that a pixel at distance $k$ from it has a certain color. To be precise, pick any pixel $p_1$ of color $c_i$ in the image $I$ and pick another pixel $p_2$ at distance $k$ from $p_1$; the color correlogram gives the probability that $p_2$ has color $c_j$. The color correlogram feature is defined as [13]

$$\gamma^{(k)}_{c_i,c_j}(I) = \Pr\left[\, p_2 \in I_{c_j} \;\middle|\; |p_1 - p_2| = k,\ p_1 \in I_{c_i},\ p_2 \in I \,\right] \tag{1}$$

where $I_c$ denotes the set of pixels of $I$ that have color $c$. We use the dynamic programming algorithm given in [13] to compute the color correlogram feature for a given image.
First we compute the following two quantities:

$$\lambda^{c,h}_{(x,y)}(k) = \left|\{(x+i,\,y) \in I_c \mid 0 \le i \le k\}\right|, \qquad \lambda^{c,v}_{(x,y)}(k) = \left|\{(x,\,y+j) \in I_c \mid 0 \le j \le k\}\right| \tag{2}$$

These are the counts of the number of pixels of a given color $c$ within a given distance $k$ from a fixed pixel $(x, y)$ in the positive horizontal and vertical directions. Then, ignoring boundaries, the un-normalized correlogram is computed as

$$\Gamma^{(k)}_{c_i,c_j}(I) = \sum_{(x,y) \in I_{c_i}} \left( \lambda^{c_j,h}_{(x-k,\,y+k)}(2k) + \lambda^{c_j,h}_{(x-k,\,y-k)}(2k) + \lambda^{c_j,v}_{(x-k,\,y-k+1)}(2k-2) + \lambda^{c_j,v}_{(x+k,\,y-k+1)}(2k-2) \right) \tag{3}$$

Finally, the correlogram is computed as

$$\gamma^{(k)}_{c_i,c_j}(I) = \frac{\Gamma^{(k)}_{c_i,c_j}(I)}{8k \, h_{c_i}(I)} \tag{4}$$

where $h_{c_i}(I)$ is the histogram count of color $c_i$ in $I$ and $8k$ is the number of pixels at distance $k$ from any pixel.

The choice of the color correlogram as a feature was motivated by two factors: (a) the input images may be rotated by multiples of 90 degrees and the color correlogram is rotation invariant; and (b) images are expected to be color images, and color distribution (global and spatially relative) is a good discriminator for the different classes. While a histogram feature also captures color information from an image, it loses all the spatial information. The color correlogram captures the spatial (relative) correlation of colors while still allowing efficient computation. It has been proposed and used for image indexing and retrieval [13]. We show that the feature, together with a suitable classifier, can be used for image categorization with good results.

3.1.2. Color Codebook Formation

The color correlogram features are of dimension c × c × d, where d is the distance parameter and c is the number of colors in the image. To obtain a feature vector with a good compromise between length and discrimination power, the color space has to be quantized and the image has to be downscaled in the color space. The simplest way to quantize the color space is standard downscaling with uniform bin quantization. However, uniform bin quantization assumes a uniform distribution of colors in the database images.
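To make the limitation of uniform bins concrete, the following is a minimal sketch in Python (not the authors' implementation). The pixel values and the choice of 4 levels per channel are hypothetical, chosen only to show that a uniform grid leaves most bins empty on skewed data and can split visually similar colors across a bin boundary.

```python
from collections import Counter

def uniform_bin_index(pixel, levels):
    """Map an 8-bit (r, g, b) pixel to a bin of a uniform levels^3 grid."""
    step = 256 // levels
    r, g, b = (min(v // step, levels - 1) for v in pixel)
    return (r * levels + g) * levels + b

# Hypothetical sample: two sky blues and two foliage greens (assumed data).
pixels = (
    [(90, 150, 230)] * 6
    + [(100, 160, 240)] * 5
    + [(40, 120, 50)] * 4
    + [(50, 130, 60)] * 3
)

levels = 4  # 4 levels per channel, i.e. 64 uniform bins
occupancy = Counter(uniform_bin_index(p, levels) for p in pixels)
print(f"{len(occupancy)} of {levels ** 3} bins are used")
print(dict(occupancy))
```

In this toy sample the two blues share one bin, while the two similar greens fall on either side of the boundary at 128 and land in different bins; the remaining bins carry no information.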
Since the images are not random but constrained to be consumer images, the distribution of colors in them is expected to be a complex multimodal distribution rather than a simple uniform one. To capture the modes of the distribution, we sample pixels from the database images and form a codebook for quantization. We perform K-means clustering on the pixel samples to find the representative codebook vectors. These vectors are then used for downscaling the images by assigning each image pixel to the nearest codebook vector in terms of Euclidean distance.

3.1.3. Support Vector Machines (SVM)

Once the images are represented by color correlogram vectors, we use support vector machines (SVM) [14] to categorize the vectors into 4 classes. SVMs are statistical classifiers which, given training instance-label pairs $(x_i, y_i)$, $i = 1, \ldots, l$, with $x_i \in R^n$ and $y_i \in \{1, -1\}$, solve the following optimization problem:

$$\min_{w,\,b,\,\xi} \ \frac{1}{2} w^T w + C \sum_{i=1}^{l} \xi_i \quad \text{subject to} \quad y_i \left( w^T \phi(x_i) + b \right) \ge 1 - \xi_i, \ \ \xi_i \ge 0 \tag{5}$$

The SVM finds a separating hyperplane with maximum margin [15] in the high dimensional space induced by $\phi(\cdot)$, and has proven generalization capability. Figure 3 shows a toy classification problem and the separating hyperplane in 2D space. Samples on the margin are called support vectors. We train one-vs-all SVM classifiers on the training data. We have used the kernel SVM with the radial basis function (RBF) as the kernel. The RBF kernel is defined as

$$K(x_i, x_j) = \phi(x_i)^T \phi(x_j) = \exp\left( -\gamma \|x_i - x_j\|^2 \right), \quad \gamma > 0. \tag{6}$$

Figure 3. Example problem solved with linear SVM.

The RBF kernel is one of the most important and popular kernel functions. It adds a bump around each data point:

$$f(x) = \sum_{i=1}^{m} \alpha_i \exp\left( -\gamma \|x - x_i\|^2 \right) + b \tag{7}$$

With the RBF kernel, each data point is mapped as illustrated in Figure 4. An example classification boundary generated by the RBF kernel classifier is shown in Figure 5.
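As an illustration of Equations (6) and (7), the sketch below evaluates the RBF kernel and a one-vs-all decision in plain Python. It is not the libSVM code path; the support vectors, coefficients, biases, and the value of gamma are hypothetical, and the coefficients are assumed to already carry the sign of the training label.

```python
import math

def rbf_kernel(x, z, gamma):
    """K(x, z) = exp(-gamma * ||x - z||^2), as in Equation (6)."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * squared_distance)

def decision_value(x, support_vectors, alphas, bias, gamma):
    """f(x) = sum_i alpha_i * K(x, x_i) + b, as in Equation (7)."""
    bumps = (a * rbf_kernel(x, sv, gamma) for a, sv in zip(alphas, support_vectors))
    return sum(bumps) + bias

def one_vs_all_predict(x, models, gamma):
    """Return the label whose binary model gives the largest decision value."""
    scores = {
        label: decision_value(x, svs, alphas, bias, gamma)
        for label, (svs, alphas, bias) in models.items()
    }
    return max(scores, key=scores.get), scores

# Hypothetical two-class toy models: (support vectors, signed alphas, bias).
models = {
    "Mountains": ([(0.0, 0.0)], [1.0], -0.5),
    "Portraits": ([(3.0, 3.0)], [1.0], -0.5),
}
label, scores = one_vs_all_predict((0.2, 0.1), models, gamma=0.5)
print(label, scores)
```

Each support vector contributes a bump centered on itself, so the query point near (0, 0) receives a high score from the first model and almost none from the second.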
The cost parameter $C$ and the kernel parameter $\gamma$ were optimized using cross validation. We have used libSVM [15] for the target implementation.

Figure 4. Mapping data points using the RBF kernel function.

Figure 5. Example classification boundary generated by RBF kernel SVM.

3.1.4. Image Categorization Algorithm

Given a new image, the categorization system works as follows. First, the image is rescaled to a smaller size to save computation time. We experimented with downscaling images to 640×480 and 320×240 resolution. With 320×240 downscaling, we found only a marginal drop in classification accuracy, which can be recovered by tuning the SVM training parameters, while the computation time and complexity of the feature extraction algorithm drop almost four times. We have therefore downscaled every image to 320×240 resolution during both training and testing. The image is then also downscaled in the color dimension to a smaller number of colors, using the codebook of colors formed by K-means clustering of database pixel samples as explained in Section 3.1.2. Once the image is reduced to the smaller number of colors, an indexed image is formed that stores, for each pixel, the index of its codebook color. The color correlogram is then computed on this indexed image. The color correlogram is rotation invariant, i.e., it is the same for any of the four orientations of the image on which it is calculated. The correlogram is then used as input to the SVM model trained offline on the training dataset. The SVM returns the label of the category to which the image belongs, and the category corresponding to that label is given as the output of the system. Algorithm 1 below gives the steps of the computation.

3.2. Image Orientation Detection

Once the images are categorized into four categories, we proceed to detect the correct orientation based on their content analysis.
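The front end of the categorization algorithm in Section 3.1.4 (color downscaling to an indexed image, followed by the correlogram) can be sketched as follows. This is a minimal pure-Python illustration, not the authors' implementation: it counts neighbors directly instead of using the dynamic programming scheme of [13], measures distance with the chessboard (L-infinity) norm so that each pixel has 8k neighbors at distance k, and uses a hypothetical two-color codebook and a hypothetical 4×3 image.

```python
def nearest_index(pixel, codebook):
    """Index of the codebook vector closest to the pixel (Euclidean distance)."""
    def squared_distance(i):
        return sum((p - c) ** 2 for p, c in zip(pixel, codebook[i]))
    return min(range(len(codebook)), key=squared_distance)

def index_image(image, codebook):
    """Replace every pixel by the index of its nearest codebook color."""
    return [[nearest_index(px, codebook) for px in row] for row in image]

def correlogram(indexed, n_colors, max_dist):
    """Flattened correlogram of length n_colors * n_colors * max_dist."""
    h, w = len(indexed), len(indexed[0])
    hist = [0] * n_colors
    counts = [[[0] * max_dist for _ in range(n_colors)] for _ in range(n_colors)]
    for y in range(h):
        for x in range(w):
            ci = indexed[y][x]
            hist[ci] += 1
            for k in range(1, max_dist + 1):
                for dy in range(-k, k + 1):
                    for dx in range(-k, k + 1):
                        if max(abs(dx), abs(dy)) != k:
                            continue  # keep only the ring at distance exactly k
                        yy, xx = y + dy, x + dx
                        if 0 <= yy < h and 0 <= xx < w:
                            counts[ci][indexed[yy][xx]][k - 1] += 1
    return [
        counts[i][j][k - 1] / (8 * k * hist[i]) if hist[i] else 0.0
        for i in range(n_colors)
        for j in range(n_colors)
        for k in range(1, max_dist + 1)
    ]

def rotate90(grid):
    """Rotate a 2D list by 90 degrees clockwise."""
    return [list(row) for row in zip(*grid[::-1])]

# Hypothetical codebook (a blue and a green) and a tiny 4x3 RGB image.
codebook = [(30, 90, 200), (40, 140, 60)]
image = [
    [(28, 95, 210), (35, 88, 190), (45, 150, 70)],
    [(25, 92, 205), (42, 138, 55), (38, 145, 65)],
    [(33, 85, 198), (41, 139, 62), (39, 141, 58)],
    [(30, 91, 201), (29, 89, 202), (44, 136, 61)],
]
indexed = index_image(image, codebook)
features = correlogram(indexed, n_colors=2, max_dist=2)
rotated_features = correlogram(rotate90(indexed), n_colors=2, max_dist=2)
print(len(features), features == rotated_features)
```

With 2 colors and 2 distances the vector has 2 × 2 × 2 = 8 entries; with the codebook size of 12 and d = 4 stated in Algorithm 1, the same layout gives 576 entries. Because a 90 degree rotation maps each ring of neighbors onto itself, the rotated image yields the same vector.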
As our target is an embedded platform and a fast runtime algorithm is required, we again work with simple low level color and edge features. For the classification task, we combine simple classifiers with the Adaboost algorithm.

3.2.1. Feature Extraction

The orientation detection algorithm is based on scalable boosting [10]. To suit the implementation on embedded devices and to meet the time and accuracy targets, we have optimized the scalable boosting approach. The vanilla version of scalable boosting uses the following feature extraction scheme. From the original image, 15 simple transformed single channel images are computed, as shown in Figure 6:

1-3: R, G, B channels
4-6: Y, I, Q channels (transformation of the R, G, B image)
7-9: Normalized versions of the R, G, B channels (linearly scaled to span 0-255)
10-12: Normalized versions of the Y, I, Q channels (linearly scaled to span 0-255)
13: Intensity (simple average of R, G, B)
14-15: Horizontal and vertical edge images, respectively, computed from the intensity (i.e., gray level) image

Figure 6. Features calculated for scalable boosting algorithm.

For each of these transformed images, the mean and variance of the entire image are computed. Additionally, the mean and variance of square sub-regions of the image are computed. The sub-regions cover 1/2 × 1/2 to 1/6 × 1/6 of the image, which together with the whole image gives a total of 1 + 4 + 9 + 16 + 25 + 36 = 91 squares. The mean and variance of vertical and horizontal slices that cover 1/2 to 1/6 of the image are also computed. There are 2 + 3 + 4 + 5 + 6 = 20 horizontal and 20 vertical slices. Thus there are 15 × (91 + 20 + 20) = 1965 features for the mean and 1965 features for the variance, giving 3930 features in total.

However, calculating a 3930-dimensional feature vector over 15 channels is computationally heavy for embedded devices.
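The feature count above can be checked with a few lines of arithmetic. The sketch below only restates the numbers given in the text; the variable names are illustrative.

```python
channels = 15

# Square grids from 1x1 (whole image) to 6x6: 1 + 4 + 9 + 16 + 25 + 36.
squares = sum(n * n for n in range(1, 7))

# Slices covering 1/2 to 1/6 of the image: 2 + 3 + 4 + 5 + 6 per direction.
slices_per_direction = sum(range(2, 7))

regions_per_channel = squares + 2 * slices_per_direction
features_per_moment = channels * regions_per_channel
total_features = 2 * features_per_moment  # mean and variance

print(squares, slices_per_direction, regions_per_channel)
print(features_per_moment, total_features)
```

Every one of the 131 regions per channel needs a pixel sum and a sum of squares, which is where the cost on an embedded device comes from.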
We have optimized this stage by first computing an integral image over each channel [16]. An integral image is calculated by the summed-area table algorithm, which quickly and efficiently generates the sum of values in a rectangular subset of a grid. The integral image at location $(x, y)$ contains the sum of the pixels above and to the left of $(x, y)$, inclusive (Figure 7), i.e.,

$$InI(x, y) = \sum_{x' \le x,\ y' \le y} I(x', y') \tag{8}$$

where $InI(x, y)$ is the integral image and $I(x', y')$ is the original image. The integral image can be computed in one pass over the original image using the following pair of recurrences:

$$s(x, y) = s(x, y-1) + I(x, y), \qquad InI(x, y) = InI(x-1, y) + s(x, y) \tag{9}$$

where $s(x, y)$ is the cumulative row sum, $s(x, -1) = 0$, and $InI(-1, y) = 0$.

Figure 7. The value of the integral image at point (x, y).

On the database images (almost 7000 in number, scaled down to 640×480 for orientation detection), feature extraction during training with vanilla scalable boosting took 36 hours. Using the integral image, this time was reduced to 3 hours, a 12-fold reduction.

Another scope for optimization was found in the moment (mean and variance) calculation for rotated images. When the image is rotated, the value of each block remains the same. Hence each block at location (x, y) was mapped to its corresponding position in the rotated image. This avoided recalculating the block mean and variance values on the rotated integral image, and further reduced the feature extraction time during training and testing.

Algorithm 1: Rotation invariant categorization of input consumer images.
Input: Image with unknown orientation, color codebook built offline, SVM models trained offline
Output: The category of the image, e.g., Mountains, Monuments/Building/Architecture, Water Bodies, or Portraits, based on the content
1) Downscale the input image in the color dimension with respect to the codebook learnt offline. This gives an indexed image $I_{IND}$, each pixel of which contains the index of the corresponding codebook vector.
2) Form the correlogram using the indexed image. We have used a codebook of size 12 and set the distance parameter d = 4 in our model. This gives a vector of 12 × 12 × 4 = 576 dimensions.
3) Use the SVM model to obtain the label of the category to which the vector belongs. Output the category corresponding to the label returned by the SVM.

3.2.2. Scalable Boosting Classifier

Scalable boosting uses simple weak classifiers, strategically combined by the Adaboost algorithm, to craft the final strong classifier. The weak classifier is the output of a simple binary comparison between two of the features described in the previous subsection. Two simple Boolean operators (and their inverses) are used:

1) $feature_i > feature_j$
2) Difference of features within 25% of $feature_i$: $|feature_i - feature_j| < 0.25 \cdot feature_i$

Vanilla scalable boosting also uses the difference of features within 5%. However, we have found that, with the various optimizations performed, the first two operators are sufficient to achieve the target accuracies while reducing the time complexity. Thus, compared to vanilla scalable boosting, which has 46334700 weak classifiers (almost 46 million, 3930 × 3930 × 3), our implementation has 30889800 (more than 30 million, 3930 × 3930 × 2). This is still an extremely large number of weak classifiers to consider.

The main steps of the Adaboost algorithm are shown in Figure 8 [17]. At every iteration, Adaboost selects the best weak classifier with respect to the weighted errors of the previous step.
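To connect the feature extraction of Section 3.2.1 with the weak classifiers above, the sketch below computes block means and variances from integral images and then evaluates the two Boolean tests on a pair of features. It is a minimal illustration in plain Python under stated assumptions: the table is zero-padded instead of using the boundary terms of Equation (9), the variance is obtained from a second integral image of squared pixels (a common technique that the text does not spell out), and the 4×4 channel is hypothetical.

```python
def integral_image(channel):
    """Summed-area table with a zero border: ii[y][x] sums channel[:y][:x]."""
    h, w = len(channel), len(channel[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        running = 0  # cumulative sum along the current row
        for x in range(w):
            running += channel[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + running
    return ii

def box_sum(ii, x0, y0, x1, y1):
    """Sum over the half-open rectangle [x0, x1) x [y0, y1) in four lookups."""
    return ii[y1][x1] - ii[y0][x1] - ii[y1][x0] + ii[y0][x0]

def block_moments(ii, ii_sq, x0, y0, x1, y1):
    """Mean and variance of a block from the two integral images."""
    n = (x1 - x0) * (y1 - y0)
    mean = box_sum(ii, x0, y0, x1, y1) / n
    variance = box_sum(ii_sq, x0, y0, x1, y1) / n - mean * mean
    return mean, variance

def greater(fi, fj):
    return fi > fj

def within_25_percent(fi, fj):
    return abs(fi - fj) < 0.25 * abs(fi)

# Hypothetical intensity channel: bright sky on top, dark ground below.
channel = [
    [200, 210, 205, 195],
    [190, 200, 198, 202],
    [60, 70, 65, 55],
    [50, 40, 45, 60],
]
ii = integral_image(channel)
ii_sq = integral_image([[v * v for v in row] for row in channel])

top_mean, top_var = block_moments(ii, ii_sq, 0, 0, 4, 2)     # upper half slice
bottom_mean, _ = block_moments(ii, ii_sq, 0, 2, 4, 4)        # lower half slice
print(top_mean, top_var, bottom_mean)
print(greater(top_mean, bottom_mean), within_25_percent(top_mean, bottom_mean))
```

Once the two tables are built, every one of the 131 regions of a channel costs a constant number of lookups, independent of the region size.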
The weight changes in Step 4 are such that the weak classifier picked in Step 3 has an error of 0.5 on the newly weighted samples, so it will not be picked again at the next iteration. Once all the weak classifiers are selected, they are combined into a strong classifier by a weighted sum, where the weights are related to the re-weighting factors applied in Step 4.

Computing the accuracies of all the weak classifiers at each iteration is time-consuming. Although this affects only the training time and not the runtime, evaluating all of them is prohibitive. To reduce the training time, we randomly select which weak classifiers are evaluated at each iteration, and choose the new classifier only from those that were evaluated. The set of classifiers to be evaluated is drawn afresh at every iteration. Because of practical resource considerations during training, we have limited the number of weak classifiers examined in each iteration. We experimented with 10000 to 50000 weak classifiers per iteration in steps of 5000, which varied the training time of each AdaBoost classifier between approximately 1 and 3 days. In the final system, to meet the time-accuracy trade-off, we use the strong classifiers obtained by evaluating 30000 weak classifiers at each iteration of AdaBoost. Along with this setting of the scalable boosting classifier, we apply the optimization steps described in Section 3.2.1.

3.2.3. Image Orientation Detection Algorithm

We have optimized the scalable boosting algorithm as described in the sections above, and the weights for the combination of weak classifiers are learnt offline. Thus we have the weak classifiers and their weights from offline training of the model. When a new image comes in, we use the extracted features to compute the responses of the weak classifiers and combine the weighted responses to form the final response.
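The randomized selection step of Section 3.2.2 can be sketched as one boosting round that evaluates only a random subset of the candidate comparisons and keeps the one with the lowest weighted error. This is an illustrative sketch, not the authors' training code: the function names, the toy samples, and the handling of the inverse operators as a polarity flag are assumptions.

```python
import random
from itertools import product

OPERATORS = {
    "greater": lambda fi, fj: fi > fj,
    "within_25_percent": lambda fi, fj: abs(fi - fj) < 0.25 * abs(fi),
}

def candidate_tests(n_features):
    """All (i, j, operator) triples: n_features^2 * 2 candidates."""
    pairs = product(range(n_features), repeat=2)
    return [(i, j, name) for i, j in pairs for name in OPERATORS]

def apply_test(test, x, inverted=False):
    """Return 1 or 0 for sample x; 'inverted' flips the Boolean output."""
    i, j, name = test
    return int(OPERATORS[name](x[i], x[j]) != inverted)

def select_weak_classifier(samples, labels, weights, tests, n_evaluated, rng):
    """Pick the lowest weighted-error test among a random subset of candidates."""
    total = sum(weights)
    best = None
    for test in rng.sample(tests, min(n_evaluated, len(tests))):
        err = sum(
            w for x, y, w in zip(samples, labels, weights) if apply_test(test, x) != y
        )
        # The inverse operator has the complementary weighted error.
        for inverted, e in ((False, err), (True, total - err)):
            if best is None or e < best[0]:
                best = (e, test, inverted)
    return best

# Hypothetical features: (top-slice mean, bottom-slice mean, centre mean).
samples = [(200, 80, 120), (190, 90, 100), (70, 210, 130), (85, 180, 90)]
labels = [1, 1, 0, 0]  # 1 = upright, 0 = not upright (toy labels)
weights = [0.25, 0.25, 0.25, 0.25]

tests = candidate_tests(3)
rng = random.Random(0)
full = select_weak_classifier(samples, labels, weights, tests, len(tests), rng)
subset = select_weak_classifier(samples, labels, weights, tests, 5, rng)
print(len(tests), full, subset)
```

Because each candidate is considered together with its inverse, the selected weighted error never exceeds half of the total weight, even when only a small subset is evaluated.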
This response is obtained for all 4 rotations of the image. The rotation with the maximum response is taken to be the correct rotation of the image and is given as the output to the user. Algorithm 2 enumerates the steps for orientation detection using scalable boosting.

Figure 8. A boosting algorithm with {0, 1} targets.

4. Computational Experiments

The proposed system has been tested on a database of over 7000 consumer images collected from the internet and personal image collections by an independent testing team. To calculate the error rates and test the system, we divided the database into ten disjoint sets with a uniform distribution of classes and performed tenfold cross validation. In tenfold cross validation, each set is used once for testing while training is performed on the remaining nine sets, so each set appears nine times in the training set and once in the testing set. We chose tenfold cross validation because it is the most widely used and reported scheme in statistical analyses of models [18]. The reported accuracies are averages over the ten runs. All 7000 images are ground truth edited, i.e., assigned to the appropriate categories by an independent testing team. For the orientation detection experiments, we rotated each of the images into four orientations, so our orientation detection database contains almost 28000 images.

For all the experiments we used an Intel Pentium IV 3.2 GHz processor with 1 GB of RAM. The implementation was written in C++ on Ubuntu release 8.10, Linux kernel 2.6.27-7 with GNOME 2.24.1. All the algorithms were finally ported to the target system running Fedora Linux on a 1 GHz Intel CPU with 256 MB of RAM.

4.1. Categorization Results

As described in Algorithm 1 and Section 3.1, for the categorization experiments we use color correlogram features with an SVM classifier. We trained SVMs (one-vs-all framework) with normalized color correlogram features extracted from images in the training dataset, randomly rotated to any of the four directions {0°, 90°, 180°, 270°}. The correlogram was normalized such that the features sum to 100. The parameters of the SVM were optimized by cross validation during training. Parameter optimization took around 24 hours, and SVM training with the optimized parameters took around 2 hours. Only the training set was used in the cross validation that optimized the SVM parameters; no testing sets were used for parameter optimization. To show that the proposed image categorization algorithm is independent of orientation, we present testing results for all four orientations, i.e., after training we rotated the testing images to all four orientations and checked the performance. Table 1 below gives the confusion matrices for the categorization task with all four orientations. In Table 1, predicted classes are arranged in a row.

The overall performance of the method is 92.80% for the images rotated by 0°, 92.40% for the images rotated by 90°, 93.24% for the images rotated by 180°, and 92.71% for the images rotated by 270°. The difference in accuracy across the four orientations is thus less than 1%. The overall performance of the proposed categorization system, irrespective of the orientation of images, is 92.78%.

The errors of the Water Bodies class are spread over images in which water bodies appear together with mountains or monuments. For example, see Figure 9, where both images have the ground truth Water Bodies but can equally be accepted as Mountains or Monuments/Building/Architecture.
This improves our contextual categorization, i.e., categorization by human perception.

The proposed categorization module takes almost 210 ms to assign one image to its class. This includes image downscaling, codebook formation, color correlogram feature extraction, and final categorization by the SVM.

Table 1. Confusion matrices for the categorization (rows: ground truth class; columns: predicted class; values in %).

| Rotation | Ground truth | Mountains | Monuments | Water Bodies | Portraits |
|---|---|---|---|---|---|
| 0° | Mountains | 93.648649 | 4.234234 | 1.936937 | 0.180180 |
| 0° | Monuments | 0.275482 | 99.559229 | 0.055096 | 0.110193 |
| 0° | Water Bodies | 4.714202 | 8.839128 | 85.9128 | 0.471420 |
| 0° | Portraits | 0.151515 | 7.575758 | 0.151515 | 92.121212 |
| 90° | Mountains | 90.045045 | 3.918919 | 5.765766 | 0.270270 |
| 90° | Monuments | 0.991736 | 98.181818 | 0.771350 | 0.055096 |
| 90° | Water Bodies | 7.012375 | 5.067767 | 87.684148 | 0.235710 |
| 90° | Portraits | 0.303030 | 5.984848 | 0 | 93.712121 |
| 180° | Mountains | 94.774775 | 3.693694 | 1.486486 | 0.045045 |
| 180° | Monuments | 0.385675 | 99.559229 | 0.055096 | 0 |
| 180° | Water Bodies | 5.715969 | 7.778433 | 85.798468 | 0.707130 |
| 180° | Portraits | 0.227273 | 6.742424 | 0.151515 | 92.878788 |
| 270° | Mountains | 94.909910 | 3.063063 | 1.936937 | 0.090090 |
| 270° | Monuments | 1.432507 | 98.016529 | 0.220386 | 0.330579 |
| 270° | Water Bodies | 11.019446 | 4.773129 | 83.794932 | 0.412493 |
| 270° | Portraits | 0.454545 | 5.151515 | 0.227273 | 94.166667 |

Figure 9. Ambiguous images; (a) an image which can be accepted as either Water Bodies or Mountains and (b) an image which can be accepted as either Monument/Building/Architecture or Water Bodies.

4.2. Orientation Detection Results

As described in Section 3.2, we use our optimized scalable boosting algorithm for orientation detection in each class. The parameters of scalable boosting were trained offline and ported to the system for system testing. The parameters obtained from scalable boosting are the features to be used and their weights. To obtain the best timing performance, only the features selected during the training phase are extracted at runtime.

Algorithm 2: Orientation detection of images based on content using the proposed optimized Scalable Boosting.
Input: Input image with unknown orientation, weak classifiers learnt offline using optimized scalable boosting
Output: The correct orientation of the image based on the content
1) Obtain the following images: R, G, B, R normalized, G normalized, B normalized, Y, I, Q, Y normalized, I normalized, Q normalized, horizontal edge image, and vertical edge image (using the Sobel operator).
2) Extract moments (mean and variance) on blocks and strips of image regions from these 15 images and use them as the features. Figure 6 illustrates the feature extraction process.
3) Compute the response as the weighted combination of the responses of the weak classifiers.
4) Repeat steps 1-3 for all 4 rotations of the input image to obtain 4 response values from the weighted combinations of weak classifier responses. Output the rotation which gives the maximum response as the correct orientation.

For the orientation detection experiments we rotated all the images to four orientations, giving a total of 40000 images (nearly 10000 images for each class). We adopted tenfold cross validation here as well, and the reported results are averages over the ten runs. The overall performance of the proposed method for orientation detection is 96.01% for the Mountains class, 92.07% for the Monuments/Building/Architecture class, 89.10% for the Water Bodies class, and 91.28% for the Portraits class. Table 2 below gives the confusion matrices for the orientation detection task with all four orientations. Here predicted classes are arranged in a row.

The proposed orientation detection module takes almost 63 ms to detect the orientation of one image.
This includes rotating the image to the four directions and obtaining the scalable boosting estimate of the orientation.

Table 2. Confusion matrices for the orientation detection (rows: ground truth orientation; columns: predicted orientation; values in %).

| Class | Ground truth | 0° | 90° | 180° | 270° |
|---|---|---|---|---|---|
| Mountains | 0° | 100 | 0 | 0 | 0 |
| Mountains | 90° | 1.486486 | 98.153153 | 0 | 0.360360 |
| Mountains | 180° | 8.873874 | 0.450450 | 90 | 0.675676 |
| Mountains | 270° | 2.837838 | 1.261261 | 0 | 95.900901 |
| Monuments | 0° | 100 | 0 | 0 | 0 |
| Monuments | 90° | 7.382920 | 91.129477 | 0.275482 | 1.212121 |
| Monuments | 180° | 9.201102 | 1.212121 | 88.209366 | 1.377410 |
| Monuments | 270° | 9.035813 | 1.763085 | 0.220386 | 88.980716 |
| Water Bodies | 0° | 99.941072 | 0 | 0 | 0.058928 |
| Water Bodies | 90° | 4.007071 | 91.868002 | 0 | 4.124926 |
| Water Bodies | 180° | 26.104891 | 0.117855 | 72.657631 | 1.119623 |
| Water Bodies | 270° | 5.303477 | 2.710666 | 0 | 91.985857 |
| Portraits | 0° | 91.590909 | 2.196970 | 4.166667 | 2.045455 |
| Portraits | 90° | 2.424242 | 90.833333 | 2.727273 | 4.015152 |
| Portraits | 180° | 3.484848 | 2.651515 | 90.984848 | 2.878788 |
| Portraits | 270° | 2.272727 | 3.030303 | 2.954545 | 91.742424 |

4.3. Coupled System (Categorization and Orientation)

The final testing has been performed with the categorization system coupled with the orientation detection system. During this testing, all the testing images were passed through the coupled system, where they were first categorized and then processed by the category specific scalable boosting classifier to detect and correct their orientations. The system accuracy was found to be 85.46%, with an average time of almost 300 ms for categorization and orientation detection of a single image. This time includes correcting the orientation of the image if it is found to be other than 0°.

We tag the images with their respective category by adding metadata in the EXIF (Exchangeable Image File) format of JPEG images. Images with incorrect orientations are rotated back to 0°.
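The per-class orientation accuracies quoted in Section 4.2 appear consistent with the unweighted mean of the diagonal of the corresponding block of Table 2. The sketch below checks this for the Mountains class; treating the reported figure as a diagonal mean is an assumption, since the text does not state how it was aggregated.

```python
# Mountains block of Table 2: rows are ground truth orientation (0, 90, 180, 270),
# columns are predicted orientation, values are percentages.
mountains = [
    [100.0, 0.0, 0.0, 0.0],
    [1.486486, 98.153153, 0.0, 0.360360],
    [8.873874, 0.450450, 90.0, 0.675676],
    [2.837838, 1.261261, 0.0, 95.900901],
]

def mean_diagonal(matrix):
    """Unweighted mean of the per-orientation recall values."""
    return sum(matrix[i][i] for i in range(len(matrix))) / len(matrix)

def largest_confusion(matrix):
    """Return (true index, predicted index, value) of the largest off-diagonal entry."""
    entries = [
        (matrix[i][j], i, j)
        for i in range(len(matrix))
        for j in range(len(matrix))
        if i != j
    ]
    value, i, j = max(entries)
    return i, j, value

accuracy = mean_diagonal(mountains)
print(round(accuracy, 2))
print(largest_confusion(mountains))
```

For this block the dominant error is 180° images predicted as 0°, which is also the largest confusion in the Water Bodies block of the table.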
5. Conclusions and Further Research

In this paper, we have proposed a digital content management solution that is computationally simple enough to run fast on embedded systems while achieving better accuracy for categorization and orientation detection on general consumer images. The present system achieves 93.24% accuracy for rotation-invariant categorization into 4 classes. Compared with the scene categorization method of [19], we achieve 93.24% on 4 categories, while they achieve 89% on 4 categories (forest, mountains, country, and coast). In addition to the higher accuracy, the categories chosen by us are more general from the consumer perspective, and we use simple low-level features to reduce time complexity. For example, the Country category considered by [19] is of no use in consumer scenarios, and they have not considered the Portrait class, which is the most common class of consumer images.

Compared to the best image orientation detection performance of 79.1% reported in [14], we achieve 96.01% for the Mountain class, 92.07% for the Monuments class, 89.10% for the Water Bodies class, and 91.28% for the Portrait class. Further, the database used in [11] was simple and taken from the Corel collection, containing scenic shots, tourist shots, and people shots. We are able to achieve better accuracies than [14] by first categorizing the images into more homogeneous categories and then training the algorithm independently on each category. The overall accuracy of the proposed system is 85.46%, which is higher than the one reported in [11].

The proposed system has been developed to run on an embedded platform with limited computing resources.
This restriction did not allow us to compute higher-complexity SIFT features [1], so we have investigated low-level features combined with strongly optimized machine learning algorithms to achieve the presented accuracies and timing performance. The DCM (Digital Content Management) applications developed in addition to the proposed core algorithms for categorization and orientation detection form an important part of the product.

In general, we are proposing a true DCM solution in which images are first auto-categorized and then reoriented, to reduce the burden of doing these operations manually or by inserting manual metadata in the EXIF files of JPEG-encoded images. Various other features, such as color-based clustering, time- and date-based clustering, similar scene detection, blur detection, and mapping image colors to music mood selection for album playback, together with the proposed categorization and orientation detection solutions, bring pattern recognition-based consumer electronic products to end users. To the best of our knowledge, the accuracies reported by us are the best known in the literature, and the proposed product is the first of its kind presenting a comprehensive DCM solution for consumer electronics.

REFERENCES

[1] D. G. Lowe, “Distinctive Image Features from Scale-Invariant Keypoints,” International Journal of Computer Vision, Vol. 60, No. 2, 2004, pp. 91-110. doi:10.1023/B:VISI.0000029664.99615.94
[2] J. Willamowski, D. Arregui, G. Csurka, C. R. Dance and L. Fan, “Categorizing Nine Visual Classes Using Local Appearance Descriptors,” International Conference on Pattern Recognition, Cambridge, 2004.
[3] L. Fei-Fei and P. Perona, “A Bayesian Hierarchical Model for Learning Natural Scene Categories,” IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Diego, 20-25 June 2005, pp. 524-531.
[4] S. Lazebnik, C. Schmid and J. Ponce, “Beyond Bags of Features: Spatial Pyramid Matching for Recognizing Natural Scene Categories,” Proceedings of Computer Vision and Pattern Recognition, IEEE, New York, 2006, pp. 2169-2178.
[5] A. Vailaya, H.-J. Zhang, C. Yang, F.-I. Liu and A. K. Jain, “Automatic Image Orientation Detection,” IEEE Transactions on Image Processing, Vol. 11, No. 7, 2002, pp. 746-755. doi:10.1109/TIP.2002.801590
[6] Y. Wang and H. Zhang, “Content-Based Image Orientation Detection with Support Vector Machines,” Proceedings of IEEE Workshop on Content-Based Access of Image and Video Libraries, IEEE, New York, 2001, pp. 17-23. doi:10.1109/IVL.2001.990851
[7] Y. M. Wang and H.-J. Zhang, “Detecting Image Orientation Based on Low-Level Visual Content,” Computer Vision and Image Understanding, Vol. 93, No. 3, 2004, pp. 328-346. doi:10.1016/j.cviu.2003.10.006
[8] E. Tolstaya, “Content-Based Image Orientation Recognition,” GraphiCon, Moscow, 2007.
[9] S. Lyu, “Automatic Image Orientation Detection with Natural Image Statistics,” Proceedings of 13th International Multimedia Conference, Singapore, 6-11 November 2005, pp. 491-494. doi:10.1145/1101149.1101259
[10] S. Baluja, “Automated Image-Orientation Detection: A Scalable Boosting Approach,” Pattern Analysis and Applications, Vol. 10, No. 3, 2007, pp. 247-263. doi:10.1007/s10044-006-0059-1
[11] S. Baluja and H. A. Rowley, “Large Scale Performance Measurement of Content-Based Automated Image-Orientation Detection,” Proceedings of International Conference on Image Processing, Vol. 2, 2005, pp. 514-517.
[12] H. Le Borgne and N. E. O’Connor, “Pre-Classification for Automatic Image Orientation,” Proceedings of IEEE International Conference on Acoustics, Speech, and Signal Processing, Toulouse, 14-19 May 2006, pp. 125-128. doi:10.1109/ICASSP.2006.1660295
[13] J. Huang, S. R. Kumar, M. Mitra, W.-J. Zhu and R. Zabih, “Image Indexing Using Color Correlograms,” IEEE Computer Vision and Pattern Recognition, San Juan, 1997, pp. 762-768.
[14] B. Schölkopf and A. J. Smola, “Learning with Kernels: Support Vector Machines, Regularization, Optimization, and Beyond,” The MIT Press, Cambridge, 2001.
[15] C.-C. Chang and C.-J. Lin, “LIBSVM: A Library for Support Vector Machines,” 2001. http://www.csie.ntu.edu.tw/~cjlin/libsvm
[16] F. Crow, “Summed Area Tables for Texture Mapping,” Proceedings of Special Interest Group in Graphics, ACM, Minneapolis, 1984, pp. 207-212.
[17] Y. Freund and R. E. Schapire, “A Short Introduction to Boosting,” Journal of Japanese Society of Artificial Intelligence, Vol. 14, No. 5, 1999, pp. 771-780.
[18] R. Kohavi, “A Study of Cross-Validation and Bootstrap for Accuracy Estimation and Model Selection,” Proceedings of 15th International Joint Conference on Artificial Intelligence, Nagoya, 23-29 August 1997, pp. 1137-1143.
[19] A. Oliva and A. Torralba, “Modeling the Shape of the Scene: A Holistic Representation of the Spatial Envelope,” International Journal of Computer Vision, Vol. 42, No. 3, 2001, pp. 145-175. doi:10.1023/A:1011139631724