Paper Menu >>

Journal Menu >>

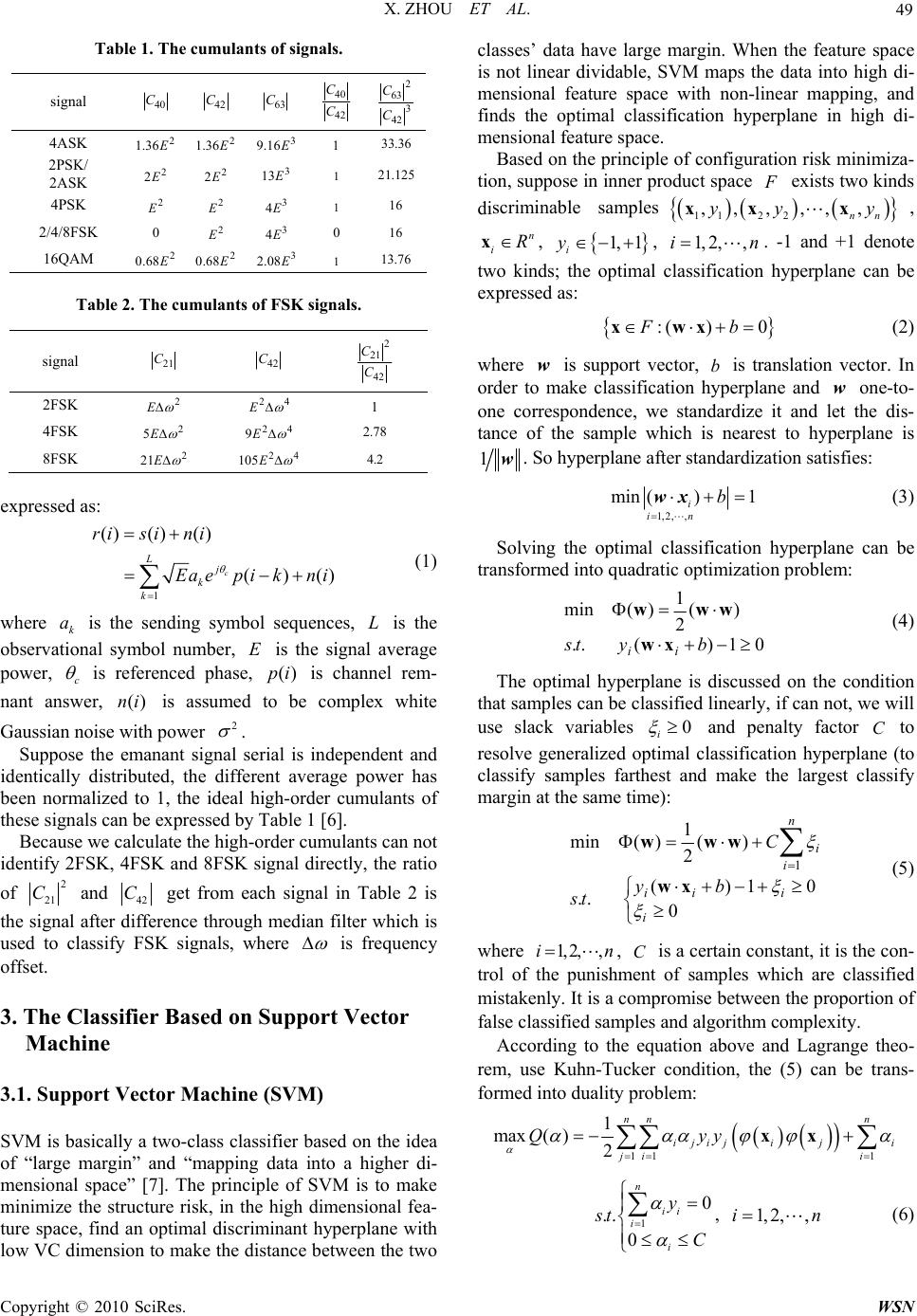

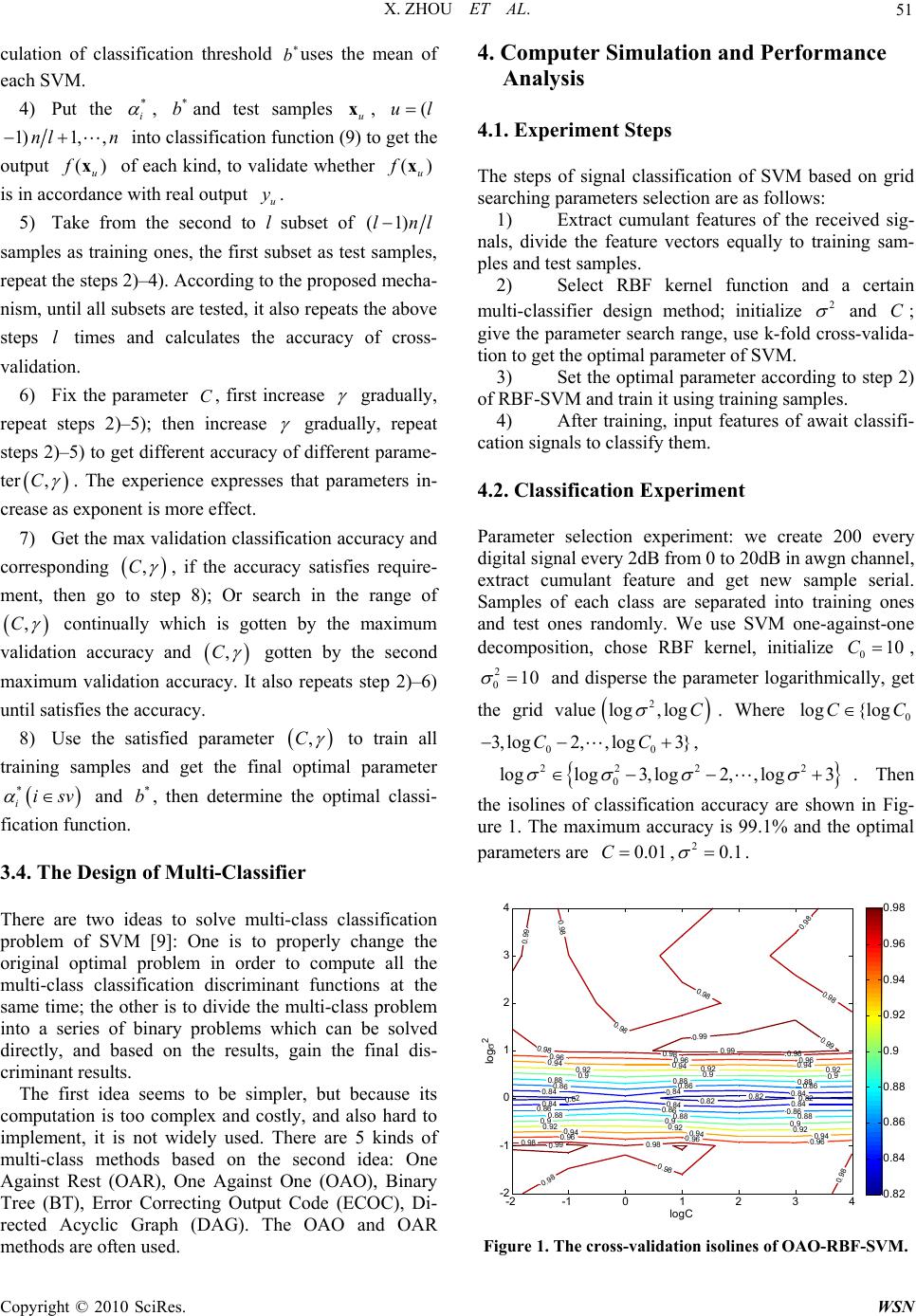

Wireless Sensor Network, 2010, 2, 48-52 doi:10.4236/wsn.2010.21007 anuary 2010 (http://www.SciRP.org/journal/wsn/). Copyright © 2010 SciRes. WSN Published Online J Signal Classification Method Based on Support Vector Machine and High-Order Cumulants Xin ZHOU, Ying WU, Bin YANG Zhengzhou Informa tion Science and Technology Institute, Zhengzhou, China Email: zx007_0_0@126.com Received September 15, 2009; revised November 13, 2009; accepted November 18, 2009 Abstract In this paper, a classification method based on Support Vector Machine (SVM) is given in the digital modu- lation signal classification. The second, fourth and sixth order cumulants of the received signals are used as classification vectors firstly, then the kernel thought is used to map the feature vector to the high dimensional feature space and the optimum separating hyperplane is constructed in space to realize signal recognition. In order to build an effective and robust SVM classifier, the radial basis kernel function is selected, one against one or one against rest of multi-class classifier is designed, and method of parameter selection using cross- validation grid is adopted. Through the experiments it can be concluded that the classifier based on SVM has high performance and is more robust. Keywords: High-Order Cumulants, Support Vector Machine, Kernel Function, Signal Classification 1. Introduction Automatic modulation classification (MC) is an interme- diate step between signal detection and demodulation, and plays a key role in various civilian and military ap- plications. It is also one of many key technologies in software radio and cognitive radio. The recognition methods in early years are mainly about signal waveform, frequency, transient amplitude and transient phase [1]. The performances of these methods descend quickly when they face to low SNR. Statistical decision and pattern recognition based on statistics are two main methods in approaching MC problem in recent years [2]. The first method is based on hypothesis testing; problem it has to face is that needs to give proper hypothesis and strict data analysis to get the correct decision threshold. The Reference [3] uses neural net to solve MC problem and gets better effect. But because the sample length is limit, the neural net is easy to bring the phenomenon of over- learning and local minimal value. There are some re- searchers use support vector machine (SVM) to solve MC problem, and get higher classification accuracy [4,5]. But in the two references they neither gave how to select the optimal parameter of SVM classifier and how to construct multi-class SVM. In this paper, we introduce the support vector machine firstly, then re- search the selection methods of kernel function and its parameter, and study on the multi-classes classifica- tion methods, and then apply them to digital signal classification. We also compare the SVM with other common classifiers. The paper is organized as follows: In Section 2, the robust feature extraction based on high-order cumulants is proposed. In Section 3, the multi-classifier based on SVM is designed. The principle of SVM is introduced firstly, then the kernel and parameter selection are given, the method of decomposing multi-class classifier is used. In Section 4, we input the signal feature to multi-class SVM classifier to do experiment. In Section 5, the paper is concluded. 2. Feature Extraction Based on High-Order Cumulants High-order cumulant is a tool of mathematics which de- scribes the high order statistical characteristic of random process. It not only can remove the influence of Gauss noise, but also is robust to the rotation and excursion of the constellation diagram. We suppose the classifier works in the interrelated and synchronization environment. The received signal has carried out carrier frequency synchronization and timing synchronization, but the unknown referenced phas ed offset exists. The output signal of receiver can be  X. ZHOU ET AL. 49 Table 1. The cumulants of signals. signal 40 C 42 C63 C 42 40 C C 3 42 2 63 C C 4ASK 2 36.1 E 2 36.1 E3 16.9 E 1 36.33 2PSK/ 2ASK 2 2E 2 2E3 13E 1 125.21 4PSK 2 E 2 E 3 4 E 1 16 2/4/8FSK 0 2 E 3 4 E 0 16 16QAM 2 68.0 E 2 68.0 E3 08.2 E 1 76.13 Table 2. The cumulants of FSK signals. signal 21 C 42 C 42 2 21 C C 2FSK 2 E 42 E 1 4FSK 2 5 E 42 9 E 78.2 8FSK 2 21 E 42 105 E 2.4 expressed as: 1 ()() () ()( c Lj k k risi ni Ea ep ikn i ) (1) where is the sending symbol sequences, is the observational symbol number, is the signal average power, k a c L E is referenced phase, is channel rem- nant answer, is assumed to be complex white Gaussian noise with power ()pi ()ni 2 . Suppose the emanant signal serial is independent and identically distributed, the different average power has been normalized to 1, the ideal high-order cumulants of these signals can be expressed by Table 1 [6]. Because we calculate the high-order cumulants can not identify 2FSK, 4FSK and 8FSK signal directly, the ratio of 2 21 C and 42 C get from each signal in Table 2 is the signal after difference through median filter which is used to classify FSK signals, where is frequency offset. 3. The Classifier Based on Support Vector Machine 3.1. Support Vector Machine (SVM) SVM is basically a two-class classifier based on the idea of “large margin” and “mapping data into a higher di- mensional space” [7]. The principle of SVM is to make minimize the structure risk, in the high dimensional fea- ture space, find an optimal discriminant hyperplane with low VC dimension to make the distance between the two classes’ data have large margin. When the feature space is not linear dividable, SVM maps the data into high di- mensional feature space with non-linear mapping, and finds the optimal classification hyperplane in high di- mensiona l f ea ture space. Based on the principle of conf iguration risk minimiza- tion, suppose in inn er product space exists two kinds discriminable samples F 11 2 ,,,,,, nn yxx x 2 yy , , n iRx 1, 1 i y , 1, 2,,in . -1 and +1 denote two kinds; the optimal classification hyperplane can be expressed as: :( )0Fb xwx (2) where is support vector, is translation vector. In order to make classification hyperplane and one-to- one correspondence, we standardize it and let the dis- tance of the sample which is nearest to hyperplane is wbw 1w. So hyperplane after standardization satisfies: 1,2, , min ()1 i in b wx (3) Solving the optimal classification hyperplane can be transformed into quadratic optimization problem: 01)(.. )( 2 1 )(min byts ii xw www (4) The optimal hyperplane is discussed on the condition that samples can be classified linearly, if can not, we will use slack variables 0 i and penalty factor to resolve generalized optimal classification hyperplane (to classify samples farthest and make the largest classify margin at the same time): C 001)( .. )( 2 1 )(min 1 i iii n i i by ts C xw www (5) where 1, 2,,in , is a certain constant, it is the con- trol of the punishment of samples which are classified mistakenly. It is a compromise between the propor tion of false classified samples and algorithm complexity. C According to the equation above and Lagrange theo- rem, use Kuhn-Tucker condition, the (5) can be trans- formed into duality problem: 11 1 1 max( )2 nn n ijijiji ji i Qyy xx 1 0 .. 0 n ii i i y st C , (6) 1, 2, ,in C opyright © 2010 SciRes. WSN  X. ZHOU ET AL. 50 Use kernel function (,)() () ijij k xxxx , the quadratic problem can be represented by [8]: 11 1 1 max( )(,) 2 nn n ijiji ji ji i Qyyk xx 1 0 .. 0 n ii i i y st C , (7) 1, 2,,in ni ,,2,1 The classification threshold can be gotten by any support vector use (8): b 01)( 1by y ii n i iii xw xw , (8) The optimal classification discriminant function ex- pressed by kernel function is: bkybf SV iii i x xxxwx ),(sgnsgn)( (9) where . SV iii i y x xw )( According to optimal problem (7), the complexity of SVM has nothing to do with dimension of feature, but is restricted by the number of samples. SVM needs to compute the kernel functions between every two training samples, to generate a kernel function matrix which has elements, and n is the number of training sam- ples. nn* 3.2. The Selection of Kernel Function In fact, changing kernel parameter is to change mapping function implicitly, and change the complexity of sam- ples’ distribution in feature space. So the selection of kernel function and parameters are very important. There are 3 kin ds o f kernels that are usua lly used [ 8]: 1) Dimensional polynomial kernel of degree , the expression is: d d pk ]),[(),( yxyx (10) where and are custom parameters; If p d0p and , it is called linear kernel function. The operation speed of kernel function is fast. 1d 2) Radial basis function kernel, the expression is: 2 2 exp),( yx yx k (11) where , it controls the width of kernel function and needs to be confirmed. 20 3) Neural Network kernel fun ction, the expression is: vk ),(tanh),( yxyx (12) where and are parameters. Only some values sat- isfy Mercer condition can be used . v Because the feature space of radial basis function ker- nel is limitless, the limit samples in this feature space must be linearly discriminable, so it is most commonly used in classification. In this paper, we also select radial basis fun c tion kerne l. 3.3. The Parameter Selection of SVM In SVM classifier, the parameter selection of kernel function and penalty factor is very important. The pen- alty factor is the optimal compromise with the dis- tance between hyperplane and the nearest training point is farthest and the classification error is least. The pa- rameters of kernel function determine the data mapping into higher dimensional space. C There are many parameter selection methods, such as grid searching, GD algorith m, gradient descen t algorithm, genetic algorithm, simulated annealing algorithm and so on. The parameter evaluation criterion has k-fold cross- validation, leave-one-out (LOO), generalized approxi- mate cross-validation (GACV), approx imate span bound, margin-radius bound and so on. In this paper, we use k-fold cross-validation to select parameter , C (2 1 )of RBF-SVM. Suppose we have known samples, they construct sample set n , ii y x, 1, 2,, in ,. In order to differentiate kernel function, we use express the k value of the k-fold cross-validation. The steps of k-fold cross-validation are as followed: 1, 1 i y l 1) Divide sample set contains n samples to subset equally, each subset contains l nl samples. 2) Put from the first to (l-1) subset of (1)lnl samples as training ones, give a smaller value of pa- rameter (C, ), put in (7) and get the solution of La- grange operator * i ,the samples corresponding to * i which are more than zero are support vectors. 3) Put each * i into: lnl ji jijiji Tyy )1( 1, 2 **** 2exp xxwww svk lnl i jiiik yy sv b)1( 1 2 ** exp 1xx where s v is the number of support vector, and the cal- Copyright © 2010 SciRes. WSN  X. ZHOU ET AL. 51 culation of classification threshold * buses the mean of each SVM. 4) Put the * i , * band test samples u x, (ul 1)1, ,nl n into classification function (9) to get the output () u fx of each kind, to validate whether () u is in accordance with real output u y. fx 5) Take from the second to l subset of (1)lnl samples as training ones, the first subset as test samples, repeat the steps 2)–4). According to the proposed mecha- nism, until all subsets are tested, it also repeats the above steps l times and calculates the accuracy of cross- validation. 6) Fix the parameter C, first increase gradually, repeat steps 2)–5); then increase gradually, repeat steps 2)–5) to get different accuracy of different parame- ter ,C . The experience expresses that parameters in- crease as exponent is more effect. 7) Get the max validation classification accuracy and corresponding ,C , if the accuracy satisfies require- ment, then go to step 8); Or search in the range of ,C continually which is gotten by the maximum validation accuracy and ,C gotten by the second maximum validation accuracy. It also repeats step 2)–6) until satisfies the accuracy. 8) Use the satisfied parameter ,C to train all training samples and get the final optimal parameter * iisv and * b, then determine the optimal classi- fication function. 3.4. The Design of Multi-Classifier There are two ideas to solve multi-class classification problem of SVM [9]: One is to properly change the original optimal problem in order to compute all the multi-class classification discriminant functions at the same time; the other is to divide the multi-class problem into a series of binary problems which can be solved directly, and based on the results, gain the final dis- criminant results. The first idea seems to be simpler, but because its computation is too complex and costly, and also hard to implement, it is not widely used. There are 5 kinds of multi-class methods based on the second idea: One Against Rest (OAR), One Against One (OAO), Binary Tree (BT), Error Correcting Output Code (ECOC), Di- rected Acyclic Graph (DAG). The OAO and OAR methods are often used. 4. Computer Simulation and Performance Analysis 4.1. Experiment Steps The steps of signal classification of SVM based on grid searching parameters selection are as follows: 1) Extract cumulant features of the received sig- nals, divide the feature vectors equally to training sam- ples and test samples. 2) Select RBF kernel function and a certain multi-classifier design method; initialize 2 and C; give the parameter search range, use k-fold cross-valida- tion to get the optimal parameter of SVM. 3) Set the optimal parameter according to step 2) of RBF-SVM and train it using training samples. 4) After training, input features of await classifi- cation signals to classify them. 4.2. Classification Experiment Parameter selection experiment: we create 200 every digital signal every 2dB from 0 to 20dB in awgn channel, extract cumulant feature and get new sample serial. Samples of each class are separated into training ones and test ones randomly. We use SVM one-against-one decomposition, chose RBF kernel, initialize 010C , 2 010 and disperse the parameter logarithmically, get the grid value 2 log ,logC . Where 0 {lo gCClog 00 ,log3}CC3,log 2, , 22 22 0 loglog3,log2, ,log3 0.01 C . Then the isolines of classification accuracy are shown in Fig- ure 1. The maximum accuracy is 99.1% and the optimal parameters are ,. 20.1 0.82 0.82 0.82 0.82 0.84 0.84 0.84 0.84 0.84 0.84 0.86 0.86 0.86 0.86 0.86 0.86 0.88 0.880.88 0.88 0.88 0.88 0.9 0.9 0.9 0.9 0.9 0.9 0.92 0.92 0.92 0.92 0.92 0.92 0.94 0.94 0.94 0.94 0.94 0.94 0.96 0.96 0.96 0.96 0.96 0.96 0.98 0.98 0.98 0.98 0.98 0.98 0.98 0.98 0.98 0.98 0.98 0.98 0.98 0.99 0.99 0.99 0.99 0.99 logC log 2 -2 -10 1 2 34 -2 -1 0 1 2 3 4 0.82 0.84 0.86 0.88 0.9 0.92 0.94 0.96 0.98 Figure 1. The cross-validation isolines of OAO-RBF-SVM. C opyright © 2010 SciRes. WSN  X. ZHOU ET AL. Copyright © 2010 SciRes. WSN 52 Table 3. The simulation result at 5dB. output(classification accuracy) input 4ASK 2PSK/ 2ASK 4PSK 2FSK 4FSK 8FSK16QA M 4ASK 92.2 7.8 0 0 0 0 0 2PSK/ 2ASK 100 0 0 0 0 0 0 4PSK 0 0.6 99.4 0 0 0 0 2FSK 0 0 0 100 0 0 0 4FSK 0 0 0 2.6 97.4 0 0 8FSK 0 0 0 0.4 2.6 97.00 16QAM 0.8 4.2 5.8 0 0 0 89.2 Table 4. Comparison of different classification methods. Classifier Classification accuracy(%) Nearest distance classifier 80.2 Neural network 85.6 OAR-SVM 2=0.01,C=1 90.4 OAO-SVM 2=0.01,C=0.1 92.2 Test 1: In this experiment, we get the classification accuracy of different signals in awgn channel. The sam- ple frequency is 40kHz and carrier frequency is 8kHz. The length of signal is 1200, the symbol rate is 2000Bd, and we do 500 Monte Carlo experiments at 5dB. The OAO-SVM is used to get the classification accuracy in Table 3. From Table 3 we can see that SVM classifier can get higher classification accuracy at 5dB. The QAM classi- fication accuracy is lowest and is 89.2%. This is because the feature extraction of QAM is close to the feature of 2PSK and 4PSK, so it is easy to judge to the two signals mistakenly. Test 2: In this experiment, we compare SVM, neural network and the nearest distance discrimination classifier. The simulation assumption is the same as test1. We cal- culate the classification accuracy of 4ASK at 5 dB. The SVM uses RBF kernel and OAR and OAO classification algorithm, and then we do 500 Monte Carlo experiments. The classification accuracy is shown in Table 4. From Test 2 we can see that the classification accuracy of the nearest distance classifier is lowest, and then is neural network and SVM is highest. 5. Conclusions In this paper, we use the kernel thought of statistical learning theory for reference and use decomposition me- thods of multi-class classifier and method of parameter selection using cross-validation grid search to build ef- fective and robust SVM classifiers. We also use fourth and sixth cumulants of the received signals as the classi- fication vectors, to realize digital signals classification. From the computer simulation and analysis, we can get the following conclusion: 1) The feature vector of cumulants can remove the in- fluence of Gauss noise. It is robust and has high per- formance. 2) Classification method based on kernel function is less affected by dimension of input data. The classifica- tion capability of kernel classifier is affected by the ker- nel function and parameters, and a fine classification precision can only be obtained when kernel parameters are in special range. The classification stability can be effectively improved by parameter selection via cross- validate grid search method. If the proper parameters are chosen, the classification accuracy of SVM is high. 6. References [1] K. Nandi and E. E. Azzouz, “Automatic modulation rec- ognition [J],” Signal Processing, Vol. 46, No. 2, pp. 211– 222, 1995. [2] O. A. Dobre, A. Abdi, Y. Bar-Ness, et al., “Survey of automatic modulation classification techniques: Classical approaches and new trends [J],” IEE Communication, , Vol. 1, No. 2, pp. 137–156, 2007. [3] W. C. Han, H. Han, L. N. Wu, et al., “A 1-dimension structure adaptive self-organizing neural network for QAM signal classification [C],” Third International Con- ference on Natural Computation (ICNC 2007), HaiKou, August 24–27, 2007. [4] X. Z. Feng, J. Yang, F. L. Luo, J. Y. Chen, and X. P. Zhong, “Automatic modulation recognition by support vector machines using wavelet kernel [J],” Journal of Physics, International Symposium on Instrumentation Science and Technology, pp. 1264–1267, 2006. [5] H. Mustafa and M. Doroslovacki, “Digital modulation recognition using support vector machine classifier [C],” Proceedings of The Thirty-Eighth Asilomar Conference on Signals, Systems & Computers, November 2004. [6] O. A. Dobre, Y. B. Ness, and S. Wei, “Higher-order cyclic cumulants for high order modulation classification [C],” IEEE MILCOM, pp. 112–115, 2003. [7] Z. L. Wu, X. X. Wang, Z. Z. Gao, and G. H. Ren, “Auto- matic digital modulation recognition based on support vector machine [C],” IEEE Conference on Neural Net- works and Brain, pp. 1025–1028, October 2005. [8] V. Vapnik, “Statistical learning theory [M],” Wiley, 1998. [9] B. Gou and X. W. Huang, “SVM multi-class classifica- tion [J],” Journal of Southern Yangtze University, Vol. 21, pp. 334–339, September 2006. |