A Computational Comparison of Basis Updating Schemes for the Simplex Algorithm on a CPU-GPU System ()

1. Introduction

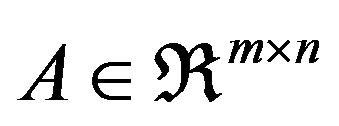

Linear Programming (LP) is the process of minimizing or maximizing a linear objective function to a number of linear equality and inequality constraints. Simplex algorithm is the most widely used method for solving Linear Programming problems (LPs). Consider the following linear programming problem in the standard form shown in Equation (1):

(1)

(1)

where ,

,  ,

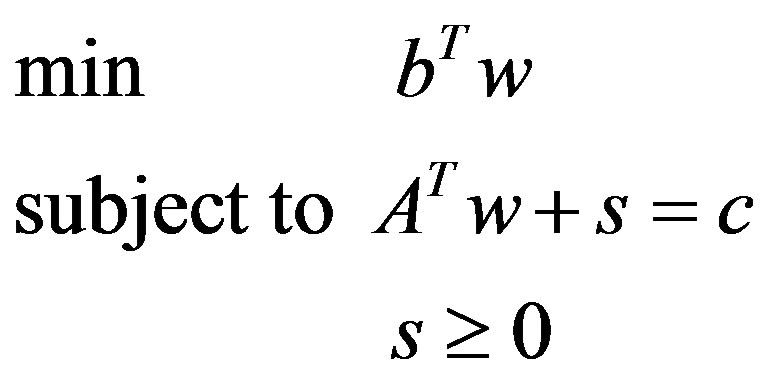

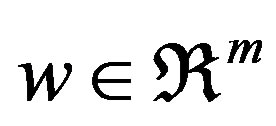

,  , and T denotes transposition. We assume that A has full rank, rank(A) = m, m < n. Consequently, the linear system Ax = b is consistent. The simplex algorithm searches for an optimal solution by moving from one feasible solution to another, along the edges of the feasible set. The dual problem associated with the linear problem in Equation (1) is shown in Equation (2):

, and T denotes transposition. We assume that A has full rank, rank(A) = m, m < n. Consequently, the linear system Ax = b is consistent. The simplex algorithm searches for an optimal solution by moving from one feasible solution to another, along the edges of the feasible set. The dual problem associated with the linear problem in Equation (1) is shown in Equation (2):

(2)

(2)

where  and

and .

.

The past twenty years have been a time of remarkable developments in optimization solvers. Real life LPs tend to be large in size. A growing number of problems demand parallel computing capabilities. The explosion in computational power (CPUs and GPUs) has made it possible to solve large and difficult LPs in a short amount of time. As in the solution of any large scale mathematical system, the computational time for large LPs is a major concern. The basis inverse dictates the total computational effort of an iteration of simplex type algorithms. This inverse does not have to be computed from scratch at any iteration, but can be updated through a number of updating schemes. All efficient versions of the simplex algorithm work with some factorization of the basis matrix B or its inverse B−1.

Dantzig and Orchard-Hays [1] have proposed the Product Form of the Inverse (PFI), which maintains the basis inverse using a set of eta vectors. Benhamadou [2] proposed a Modification of the Product Form of the Inverse (MPFI). The key idea is that the current basis inverse  can be computed from the previous inverse

can be computed from the previous inverse  using a simple outer product of two vectors and one matrix addition.

using a simple outer product of two vectors and one matrix addition.

LU decomposition produces generally sparser factorizations than PFI [3]. The LU factorization for the basis inverse has been proposed by Markowitz [4]. Markowitz used LU decomposition to fully invert a matrix, but used the PFI scheme to update the basis inverse during simplex iterations. Bartels and Golub [5] have later proposed a scheme to update a sparse factorization, which was more stable than using PFI. Their computational experiments, however, proved that it was more computationally expensive. Forrest and Tomlin [6] created a variant of the Bartels-Golub method by sacrificing some stability characteristics causing the algorithm to have a smaller growth rate in the number of non-zero elements relative to the PFI scheme. Reid [7] proposed two variants of the Bartels-Golub updating scheme that aim to balance the sparsity and the numerical stability of the factorization. A variant of the Forrest-Tomlin update was proposed by Suhl and Suhl [8]. Other important updating techniques can be found in Saunders [9] and Goldfarb [10]. A full description of most of these updating methods can be found in Nazareth [11] and Chvátal [12].

There have been many reviews and variants of these methods individually, but only a few comparisons between them that are either obsolete or don’t compare all these updating schemes. McCoy and Tomlin [13] report the results of some experiments on measuring the accuracy of the PFI scheme, Bartels-Golub method and ForrestTomlin scheme. Lim et al. [14] provide a comparative study between Bartels-Golub method, Forrest-Tomlin method and Reid method. Badr et al. [15] perform a computational evaluation of PFI and MPFI updating schemes. Ploskas et al. [16] compare PFI and MPFI updating schemes both on their serial and their parallel implementation.

Originally, GPUs used to accelerate graphics rendering. GPUs have gained recently a lot of popularity and High Performance Computing applications have already started to use them. The computational capabilities of GPUs exceed the one of CPUs. GPU is utilized for data parallel and computationally intensive portions of an algorithm. NVIDIA introduced Compute Unified Device Architecture (CUDA) in late 2006. CUDA enables users to execute codes on their GPUs and it is based on a SIMT programming model. Any performance improvements in the parallelization of the revised simplex algorithm would be of great interest. Using GPU computing for solving largescale LPs is a great challenge due to the capabilities of GPU architectures.

Some related works have been proposed on the GPU parallelization for LPs. O’Leary and Jung [17] proposed a CPU-GPU implementation of the Interior Point Method for dense LPs. Their computational results on Netlib Set [18] showed that some speedup can be gained for large dense problems. Spampinato and Elster [19] presented a GPU-based implementation of the Revised Simplex Algorithm on GPU with NVIDIA CUBLAS [20] and NVIDIA LAPACK libraries [21]. Their implementation showed a maximum speedup of 2.5 on randomly generated LPs of at most 2000 variables and 2000 constraints. Bieling et al. [22] also proposed a parallel implementation of the Revised Simplex Algorithm on GPU. They compared their GPU-based implementation with GLPK solver and found a maximum speedup of 18 in single precision. Lalami et al. [23] proposed a parallel implementation of the standard Simplex on a CPU-GPU systems. Their computational results on randomly generated dense problems of at most 4000 variables and 4000 constraints showed a maximum speedup of 12.5. Meyer et al. [24] proposed a mono and a multi-GPU implementation of the standard Simplex algorithm and compared their implementation with the CLP solver. Their implementation outperformed CLP solver on large sparse LPs. Li et al. [25] presented a GPU-based parallel algorithm, based on Gaussian elimination, for large scale LPs that outperform the CPU-based algorithm.

This paper presents a computational study in which the basis inverse is computed with five different updating schemes: 1) Gaussian elimination; 2) the built-in function inv of MATLAB; 3) LU decomposition; 4) product form of the inverse; and 5) a modification of the product form of the inverse; and incorporates them with the revised simplex algorithm. Then, we propose a parallel implementation of PFI and MPFI schemes, which were the fastest among the five updating methods, on a CPU-GPU System, which is based on MATLAB and CUDA.

The structure of the paper is as follows. In Section 2, a brief description of the revised simplex algorithm is presented. In Section 3, five methods that have been widely used for basis inversion are presented and analyzed. Section 4 presents the computational comparison of the serial implementations of the updating schemes. Computational tests were carried out on randomly generated LPs of at most 5000 variables and 5000 constraints. Section 5 presents the GPU-based implementations of two updating schemes. In Section 6, a computational study of the GPU-based implementations is performed. Finally, the conclusions of this paper are outlined in Section 7.

2. Revised Simplex Method

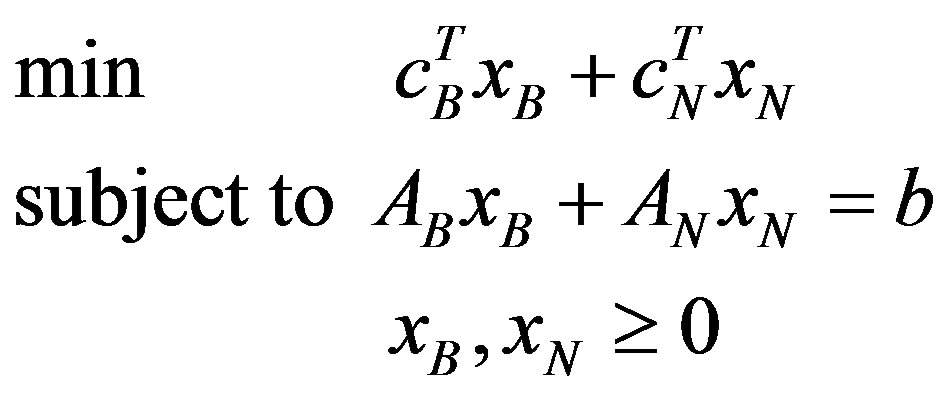

Using a partition (B, N) Equation (1) can be written as shown in Equation (3):

(3)

(3)

In the above problem, AB is an mxm non-singular submatrix of A, called basic matrix or basis. The columns of A belonging to subset B are called basic and those belonging to N are called non-basic. The solution of the linear problem  is called a basic solution. A solution

is called a basic solution. A solution  is feasible iff

is feasible iff ; otherwise the solution is infeasible. The solution of the linear problem in Equation (2) is computed by the relation

; otherwise the solution is infeasible. The solution of the linear problem in Equation (2) is computed by the relation , where

, where  are the simplex multipliers and s are the dual slack variables. The basis AB is dual feasible iff

are the simplex multipliers and s are the dual slack variables. The basis AB is dual feasible iff .

.

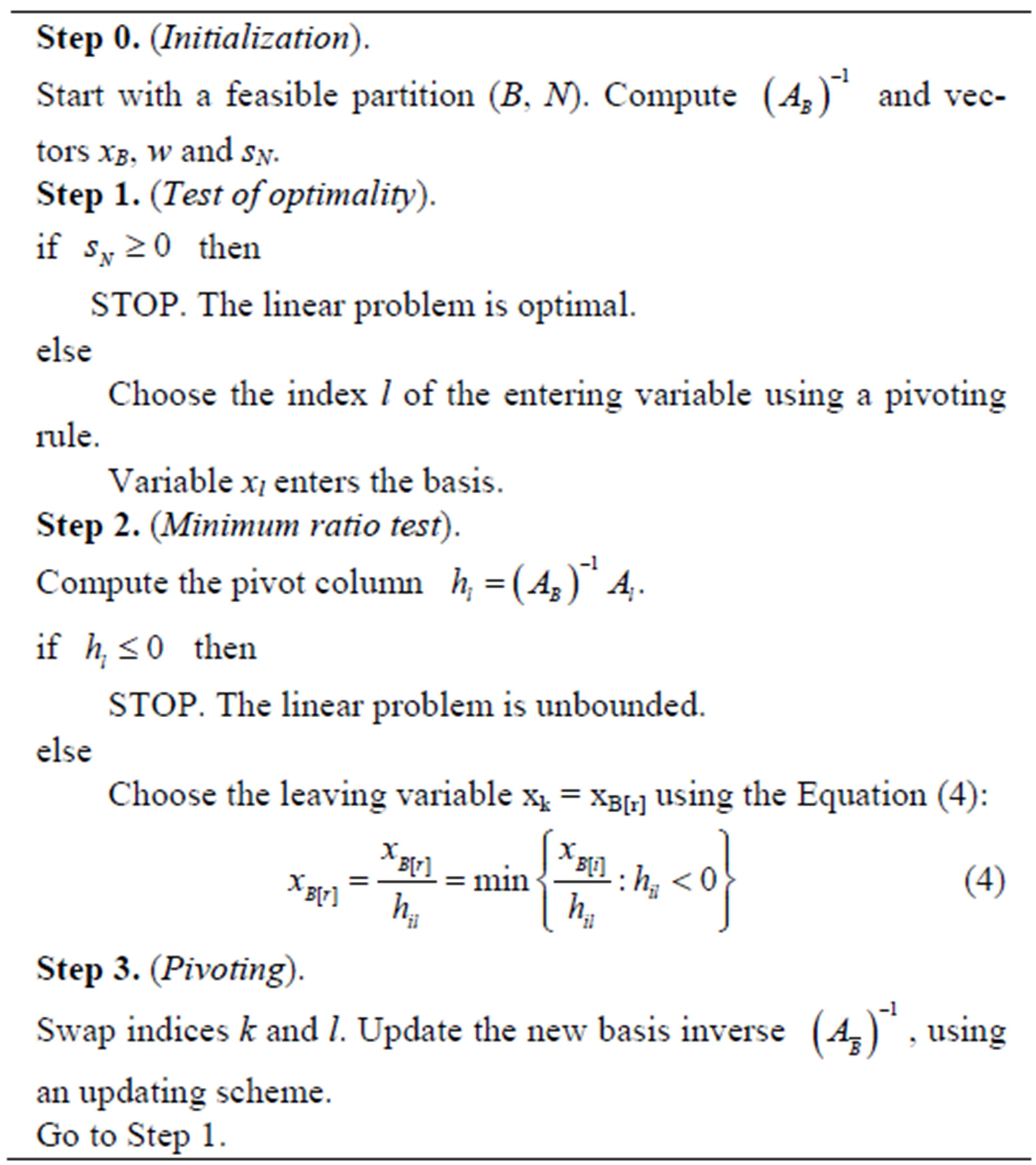

In each iteration, simplex algorithm interchanges a column of matrix AB with a column of matrix AN and constructs a new basis . Any iteration of simplex type algorithms is relatively expensive. The total work of an iteration of simplex type algorithms is dictated by the computation of the basis inverse. This inverse, however, does not have to be computed from scratch during each iteration of the simplex algorithm. Simplex type algorithms maintain a factorization of basis and update this factorization in each iteration. There are several schemes for updating basis inverse. In Section 3, we present eight well-known methods for the basis inverse. A formal description of the revised simplex algorithm [26] is presented in Table 1.

. Any iteration of simplex type algorithms is relatively expensive. The total work of an iteration of simplex type algorithms is dictated by the computation of the basis inverse. This inverse, however, does not have to be computed from scratch during each iteration of the simplex algorithm. Simplex type algorithms maintain a factorization of basis and update this factorization in each iteration. There are several schemes for updating basis inverse. In Section 3, we present eight well-known methods for the basis inverse. A formal description of the revised simplex algorithm [26] is presented in Table 1.

3. Basis Inversion Updating Schemes

3.1. Gaussian Elimination

Gaussian elimination is a method for solving systems of linear equations, which can be used to compute the inverse of a matrix. Gaussian elimination performs the

Table 1. Revised simplex algorithm.

following two steps: 1) Forward Elimination: reduces the given matrix to a triangular or echelon form and 2) Back Substitution: finds the solution of the given system. Gaussian elimination with partial pivoting requires O(n3) time complexity.

Gaussian elimination has been implemented on MATLAB using the mldivide operator. In order to find the new basis inverse using Gaussian elimination, one can use the Equation (5):

(5)

(5)

3.2. Built-In Function Inv of MATLAB

The basis inverse can be computed using the built-in function of MATLAB called inv, which uses LAPACK routines to compute the basis inverse. Due to the fact that this function is already compiled and optimized for MATLAB, its execution time is smaller compared with the other relevant methods that compute the explicit basis inverse; time-complexity, though, remains O(n3).

3.3. LU Decomposition

LU decomposition method factorizes a matrix as the product of an upper U and a lower L triangular factors, which can be used to compute the inverse of a matrix. In order to compute the U and L factors, the built-in function of MATLAB called lu has been used. LU decomposition can be computed in time O(n3).

3.4. MPFI

MPFI updating scheme has been presented by Benhamadou [3]. The main idea of this method is that the current basis inverse  can be computed from the previous inverse

can be computed from the previous inverse  using a simple outer product of two vectors and one matrix addition, as shown in the Equation (6):

using a simple outer product of two vectors and one matrix addition, as shown in the Equation (6):

(6)

(6)

The updating scheme of the inverse is shown in Equation (7).

(7)

(7)

The outer product of Equation (7) requires m2 multiplications and the addition of two matrices requires m2 additions. Hence, the complexity is .

.

3.5. PFI

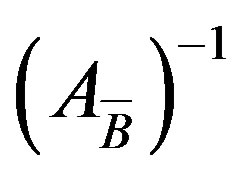

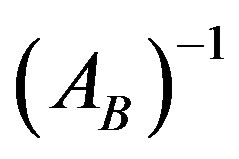

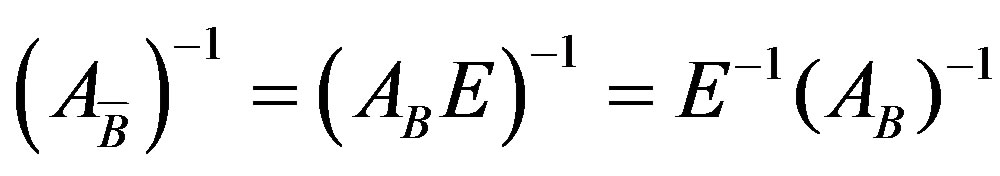

The PFI scheme, in order to update the new basis , uses information only about the entering and leaving variables along with the current basis

, uses information only about the entering and leaving variables along with the current basis . The new basis inverse can be updated at any iteration using the Equation (8).

. The new basis inverse can be updated at any iteration using the Equation (8).

(8)

(8)

where E−1 is the inverse of the eta-matrix and can be computed by the Equation (9):

(9)

(9)

If the current basis inverse is computed using regular multiplication, then the complexity of the PFI is .

.

4. Computational Results of Serial Implementations

Computational studies have been widely used, in order to examine the practical efficiency of an algorithm or even compare algorithms. The computational comparison of the aforementioned five updating schemes has been performed on a quad-processor Intel Core i7 3.4 GHz with 32 Gbyte of main memory running under Microsoft Windows 7 64-bit. The algorithms have been implemented using MATLAB Professional R2012a. MATLAB (MATrix LABoratory) is a powerful programming environment and is especially designed for matrix computations in general. All times in the following tables are measured in seconds.

The test set used in the computational study was randomly generated. Problem instances have the same number of constraints and variables. The largest problem tested has 5000 constraints and 5000 variables. All instances are dense. For each instance, we averaged times over 10 runs. A time limit of 20 hours was set that explains why there are no measurements for some updating methods on large instances. It should be noted that in MATLAB R2012a multithreading is enabled by default thus our implementations are automatically parallelized and executed using the available multicore CPU.

Table 2 presents the results from the execution of the above mentioned updating schemes. We have also included the execution time from MATLAB’s linprog built-in function, a function for solving linear programming problems. MATLAB’s linprog function includes two algorithms for large-scale and medium-scale optimization. The large-scale algorithm is based on Interior Point Solver [27], a primal-dual interior-point algorithm. LIPSOL used a Cholesky-infinity factorization that causes overhead during the factorization of dense matrices and as a result it cannot solve problems with more than 1500 variables and constraints. Due to this restriction, we have used in our comparison the medium-scale algorithm, which is a variation of the simplex method. Table 3 includes the execution time for the basis inverse of each updating scheme, while Table 2 presents the total execution time. The execution time of the basis inverse and the whole algorithm for each updating scheme is also graphically illustrated in Figures 1 and 2, respectively.

The MPFI updating scheme has the best performance. On the other hand, LU updating method has the worst performance. Another significant issue is the performance of Gaussian elimination, PFI, function inv and linprog of MATLAB which are close to each other and the results are not quite satisfactory.

5. Parallel Implementation of PFI and MPFI Updating Schemes on a CPU-GPU System

PFI and MPFI were the fastest updating schemes. In this section, we present the GPU-based implementations of these updating methods taking advantage of the power that modern GPUs offer. The parallel implementations of these updating methods are implemented on MATLAB and CUDA. The updating methods are built using both native MATLAB code and CUDA MEX files.

Both methods take as input the previous basis inverse , the pivot column hl, the index of the leaving variable (k) and the number of the constraints (m).

, the pivot column hl, the index of the leaving variable (k) and the number of the constraints (m).

5.1. GPU-Based MPFI

Let us assume that we have t gpu cores. Table 4 shows the steps that we used to compute the new basis inverse

with the MPFI scheme on the GPU.

with the MPFI scheme on the GPU.

5.2. GPU-Based PFI

Table 5 shows the steps that we used to compute the new basis inverse  with the PFI scheme on the GPU.

with the PFI scheme on the GPU.