A Class of Generalized Approximate Inverse Solvers for Unsymmetric Linear Systems of Irregular Structure Based on Adaptive Algorithmic Modelling for Solving Complex Computational Problems in Three Space Dimensions ()

Received 18 May 2016; accepted 9 July 2016; published 12 July 2016

1. Introduction

In recent years, extensive research work has been focused on the computation of exact and approximate inverse matrices for solving efficiently complex computational problems particularly on parallel computer systems [1] - [20] . In this article, a new class of Sparse Approximate Inverses matrices based on adaptive algorithmic modelling methods is presented. These adaptive algorithmic solution methods can be used for solving large sparse linear finite difference (FD) and finite element (FE) systems of irregular structures derived mainly from the discretization of parabolic and elliptic PDE’s in both two and three space dimensions [21] - [31] .

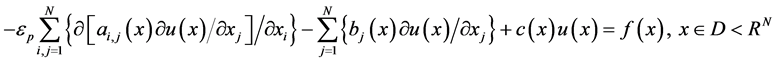

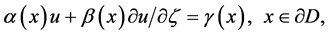

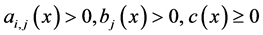

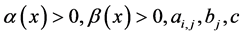

Let us consider a class of boundary value problems defined by the equation

(1.1)

(1.1)

subject to the general boundary conditions

(1.1a)

(1.1a)

where D is a closed bounded domain in RN, with N ≤ 3 and ∂D the boundary of D,  a predetermined singular perturbation parameter,

a predetermined singular perturbation parameter, ; and the coefficients

; and the coefficients  are continuous and differentiable functions in D,

are continuous and differentiable functions in D, ; f are sufficiently smooth functions on D and

; f are sufficiently smooth functions on D and  is the direction of the outward normal derivative.

is the direction of the outward normal derivative.

The discrete analogue of Equation (1.1) leads to the solution of the general linear system

, (1.2)

, (1.2)

where the coefficient matrix A is a large sparse real (n ´ n) matrix of irregular structure. The structure of A is shown in the following Figure 1.

For solving the system (1.2), there is a choice between direct and iterative, assuming that there are no barriers due to memory requirements for the former or excessive runtimes (e.g. time dependent problems) for the latter. Note that for generality purposes the coefficient matrix is assumed to be unsymmetric (case occurring in the discretization of flow equations that arise in certain Hydrology studies [32] and of irregular non-zero structure (case resulting from the triangulation of irregular or regular domains into irregular elements in pipeline networks [33] . Algorithmic solution methods for the linear systems (1.2) applicable to both two and three space dimensions can be applied [22] , where the unsymmetric coefficient matrix, in which all the off-centre terms are grouped into regular bands, can be factorized exactly to yield direct algorithmic procedures for the FD or FE solution.

It should be noted that in the case of very large sparse linear and nonlinear systems with coefficients of irregular structure, the memory requirements and the corresponding computational work are prohibitively high and the use of exact inverse solvers is usually not recommended. In such cases, preconditioned iterative techniques for solving numerically the FD or FE linear systems (1.2) can be used by deriving semi-direct solution methods following the principle [27] [34] that implicit procedures based on approximately decomposing discrete operators into easily invertible factors facilitating the solution of (1.2). Sparse factorization procedures yield efficient

![]()

Figure 1. The structure of coefficient matrix A.

procedures for the FE or FD solution by manipulating the problem of the fill-in terms, which occur during the factorization [22] [35] . Note that simple compact storage schemes for the considered data can be used and preconditioned algorithmic solvers do not require any searching operations. An important feature of the proposed adaptive algorithmic methods is the provision of a class of iterative methods for solving large sparse unsymmetric systems of irregular non-zero structure, with additional computational facilities, i.e. the choice of fill-in parameters, rejection parameters, entropy-adaptivity-uncertainty (EAU) parameters [36] , by which the best method for a given problem can be selected. The proposed methods have a universal scope of application for numerically solving of elliptic and parabolic boundary value problems by either FD or FE discretization methods in both two and three space dimensions with the only restriction being that the coefficient matrix should be diagonally dominant.

2. Approximate LU Decomposition and Approximate Inverse Methods

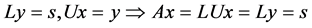

The approximate factorization techniques and approximate inverse methodologies have been widely used for solving a large class of linear and nonlinear systems resulting in complex computational problems [12] [29] [31] [37] - [49] . The LU factorization of a given matrix is characterized as a high level algebraic description of Gaussian elimination and by expressing the outcomes of matrix algorithms in the language of matrix factorizations facilitates generalization and certain connections between algorithms that may appear different at scalar level [47] . The solution of linear system can be computed by a two-step triangular solving process, i.e.

(2.1)

(2.1)

For solving the symmetric problem , a variant of the LU factorization in which A is decomposed into three-matrix product, i.e.

, a variant of the LU factorization in which A is decomposed into three-matrix product, i.e. , where D is diagonal and L, M are lower unit lower triangular. In this case the solution can be obtained in

, where D is diagonal and L, M are lower unit lower triangular. In this case the solution can be obtained in  flops by solving

flops by solving  (forward elimination),

(forward elimination),  and

and ![]() (by substitution). Note that if

(by substitution). Note that if ![]() then L = M and the computational work for the factorization is half of that required by Gaussian elimination. The factorization takes the form

then L = M and the computational work for the factorization is half of that required by Gaussian elimination. The factorization takes the form ![]() and can be used for solving symmetric problems, as well as the four-matrix product, i.e.

and can be used for solving symmetric problems, as well as the four-matrix product, i.e.![]() , where

, where ![]() is the transpose of T (Varga, 1962). In the case of symmetric positive definite systems the factorization

is the transpose of T (Varga, 1962). In the case of symmetric positive definite systems the factorization ![]() exists and is computationally stable. The factorization

exists and is computationally stable. The factorization ![]() is known as Cholesky factorization and by solving the triangular system

is known as Cholesky factorization and by solving the triangular system ![]() and

and![]() , then

, then![]() . Note that the Gaxpy Cholesky version requires

. Note that the Gaxpy Cholesky version requires ![]() flops, where Gaxpy is a BLAS level-2 routine defined algebraically as

flops, where Gaxpy is a BLAS level-2 routine defined algebraically as ![]() requiring

requiring ![]() operations.

operations.

In the general case, such as the case of three space dimensions and the finite element discretization, the coefficient matrix has an irregular structure of the nonzero elements, where the non-diagonal elements can be grouped in regular bands of width ![]() and

and ![]() (width parameters) in distances m and p (semi-bandwidths) respectively.

(width parameters) in distances m and p (semi-bandwidths) respectively.

The linear system (1.2) can be solved by direct (explicitly) or iterative (implicitly) methods depending on the availability of memory requirements. Several factorization/decomposition techniques can be used for facilitating the numerical solution of linear system (1.2), i.e. two, three, four term factorization schemes of the coefficient matrix A. Following an explicit solution of system (1.2) this system can equivalently written as

![]() , (2.2)

, (2.2)

where M is the inverse matrix of A, i.e.![]() . Since the computation of the exact inverse is a difficult computational problem particularly in the case of complex 3D problems, the approximate inverse matrix approach can be alternatively used.

. Since the computation of the exact inverse is a difficult computational problem particularly in the case of complex 3D problems, the approximate inverse matrix approach can be alternatively used.

Let us consider an approximate factorization of the coefficient matrix A,

![]() (2.3)

(2.3)

where ![]() and

and![]() , are lower and upper sparse triangular matrices of irregular structures of semi-bandwidths m and p retaining

, are lower and upper sparse triangular matrices of irregular structures of semi-bandwidths m and p retaining ![]() and

and ![]() fill-in terms respectively. The decomposition factors

fill-in terms respectively. The decomposition factors ![]() and

and ![]() are banded matrices with l1 and l2 the numbers of diagonals retained in semi-bandwidths m and p respectively (Figure 2 and Figure 3), of the following form.

are banded matrices with l1 and l2 the numbers of diagonals retained in semi-bandwidths m and p respectively (Figure 2 and Figure 3), of the following form.

The computation of the elements of the sparse decomposition factors has been presented in [23] [24] [27] .

![]()

Figure 2. The structure of lower decomposition factor![]() .

.

![]()

Figure 3. The structure of upper decomposition factor![]() .

.

The relationships of the elements of V matrix and the corresponding conventional (for ![]() and

and![]() ) is shown in the following Figure 4.

) is shown in the following Figure 4.

An analogue scheme can be obtained for matrix W, while the relationships of the elements of H matrix and the corresponding conventional (for ![]() and

and![]() ) is shown in the following Figure 5 (the same holds for the matrix F).

) is shown in the following Figure 5 (the same holds for the matrix F).

The (near) optimum values of fill-in parameters are mainly depended on the nature of the problem and structure of the coefficient matrix A [22] [47] - [49] .

3. Generalized Approximate Inverse Solvers for Unsymmetric Linear Systems of Irregular Structure

3.1. Introduction

An exact inverse algorithm based on adaptive algorithmic methodologies for solving linear unsymmetric systems of irregular structure arising in FD/FE discretization of boundary-value problems in three space dimensions has been recently presented [36] . This algorithm computes the elements of an exact inverse of a given unsymmetric matrix of irregular structure using an exact LU factorization [22] . The computational work of the EBAIM-1 algorithm is ![]() multiplications, while the memory requirements are (n ´ n) words. In the case of very large systems the memory requirements could be prohibitively high and the usage of approximate inverse iterative techniques is desirable.

multiplications, while the memory requirements are (n ´ n) words. In the case of very large systems the memory requirements could be prohibitively high and the usage of approximate inverse iterative techniques is desirable.

It should be also noted that in the case that only ![]() and

and ![]() fill-in terms are retained in semi-bandwidths m and p respectively, then a class of approximate inverse matrix algorithms for solving large sparse unsymmetric linear systems of irregular structure [50] arising in the FD/FE discretization of elliptic and

fill-in terms are retained in semi-bandwidths m and p respectively, then a class of approximate inverse matrix algorithms for solving large sparse unsymmetric linear systems of irregular structure [50] arising in the FD/FE discretization of elliptic and

![]()

Figure 4. The relationship of matrix V in its banded stored form and the corres- ponding one in Figure 1.

![]()

Figure 5. The relationship of matrix H in its stored form and the corres- ponding one in Figure 3.

parabolic boundary values, can be obtained. Such algorithms are described in the next sections.

A class of optimized approximate inverse variants can be obtained by considering a (near) optimized choice of the approximate inverse M depends on the selection of related parameters, i.e. fill-in parameters r1, r2, retention parameters δl1, δl2 and entropy-adaptivity-uncertainty (EAU) parameters [36] [51] . Note that the selection of retention parameter values as multiples of the corresponding semi-bandwidths of the original matrix leads to improved numerical results [22] . Then, the following sub-classes of approximate inverses, depending on the accuracy, storage and computational work requirements, can be derived as indicated in the following Figure 6.

where ![]() of sub-class I is a banded form of the exact inverse retaining

of sub-class I is a banded form of the exact inverse retaining ![]() elements along each row and column respectively, while its elements are equal to the corresponding elements of the exact inverse. The term

elements along each row and column respectively, while its elements are equal to the corresponding elements of the exact inverse. The term ![]() of sub-class II is a banded form of M, retaining only

of sub-class II is a banded form of M, retaining only ![]() elements along each row and column during the computational procedure of the approximate inverse and under certain hypotheses can be considered as a good approximation of the original inverse, while the entries of the approximate inverse in sub-class III have been retained after computing M*

elements along each row and column during the computational procedure of the approximate inverse and under certain hypotheses can be considered as a good approximation of the original inverse, while the entries of the approximate inverse in sub-class III have been retained after computing M* ![]() and are less accurate than the corresponding entries of

and are less accurate than the corresponding entries of![]() . Finally, in sub-class IV the elements of the approximate inverse can be computed.

. Finally, in sub-class IV the elements of the approximate inverse can be computed.

3.2. Approximate Inverse Algorithmic Methodologies

Algorithmic solution methods for the linear systems (1.2) applicable to both two and three space dimension can be applied [23] , where the unsymmetric coefficient matrix, in which all the off-centre terms are grouped into regular bands, can be factorized exactly to yield direct algorithmic procedures for the FD or FE solution. Alternatively, preconditioned iterative techniques for solving numerically the FD or FE linear systems (1.2) can be used by deriving semi-direct solution methods following the principle [28] that implicit procedures based on approximately decomposing discrete operators into easily invertible factors facilitating the solution of (1.2). Sparse factorization procedures yield efficient procedures for the FE or FD solution by manipulating the problem of the fill-in terms, which occur during the factorization. Note that simple compact storage schemes for the considered data can be used and preconditioned algorithmic solvers do not require any searching operations. An important feature of the proposed adaptive algorithmic methods is the provision of both direct and iterative methods for solving large sparse unsymmetric systems of irregular non-zero structure, with additional computational facilities, i.e. the choice of fill-in parameters, rejection parameters, entropy-adaptivity-uncertainty (EAU) parameters [36] [51] , by which the best method for a given problem can be selected. The proposed methods have a universal

![]()

Figure 6. Subclasses of approximate inverses.

scope of application for numerically solving of elliptic and parabolic boundary value problems by either FD or FE discretization methods in both two and three space dimensions with the only restriction being that the coefficient matrix be diagonally dominant.

Let us assume that![]() , a non-singular

, a non-singular ![]() matrix, is an approximate inverse of A, i.e.

matrix, is an approximate inverse of A, i.e. ![]() ,

,![]() . Note that if

. Note that if ![]() and

and ![]() non-zero elements have been retained in the corresponding decomposition factors, then

non-zero elements have been retained in the corresponding decomposition factors, then![]() , where M is the exact inverse of A. The elements of M can be determined by solving recursively the systems

, where M is the exact inverse of A. The elements of M can be determined by solving recursively the systems

![]() , (3.1)

, (3.1)

having main disadvantages, i.e. high storage requirements and computational work involved particularly in the case of solving very large unsymmetric linear systems. A class of approximate inverses ![]() can be obtained by retaining only

can be obtained by retaining only ![]() and

and ![]() diagonals in the lower and upper triangular parts of inverse respectively, the remaining elements being just not computed at all. Optimized forms of this algorithm are particularly effective for solving banded sparse FE systems of very large order, i.e.

diagonals in the lower and upper triangular parts of inverse respectively, the remaining elements being just not computed at all. Optimized forms of this algorithm are particularly effective for solving banded sparse FE systems of very large order, i.e. ![]() or in the case of narrow-banded sparse FE systems of very large order, i.e.

or in the case of narrow-banded sparse FE systems of very large order, i.e.![]() .

.

Let us consider now the approximate inverse of A with the form

![]() (3.2)

(3.2)

where ![]() are the fill-in parameters, i.e. the number of outermost off-diagonal entries retained in semi-band- widths m and p respectively.

are the fill-in parameters, i.e. the number of outermost off-diagonal entries retained in semi-band- widths m and p respectively.

Then, by post-multiplying Equation (3.2) by ![]() and pre-multiplying the same equation by

and pre-multiplying the same equation by![]() , we obtain

, we obtain

![]() (3.3)

(3.3)

where![]() . Note that in the 2D symmetric case, i.e.

. Note that in the 2D symmetric case, i.e.![]() ,

, ![]() , where r is the fill-in parameter, i.e. the number of outer-most off diagonal entries retained in semi-bandwidth of the tridiagonal factor Lr., by considering the equations in the analytical form for i-row with

, where r is the fill-in parameter, i.e. the number of outer-most off diagonal entries retained in semi-bandwidth of the tridiagonal factor Lr., by considering the equations in the analytical form for i-row with ![]() and the j-column with

and the j-column with ![]() respectively we can obtain

respectively we can obtain

![]() (3.4)

(3.4)

where ![]() is the Kronecker delta [21] [27] [52] .

is the Kronecker delta [21] [27] [52] .

The elements of the approximate inverse for ![]() can be determined successively as

can be determined successively as ![]() (i.e. elements of the n-th row of the inverse) and for

(i.e. elements of the n-th row of the inverse) and for ![]() we obtain

we obtain ![]() (i.e. the n-th column of the inverse). Proceeding in a similar manner we can explicitly determine for

(i.e. the n-th column of the inverse). Proceeding in a similar manner we can explicitly determine for ![]() and

and ![]() respectively the remaining elements

respectively the remaining elements![]() . In the following Figure 7 the form of an (8 × 8) approximate inverse matrix is indicatively demonstrated.

. In the following Figure 7 the form of an (8 × 8) approximate inverse matrix is indicatively demonstrated.

where ![]() is a (8 × 8) banded approximate inverse matrix with retention parameters

is a (8 × 8) banded approximate inverse matrix with retention parameters ![]() and

and![]() . Note that for simplicity reasons the case

. Note that for simplicity reasons the case ![]() is considered.

is considered.

3.3. Optimized Approximate Inverse Matrices and Storage Techniques

Let us consider the exact inverse M of the original coefficient matrix A in equation (2.1). Note that the computation of the inverse is indicated in the following characteristic diagram (Figure 8).

It should be noted that the diagonal elements (in bold) are firstly computed (starting from the last element of the inverse, i.e.![]() ) and then computing upwards/column-wise and from right to left/row-wise. Note also that

) and then computing upwards/column-wise and from right to left/row-wise. Note also that

![]()

Figure 7. The structure of an (8 × 8) banded approximate inverse.

![]()

Figure 8. Computing the elements of the approximate inverse M* of sub-class IV following the KS technique (K = 2)*. (*) Note that this computational technique will be referred as double-sweep (DS) technique.

the last diagonal element ![]() is computed and then all the elements of n-row (from right to left) and n-column (upwards). Then, only the diagonal element

is computed and then all the elements of n-row (from right to left) and n-column (upwards). Then, only the diagonal element ![]() is computed, and next the diagonal element

is computed, and next the diagonal element ![]() is computed and all the elements of (n-2)-row (from right to left) and the elements of (n-2)-column (upwards). Continuing in this way the rest elements of this approximate inverse are computed. It should be noted that the computational work of the resulting inverse M* of sub-class IV, is almost the half of that required by the approximate inverse of sub-class III. Note diagrammatically, as it is shown in Figure 8, only the underlined elements of the approximate inverse M* are computed.

is computed and all the elements of (n-2)-row (from right to left) and the elements of (n-2)-column (upwards). Continuing in this way the rest elements of this approximate inverse are computed. It should be noted that the computational work of the resulting inverse M* of sub-class IV, is almost the half of that required by the approximate inverse of sub-class III. Note diagrammatically, as it is shown in Figure 8, only the underlined elements of the approximate inverse M* are computed.

By generalized this storage saving computational technique, we consider the above DS technique can be replaced by k-sweep (KS) technique, i.e. after the computation of the last diagonal element![]() , all the elements of n-row (from right to left) and n-column (upwards). Then, only the diagonal elements

, all the elements of n-row (from right to left) and n-column (upwards). Then, only the diagonal elements ![]() are computed, and next the diagonal element

are computed, and next the diagonal element ![]() is computed and all the elements of (n-k-2)-row (from right to left) and the elements of (n-k-2)-column (upwards). Continuing in this way the rest elements of this approximate inverse are computed. It should be noted that the computational work of the resulting inverse M* of this sub-class by using the KS-storage technique is considerably smaller than that required by the approximate inverse resulting from the application of the DS storage technique. In the case of k = 2 the KS-storage technique reduces to the example shown in Figure 4.

is computed and all the elements of (n-k-2)-row (from right to left) and the elements of (n-k-2)-column (upwards). Continuing in this way the rest elements of this approximate inverse are computed. It should be noted that the computational work of the resulting inverse M* of this sub-class by using the KS-storage technique is considerably smaller than that required by the approximate inverse resulting from the application of the DS storage technique. In the case of k = 2 the KS-storage technique reduces to the example shown in Figure 4.

An optimized explicit banded approximate inverse by minimizing the memory requirements of EBAIM-1 algorithm

In order to minimize the memory requirements of EBAIM-1 algorithm, which in particular in the case of very large matrices of irregular structure can be prohibitively high, we consider the inverse M of equation (5.4) revolving its elements by 180˚ about the anti-diagonal removing the diagonal and the (δl-1) super diagonals in the first δl columns, while the rest δl sub-diagonals in the rest δl columns, then results the following form of the inverse (Figure 9).

4. The Optimized Approximate Inverse Algorithm

The application of this storage scheme on the approximate inverse leads to the following optimized approximate inverse algorithm. Note that the computation of the approximate inverse algorithm pre-assumes the approximate factorization of the coefficient matrix A, i.e.![]() , where

, where ![]() and

and ![]() are the lower and upper

are the lower and upper

![]()

Figure 9. Transformed forms of optimized approximate inverse M* (in banded storage).

sparse triangular decomposition factors [21] [23] - [25] [49] .

Algorithm OBAIM-1 (a, b, c, n, F, H, g, Γ, Ζ, ω, β, r1, r2, m, p, l1, l2, δl, M*)

Purpose: This algorithm computes the elements of the approximate inverse of a given real (n ´ n) matrix of irregular structure

Input: diagonal elements a of matrix A; superdiagonal elements b, subdiagonal elements c, n order of A; submatrices F, H, of upper triadiagonal decomposition factor U, superdiagonal elements g of L; submatrices Γ, Z, of lower tridiagonal matrix L; diagonal elements ω of L; subdiagonal elements β of L; fill-in parameters r1, r2; semi-bandwidths m, p; l1 and l2 numbers of diagonals retained in semi-bandwidths m and p respectively, ![]() and

and ![]() are the numbers of diagonal retained in approximate inverse M*/for simplicity reasons

are the numbers of diagonal retained in approximate inverse M*/for simplicity reasons ![]() is chosen/

is chosen/

Output: elements ![]() of the approximate inverse

of the approximate inverse ![]()

Computational Procedure:

step 1: let![]() ;

;![]() ;

;![]() ;

;![]() ;

;![]() ;

;![]() ;

; ![]() ;

;![]() ;

;![]() ;

; ![]()

step 2: for ![]()

step 3: for ![]()

step 4: if ![]() then

then

step 5: if ![]() then

then

step 6: if ![]() then

then

step 7: ![]()

step 8: else

step 9: ![]()

step 10: ![]()

step 11: else

![]()

step 12: ![]()

Step 13: else

if ![]() and

and ![]() then

then

step 14: if ![]() then

then

step 15: ![]()

step 16: ![]()

step 17: ![]()

step 18: ![]()

step 19: else

if ![]() and

and ![]() then

then

step 20: if ![]() then

then

step 21: ![]()

step 22: ![]()

step 23: ![]()

step 24: ![]()

step 25: ![]()

step 26: ![]()

step 27: else ![]()

step 28: ![]()

step 29: ![]()

step 30: ![]()

step 31: ![]()

step 32: ![]()

step 33: else

if ![]() then

then

step 34: if ![]() then

then

step 35: ![]()

step 36: ![]()

step 37: ![]()

step 38: ![]()

step 39: ![]()

step 40: ![]()

step 41: else ![]()

step 42: ![]()

step 43: ![]()

step 44: ![]()

step 45: ![]()

step 46: ![]()

step 47: ![]()

step 48: ![]()

step 49: ![]()

step 50: ![]()

step 51: else ![]()

step 52: ![]()

step 53: ![]()

step 54: ![]()

step 55: ![]()

step 56: ![]()

step 57: ![]()

step 58: ![]()

step 59: ![]()

step 60: ![]()

step 61: for ![]()

step 62: ![]()

step 63: form the approximate inverse matrix ![]()

The subroutine mw (n, δl, s, q, x, y) performs the transformation in the indexes of the explicit approximate inverse matrix from its banded form to the optimized form. This routine has the following form:

Subroutine mw (n, δl, s, q, x, y)

If ![]()

Then

![]()

![]()

else

![]()

![]()

The computational work of the optimized OBAIM-1 algorithm is ![]() multiplica-

multiplica-

tions, while the memory requirements have been reduced down to ![]() words. It should be also noted that a class of approximate inverse matrix can be considered containing several sub-classes of approximate inverses according to memory requirements, computational work, accuracy, as indicated in the diagrammatic schemes (Figure 3 and Figure 7).

words. It should be also noted that a class of approximate inverse matrix can be considered containing several sub-classes of approximate inverses according to memory requirements, computational work, accuracy, as indicated in the diagrammatic schemes (Figure 3 and Figure 7).

5. Explicit Adaptive Iterative Methods

A class of Adaptive Iterative Schemes for solving large sparse linear systems includes the following adaptive preconditioned iterative methods:

![]() , (Explicit preconditioned simultaneous displacement) (5.1)

, (Explicit preconditioned simultaneous displacement) (5.1)

![]() , (Explicit Preconditioned first order Richardson) (5.2)

, (Explicit Preconditioned first order Richardson) (5.2)

![]() (Explicit Preconditioned second order Richardson) (5.3)

(Explicit Preconditioned second order Richardson) (5.3)

![]() (Explicit Preconditioned Chebyshev) (5.4)

(Explicit Preconditioned Chebyshev) (5.4)

where![]() , α and β are predetermined acceleration parameters,

, α and β are predetermined acceleration parameters, ![]() and

and ![]() are sequences of preconditioned acceleration parameters and

are sequences of preconditioned acceleration parameters and![]() , i ≥ 0.

, i ≥ 0.

5.1. The Explicit Preconditioned Iterative Method

During the last decades extensive research work has been focused in the preconditioned approach and preconditioned iterative methods for solving large linear and nonlinear problems in sequential and parallel environments [5] [8] [11] [12] [14] [26] [29] [30] [38] [39] [41] [47] [53] - [58] . A predominant role in the usage of the preconditioned iterative schemes possess the explicit preconditioned Conjugate Gradient (EPCG) method and its variants using the sparse approximate inverse M* due to its superior convergence rate for solving very large complex computational problems [47] . A characteristic explicit solver of this sub-class is the Explicit Preconditioned Generalized Conjugate Gradient (EPGCG) method [58] . This basic EPCG method can be expressed in the following compact form:

Algorithm EPCG-1 (A, n, s, u0, u, r)

Purpose: This algorithm computes the solution vector of the linear system ![]() by using the explicit preconditioned generalized Conjugate Gradient method.

by using the explicit preconditioned generalized Conjugate Gradient method.

Input: A given matrix, n order of A, s known rhs vector, u0 initial guess

Output: solution vector u, residual r

Computational Procedure:

Step 1: let ![]() be an arbitrary initial approximation to the solution u

be an arbitrary initial approximation to the solution u

Step 2: set![]() , form

, form ![]() and set

and set ![]()

Step 3: for ![]() (until convergence)

(until convergence)

compute ![]()

//compute scalar quantities ![]() as follows://

as follows://

Step 4: form ![]() and set

and set ![]() (only for

(only for![]() )

)

Step 5: evaluate ![]() and compute

and compute ![]()

Step 6: compute ![]() and form

and form ![]()

Step 7: compute ![]() and evaluate

and evaluate ![]()

Step 8: compute ![]()

Step 9: if there is no convergence go to step 3,

Step 10: else

print the approximate solution ![]() and corresponding residual

and corresponding residual![]() .

.

Note that a good approximant M* leads obviously to an improved EPCG method. The effectiveness of the explicit preconditioned iterative methods for solving certain classes of elliptic boundary value problems on regular domains is related to the fact that the exact inverse of A (although is full) exhibits a similar fuzzy structure around the principal diagonal and m-diagonals [22] .

5.2. The Symmetric Case

In the case of symmetric coefficient matrix by using the four-matrix decomposition [27] [41] the corresponding inverse subclasses can be enlarged as follows in Figure 10.

where 1) the elements of exact inverse of subclass I are obtained after the exact decomposition ![]() of M+, with excessive memory and computational requirements, 2) the elements of the inverse

of M+, with excessive memory and computational requirements, 2) the elements of the inverse ![]() of subclass II have been computed after the application of the exact inverse algorithm

of subclass II have been computed after the application of the exact inverse algorithm![]() , while only

, while only ![]() and

and ![]() diagonals have been retained, 3) the elements of inverse MS2 of subclass III have been computed from the approximate inverse, while the exact decomposition

diagonals have been retained, 3) the elements of inverse MS2 of subclass III have been computed from the approximate inverse, while the exact decomposition ![]() has been applied, 4) the elements of the inverse of subclass IV have been computed from the approximate factorization and the banded approximate inverse algorithm has been used for computing the elements of the inverse

has been applied, 4) the elements of the inverse of subclass IV have been computed from the approximate factorization and the banded approximate inverse algorithm has been used for computing the elements of the inverse![]() , 5) the elements of inverse of subclass V have been retained only on the diagonal elements of the inverse, i.e.

, 5) the elements of inverse of subclass V have been retained only on the diagonal elements of the inverse, i.e.![]() , that is

, that is![]() , leading to a fast algorithm for computing of approximate inverse.

, leading to a fast algorithm for computing of approximate inverse.

Note that the largest elements of inverse matrix are mainly gathered around the main diagonal in distances ![]() and

and ![]() in a recurring wave like pattern (Lipitakis, 1984), where m and p are respectively the semi-bandwidths of the coefficient matrix A, and

in a recurring wave like pattern (Lipitakis, 1984), where m and p are respectively the semi-bandwidths of the coefficient matrix A, and ![]() and

and![]() . Based on this observation the selection of retention parameters

. Based on this observation the selection of retention parameters![]() ,

, ![]() is recommended to be multiples of values of m and p, leading to preconditioners with better performance [22] .

is recommended to be multiples of values of m and p, leading to preconditioners with better performance [22] .

An indication of the sparsity and memory requirements of optimized versions of approximate inverses is given in the following Table 1.

It should be noted that in the case that δl2 = 0 the approximate inverse algorithm is reduced to an algorithm for solving FE systems in two space dimensions of semi-bandwidth m, while if ![]() then the algorithm reduces to one for solving FD linear systems in three space dimensions of semi-bandwidths m and p [23] . In the case of δl1 = 1 and δl2 = 0, then the approximate inverse reduces to one for solving linear FD systems in two space dimensions of semi-bandwidth m [25] , while if

then the algorithm reduces to one for solving FD linear systems in three space dimensions of semi-bandwidths m and p [23] . In the case of δl1 = 1 and δl2 = 0, then the approximate inverse reduces to one for solving linear FD systems in two space dimensions of semi-bandwidth m [25] , while if ![]() then the approximate inverse reduces to the one for solving tridiagonal linear systems (Thomas algorithm) [59] .

then the approximate inverse reduces to the one for solving tridiagonal linear systems (Thomas algorithm) [59] .

![]()

Figure 10. Subclasses of inverses for the symmetric case.

![]()

Table 1. Memory and sparsity requirements of the approximate inverse matrix (n = 8000, m = 21, p = 401), where δl denotes here the retention parameters![]() .

.

6. Numerical Experiments

In this section a nonlinear case study by using approximate inverse preconditioned methods are presented.

The nonlinear case

Let us consider the nonlinear elliptic PDE

![]() (6.1)

(6.1)

where ![]() subject to the Dirichlet boundary conditions

subject to the Dirichlet boundary conditions

![]() (6.1a)

(6.1a)

where ∂R is the exterior boundary of the domain R.

Equation (6.1) arises in magnetohydrodynamics (diffusion-reaction, vortex problems, electric space charge considerations) with its existence and uniqueness assured by the classical theory [32] [33] . The solution of Equation (6.1) can be obtained by the linearized Picard and quasi-linearized Newton iterative schemes as outer iterative schemes of the form:

![]() and (6.2)

and (6.2)

![]() (6.3)

(6.3)

where δ denotes here the usual central difference operator.

The resulting large sparse nonlinear system is of the form

![]() , (6.4)

, (6.4)

where Ω is a block tridiagonal matrix [23] .

Then, composite iterative schemes can be used, where Picard/Newton iterations are the outer iteration, while the inner iteration can be carried out either directly by an exact algorithm or by an approximate algorithm in conjunction with an explicit iterative method (6.3). The latter method can be written as

![]() , (6.5)

, (6.5)

where the superscript l denotes the outer iteration index, the subscript I denotes the inner iteration and![]() .

.

The outer iteration was terminated when the following criterion was satisfied

![]() , (6.6)

, (6.6)

while the termination criterion of the inner iteration was

![]() (6.7)

(6.7)

where ε1 was taken initially as ![]() and then was decreased at each iterative step by 1/10 to 10−6, where it remained constant during the next iterative steps. Numerical experiments were carried out for nonlinear problem (6.4) with

and then was decreased at each iterative step by 1/10 to 10−6, where it remained constant during the next iterative steps. Numerical experiments were carried out for nonlinear problem (6.4) with ![]() and the initial guesses when u was on the boundary 0.0, 5.0, 10.0 were chosen as 0.0, 4.0, 6.0 respectively. The performance of the composite schemes Newton-Explicit Preconditioned Simultaneous Displacement (EPSD) and Picard/Newton-EPCG are given in the following Table 2 and Table 3.

and the initial guesses when u was on the boundary 0.0, 5.0, 10.0 were chosen as 0.0, 4.0, 6.0 respectively. The performance of the composite schemes Newton-Explicit Preconditioned Simultaneous Displacement (EPSD) and Picard/Newton-EPCG are given in the following Table 2 and Table 3.

7. Conclusions

A class of exact and approximate inverse adaptive algorithmic procedures has been presented for solving numerically initial/boundary value problems. Several subclasses of optimized variants of these algorithms have been also proposed for solving economically highly nonlinear systems of irregular structure. It should be stated that the proposed explicit preconditioned iterative methods and their related variants can be efficiently used for solving large sparse nonlinear systems of irregular structure of complex computational problems and for the numerical solution of highly nonlinear initial/ boundary value problems in two and three space dimensions.

![]()

Table 2. The performance of composite iterative schemes Picard/Newton for the nonlinear system (6.4) using the EPSD method (r = 4), with n = 361, m = 20, for several values of acceleration parameter α and retention parameters δl.

![]()

Table 3. The performance of composite iterative schemes Picard/Newton for the nonlinear system (6.4) using the EPCG method, with n = 361, m = 20, for several values of retention parameters δl and fill-in parameters r.

Future research work includes the parallelization of the proposed class of exact and approximate inverse matrices of irregular structure. These adaptive exact and approximate inverse algorithmic techniques can be used for solving efficiently highly nonlinear large sparse systems arising in the numerical solution of complex computational problems in parallel computer environments.